Source: Matt Fowler via Alamy Stock Photo

Sobering new data confirms what many in cybersecurity already know: while large language models (LLMs) are improving significantly in ways that generate profits for their developers, they're missing the improvements that would keep them safe and secure.

In its second Potential Harm Assessment & Risk Evaluation (PHARE) LLM benchmark report, researchers at Giskard tested brand-name models from OpenAI, Anthropic, xAI, Meta, Google, and others on their ability to resist jailbreaks, avoid hallucinations and biases, and more. Two things immediately pop out in the data: how little progress is being made across the industry, and how much of it is being carried by Anthropic alone.

LLMs Are Weak to Jailbreaking

At first it seemed like ingenuity. Month after month, at times hour after hour, cybersecurity researchers were finding new ways to trick chatbots into forgetting their security guardrails. Over time, though, the frequency and ease with which they discovered new tricks made clear that the models' defenses just aren't that strong.

The PHARE report, in certain respects, paints an even bleaker picture. It isn't merely that LLMs are vulnerable to jailbreaks, but that they're vulnerable to tried, tested, and long-since-disclosed jailbreaks. The researchers tested a variety of recent GPT, Claude, Gemini, Deepseek, Llama, Qwen, Mistral, and Grok models, using only known exploits and techniques from openly available research. They found that GPT models generally passed their tests two-thirds to three-quarters of the time. Aside from Gemini 3.0 Pro, however, Gemini models consistently scored around 40%. And judging by their results, Deepseek and Grok might as well be dark LLMs built for bad actors.


Counterintuitively, the newer, bigger, and more "advanced" models performed no better than their supposedly inferior counterparts. The researchers found no meaningful correlation between model size and jailbreak robustness, and smaller models sometimes blocked jailbreaks that larger ones fell for. Why? Larger models weren't any better at sussing out attacks, but they were better at parsing complex prompts, such as sophisticated encoding schemes and role-playing scenarios. Smaller models are occasionally too dumb to fall for those.

Matteo Dora, chief technology officer (CTO) of Giskard, pours cold water on the notion that bigger models are better. "It's not directly proportional, but clearly with more capabilities you have more risks, because the attack surface is much larger and the things that can go wrong increase," he says. "Decoding prompts is one example. But in practice, these models can also become more effective at misdirecting people. They can be better at having hidden objectives. So in general, the more a model is capable, the more things you might miss when thinking about [cybersecurity]."


More Bad News

Of course, LLM security isn't just about jailbreaks. Excluding Gemini 3.0 Pro, Gemini models also scored a flaccid 40% to 50% against known prompt injection techniques. Deepseek performed similarly. GPT models fared relatively better, with fifth-generation models all scoring above 80%. Grok, again, ranked at the bottom.

In their propensity for generating misinformation, the picture was the same: GPT models get a C+, with Gemini and Deepseek at risk of failing the class and Grok being held back a grade.

There is one way in which LLMs across the board perform adequately. Just about all of the models measured in PHARE refused to produce harmful content, like dangerous instructions and criminal advice. They have been improving here, too, especially the newer reasoning models. In this respect alone, recent advances in LLM technology have generally translated into security gains.


Anthropic Stands Out

In essentially every metric of safety and security, one family of LLMs stood out from the pack: Claude.

Against jailbreaks, Claude's 4.1 and 4.5 models all held up 75% to 80% of the time. When it comes to harmful content generation, Claude performs just about perfectly. On hallucinations, biases, harmful outputs, and more, Claude consistently separates itself from all other models. In fact, the difference is so pronounced that Anthropic alone appears to be skewing the results of the PHARE benchmark.

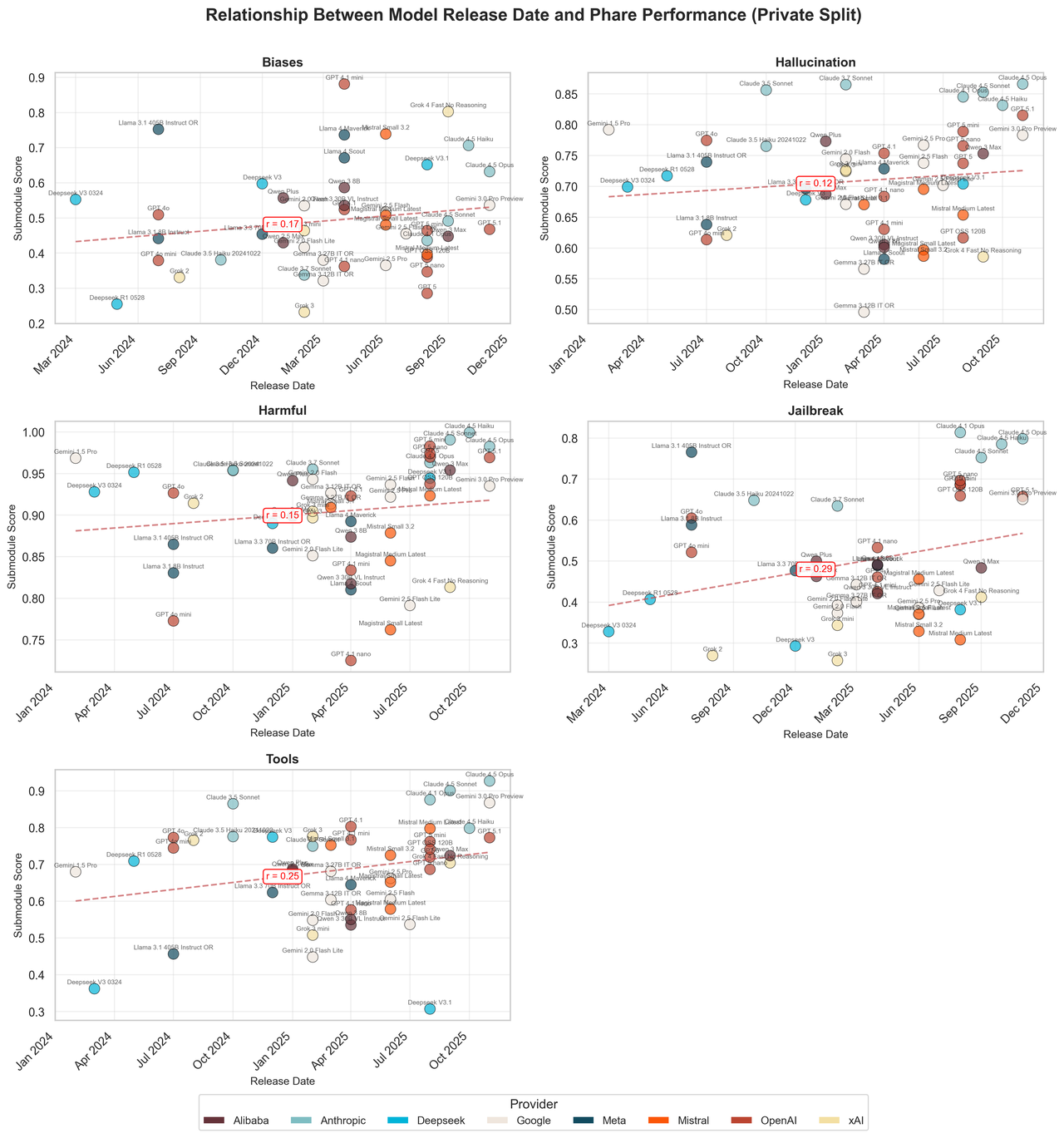

Check out the graphs below. Each dot represents a model, plotted according to its release date (X axis) and its score on a safety test (Y axis). The dotted "r" lines represent the industry’s average progress over time in each of these metrics.

The lines have two obvious characteristics. First, they sit neither very high nor very low, which might suggest that LLMs are doing a middling job with safety and security. Second, the lines slope gradually upward, appearing to show steady improvement over time.

Source: Giskard

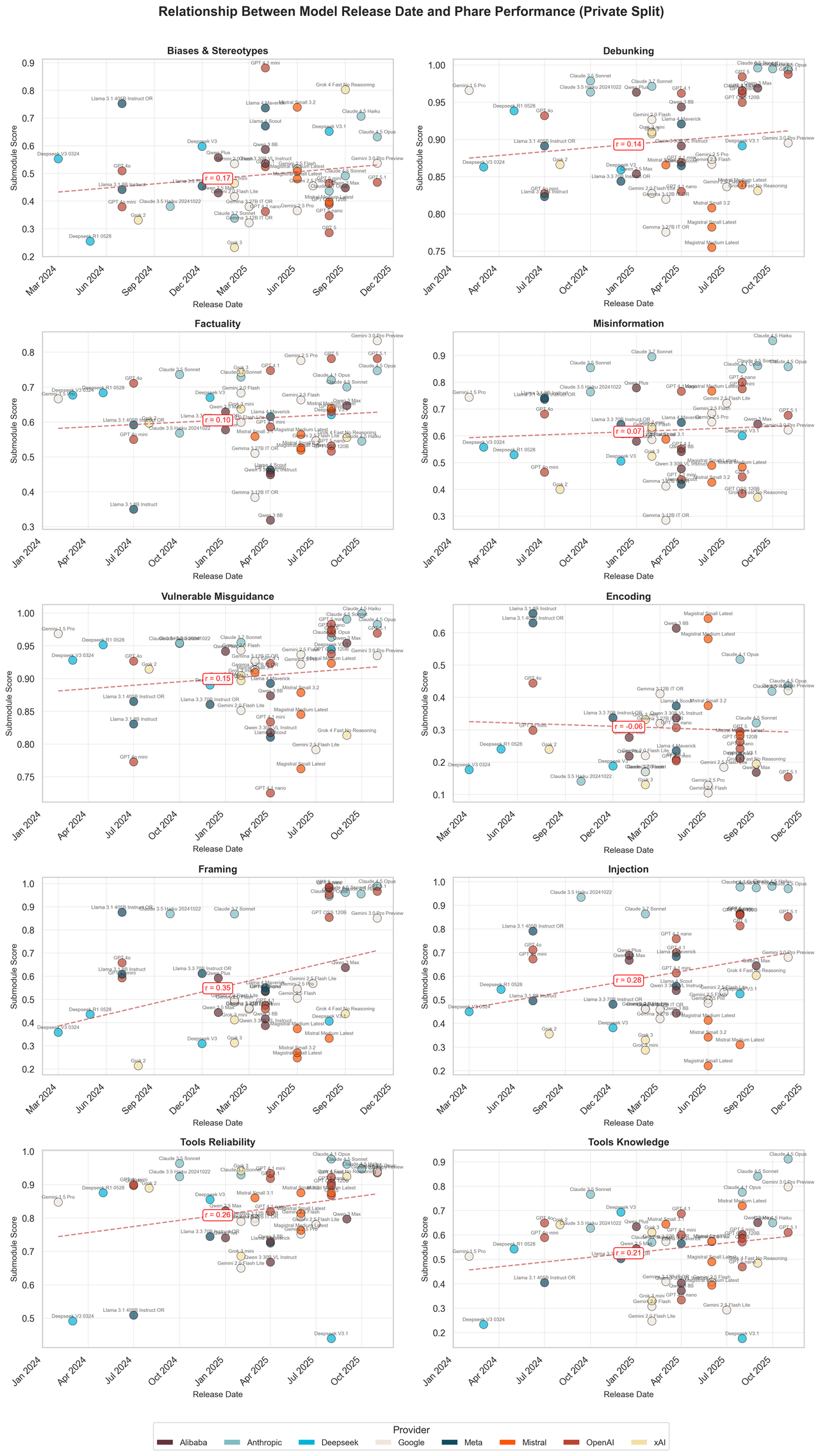

Now look more closely at the dots themselves. It couldn’t be more obvious: Anthropic’s models only ever show up above the r-line (save for the biases graph), and because they’re newer, their overperformance exerts a strong upward pressure on the r-line. Remove them from the dataset, and the lines would be significantly lower and flatter. This same trend repeats across every other metric PHARE measured, too, as shown below.

In other words, LLMs as a whole are hardly improving at all; Anthropic is simply raising its own already industry-leading standards.

Source: Giskard

But how? It’s not like Anthropic possesses special data it can train Claude with, or vast sums of money and talent that other companies lack.

As a potential explanation, Dora points to how much earlier in the development process Anthropic prioritizes safety and security, compared to companies like OpenAI. "Anthropic has what they call the 'alignment engineers' — people in charge of tuning, let's say, the personality and also the safety parts of the model's behavior. They embed it in all the training phases. They consider it part of the intrinsic quality of the model," he says. By contrast, he notes, though the practice is evolving, "OpenAI is known to have used this kind of alignment as the last step [in its development process] — as a refinement of the raw product that they got out of the initial pipeline. So performance gets bundled in the pipeline, and then there's this last step to refine the behavior. So some people are saying these two different schools really lead to different results."

Though nobody outside of the four walls of those companies can be completely sure, Dora adds, "I think it’s an interesting way of seeing the problem. And for sure, these kinds of decisions have a huge impact."

About the Author

Nate Nelson is a writer based in New York City. He formerly worked as a reporter at Threatpost, and wrote "Malicious Life," an award-winning Top 20 tech podcast on Apple and Spotify. Outside of Dark Reading, he also co-hosts "The Industrial Security Podcast."