Three years ago, I announced a new service: Scraper Snitch attempts to identify and snitch on HTTP fediverse scrapers.

The system watches the logs of my Mastodon instance (along with some carefully placed honeypots) and generates behavioural scores.

If a traffic source breaches the scoring threshold for long enough, a receipts file is generated and my bot account will publish a followers-only toot to warn other instance admins of the bot.
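One way to picture the "breaches the scoring threshold for long enough" rule is the small sketch below. It is not Scraper Snitch's code. The `Window` record, the fixed-length windows and the `threshold`, `min_consecutive` and `window_secs` parameters are all hypothetical, chosen only to show one reading of the rule: a run of back-to-back windows at or above the threshold.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Window:
    """One fixed-length scoring window for a traffic source (hypothetical shape)."""
    source: str    # e.g. an IP address or subnet
    start: int     # window start time, epoch seconds
    score: float   # behavioural score accumulated during the window


def sustained_breaches(windows, threshold, min_consecutive, window_secs):
    """Return the sources whose score met `threshold` in at least
    `min_consecutive` back-to-back windows of `window_secs` seconds."""
    by_source = defaultdict(list)
    for w in windows:
        by_source[w.source].append(w)

    flagged = set()
    for source, source_windows in by_source.items():
        run, prev_start = 0, None
        for w in sorted(source_windows, key=lambda x: x.start):
            # A gap in the data (a missing window) breaks the run
            contiguous = prev_start is not None and w.start - prev_start == window_secs
            if w.score >= threshold:
                run = run + 1 if contiguous else 1
            else:
                run = 0
            prev_start = w.start
            if run >= min_consecutive:
                # Roughly the point at which a receipts file would be written
                flagged.add(source)
                break
    return flagged
```

Requiring a sustained run rather than a single spike is one way to trade a little detection latency for fewer false positives.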

Over the last few months, though, I’ve started thinking that the time may have come to retire it.

However, I’ve struggled to come to a firm decision, so I felt that it might be helpful to lay my thinking out in a more structured manner.

Why?

I feel that the service is no longer providing the benefit that it once did and, as detecting bots has become more difficult, the chances of advertising false positives have probably grown.

Detections and False Positives

So far, the system has avoided generating too many false positives but, because of changes in scraper behaviour, it is also generating fewer true positives.

Although I could adjust alerting thresholds, I don’t currently believe that it’s possible to do so in a way that would also allow instance admins to maintain the necessary level of confidence in the bot’s output.

I’ve always expected that admins would check receipts files themselves before enacting a block, but I don’t think that it’d be reasonable to expect them to sift through high volumes of receipts if noise levels were to substantially increase.

Note: This is solvable, but I don’t currently have the time or energy available to do so (and, as I haven’t for a while, it seems unlikely that I’ll find it in the near future).

Scraper Behaviour

The much harder problem to solve is a change in the overall behaviour of scrapers.

When I first launched the service, ChatGPT had only just been released and the world had yet to learn properly how OpenAI had collected the data used to train its model[1]. Just as importantly, the various ChatGPT-alikes that pepper today’s market hadn’t yet spawned out into the world.

Soon enough, though, an army of AI crawlers began to behave quite unethically, including changing their User-Agent if it was blocked and bypassing IP blocks by crawling from undeclared IPs[2].

I could go on, at length, about genAI (and particularly about the ethics of overloading services before lecturing site admins for "not understanding the potential of AI") but the key point here is that the average behaviour of scrapers has changed dramatically.

Scraper Snitch is designed to catch scrapers that consistently originate from the same IP (or at least the same subnet): after all, there’s very little benefit in blocking an IP if it’s never used for scraping again.
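To illustrate why the subnet matters, here is a hypothetical Python sketch that counts requests per network rather than per individual address. The /24 (IPv4) and /64 (IPv6) prefix lengths are assumptions made for the example, not values taken from Scraper Snitch.

```python
import ipaddress
from collections import Counter


def subnet_key(ip, v4_prefix=24, v6_prefix=64):
    """Collapse an address to its enclosing network (prefix lengths are assumptions)."""
    addr = ipaddress.ip_address(ip)
    prefix = v4_prefix if addr.version == 4 else v6_prefix
    # strict=False lets us pass a host address and get the containing network back
    return ipaddress.ip_network(f"{addr}/{prefix}", strict=False)


def requests_per_subnet(ips):
    """Count requests per subnet rather than per individual address."""
    return Counter(subnet_key(ip) for ip in ips)
```

A scraper that rotates addresses within a single range still accumulates under one key; one that hops between unrelated providers doesn't, which is exactly the behaviour that defeats IP-centric blocking.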

In fairness to AI, IP-centric blocking has long suffered from this problem, but it is a problem that AI crawlers have actively amplified in recent years.

Happily, though, there are already solutions out there.

As the threat landscape has evolved, the world has moved from "detect and block" to "detect and poison":

- Iocaine detects crawlers and traps them in an endless maze of garbage

- Nightshade poisons images

- Cloudflare dumps AI crawlers into a labyrinth

- Anubis attempts to raise the computational cost of access

- The Poison Fountain provides a source of poisoned content (IMO it’s better to proxy to it than to link/redirect)

Although most of these efforts predate it, research by Anthropic has found that it takes only a small number of documents to start poisoning training data. This significantly alters the value proposition of the "fuck you" crawling strategy currently being employed by AI proponents.

What all of this means is that it doesn’t really make sense for me to expend energy improving Scraper Snitch’s detection when much more effective defences are now available to help deal with the scraper scourge of today.

Is It Still Worth The Effort?

There is an additional question here.

In a world where AI spiders run rampant, do we still need to care about trying to catch random fedi-search projects?

The ethical issues with those projects haven’t really changed, but in a world where malicious agents can trivially ask Chat-Gippity to help them locate targets for harassment campaigns, is it worth the administrative effort of detecting and blocking well-intentioned but ill-thought-out projects?

The only answer that I have to this is that I’m probably not the one who should be asked.

Technical Hurdles

This is a pretty minor concern, but it seems worth noting for the sake of completeness.

As a reminder, Scraper Snitch is underpinned by a time series database (TSDB):

```
|--------------------|
|        Logs        |
|--------------------|
        1.|
          |
          V
|--------------------|
|     Log Agent      |
| (Request Scoring)  |
|--------------------|
        2.|
          |
          V
|--------------------|
|    Time Series     |
|      Database      |
|--------------------|
      ^      4.|
      |        |
      |        |
     SQL       |
    3.|        V
|--------------------|
|    Analysis Bot    |
|    Gen. Report     |
|--------------------|
    5.|      6.|
      |        |
      V        |
 |----------|  |
 | Receipts |  |
 |----------|  |
               |
               V
|--------------------|
|      Mastodon      |
|--------------------|
```

In the three years since I launched Scraper Snitch, the fediverse has grown considerably (which is great[3]).

Although largely driven by a recent spike, there are currently almost double the number of fedi servers compared to January 2023:

The number of servers has fluctuated over time, but the number of monthly posts has continued to grow pretty consistently:

Although my instance (obviously) doesn’t receive all of those, this growth means that my instance logs a lot more lines than it used to. About the same proportion of those logs get sent into the TSDB, so I’ve ended up with a lot more log lines going into the database.

That doesn’t currently affect Scraper Snitch’s operation much (it doesn’t matter if its queries run slowly, as long as they complete), but it does make supervision of the system much harder.

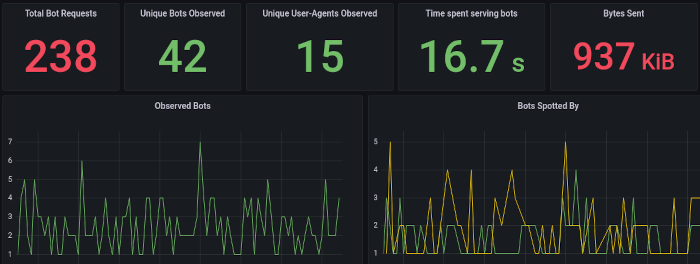

When I announced Scraper Snitch, I talked about some Grafana dashboards that I’d built in order to help monitor and troubleshoot it:

Some of those dashboards no longer work: the underlying dataset is too huge and Grafana’s queries time out.

Eventually, the dataset might even grow to the point that Scraper Snitch’s queries start to fail.

There are ways in which this can be addressed but, realistically, restoring this functionality means moving from the existing database to a newer, faster stack.

System Performance Over Time

In order to chart detections over time I wrote a script to call Mastodon’s API and fetch toots, filtering out any that weren’t a bot announcement before generating some simple line protocol to be written into InfluxDB:

```shell
curl -s \
  -D headers.txt \
  -H "Accept: application/json" \
  -H "Authorization: Bearer $MASTODON_TOKEN" \
  "https://mastodon.bentasker.co.uk/api/v1/accounts/$MASTO_ID/statuses" | jq -r '
  .[] |
  select(.content | contains("Suspected Scraper Bot Identified")) |
  .created_at |
  split(".")[0] |                  # strip fractional seconds
  strptime("%Y-%m-%dT%H:%M:%S") |
  mktime |                         # treat the parsed time as UTC, giving epoch seconds
  "snitchbot_toots count=1 \(.)000000000"
'
```

Note: this is a somewhat simplified snippet; the actual script has logic to handle API pagination.
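For reference, Mastodon paginates list endpoints through an HTTP `Link` header, which `-D headers.txt` would capture. A minimal Python sketch of following `rel="next"` until the pages run out might look like this. It illustrates the general approach rather than the script used here, and `next_page_url` and `fetch_all_statuses` are hypothetical names.

```python
import json
import re
import urllib.request

# Matches entries like: <https://host/path?max_id=123>; rel="next"
LINK_RE = re.compile(r'<([^>]+)>\s*;\s*rel="([^"]+)"')


def next_page_url(link_header):
    """Return the rel="next" URL from an HTTP Link header, or None."""
    if not link_header:
        return None
    for url, rel in LINK_RE.findall(link_header):
        if rel == "next":
            return url
    return None


def fetch_all_statuses(base_url, account_id, token):
    """Yield every status on an account, following Link: rel="next" page by page."""
    url = f"{base_url}/api/v1/accounts/{account_id}/statuses"
    while url:
        req = urllib.request.Request(url, headers={
            "Accept": "application/json",
            "Authorization": f"Bearer {token}",
        })
        with urllib.request.urlopen(req) as resp:
            statuses = json.load(resp)
            link = resp.headers.get("Link")
        if not statuses:
            break
        yield from statuses
        url = next_page_url(link)
```

Each yielded status could then be filtered and converted to line protocol in the same way as the jq pipeline.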

With the data in InfluxDB, it was possible to query hits over time:

```flux
from(bucket: "testing_db")
  |> range(start: 2023-01-01T00:00:00Z)
  |> filter(fn: (r) => r._measurement == "snitchbot_toots")
  |> filter(fn: (r) => r._field == "count")
  |> aggregateWindow(every: 1mo, fn: sum)
```
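Without InfluxDB to hand, similar per-month totals can be computed offline from the line protocol itself. This hypothetical Python sketch sums a named field (such as `count`) per UTC calendar month. It assumes the simple three-token lines produced by the jq filter (measurement, one field set, nanosecond timestamp). Months with no toots simply don't appear in its output.

```python
from collections import Counter
from datetime import datetime, timezone


def monthly_totals(lines, field):
    """Sum `field` per UTC calendar month from simple line-protocol records,
    e.g. 'snitchbot_toots count=1 1672531200000000000'."""
    totals = Counter()
    for line in lines:
        if not line.strip():
            continue  # tolerate blank lines
        measurement, fields, ts = line.split()
        if measurement != "snitchbot_toots":
            continue
        for kv in fields.split(","):
            key, value = kv.split("=")
            if key == field:
                # Timestamps are in nanoseconds; convert to seconds for datetime
                when = datetime.fromtimestamp(int(ts) // 1_000_000_000, tz=timezone.utc)
                totals[when.strftime("%Y-%m")] += int(value)
    return dict(totals)
```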

We can already see that, although there have been gaps in the past, detections are way down[4].

The total number of tooted hits is 180. Actual detections/receipts are a little higher (198) because the system detected a number of bots before I’d publicly launched.

To help assess the false positive rate, I modified the jq statement to match toots referring to false positives:

```jq
.[] |
select(.content | ascii_downcase | (contains("positive") or contains("false"))) |
.created_at |
split(".")[0] |                  # strip fractional seconds
strptime("%Y-%m-%dT%H:%M:%S") |
mktime |                         # treat the parsed time as UTC, giving epoch seconds
"snitchbot_toots fp=1 \(.)000000000"
```

Note: It’s possible that this resulted in an incomplete data-set. I’ve always tried to make sure that I toot about false positives, but I also don’t know whether I’ve ever forgotten.

Querying this data shows that false positives have been pretty rare:

```flux
from(bucket: "testing_db")
  |> range(start: 2023-01-01T00:00:00Z)
  |> filter(fn: (r) => r._measurement == "snitchbot_toots")
  |> filter(fn: (r) => r._field == "fp")
  |> aggregateWindow(every: 1mo, fn: sum)
```

That spike in August was the result of Fedifetcher repeatedly tripping detections.

A quick bit of maths suggests that Scraper Snitch has had a false positive rate of between 2.25% and 2.77% (the variance depends on whether you count tooted disclosures or receipt files).
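Written out, the rate is the number of false positives, $n_{\text{fp}}$, divided by the number of detections, $N$, where $N$ is either the 180 tooted hits or the 198 receipts files:

$$
\text{FP rate} = \frac{n_{\text{fp}}}{N} \times 100\%, \qquad N \in \{180,\ 198\}
$$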

Although that’s not a terrible false positive rate, it’s still higher than I’d like and I certainly wouldn’t feel comfortable increasing it much further[5].

An Argument For Keeping The System Online

Having written all of this out, I think that there may be a reasonable argument that I’ve been concerned about relative trivialities.

I certainly went into this believing that the false positive rate was much higher than it actually is.

Although my concerns about AI scraper behaviour are (IMO) valid, the aim of the system has never really been to protect against that sort of scraper.

In fact, that’s even noted in the original launch post:

Within the data-set generated by my log-agent, there are clear indications that some scrapers are attempting to circumvent IP based blocklists using a range of different techniques.

Data about these scrapers is not published into botreceipts, because it’s difficult to automatically differentiate (at least, with any degree of certainty) between those and mistaken requests by legitimate users.

Scraper Snitch’s original purpose was to help watch for (and alert about) one-person-band style fedi-search scrapers (most of which probably aren’t going to go out of their way to obfuscate scraping in the ways described above).

Scraper Snitch is still very capable of doing that.

We also often only find out which results were most meaningful once we’ve got the benefit of hindsight. Although I’ve characterised recent results as less useful, the truth is that they might later prove to be much more meaningful than I currently realise.

Conclusion

For a while now, I’ve been on the fence about whether or not to continue to operate Scraper Snitch.

It doesn’t really cost much to run and I haven’t had to spend much time maintaining or tweaking it (although some time investment is going to be needed in future).

On the other hand, I still feel that it has become less useful and I’ve grown increasingly concerned that the consequences of a single false positive could outweigh the limited benefit that the system now delivers.

Scraper Snitch wasn’t written to counter the kind of behaviour observed amongst AI spiders and there’s an open question about whether we need to worry about small indexing projects in a world where abuse is sometimes just a ChatGPT or Perplexity question away.

Even when coming to write this post, there was a lot of indecision: was I proposing taking away something that the fedi community might later come to miss?

Part of the aim of writing this post was to see whether it cemented my feelings in either direction.

It hasn’t.

I think I now lean more towards keeping it running, but I also don’t believe that I’ve made a truly decisive argument in either direction.

So, I’ve decided to put the question out there: should I keep the service running or turn it off?

I can’t promise to abide by the result if it proves not to be realistic to do so, but if there’s strength of feeling in either direction it’ll likely help me reach an actual decision on the future of the bot.

[1] Though there were suspicions: "If OpenAI obtained its training data through trawling the internet, it’s unlawful" - Hanff

[2] They don’t like it when it’s done to them, though.

[3] Despite how badly Musk’s initial handling of Twitter went, along with its ongoing affinity for the far right, who could possibly have predicted that things would progress to users being driven away by Twitter’s AI generating CSAM on demand?

[4] Technically, these stats are incomplete: a new detection fired just after I’d collected metrics, followed by another while I was drafting this post.

[5] While this isn’t life-and-death stuff, there are consequences to false positives: legitimate instances/apps could end up fediblocked without knowing (and with no easy way to have the block reverted). Conversely, under-reporting could lead to posts being included in some gobshite’s index and weaponised to aid targeted harassment.