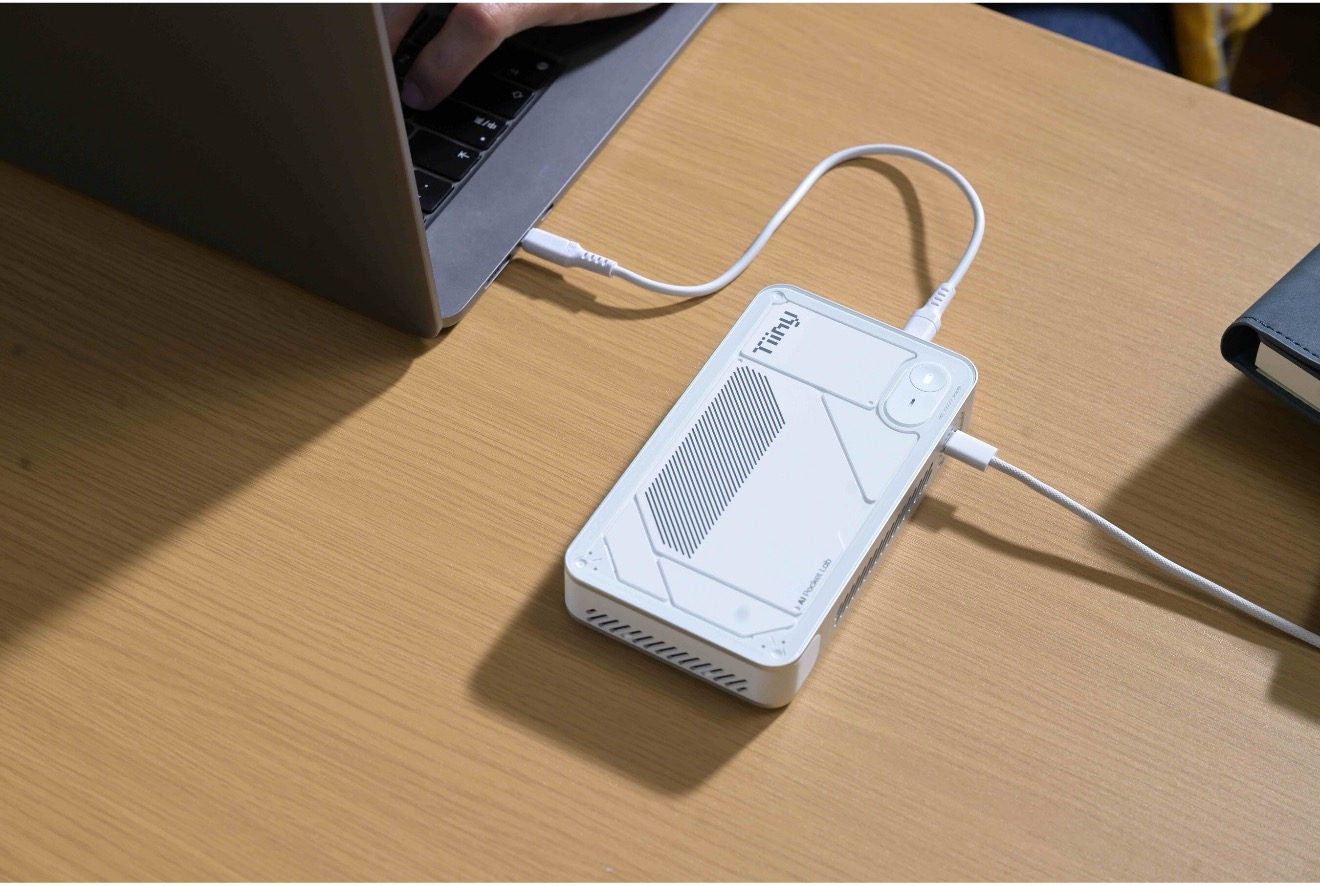

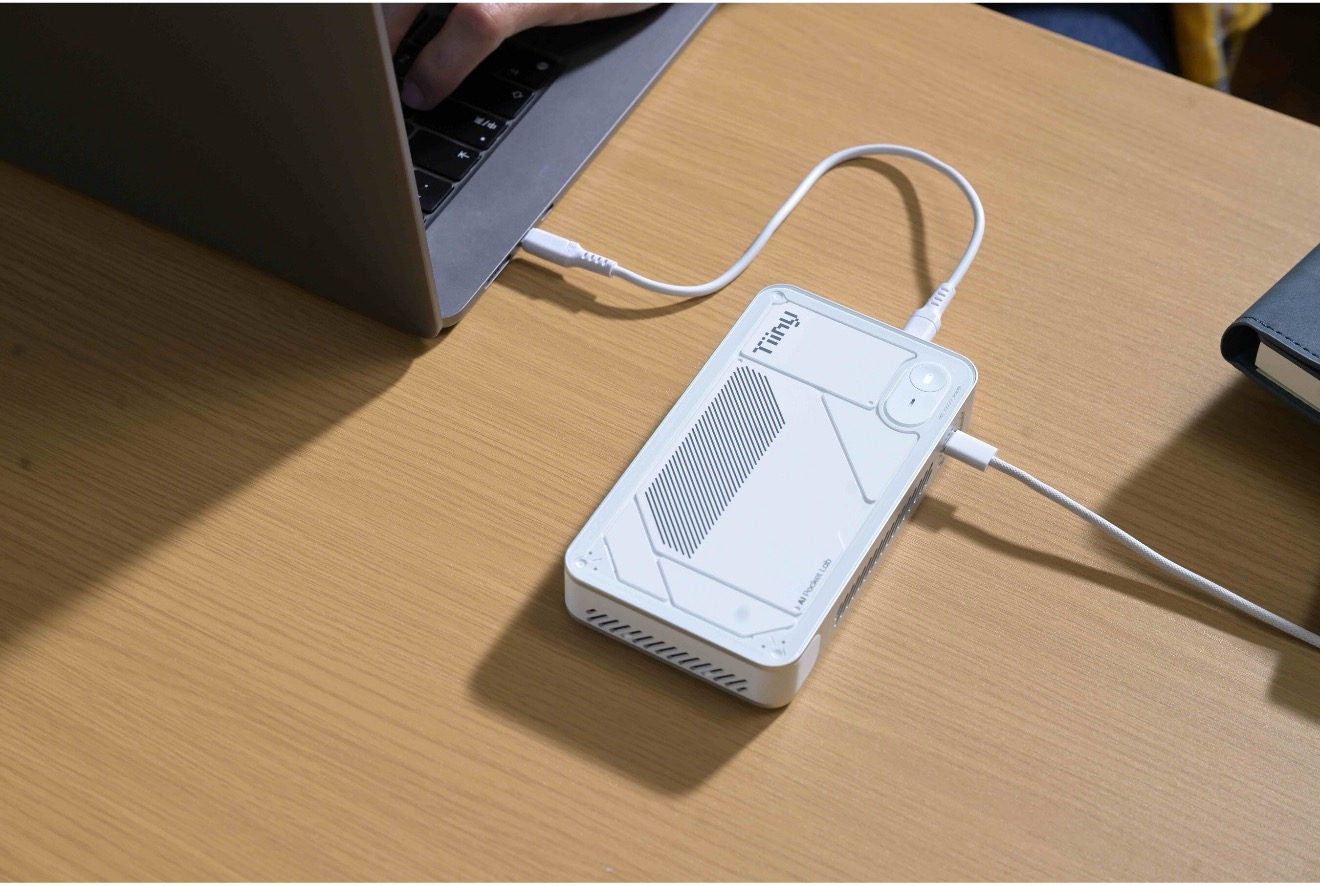

Tiiny AI, a start-up founded in 2024 by alumni of MIT, Stanford, HKUST, SJTU, Intel, and Meta, has unveiled the Pocket Lab, a pocket‑sized AI computer that runs massive language models locally. The device measures just 14.2 × 8 × 2.5 cm and weighs 300 g. Its 12‑core Armv9.2 chip, with Neon, SVE2, and SME2 engines, runs models of up to 120 billion parameters within a 65 W envelope. Backed by a multimillion-dollar seed round, the device promises private, fast, energy‑efficient AI for personal and small‑team use, and will be available after CES for $455.

(Source: Tiiny AI Inc.)

Tiiny AI introduced its Tiiny AI Pocket Lab as a compact personal AI computer that runs large language models locally. The system targets local deployment of models up to 120 billion parameters within a 65 W power envelope, focusing on energy efficiency, latency reduction, and data privacy for individual users and small teams. The company was founded in 2024 by engineers from universities such as MIT, Stanford, HKUST, and SJTU, as well as from companies including Intel and Meta, bringing expertise in AI inference optimization, systems engineering, and hardware–software co-design. In 2025, Tiiny AI raised a multimillion-dollar seed round from international investors to commercialize this platform.

Tiiny AI Pocket Lab executes advanced personal AI workloads entirely on-device, including multi-step reasoning, long-context comprehension, agent-style workflows, and offline content generation. The architecture aims to support scenarios such as private document analysis, secure creative work, and research assistance without relying on remote servers, with user data, preferences, and documents stored locally under strong encryption. Tiiny AI sees models of roughly 10 billion to 100 billion parameters as the practical balance between capability and resource cost for personal use, with the option to scale up to 120 billion parameters when a workload demands more model capacity.

The little box is rated at a 30 W TDP and 65 W typical power, measures 14.2 × 8 × 2.53 cm, and weighs approximately 300 g. Pocket-sized, indeed.

The company ties this product to the broader growth of the large language model market, which analysts expect to expand quickly across retail, e‑commerce, healthcare, and media. Tiiny AI identifies cloud dependence and energy consumption as structural constraints on that growth and emphasizes an on-device approach instead. The Pocket Lab relies on TurboSparse, a fine-grained sparse-activation method that reduces inference computation while preserving model behavior, and on PowerInfer, a heterogeneous inference engine that distributes work across the CPU and NPU to improve throughput under tight power budgets. The software environment supports one-click deployment of multiple open-source LLMs and agents, and uses over-the-air updates to refresh both the software and selected hardware modules.
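Neither TurboSparse nor PowerInfer is described in detail here, so the following is only a toy sketch of the two ideas, assuming a single ReLU feed-forward layer in plain Python. With ReLU, any neuron whose pre-activation is negative contributes exactly zero, so skipping it saves work without changing the output. The hot/cold split loosely follows the idea associated with PowerInfer: frequently active ("hot") neurons go to one processor, the rest ("cold") go to another, and the partial results are summed. Real sparse-inference engines are described as predicting which neurons will fire before doing the work; this toy simply computes each activation and skips the down-projection for inactive neurons. All function names, the activation profile, and the "NPU"/"CPU" labels are hypothetical stand-ins, and nothing here runs on real accelerators.

```python
"""Toy sketch: sparse activation plus a hot/cold neuron split.

Hypothetical illustration only; not TurboSparse or PowerInfer code.
"""
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def relu(v: float) -> float:
    return v if v > 0.0 else 0.0


def dense_ffn(x: Vector, w_up: Matrix, w_down: Matrix) -> Vector:
    """Reference FFN: every hidden neuron is evaluated and used."""
    out = [0.0] * len(w_down[0])
    for i, row in enumerate(w_up):
        h = relu(sum(a * b for a, b in zip(row, x)))
        for j, w in enumerate(w_down[i]):
            out[j] += h * w
    return out


def sparse_partial(x: Vector, w_up: Matrix, w_down: Matrix,
                   neurons: List[int]) -> Tuple[Vector, int]:
    """Contribution of the given neurons, skipping ones that output zero."""
    out = [0.0] * len(w_down[0])
    skipped = 0
    for i in neurons:
        h = relu(sum(a * b for a, b in zip(w_up[i], x)))
        if h == 0.0:
            skipped += 1  # inactive: its down-projection row is never read
            continue
        for j, w in enumerate(w_down[i]):
            out[j] += h * w
    return out, skipped


def split_hot_cold(activation_counts: List[int],
                   hot_fraction: float) -> Tuple[List[int], List[int]]:
    """Put the most frequently active neurons (per a profile) in the 'hot' set."""
    order = sorted(range(len(activation_counts)),
                   key=lambda i: activation_counts[i], reverse=True)
    n_hot = max(1, int(len(order) * hot_fraction))
    return sorted(order[:n_hot]), sorted(order[n_hot:])


def heterogeneous_ffn(x: Vector, w_up: Matrix, w_down: Matrix,
                      hot: List[int], cold: List[int]) -> Tuple[Vector, int]:
    """Hot neurons on a hypothetical 'NPU', cold ones on the 'CPU'; sum partials."""
    npu_out, npu_skipped = sparse_partial(x, w_up, w_down, hot)
    cpu_out, cpu_skipped = sparse_partial(x, w_up, w_down, cold)
    return [a + b for a, b in zip(npu_out, cpu_out)], npu_skipped + cpu_skipped
```

Calling `heterogeneous_ffn` with a split from `split_hot_cold` should return the same vector as `dense_ffn`, plus a count of neurons whose down-projection was skipped; the saving grows with how many neurons stay inactive for a given input.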

The device is powered by a 12-core Armv9.2 CPU with 80 GB of LPDDR5X memory and a 1 TB SSD. But can a little Arm CPU offer any special capability for AI?
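A quick back-of-the-envelope check shows why 80 GB of memory matters for the 120-billion-parameter ceiling. The article does not say what numeric precision the models use, so this sketch simply assumes a few common weight widths and counts the weights alone, ignoring the KV cache, activations, and the operating system.

```python
def weight_footprint_gb(params: float, bits_per_weight: int) -> float:
    """Decimal gigabytes (1e9 bytes) needed for the weights alone."""
    return params * bits_per_weight / 8 / 1e9


PARAMS = 120e9  # 120-billion-parameter ceiling quoted for the device
RAM_GB = 80     # LPDDR5X capacity quoted for the device

# Assumed weight precisions; the article does not say which one is used.
for bits in (16, 8, 4):
    need = weight_footprint_gb(PARAMS, bits)
    verdict = "fits" if need < RAM_GB else "does not fit"
    print(f"{bits:>2}-bit weights: {need:5.0f} GB -> {verdict} in {RAM_GB} GB")
```

Under a 4-bit assumption the weights take about 60 GB, leaving roughly 20 GB for everything else; at 8 or 16 bits they would not fit in memory at all.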

A 12‑core Armv9.2‑A part does come with several AI‑friendly blocks. Each core includes the Advanced SIMD (Neon) unit, which handles classic 128‑bit SIMD operations. On top of that, the architecture adds the Scalable Vector Extension 2 (SVE2), whose vector registers can be anywhere from 128 to 2,048 bits wide, as chosen by the chip designer. Because SVE2 code is written to be vector‑length agnostic, the same binary runs unchanged on any of those widths and uses whatever the hardware provides, which benefits matrix‑multiply and other vector‑heavy kernels.
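The vector-length-agnostic idea behind SVE2 can be illustrated with a plain-Python toy. It is not real SVE code: the hypothetical `vl_bits` parameter stands in for the hardware's vector length, the predicate list mimics what a `WHILELT` instruction produces, and stepping by the lane count mimics `INCW`. The point is that the loop never hard-codes a width, so it gives the same answer at 128, 256, 512, or 2,048 bits, including for an array length that is not a multiple of the vector width.

```python
"""Toy model of SVE-style vector-length-agnostic code (plain Python, not SVE)."""
from typing import List


def saxpy_vla(a: float, x: List[float], y: List[float], vl_bits: int) -> List[float]:
    """Compute a*x + y in vector-sized chunks without hard-coding the width."""
    if vl_bits % 128 or not 128 <= vl_bits <= 2048:
        raise ValueError("expected a multiple of 128 bits between 128 and 2048")
    lanes = vl_bits // 32  # 32-bit lanes per vector (modeled; Python floats are 64-bit)
    out = list(y)
    i = 0
    while i < len(x):
        # Predicate: which lanes still point inside the array (cf. WHILELT).
        pred = [i + k < len(x) for k in range(lanes)]
        for k, active in enumerate(pred):
            if active:  # inactive tail lanes are simply left untouched
                out[i + k] = a * x[i + k] + y[i + k]
        i += lanes  # advance by one full vector (cf. INCW)
    return out


if __name__ == "__main__":
    xs = [float(n) for n in range(37)]  # deliberately not a multiple of any width
    ys = [1.0] * len(xs)
    results = [saxpy_vla(2.0, xs, ys, vl) for vl in (128, 256, 512, 2048)]
    print(all(r == results[0] for r in results))
```

Real SVE hardware applies the predicate in a single instruction rather than a Python loop, but the control flow (query the width, mask the tail, advance by one vector) has the same shape.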

The chip also implements the Scalable Matrix Extension 2 (SME2), which is optional in Armv9. SME2 provides dedicated matrix instructions, such as outer-product-and-accumulate operations, and a dedicated ZA storage array that can be used for both vector and matrix work, with claimed speedups of up to 6× for LLM inference and similar gains for vision and audio tasks.
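SME is built around accumulating outer products into the ZA array. The toy below, again plain Python rather than real SME code, shows the underlying math: a matrix product can be built as a sum of outer products of A's columns with B's rows, which is roughly what a sequence of SME `FMOPA` (floating-point outer product and accumulate) instructions does on a hardware tile. The `za` list is a hypothetical stand-in for that tile, and `matmul_dot` is included only as a reference to check against.

```python
"""Toy model of outer-product matrix multiply (plain Python, not SME code)."""
from typing import List

Matrix = List[List[float]]


def matmul_outer_products(a: Matrix, b: Matrix) -> Matrix:
    """C = A @ B built as a sum of outer products, accumulated in a 'ZA' tile."""
    rows, inner, cols = len(a), len(b), len(b[0])
    if any(len(r) != inner for r in a):
        raise ValueError("inner dimensions do not match")
    za = [[0.0] * cols for _ in range(rows)]  # zeroed accumulator tile
    for k in range(inner):
        col_a = [a[r][k] for r in range(rows)]  # column k of A
        row_b = b[k]                            # row k of B
        # One outer-product-and-accumulate step: za += col_a x row_b
        for r in range(rows):
            for c in range(cols):
                za[r][c] += col_a[r] * row_b[c]
    return za


def matmul_dot(a: Matrix, b: Matrix) -> Matrix:
    """Reference: each output element as a row-by-column dot product."""
    return [[sum(a[r][k] * b[k][c] for k in range(len(b)))
             for c in range(len(b[0]))]
            for r in range(len(a))]
```

The outer-product form reuses each loaded column and row across a whole tile of results, which is why matrix hardware favors it over computing one dot product at a time.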

So yes, the CPU has a traditional SIMD engine (Neon), a length‑agnostic vector engine (SVE2), and a matrix math engine (SME2), all of which make it particularly well‑suited to AI workloads. The Pocket Lab will be available after CES for $455. Too bad; it would have been a great stocking stuffer.
