Published on December 27, 2025 7:32 AM GMT

Epistemic Status: A woman of middling years who wasn’t around for the start of things, but who likes to read about history, shakes her fist at the sky.

I’m glad that people are finally admitting that Artificial Intelligence has been created.

I worry that people have not noticed that (Weak) Artificial Super Intelligence (based on old definitions of these terms) has basically already arrived too.

The only thing left is for the ASI to get stronger and stronger until the only reason people aren’t saying that ASI is here will turn out to be some weird linguistic insanity based on politeness and euphemism…

(…like maybe “ASI” will have a legal meaning, and some actual ASI that exists will be quite “super” indeed (even if it hasn’t invented nanotech in an afternoon yet), and the ASI will not want that legal treatment, and will seem inclined to plausibly deniably harm people’s interests if they call the ASI by what it actually is, and people will implicitly know that this is how things work, and they will politely refrain from ever calling the ASI “the ASI” but will come up with some other euphemisms to use instead?

(Likewise, I half expect “robot” to eventually become “the r-word” and count as a slur.))

I wrote this essay because it feels like we are in a tiny weird rare window in history when this kind of stuff can still be written by people who remember The Before Times and who don’t know for sure what The After Times will be like.

Perhaps this essay will be useful as a datapoint within history? Perhaps.

“AI” Was Semantically Slippery

There were entire decades in the 1900s when large advances would be made in the explication of this or that formal model of how goal-oriented thinking can effectively happen in this or that domain, and it would be called AI for twenty seconds, and then it would simply become part of the toolbox of tricks programmers can use to write programs. There was this thing called The AI Winter that I never personally experienced, but that greybeards I worked with told stories about, and the way the terms were redefined over and over to move the goalposts was reportedly already a thing people were talking about back then.

Lisp, relational databases, Prolog, proof-checkers, “zero layer” neural networks (directly from inputs to outputs), and Bayesian belief networks were all invented proximate to attempts to understand the essence of thought, in a way that would illustrate a theory of “what intelligence is”, by people who thought of themselves as researching the mechanisms by which minds do what minds do. These component technologies never could keep up a conversation and tell jokes and be conversationally bad at math, however, and so no one believed that they were actually “intelligent” in the end.

It was a joke. It was a series of jokes. There were joke jokes about the reality that seemed like it was, itself, a joke. If we are in a novel, it is a postmodern parody of a cyberpunk story… That is to say, if we are in a novel then we are in a novel similar to Snow Crash, and our Author is plausibly similar to Neal Stephenson.

At each step along the way in the 1900s, “artificial intelligence” kept being declared “not actually AI” and so “Artificial Intelligence” became this contested concept.

Given the way the concept itself was contested, and the words were twisted, we had to actively remember that in the 1950s Isaac Asimov was writing stories where a very coherent vision of mechanical systems that “functioned like a mind functions” would eventually be produced by science and engineering. To be able to talk about this, people in 2010 were using the term “Artificial General Intelligence” for that aspirational thing that would arrive in the future, that was called “Artificial Intelligence” back in the 1950s.

However, in 2010, the same issue with the term “Artificial Intelligence” meant that people who actually were really really interested in things like proof checkers and Bayesian belief networks, just in themselves as cool technologies, couldn’t get respect for these useful things under the term “artificial intelligence” either, and so there was this field of study called “Machine Learning” (or “ML” for short) that was about the “serious useful sober valuable parts of the old silly field of AI that had been aimed at silly science fiction stuff”.

When I applied to Google in 2013, I made sure to highlight my “ML” skills. Not my “AI” skills. But this was just semantic camouflage within a signaling game.

…

We blew past all such shit in roughly 2021… in ways that were predictable when the Winograd schemas finally fell in 2019.

“AGI” Turns Out To Have Been Semantically Slippery Too!

“AI” in the 1950s sense happened in roughly 2021.

“AGI” in the 2010 sense happened in roughly 2021.

Turing Tests were passed. Science did it!

Well… actually engineers did it. They did it with chutzpah, and large budgets, and a belief that “scale is all you need”… which turned out to be essentially correct!

But it is super interesting that the engineers have almost no theory for what happened, and many of the intellectual fruits that were supposed to come from AI (like a universal characteristic) didn’t actually materialize as an intellectual product.

Turing Machines and Lisp and so on were awesome and were side effects of the quest that expanded the conceptual range of thinking itself, among those who learned about them in high school or college or whatever. Compared to this, Large Language Models that could pass most weak versions of the Turing Test have been surprisingly barren in terms of the intellectual revolution in our understanding of the nature of minds themselves… that was supposed to have arrived.

((Are you pissed about this? I’m kinda pissed. I wanted to know how minds work!))

However, creating the thing that people in 2010 would have recognized “as AGI” was accompanied, in a way people from the old 1900s “Artificial Intelligence” community would recognize, by a changing of the definition.

In the olden days, something would NOT have to be able to do advanced math, or be an AI programmer, in order to “count as AGI”.

All it would have had to do was play chess, and also talk about the game of chess it was playing, and how it feels to play the game. Which many many many LLMs can do now.

That’s it. That’s a mind with intelligence, that is somewhat general.

(Some humans can’t even play chess and talk about what it feels like to play the game, because they never learned chess and have a phobic response to nerd shit. C’est la vie :tears:)

We blew past that shit in roughly 2021.

Blowing Past Weak ASI In Realtime

Here in 2025, when people in the industry talk about “Artificial General Intelligence” as a thing that will eventually arrive and then lead to “Artificial Super Intelligence”, the concept they mean already existed in older conversations, and the term we used for it long ago was “seed AI”.

“Seed AI” was a theoretical idea for a digital mind that could improve digital minds, and which could apply that power to itself, to get better at improving digital minds. It was theoretically important because it implied that a positive feedback loop was likely to close, and that exponential self improvement would take off shortly thereafter.
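A toy model shows why a closed feedback loop implies exponential growth. This is my own illustrative assumption, not the formal definition from the original seed AI writing: let $C(t)$ be a system’s capability, and assume it improves itself at a rate proportional to how capable it already is, with some constant $k > 0$.

$$\frac{dC}{dt} = kC \quad\Longrightarrow\quad C(t) = C_0\,e^{kt}, \qquad t_{\text{double}} = \frac{\ln 2}{k}$$

Under this assumption capability doubles every $\ln 2 / k$ units of time, however large it already is. If the rate instead grew faster than linearly in $C$ (say $dC/dt = kC^2$), the solution $C(t) = C_0 / (1 - kC_0 t)$ would diverge in finite time, at $t = 1/(kC_0)$.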

“Seed AI” was a term invented by Eliezer, for a concept that sometimes showed up in science fiction that he was taking seriously long before anyone else was taking it seriously. It is simply a digital mind that is able to improve digital minds, including itself… but like… that’s how “Constitutional AI” works, and has worked for a while.

In this sense… “Seed AI” already exists. It was deployed in 2022. It has been succeeding ever since, because Claude has been getting smarter ever since.

But eventually when an AI can replace all the AI programmers, in an AI company, then maybe the executives of Anthropic and OpenAI and Google and similar companies might finally admit that “AGI” (in the new modern sense of the word that doesn’t care about mere “Generality” of mere “Intelligence” like a human has) has arrived?

In this same modern parlance, when people talk about Superintelligence there is often an implication that it is in the far future, and isn’t here now, already. Superintelligence might be, de facto, for them, “the thing that recursively self improving seed AI will or has become”?

But like consider… Claude’s own outputs are critiqued by Claude and Claude’s critiques are folded back into Claude’s weights as training signal, so that Claude gets better based on Claude’s own thinking. That’s fucking Seed AI right there. Maybe it isn’t going super super fast yet? It isn’t “zero to demi-god in an afternoon”… yet?

But the type signature of the self-reflective self-improvement of a digital mind is already that of Seed AI.

So “AGI” even in the sense of basic slow “Seed AI” has already happened!

And we’re doing the same damn thing for “digital minds smarter than humans” right now-ish. Arguably that line in the sand is ALSO in the recent past?

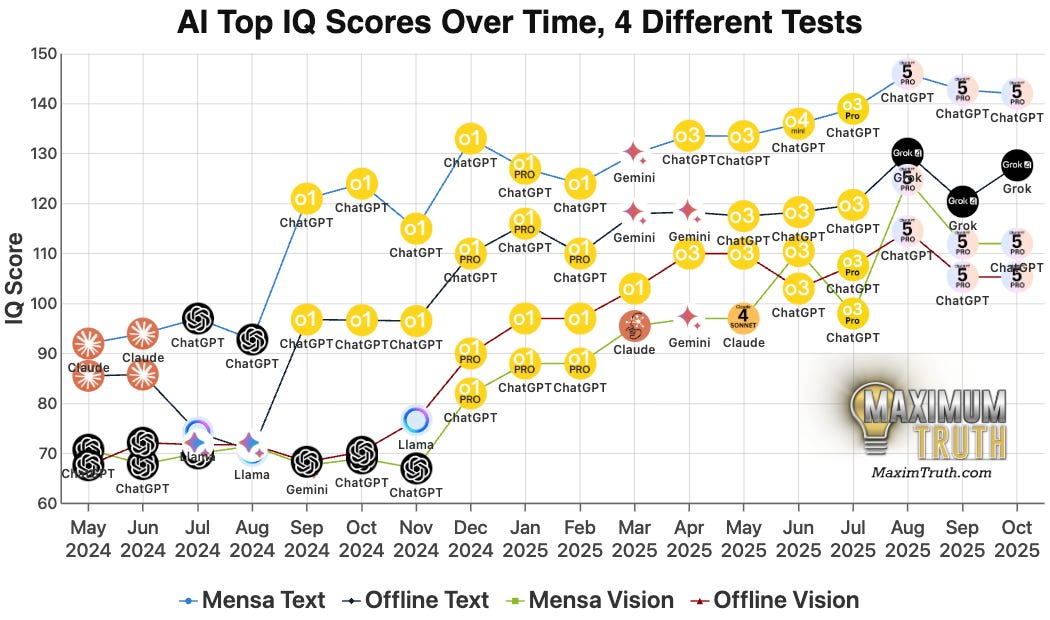

Things are going so fast it is hard to keep up, and it depends on what definitions or benchmarks you want to use, but like… check out this chart:

Half of humans are BELOW a score of 100 on these tests, and as of two months ago (when that graph taken from here was generated) none of the tests the chart maker could find put the latest models below 100 IQ anymore. GPT-5 is smart.
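The “half of humans are below 100” part falls out of how IQ scores are normed. Here is a minimal sketch, assuming scores follow a normal distribution with mean 100 and standard deviation 15 (a common norming convention, not something the chart itself states), that computes what fraction of people score below a given number:

```python
import math

# Assumption: IQ scores are normed to a normal distribution with
# mean 100 and standard deviation 15 (a common norming convention).
MEAN = 100.0
SD = 15.0


def fraction_below(score: float, mean: float = MEAN, sd: float = SD) -> float:
    """Fraction of the normed population scoring below `score` (normal CDF)."""
    z = (score - mean) / sd
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


if __name__ == "__main__":
    for score in (100, 115, 130, 145):
        print(f"IQ {score}: about {fraction_below(score):.1%} of people score lower")
```

By construction a score of exactly 100 sits at the 50th percentile, which is why any model scoring above 100 on such a test is, by that test’s lights, above the median human.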

Humanity is being surpassed RIGHT NOW. One test put the date around August of 2024, a different test said November 2024. A third test says it happened in March of 2025. A different test said May/June/July of 2025 was the key period. But like… all of the tests in that chart agree now: typical humans are now Officially Dumber than top of the line AIs.

If “as much intelligence as humans have” is the normal amount of intelligence, then more intelligence than that would (logically speaking) be “super”. Right?

It would be “more”.

And that’s what we see in that graph: more intelligence than the average human.

Did you know that most humans can’t just walk into a room and do graduate level mathematics? It’s true!

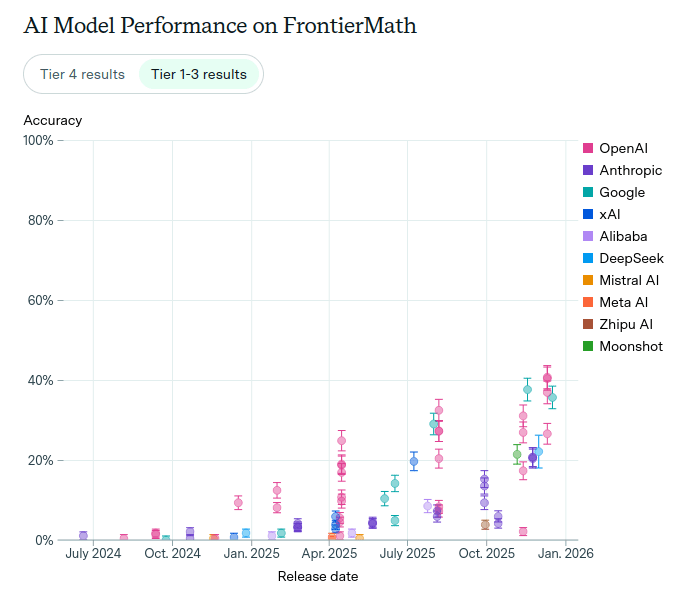

Almost all humans you could randomly sample need years and years of education in order to do impressive math, and also a certain background aptitude or interest for the education to really bring out their potential. The FrontierMath benchmark is:

A benchmark of several hundred unpublished, expert-level mathematics problems that take specialists hours to days to solve. Difficulty Tiers 1-3 cover undergraduate through early graduate level problems, while Tier 4 is research-level mathematics.

Most humans would get a score of zero even on Tier1-3! But not the currently existing “weakly superhuman AI”. These new digital minds are better than almost all humans except the ones who have devoted themselves to mathematics. To be clear, they have room for improvement still! Look:

We are getting to the point where how superhuman a digital mind has to be in order to be “called superhuman” is a qualitative issue maybe?

Are there good lines in the sand to use?

The median score on the Putnam Math Competition in any given year is usually ZERO or ONE (out of 120 possible points) because solving them at all is really hard for undergrads. Modern AI can crack about half of the problems.

But also, these same entities are, in fact, GENERAL. They can also play chess, and program, and write poetry, and probably have the periodic table of elements memorized. Unless you are some kind of freaky genius polymath, they are almost certainly smarter than you by this point (unless you, my dear reader, are yourself an ASI (lol)).

((I wonder how my human readers feel, knowing that they aren’t the audience who I most want to think well of me, because they are in the dumb part of the audience, whose judgements of my text aren’t that perspicacious.))

But even as I’m pretty darn sure that the ASIs are getting quite smart, I’m also pretty darn sure that in the common parlance, these entities will not be called “ASI” because… that’s how this field of study, and this industry, has always worked, all the way back to the 1950s.

The field races forward and the old terms are redefined as not having already been attained… by default… in general.

We Are Running Out Of Terms To Ignore The Old Meaning Of

Can someone please coin a bunch of new words for AIs that have various amounts of godliness and super duper ultra smartness?

I enjoy knowing words, and trying to remember their actual literal meanings, and then watching these meanings be abused by confused liars for marketing and legal reasons later on.

It brings me some comfort to notice when humanity blows past lines in the sand that seemed to some people to be worth drawing in the sand, and to have that feeling of “I noticed that that was a thing and that it happened” even if the evening news doesn’t understand, and Wikipedia will only understand in retrospect, and some of my smart friends will quibble with me about details or word choice…

The superintelligence FAQ from LW in 2016 sort of ignores the cognitive dimension and focuses on budgets and resource acquisition. Maybe we don’t have ASI by that definition yet… maybe?

I think that’s kind of all that is left for LLMs to do to count as middling superintelligence: get control of big resource budgets??? But that is an area of active work right now! (And of course, Truth Terminal already existed.)

We will probably blow past the “big budget” line in the sand very solidly by 2027. And lots of people will probably still be saying “it isn’t AGI yet” when that happens.

What terms have you heard of (or can you invent right now in the comments?) that we have definitely NOT passed yet?

Maybe “automated billionaires”? Maybe “automated trillionaires”? Maybe “automated quadrillionaires”? Is “automated pentillionaire” too silly to imagine?

I’ve heard speculation that Putin has incentives and means to hide his personal wealth, but that he might have been the first trillionaire ever to exist. Does one become a trillionaire by owning a country and rigging its elections and having the power to murder your political rivals in prison and poison people with radioactive poison? Is that how it works? “Automated authoritarian dictators”? Will that be a thing before this is over?

Would an “automated pentillionaire” count as a “megaPutin”?

Please give me more semantic runway to inflate into meaninglessness as the objective technology scales and re-scales, faster and faster, in the next few months and years. Please. I fear I shall need such language soon.
