Published on January 20, 2026 12:58 AM GMT

In a software-only takeoff, AIs improve AI-related software at an increasing speed, leading to superintelligent AI. The plausibility of this scenario is relevant to questions like:

- How much time do we have between near-human and superintelligent AIs?

- Which actors have influence over AI development?

- How much warning does the public have before superintelligent AIs arrive?

Knowing when and how much I expect to learn about the likelihood of such a takeoff helps me plan for the future, and so is quite important. This post presents possible events that would update me towards a software-only takeoff.

What are returns to software R&D?

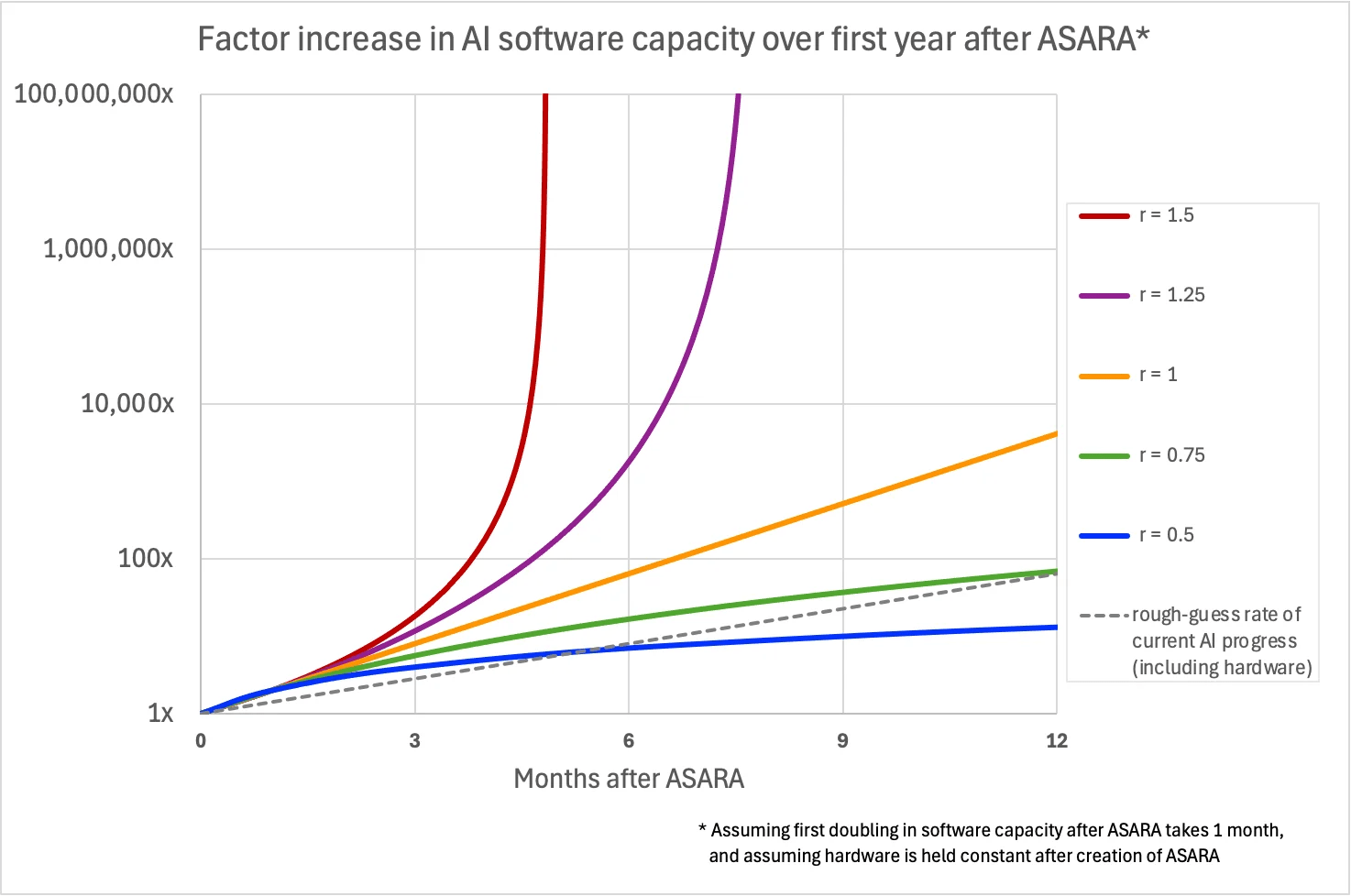

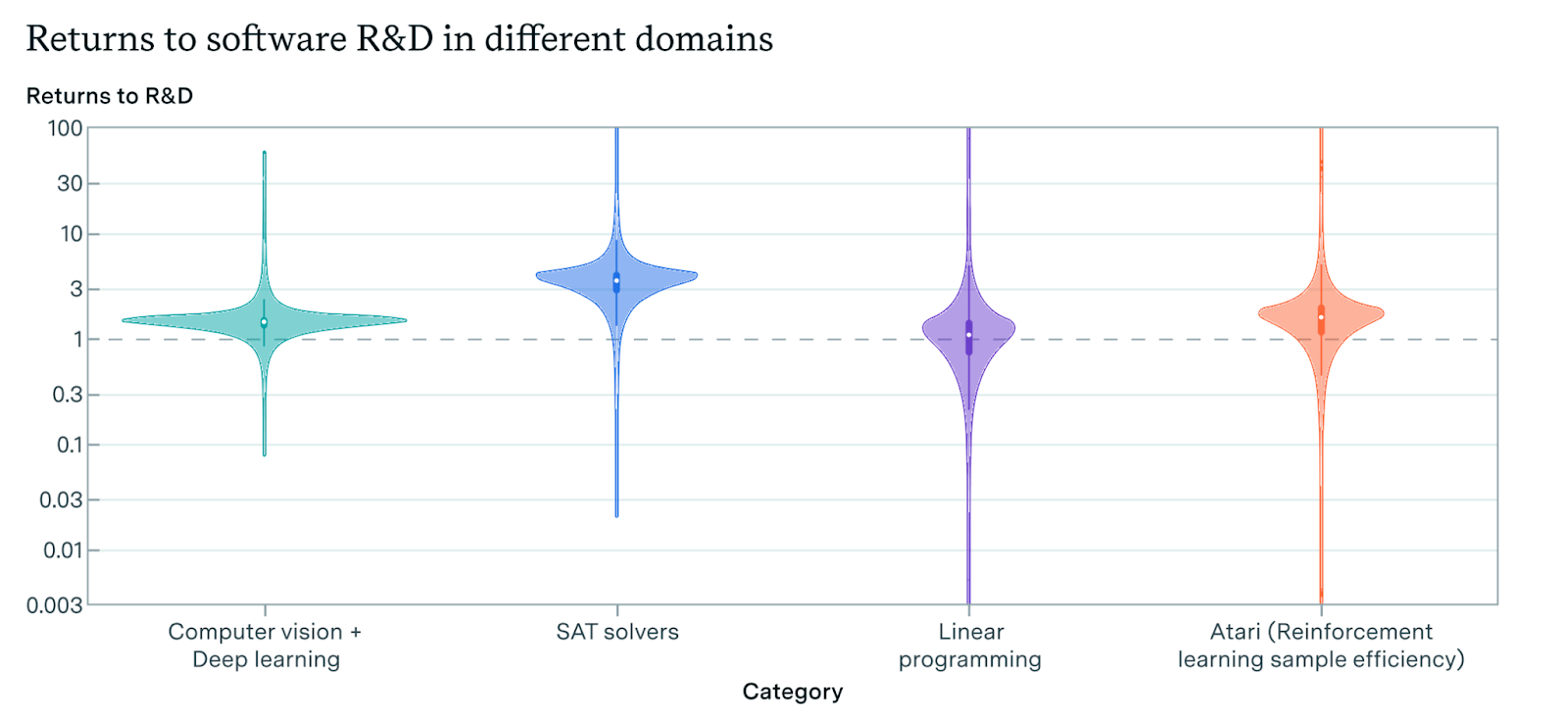

The key variable determining whether software progress alone can produce rapid, self-sustaining acceleration is returns to software R&D (r), which measures how output scales with labor input. Specifically, if we model research output as:

$$O \propto I^{r}$$

where O is research output (e.g. algorithmic improvements) and I is the effective labor input (AI systems weighted by their capability), then r captures the returns to scale.

If r is greater than 1, doubling the effective labor input of your AI researchers produces sufficient high-quality research to more than double the effective labor of subsequent generations of AIs, and you quickly get a singularity, even without any growth in other inputs. If it’s less than 1, software improvements alone can’t sustain acceleration, so slower feedback loops like hardware or manufacturing improvements become necessary to reach superintelligence, and takeoff is likely to be slower.
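The threshold at r = 1 can be made concrete with a toy model. This is a minimal sketch under assumptions introduced here for illustration, not taken from the analysis above: compute is held fixed, AI research labor is proportional to the current software level S, and S grows as (cumulative research)^r with r constant. Under those assumptions dS/dt ∝ S^(2 - 1/r), so each doubling of S takes 2^(1/r - 1) times as long as the one before. When r > 1 that factor is below 1, so doubling times shrink geometrically and unboundedly many doublings fit in a finite time; when r < 1 they grow.

```python
# Toy feedback model (illustrative assumptions, not from the post):
# fixed compute, research labor proportional to software level S,
# and S = (cumulative research) ** r with constant r.
# Then dS/dt ∝ S ** (2 - 1/r), and each doubling of S takes
# 2 ** (1/r - 1) times as long as the previous doubling.


def doubling_times(r: float, first: float = 1.0, n: int = 10) -> list[float]:
    """Durations of the first n successive software doublings."""
    if r <= 0:
        raise ValueError("r must be positive")
    factor = 2 ** (1 / r - 1)
    return [first * factor**k for k in range(n)]


def time_to_infinite_doublings(r: float, first: float = 1.0) -> float:
    """Total time for unboundedly many doublings; finite only when r > 1."""
    if r <= 0:
        raise ValueError("r must be positive")
    factor = 2 ** (1 / r - 1)
    if factor >= 1:
        return float("inf")
    # Geometric series: first * (1 + factor + factor**2 + ...)
    return first / (1 - factor)


for r in (0.5, 1.0, 2.0):
    times = doubling_times(r, n=4)
    print(r, [round(t, 3) for t in times], time_to_infinite_doublings(r))
```

With r = 2, each doubling takes about 71% as long as the previous one, and the total time for unboundedly many doublings is 2 + √2 ≈ 3.41 times the first doubling time. The function names are hypothetical, and real takeoff models add compute growth and changing r.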

A software-only singularity could be avoided if r is not initially above 1, or if r decreases over time, for example, because research becomes bottlenecked by compute, or because algorithmic improvements become harder to find as low-hanging fruit is exhausted.

Initial returns to software R&D

The most immediate way to determine if returns to software R&D are greater than 1 would be observing shortening doubling times in AI R&D at major labs (i.e. accelerating algorithmic progress), but it would not be clear how much of this is because of increases in labor rather than (possibly accelerating) increases in experimental compute. This has stymied previous estimates of returns.
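The identification problem can be sketched as a regression. This assumes, purely for illustration, a Cobb-Douglas-style form log O = a + r·log L + b·log C (L is labor, C is experimental compute) and uses made-up, noise-free data in which compute grows roughly in step with labor. Regressing output on labor alone credits compute's contribution to labor, so the naive estimate can land above 1 even when the true r is below 1.

```python
import numpy as np


def naive_returns(log_labor, log_output):
    """Slope of log output on log labor alone, ignoring compute."""
    slope, _intercept = np.polyfit(log_labor, log_output, 1)
    return slope


def joint_returns(log_labor, log_compute, log_output):
    """Least-squares fit of log O = a + r*log L + b*log C; returns (r, b)."""
    X = np.column_stack([np.ones_like(log_labor), log_labor, log_compute])
    coef, *_ = np.linalg.lstsq(X, log_output, rcond=None)
    return coef[1], coef[2]


# Hypothetical data: labor doubles each period; log compute grows 1.5x as
# fast as log labor, plus a small independent wiggle that makes the two
# inputs separable.
t = np.arange(10.0)
log_labor = t * np.log(2)
log_compute = 1.5 * log_labor + 0.3 * np.sin(t)
true_r, true_b = 0.8, 0.5
log_output = true_r * log_labor + true_b * log_compute

print("naive r:", naive_returns(log_labor, log_output))
print("joint (r, b):", joint_returns(log_labor, log_compute, log_output))
```

If you drop the `0.3 * np.sin(t)` term, labor and compute become perfectly collinear, the design matrix is rank-deficient, and `np.linalg.lstsq` simply returns one of infinitely many equally good fits. That is the sense in which labor and compute cannot be told apart when both grow together.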

Evidence that returns to labor in AI R&D are greater than 1:

- Progress continues to accelerate after chip supplies near capacity constraints. This would convince me that a significant portion of continued progress is a result of labor rather than compute and would constitute strong evidence.

- Other studies show that labor inputs result in compounding gains. This would constitute strong evidence.

- Any high-quality randomized or pseudorandom trial on this subject.

- Work that effectively separates increased compute from increased labor input [1].

- Labs continue to make up for having less compute than their competitors with stronger talent (as Anthropic has in recent years). This would be medium-strength evidence.

- A weaker signal would be evidence of large uplifts from automated coders. Pure coding ability is not very indicative of future returns, however, because AIs’ research taste is likely to be the primary constraint after full automation.

- Internal evaluations at AI companies like Anthropic show exponentially increasing productivity.

- Y Combinator startups grow much faster than previously (and increasingly fast over time). This is likely to be confounded by other factors like overall economic growth.

Compute bottlenecks

The likelihood of a software-only takeoff depends heavily on how compute-intensive ML research is. If progress requires running expensive experiments, millions of automated researchers could still be bottlenecked. If not, they could advance very rapidly.
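One common way to formalize "bottlenecked" is a constant-elasticity-of-substitution (CES) production function over research labor and experiment compute. The functional form and the parameter values below are assumptions for illustration, not estimates. With rho < 0 the inputs are complements, so output approaches a ceiling of (1 - alpha)^(1/rho) · C as labor grows without bound; with 0 < rho < 1 they are substitutes and labor alone can keep raising output.

```python
def ces_output(labor: float, compute: float,
               alpha: float = 0.5, rho: float = -0.5) -> float:
    """CES combination of research labor and experiment compute.

    rho < 0: inputs are complements (compute can bottleneck labor).
    0 < rho < 1: inputs are substitutes. rho == 0 (Cobb-Douglas) is excluded.
    """
    if rho == 0 or rho >= 1:
        raise ValueError("this sketch needs rho < 1 and rho != 0")
    return (alpha * labor**rho + (1 - alpha) * compute**rho) ** (1 / rho)


def labor_ceiling(compute: float, alpha: float = 0.5, rho: float = -0.5) -> float:
    """Limit of ces_output as labor -> infinity, which is finite when rho < 0."""
    if rho >= 0:
        raise ValueError("no finite ceiling unless rho < 0")
    return (1 - alpha) ** (1 / rho) * compute


for labor in (1, 1e3, 1e6):
    print(labor, ces_output(labor, 1.0), ces_output(labor, 1.0, rho=0.5))
print("ceiling with fixed compute:", labor_ceiling(1.0))
```

Under these made-up parameters, a million-fold increase in labor with fixed compute raises output by less than 4x when rho = -0.5, but by over 100,000x when rho = 0.5. Which regime ML research is in is the question the evidence below would help answer.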

Here are some things that would update me towards thinking little compute is required for experiments:

- Individual compute-constrained actors continue to make large contributions to algorithmic progress[2]. This would constitute strong evidence. Examples include:

  - Academic institutions which can only use a few GPUs.

  - Chinese labs that are constrained by export restrictions (if export restrictions are reimposed and effective).

- Algorithmic insights can be cross-applied from smaller-scale experimentation. This would constitute strong evidence. For example:

  - Optimizers developed on small-scale projects generalize well to large-scale projects[3].

  - RL environments can be iterated with very little compute.

- Conceptual/mathematical work proves particularly useful for ML progress. This is weak evidence, as it would enable non-compute-intensive progress only if such work does not require large amounts of inference-time compute.
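Whether small-scale results transfer is exactly what is uncertain. This sketch, with made-up numbers, shows one way the transfer can fail: two hypothetical methods whose loss follows a power law in compute (an assumed form, loss = A · compute^(-alpha)), where the new method wins at every small scale tested but the fitted lines cross before reaching a large run.

```python
import numpy as np


def fit_power_law(compute, loss):
    """Fit loss = A * compute**(-alpha) by a straight line in log-log space."""
    slope, intercept = np.polyfit(np.log(compute), np.log(loss), 1)
    return np.exp(intercept), -slope


def predict_loss(A, alpha, compute):
    """Extrapolate a fitted power law to a new compute budget."""
    return A * np.asarray(compute, dtype=float) ** (-alpha)


# Hypothetical small-scale runs (compute in arbitrary units, 1 to 100).
small = np.logspace(0, 2, 5)
baseline_loss = 10.0 * small**-0.05  # made-up scaling for an existing method
new_loss = 8.0 * small**-0.03        # made-up scaling for a "new optimizer"

A_base, a_base = fit_power_law(small, baseline_loss)
A_new, a_new = fit_power_law(small, new_loss)

big = 1e6  # a run 10,000x larger than the biggest small-scale experiment
print("new method wins at small scale:", bool(np.all(new_loss < baseline_loss)))
print("new method wins when extrapolated:",
      bool(predict_loss(A_new, a_new, big) < predict_loss(A_base, a_base, big)))
```

Evidence that rankings like this usually hold up at scale would point towards cheap experimentation being enough; frequent crossovers would point the other way.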

Diminishing returns to software R&D

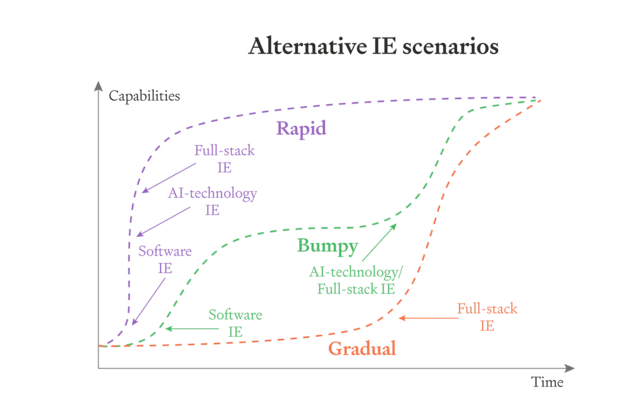

Even if returns on labor investment are compounding at the beginning of takeoff, research may run into diminishing returns before superintelligence is produced. This would produce a bumpy takeoff like the one illustrated above.
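A rough heuristic makes the bumpy shape concrete. Assume, for illustration only, that labor is proportional to the software level with fixed compute, so that each doubling of software takes 2^(1/r - 1) times as long as the previous one, and let r fall with each doubling as low-hanging fruit runs out. The declining schedule below is hypothetical.

```python
def doubling_times_varying_r(r_values, first=1.0):
    """Successive doubling durations when r changes from one doubling to the next.

    Heuristic: treat r as constant within each step, so the next doubling
    takes 2 ** (1/r - 1) times as long as the current one.
    """
    times = [first]
    for r in r_values:
        if r <= 0:
            raise ValueError("each r must be positive")
        times.append(times[-1] * 2 ** (1 / r - 1))
    return times


# Hypothetical schedule: r starts at 2 and falls by 0.25 per doubling.
r_schedule = [2.0 - 0.25 * k for k in range(7)]  # 2.0, 1.75, ..., 0.5
times = doubling_times_varying_r(r_schedule)
fastest = min(range(len(times)), key=times.__getitem__)
print([round(t, 2) for t in times], "fastest doubling index:", fastest)
```

Doubling times shrink while r > 1, bottom out around r = 1, and lengthen once r drops below 1: progress accelerates and then stalls, without reaching a singularity.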

The evidence I expect to collect before takeoff is relatively weak, because current progress rates don’t tell us much about the difficulty of discovering more advanced ideas we haven’t yet tried to find. That said, some evidence might be:

- Little slowdown in algorithmic progress in the next few years. Evidence would include:

  - Evidence that new ideas keep arriving at a constant rate, controlling for labor. Results from this type of analysis that don't indicate quickly diminishing returns would be one example.

  - Constant time between major architectural innovations (e.g. a breakthrough in 2027 of similar size to AlexNet, transformers, and GPT-3)[4].

- New things to optimize (like an additional component to training, e.g. RLVR).

- Advances in other fields like statistics, neuroscience, and math that can be transferred with some effort. For example:

  - Causal discovery algorithms that let models infer causal structure from observational data.

- We have evidence that much better algorithms exist and could be implemented in AIs. For example:

  - Neuroscientific evidence of the existence of much more efficient learning algorithms (which would require additional labor to identify).

  - Better understanding of how the brain assigns credit across long time horizons.

Conclusion

I expect to get some evidence of the likelihood of a software-only takeoff in the next year, and reasonably decisive evidence by 2030. Overall I think evidence of positive feedback in labor inputs to software R&D would move me the most, with evidence that compute is not a bottleneck a close second.

Publicly available evidence that would update us towards a software-only singularity might be particularly important because racing companies may not disclose progress. Existing transparency laws largely do not require companies to disclose this evidence, so it should be a subject of future legislation. Evidence about takeoff speeds would also help AI companies predict takeoff scenarios internally.

Thanks for feedback from other participants in the Redwood futurism writing program. All errors are my own.

[1] This paper makes substantial progress but does not fully correct for endogeneity, and its 90% confidence intervals straddle an r of 1, the threshold for compounding, in all domains except SAT solvers.

[2] It may be hard to know if labs have already made the same discoveries.

[3] See this post and comments for arguments about the plausibility of finding scalable innovations using small amounts of compute.

[4] This may only be clear in retrospect, since breakthroughs like transformers weren't immediately recognized as major.
