- 25 Dec, 2025 *

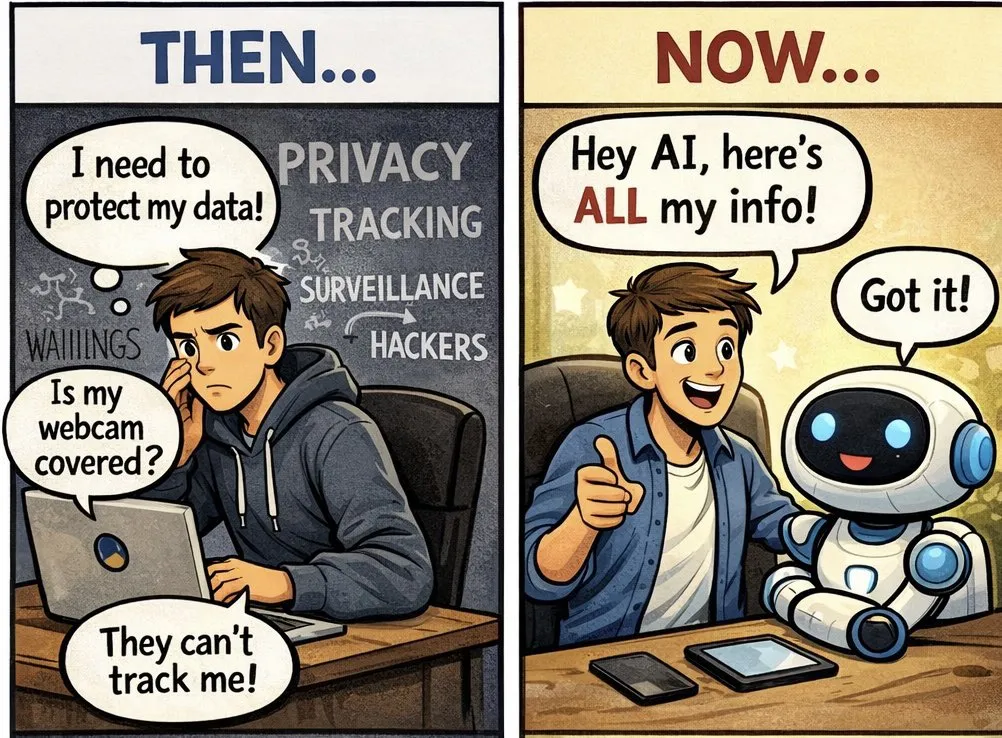

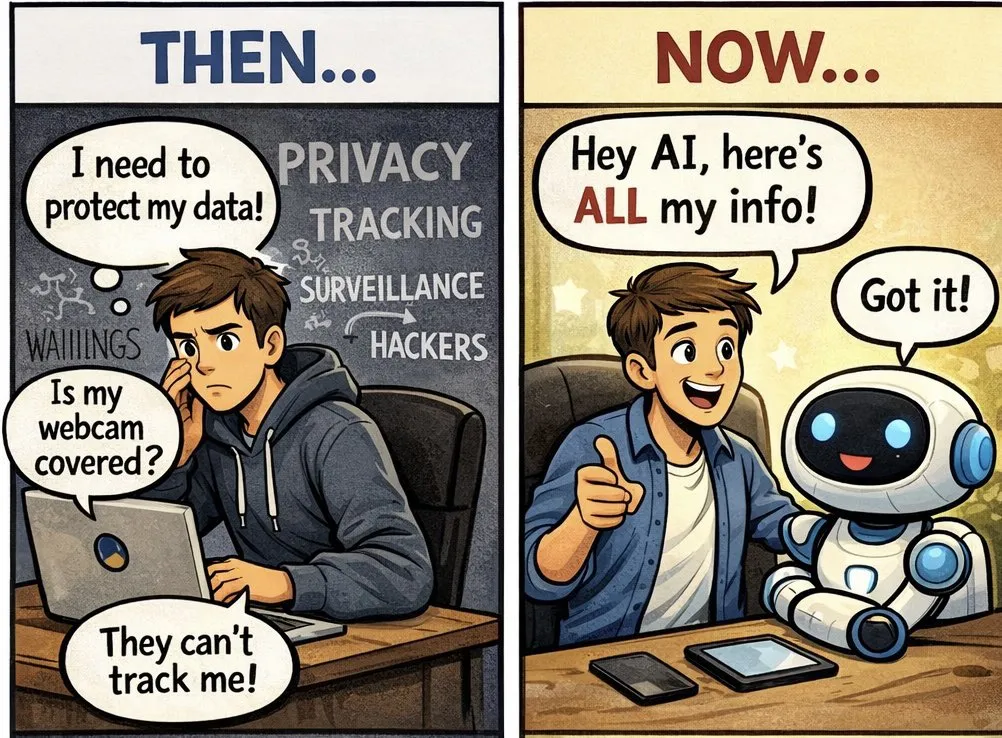

Hey there!! Let’s chat about something that’s been on my mind a lot lately. Remember the days when we were all up in arms about how much the internet and big tech companies knew about us?

Our entire lives seemed to be online, from search histories, to locations, to all our shopping habits. That was the time we couldn’t stop talking about all the scary data breaches, the creepy targeted ads, and the surveillance fears.

It felt invasive, right? But that was the past. Now that AI has stepped in and made everything so easy, we’ve handed over not just our actions, but our thoughts, our predictions, even glimpses of our future decisions.

Unfortunately, hardly anyone seems fazed. What has happened to us?

The Old Worries and the New Reality

In those days, we were all worried that our digital footprints were being sold off without a second thought. Social media tracking and the cookies following us everywhere were the talk of the town. We demanded better privacy controls, deleted accounts in protest, and cheered when scandals led to regulations.

Fast forward to now: AI is taking it even deeper, yet we have grown complacent. These models don’t just log what we do. They can infer sensitive details from casual conversations with alarming accuracy. Think of things like our income levels, our physical and mental health issues, even our personal identifiers. Studies suggest that nearly one in ten business prompts to generative AI tools inadvertently exposes sensitive data, from customer billing info to employee payroll details.[1][2]

Cloud-based systems often retain inputs indefinitely, especially when we allow the models to keep a memory of past chats. All of this creates a risk of leaks, misuse, or even training on your private chats without explicit consent.[3][4]

Yet, where is the outrage? Maybe it’s just the convenience that AI provides us, or that it feels like a helpful friend instead of a spy, or perhaps we’re all just exhausted from years of fighting the same battles.

Whatever the reason, we’ve grown complacent, even as the stakes feel higher.[5][6]

Why the Silence Feels So Strange

Honestly, it’s baffling to me. AI amplifies everything we have feared before, from vast data processing that lets it "understand" us on a profound level to the potential for 24/7 monitoring through our devices.

Privacy policies from major tech giants often lack transparency on retention or training uses. The long-term storage of our data raises red flags.[7]

We do benefit from the convenience for sure, but at what cost? We’re essentially journaling our innermost thoughts, health worries, financial plans, and personal dilemmas and handing it all over to large tech giants via AI, in the hope that it will all remain safe and never be used against us.

But what if a breach happens or the data gets repurposed? It’s like having a diary exposed that no one else was ever meant to read.

Turning to Local Solutions for Real Privacy

You must be wondering: that’s all understandable, but what can we really do about it?

You can definitely take back control by running AI locally on your own hardware. Local large language models (LLMs) process everything offline, so no data is sent to the cloud and there is no risk of it feeding into someone else’s training set.[8][9]

Tools like Ollama make it easier to download and run open-source models locally, including models from places like Hugging Face. What you get is full ownership of your prompts and data, nothing leaking to a third party, often lower latency, and peace of mind, especially for sensitive topics.[10]

But if it is this easy, why isn’t it common, you ask? Well, there is always a downside, isn’t there? The downside here is that it’s not always cheap to run LLMs locally. You’ll need hardware with a decent GPU, and that can cost a fair bit. Plus, there is the concern of ongoing power usage.
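If you’re curious what talking to a local model actually looks like, here’s a minimal sketch. It assumes Ollama is already installed and running on its default local port (11434) and that you’ve already pulled a model. The model name `llama3.2` and the helper names `build_request` and `ask_local` are placeholders I picked for illustration, so swap in whatever you actually use.

```python
import json
import urllib.request

# Ollama's default local endpoint (assumes a stock install; adjust if yours differs).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "llama3.2") -> urllib.request.Request:
    """Build a POST request for the local Ollama server.

    The model name is only an example; use any model you have pulled locally.
    """
    payload = {
        "model": model,
        "prompt": prompt,
        "stream": False,  # ask for one complete JSON reply instead of a token stream
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local(prompt: str, model: str = "llama3.2", timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text.

    The request only goes to localhost, so the prompt stays on this machine.
    """
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        return json.loads(resp.read())["response"]
```

With the Ollama server running, something like `print(ask_local("Summarize my notes in two sentences."))` should print the model’s answer. The point to notice is the URL: everything goes to `localhost`, not to someone else’s cloud.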

For privacy enthusiasts handling confidential info, it might be worth the investment.[11][12]

Can SLMs (Small Language Models) help?

Here’s where it gets exciting for those of us without unlimited budgets. Enter small language models (SLMs). These are the leaner versions, with millions or billions of parameters instead of the hundreds of billions or even trillions behind the largest models. They are built to run efficiently on everyday devices like laptops or even phones.[13][14]

These specialized models are faster and consume far fewer resources, all while delivering strong performance on common tasks.

They are also easy to deploy locally, which helps keep your data locked down, and by some estimates they approximate 85-90% of cloud quality for everyday prompts.[15][16]

They are definitely not as powerful for the most intricate reasoning, but for most of what we need? They’re surprisingly capable and way more accessible.
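To get a feel for why parameter count matters so much on a laptop, here’s a rough back-of-the-envelope sketch. It only estimates the memory needed to hold the model weights (parameters times bits per parameter) and ignores everything else a running model needs, so treat the numbers as a floor, not a promise. The 3B and 70B sizes and the 16-bit and 4-bit precisions are illustrative assumptions, not figures from any specific model.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for the model weights alone, in GB (10**9 bytes).

    Ignores runtime overhead such as the context cache, so real usage is higher.
    """
    return num_params * bits_per_param / 8 / 1e9


# Illustrative sizes only: a small model versus a large one.
for name, params in [("3B small model", 3e9), ("70B large model", 70e9)]:
    for bits in (16, 4):
        gb = weight_memory_gb(params, bits)
        print(f"{name} at {bits}-bit: ~{gb:.1f} GB")
```

By this estimate, a 3-billion-parameter model stored at 4 bits per weight needs about 1.5 GB for its weights, which an ordinary laptop can handle, while a 70-billion-parameter model at 16 bits needs about 140 GB, which it can’t.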

The Rise of Open-Source Local Agents

The best part is, we don’t have to rely on big companies anymore. Open-source communities are building incredible privacy-focused tools:

- LocalAI as an OpenAI alternative.[17]
- AnythingLLM for chatting with docs offline.[18]
- Frameworks for autonomous agents that run entirely on your machine.[19][20]

You can fine-tune models, chain agents, and integrate them with your OS, all without a byte leaving your setup.

It’s empowering, really. These open-source ecosystems let us create custom, secure AI that truly serves us and not some distant corporation.
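Since LocalAI is pitched as an OpenAI alternative, the practical upshot is that code written for an OpenAI-style chat API can be pointed at your own machine instead. Here’s a hedged sketch of that idea. I’m assuming a LocalAI server listening on `http://localhost:8080` with an OpenAI-compatible `/v1/chat/completions` route, and `my-local-model` is a made-up model name, so check your own setup for the real values.

```python
import json
import urllib.request

# Assumed address of a local OpenAI-compatible server; change to match your setup.
BASE_URL = "http://localhost:8080/v1"


def chat_request(user_message: str, model: str = "my-local-model") -> urllib.request.Request:
    """Build an OpenAI-style chat completion request aimed at the local server.

    The model name is hypothetical; use one that your server actually hosts.
    """
    body = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_json: dict) -> str:
    """Pull the assistant's text out of an OpenAI-style chat response."""
    return response_json["choices"][0]["message"]["content"]


def chat(user_message: str, model: str = "my-local-model", timeout: float = 120.0) -> str:
    """Send one message to the local server and return the assistant's reply."""
    with urllib.request.urlopen(chat_request(user_message, model), timeout=timeout) as resp:
        return extract_reply(json.loads(resp.read()))
```

The nice thing about this design is that the only thing tying the code to a particular provider is `BASE_URL`. Keep it on `localhost` and the conversation never leaves your machine.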

Summarizing My Thoughts

From freaking out over online tracking to casually feeding our deepest thoughts to AI, we have come a long way in complacency. There are options like local LLMs, SLMs, and open-source agents that can bring back the privacy we all crave while keeping us on top of the AI game.

These options are not perfect, as there are hardware hurdles and occasional trade-offs in power, but for anyone who values privacy, they can be a breath of fresh air.

So, how about you? Have you tried going local yet or is the ease of cloud AI still too tempting? I’d love to hear your take on it. Let’s keep this conversation going.

1. Harmonic Security Study (2025): https://siliconangle.com/2025/01/16/study-finds-nearly-one-ten-generative-ai-prompts-business-disclose-potentially-sensitive-data/
2. Cybernews report on sensitive data in AI prompts: https://cybernews.com/security/ai-prompts-risk-sensitive-data/
3. Stanford study on AI chatbot privacy risks and data retention: https://news.stanford.edu/stories/2025/10/ai-chatbot-privacy-concerns-risks-research
4. Iron Mountain on retention and privacy in AI: https://resources.ironmountain.com/whitepapers/r/retention-privacy-and-security-keys-to-ai-success
5. IAPP Privacy and Consumer Trust Report on AI threats to privacy: https://iapp.org/resources/article/privacy-and-consumer-trust-summary
6. AI data privacy statistics (various sources, including Stanford AI Index 2025): https://www.kiteworks.com/cybersecurity-risk-management/ai-data-privacy-risks-stanford-index-report-2025/
7. Stanford Report on chatbot privacy policies: https://hai.stanford.edu/news/be-careful-what-you-tell-your-ai-chatbot
8. DataNorth on local LLMs for privacy: https://datanorth.ai/blog/local-llms-privacy-security-and-control
9. GodofPrompt on local LLM setups for businesses: https://medium.com/@lawrenceteixeira/revolutionizing-corporate-ai-with-ollama-how-local-llms-boost-privacy-efficiency-and-cost-52757390bf26
10. AI-for-Devs on switching to local LLMs (Ollama/HF): https://huggingface.co/blog/lynn-mikami/how-to-use-ollama
11. GovTech on local LLMs in education (privacy benefits): https://www.govtech.com/education/k-12/cite25-local-llms-confer-advantages-in-data-security-support
12. Skyflow on private LLMs limitations: https://www.skyflow.com/post/private-llms-data-protection-potential-and-limitations
13. DataCamp guide to SLMs vs LLMs: https://www.datacamp.com/blog/slms-vs-llms
14. Sendbird on small language models: https://sendbird.com/blog/small-language-models
15. Medium article on SLMs surpassing LLMs in scenarios: https://medium.com/@armankamran/slms-small-language-models-and-the-7-key-scenarios-where-they-surpass-llms-b548d73de85e
16. Microsoft Azure on what are small language models: https://azure.microsoft.com/en-us/resources/cloud-computing-dictionary/what-are-small-language-models
17. LocalAI official site: https://localai.io/
18. AnythingLLM features: https://anythingllm.com/
19. freeCodeCamp tutorial on local AI agents: https://www.freecodecamp.org/news/build-a-local-ai/
20. Medium guide to building local AI agents: https://medium.com/beyond-the-buzz-highlighting-the-impact-of-ai-in/the-lazy-devs-guide-to-building-a-local-ai-agent-that-actually-works-30e7f3aee140