Conference on Robot Learning 2022

Our approach, Semantic Abstraction, unlocks 2D VLM’s capabilities to 3D scene understanding. Trained with a limited synthetic dataset, our model generalizes to unseen classes in a novel domain (i.e., real world), even for small objects like “rubiks cube”, long-tail concepts like “harry potter”, and hidden objects like the “used N95s in the garbage bin”. Unseen classes are bolded.

Paper & Code

Latest Paper Version: ArXiv, Github

Abstraction via Relevancy

Our key observation is that 2D VLMs’ relevancy activations roughly corresponds to their confidence of whether and where an object is in the sce…

Conference on Robot Learning 2022

Our approach, Semantic Abstraction, unlocks 2D VLM’s capabilities to 3D scene understanding. Trained with a limited synthetic dataset, our model generalizes to unseen classes in a novel domain (i.e., real world), even for small objects like “rubiks cube”, long-tail concepts like “harry potter”, and hidden objects like the “used N95s in the garbage bin”. Unseen classes are bolded.

Paper & Code

Latest Paper Version: ArXiv, Github

Abstraction via Relevancy

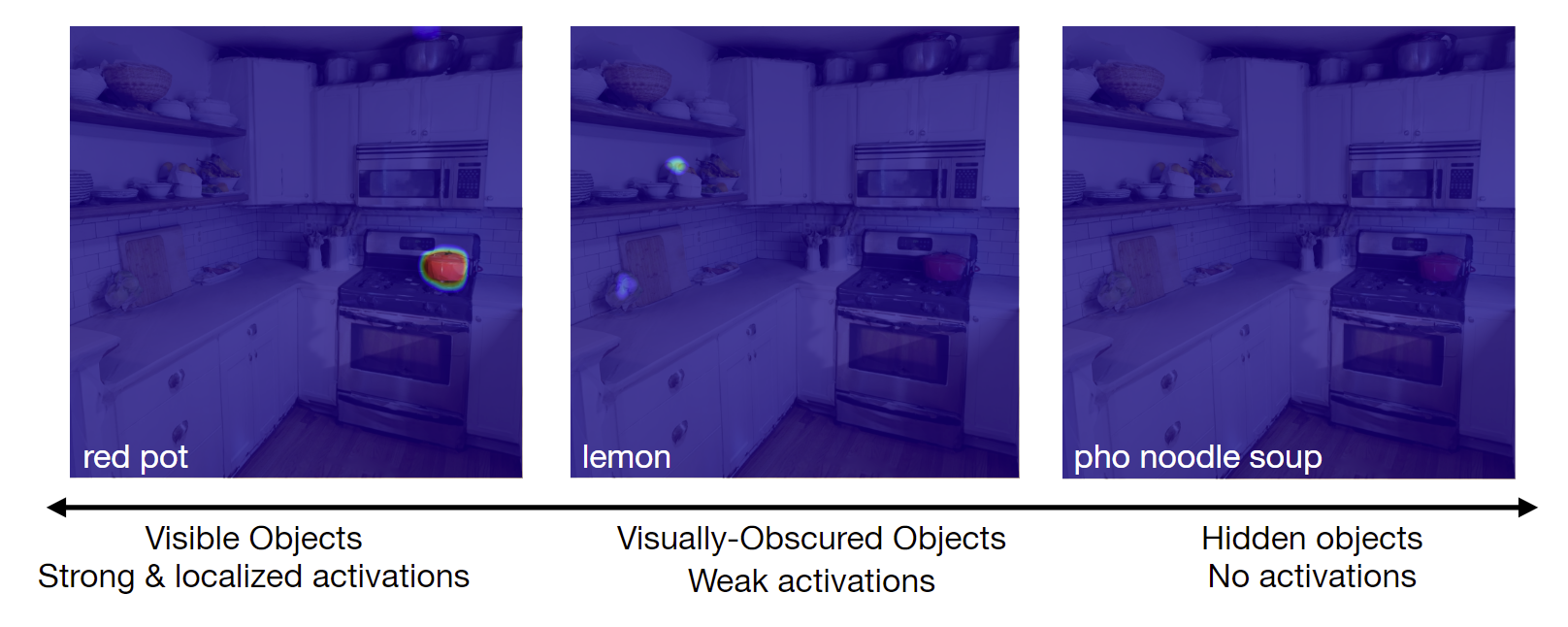

Our key observation is that 2D VLMs’ relevancy activations roughly corresponds to their confidence of whether and where an object is in the scene. Based on this observation, we treat relevancy maps as "abstract object" representations, and propose a framework for learning 3D localization and completion on top of them.

By learning 3D spatial and geometric reasoning skills in a semantic-agnostic manner, our approach generalizes to any novel semantic labels, vocabulary, visual properties, and domains that the 2D VLM can generalize to.

We apply our approach to two open-world 3D scene understanding tasks: 1) completing partially observed objects and 2) localizing hidden objects from language descriptions localizing .

Open Vocabulary Semantic Scene Completion

The goal of this task is to complete the 3D geometry of all partially observed objects, where object labels can change from scene to scene and from training to testing. The key challenge of this task is in dealing with the open-vocabulary of object labels.

Here, we show how our approach flexibly completes the scene with semantically similar labels, regardless of whether it has seen them in training. Novel labels (unseen during training) are shown in bold.

Visually Obscured Object Localization

Even after 3D completion of partially visible objects, there may be visually-obscured or hidden objects which aren’t in the completion. In such cases, the human user can provide additional hints using natural language to help localize the object.

Matterport Qualitative Results

Despite being trained on only a limited synthetic dataset, our approach generalizes to real world scans from HM3D. Novel labels (unseen during training) are shown in bold.

ARKitScenes Qualitative Results

We also show qualitative results for ARKitScenes, which has imperfect depths. Novel labels (unseen during training) are shown in bold.

Team

Columbia University in the City of New York

Citation

To cite this work, please use the following BibTex entry,

@inproceedings{ha2022semabs,

title={Semantic Abstraction: Open-World 3{D} Scene Understanding from 2{D} Vision-Language Models},

author = {Ha, Huy and Song, Shuran},

booktitle={Proceedings of the 2022 Conference on Robot Learning},

year={2022}

}

If you have any questions, please feel free to contact Huy Ha.