For researchers, analysts, and security professionals alike, the ability to quickly and accurately retrieve relevant information is critical. Yet, as our information landscape grows, so do the challenges of traditional search methods.

The Cisco Foundation AI team introduces a novel approach to information retrieval designed to tackle the shortcomings of current search.

The Challenge with Current Search

Often, when we search for information, especially for complex topics, our initial queries might not hit the mark. Traditional search engines, while powerful, typically operate on a “one-shot” principle: you ask a question, and it gives you results. If those results aren’t quite right, it’s up to you to reformulate your query and try again. This process can be inefficient and frustrating, particularly when dealing with nuanced or multi-faceted information needs.

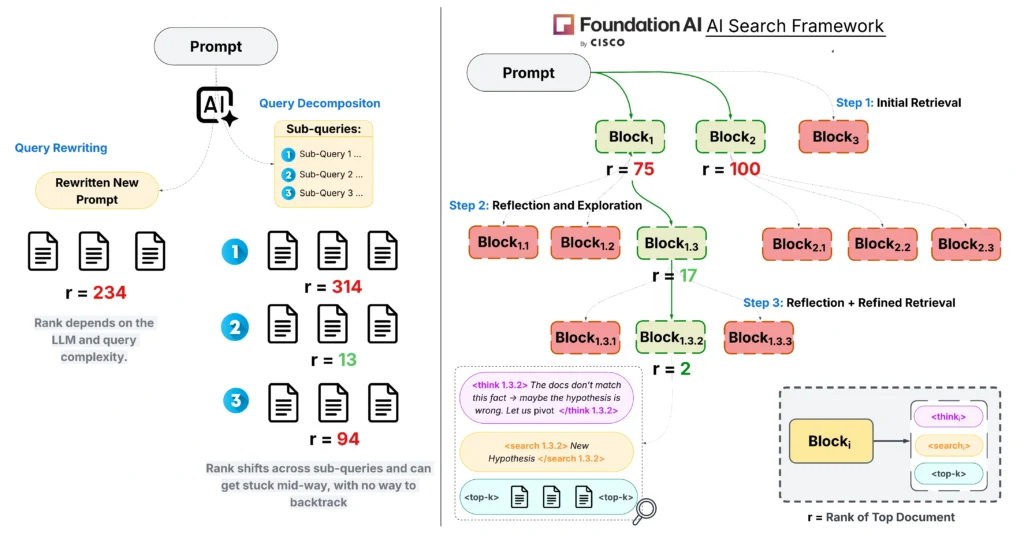

LLMs offer semantic understanding, but they can be computationally expensive and are not always suited to the iterative, exploratory nature of complex searches. Existing methods for query rewriting or decomposition often commit to a search plan too early, causing the retrieval process to become trapped in an incorrect search space and miss relevant information.

Foundation AI’s Adaptive Approach

The Foundation AI approach to search addresses these limitations by making the retrieval process itself adaptive and intelligent. Instead of a static, one-and-done query, the framework enables models to learn how to search iteratively, much like a human investigator would. It does this in four stages: synthetic trajectory generation to create diverse search behaviors, supervised fine-tuning to establish the scaffolding for multi-turn search, reinforcement learning (GRPO) to refine search behavior, and finally inference-time beam search to exploit the learned self-reflection capabilities.
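To make the reinforcement learning stage slightly more concrete: GRPO (Group Relative Policy Optimization) estimates the advantage of each sampled output by normalizing its reward against the other outputs sampled for the same prompt, instead of relying on a learned value function. The sketch below shows only that normalization step. The function name, the reward values, and the choice of population standard deviation are illustrative assumptions, not details of the Foundation AI training setup.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize rewards within one group of trajectories sampled for the same query."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # assumption: population std; implementations differ
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical rewards (for example, a retrieval score of the final ranking)
# for four search trajectories sampled for one query.
rewards = [0.2, 0.8, 0.5, 0.5]
advantages = group_relative_advantages(rewards)
```

Trajectories that score above the group mean receive a positive advantage and are reinforced; those below the mean are discouraged. If every trajectory in a group earns the same reward, all advantages are zero and the group contributes no learning signal.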

At its core, our framework empowers compact models (350M to 1.2B parameters) to:

- Learn diverse search strategies: By observing and learning from a variety of search behaviors, the framework’s models learn how to approach different types of queries.

- Refine queries based on feedback: The system learns to adjust its search queries dynamically, incorporating insights from previously retrieved documents.

- Strategically backtrack: A critical capability is knowing when to abandon an unfruitful path and explore alternative search directions, preventing the “revolving loops” seen in less adaptive systems.
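The refine-and-backtrack behavior described in the list above can be sketched as a small search loop. This is a toy illustration under stated assumptions, not the framework itself: `retrieve` is a word-overlap retriever, `propose` is a hypothetical stand-in for the trained model's query refinement (a real model would condition on the retrieved documents), and the two-document corpus is made up.

```python
def retrieve(query, corpus, k=2):
    """Toy retriever: rank documents by word overlap with the query."""
    terms = set(query.lower().split())
    scored = [(len(terms & set(doc.lower().split())), doc) for doc in corpus]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:k] if score > 0]


def adaptive_search(query, corpus, propose, is_relevant, max_turns=6):
    """Multi-turn search that refines on feedback and backtracks on dead ends."""
    frontier = [query]  # untried queries; the most recent refinement is on top
    tried = set()       # guards against looping over the same query
    evidence, trace = [], []
    while frontier and len(trace) < max_turns:
        current = frontier.pop()
        if current in tried:
            continue
        tried.add(current)
        docs = retrieve(current, corpus)
        hits = [d for d in docs if is_relevant(d)]
        evidence.extend(d for d in hits if d not in evidence)
        trace.append((current, len(hits)))
        if hits:
            break  # stop once relevant evidence is found
        # No hit: push refinements. If there are none, the next pop
        # backtracks to an older alternative still on the frontier.
        frontier.extend(q for q in propose(current, docs) if q not in tried)
    return evidence, trace


# Made-up corpus and refinement table, for illustration only.
corpus = [
    "the valley floor is used for farming",
    "the mountain range has a peak above 4000 metres",
]
refinements = {"valley floor height": ["mountain peak height", "valley depth"]}


def propose(query, docs):
    """Hypothetical refinement policy; ignores docs to keep the sketch short."""
    return refinements.get(query, [])


evidence, trace = adaptive_search(
    "valley floor height", corpus, propose,
    is_relevant=lambda doc: "4000" in doc,
)
```

In this run the loop first tries the original query, then the refinement "valley depth", which also retrieves nothing relevant and has no further refinements. It then backtracks to the remaining alternative, "mountain peak height", which retrieves the relevant document. The `tried` set is what prevents the loop from revisiting a query it has already issued.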

Together, these abilities allow our search framework to conduct a multi-turn “conversation” with the information it retrieves, reflect on intermediate results, and adapt its strategy to zero in on the most relevant evidence. The figure below compares two of the existing approaches discussed above with the Foundation AI framework.

Figure 1: Overview of framework

We illustrate two established query reformulation baselines alongside our proposed framework on an example from the FEVER dataset. Query decomposition fails without corpus feedback, and query rewriting yields static reformulations that ignore retrieval results. The Foundation AI framework instead performs tree-based exploration with structured reasoning spans: it revises its strategy as it incorporates contradictory evidence and shifts from valley- to mountain-focused queries, effectively backtracking, refining, and exploring to recover relevant evidence.

Results

We evaluated our approach across two challenging benchmark suites that test both retrieval precision and reasoning depth: the BEIR benchmark for classic and multi-hop information retrieval, and the BRIGHT benchmark for reasoning-intensive search spanning scientific, technical, and analytical domains.

Despite being up to 400× smaller than the large language models they were compared against, our compact custom models consistently performed on par with or better than them:

- On BEIR datasets such as SciFact, FEVER, HotpotQA, and NFCorpus, the Foundation AI large (1.2B) model achieved 77.6% nDCG@10 on SciFact and 63.2% nDCG@10 on NFCorpus, surpassing prior retrievers and approaching GPT-4-class performance, while maintaining strong scores on FEVER (65.3%) and HotpotQA (71.6%).

- On BRIGHT, we achieved a macro-average nDCG@10 of 25.2%, outperforming large proprietary models like GPT-4.1 (22.1%) across 12 diverse domains, from economics and psychology to robotics and mathematics.

These results demonstrate that learned adaptive search strategies, not just model scale, drive retrieval performance.
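For readers less familiar with the metric reported above, nDCG@10 measures how well the top ten results are ordered relative to an ideal ranking, and a macro-average weights every domain equally. The sketch below uses the linear-gain formulation (some toolkits use an exponential gain instead). The relevance labels, domain names, and scores in the example are made up for illustration and are not benchmark results.

```python
import math


def dcg_at_k(relevances, k=10):
    """Discounted cumulative gain over the top-k relevance labels."""
    return sum(rel / math.log2(rank + 2) for rank, rel in enumerate(relevances[:k]))


def ndcg_at_k(ranked_relevances, all_relevances, k=10):
    """DCG of the system ranking divided by the DCG of the ideal ranking."""
    ideal = dcg_at_k(sorted(all_relevances, reverse=True), k)
    return dcg_at_k(ranked_relevances, k) / ideal if ideal > 0 else 0.0


def macro_average(scores_by_domain):
    """Unweighted mean across domains, regardless of domain size."""
    return sum(scores_by_domain.values()) / len(scores_by_domain)


# Made-up example: the two relevant documents were ranked 2nd and 3rd.
score = ndcg_at_k(ranked_relevances=[0, 1, 1], all_relevances=[1, 1])

# Made-up per-domain scores, averaged with equal weight per domain.
overall = macro_average({"domain_a": 0.2, "domain_b": 0.4})
```

Because the discount grows with rank, placing a relevant document first is worth more than placing it third, which is why an adaptive search that surfaces the right evidence early scores higher on this metric.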

Real-world Application: Security Search

The implications of such an adaptive retrieval system reach across domains, especially in security:

- Enhanced Threat Intelligence Analysis: Security analysts are constantly sifting through massive volumes of threat reports, vulnerability databases, and incident data. The framework’s ability to handle complex, evolving queries and backtrack from dead ends means it can more effectively uncover subtle connections between disparate pieces of intelligence, identifying emerging threats or attack patterns that a static search might miss.

- Faster Incident Response: When a security incident takes place, responders need to quickly locate relevant logs, network traffic data, and security policies. The framework can accelerate this by adaptively searching through diverse data sources, refining queries as new evidence emerges from the incident, and helping to pinpoint the root cause or affected systems faster.

- Proactive Vulnerability Research: Security researchers can use the framework to explore code repositories, technical forums, and security advisories to identify potential vulnerabilities in systems. Its adaptive nature allows it to follow complex chains of dependencies or exploit techniques, leading to more comprehensive vulnerability discovery.

The Future of Search is Adaptive

Our research shows that retrieval intelligence is not a function of scale but of strategy. By combining synthetic data, reinforcement learning, and intelligent search algorithms, compact models can achieve powerful adaptive capabilities. This means more efficient, cost-effective, and robust information retrieval systems that can truly understand and adapt to the complexities of human information needs.

If you’re interested in learning more, you can read the full research paper on arXiv.

Learn more about the research we do and sign up for updates at the Cisco Foundation AI team website.
