October 6, 2025

Like everyone else who knows what a “row group” is, I spent some of last week looking into F3, the new columnar data format out of CMU.

To take a step back, there’s been a lot of buzz over the last several years about making databases out of composable parts, and a lot of debate about how those parts should be built. Chris Riccomini talks about this in “Databases are Falling Apart.” The traditional rule of thumb has been that it takes about 10x the work to make a reusable component as it does to make something bespoke for your use case. Until the last couple of years, that meant the right solution was generally to just build the bespoke thing.

That has changed in the last couple of years: we’ve seen an increase in the number of modular database components, maybe because people realized that in a lot of cases it was actually worth spending the 10x.

So far, the winner in the “disk-based, columnar storage format” category has been Parquet. What’s less obvious is that this particular component has different concerns than other “database-component-as-a-library” tools. If a database embeds a query execution engine as a library, that engine can more or less live encapsulated inside the database. A database that uses a common format for its on-disk representation is different, particularly in an analytics world, because that opens up the possibility of sharing data files between different pieces of software.

This has been one of the reasons Parquet has been adopted so aggressively: there are a lot of benefits if all of your services speak the same disk format. Not only can you, say, share your data with your data science team more easily, but you can also use off-the-shelf tools for any new kind of use case you have. You don’t have to build a bunch of bespoke command-line tools for analyzing your data.

The power of interoperability comes with a cost, though: if every other service at your organization is expected to understand the files you produce, you’re bound by the Robustness principle (“be conservative in what you send”) to be as conservative as possible about how sophisticated those files are. If even one of your potential consumers is on version 31, it would be irresponsible to produce files that require version 32, even if doing so would bring valuable benefits to the majority of consumers.
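To make the “oldest consumer sets the ceiling” reasoning concrete, here is a minimal sketch. The feature names and version numbers are hypothetical, invented for illustration; they are not real Parquet or F3 features.

```python
# Hypothetical sketch: a producer decides which format features it may write
# based on the oldest reader it has to support. The feature names and
# version numbers are made up; they do not come from Parquet or F3.
FEATURE_MIN_READER_VERSION = {
    "plain_encoding": 1,
    "dictionary_encoding": 10,
    "shiny_new_encoding": 32,  # the "requires version 32" case from the text
}


def usable_features(consumer_versions: list[int]) -> set[str]:
    """Return the features that every known consumer can read.

    The oldest consumer sets the ceiling: a feature is safe to write only if
    its minimum reader version is <= that consumer's version.
    """
    if not consumer_versions:
        raise ValueError("need at least one consumer version")
    oldest = min(consumer_versions)
    return {
        feature
        for feature, min_version in FEATURE_MIN_READER_VERSION.items()
        if min_version <= oldest
    }


if __name__ == "__main__":
    # One straggler on version 31 keeps everyone off the version-32 feature.
    print(sorted(usable_features([35, 33, 31])))
    # prints ['dictionary_encoding', 'plain_encoding']
```

Note that the answer depends only on the minimum version, which is exactly why a single lagging consumer holds back every producer.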

This is the problem that the most interesting feature of F3 sets out to solve:

> In this paper, we present the Future-proof File Format (F3) project. It is a next-generation open-source file format with interoperability, extensibility, and efficiency as its core design principles. F3 obviates the need to create a new format every time a shift occurs in data processing and computing by providing a data organization structure and a general-purpose API to allow developers to add new encoding schemes easily. Each self-describing F3 file includes both the data and meta-data, as well as WebAssembly (Wasm) binaries to decode the data. Embedding the decoders in each file requires minimal storage (kilobytes) and ensures compatibility on any platform in case native decoders are unavailable. To evaluate F3, we compared it against legacy and state-of-the-art open-source file formats. Our evaluations demonstrate the efficacy of F3’s storage layout and the benefits of Wasm-driven decoding.
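The abstract mentions “a general-purpose API to allow developers to add new encoding schemes.” As a rough illustration of what a pluggable-encoding interface could look like, here is a sketch with a toy run-length encoding and a registry keyed by an encoding ID. Every name here (`Encoding`, `RunLengthEncoding`, `REGISTRY`, `"rle-v1"`) is hypothetical; this is not F3’s actual API.

```python
# Hypothetical sketch of a pluggable-encoding interface, loosely in the spirit
# of the abstract's "general-purpose API to allow developers to add new
# encoding schemes". Every name here is illustrative, NOT F3's actual API.
import struct
from typing import Protocol


class Encoding(Protocol):
    encoding_id: str

    def encode(self, values: list[int]) -> bytes: ...

    def decode(self, data: bytes) -> list[int]: ...


class RunLengthEncoding:
    """Toy run-length encoding: each run is a (value, length) pair of
    little-endian signed 64-bit integers."""

    encoding_id = "rle-v1"
    _RUN = struct.Struct("<qq")

    def encode(self, values: list[int]) -> bytes:
        out = bytearray()
        i = 0
        while i < len(values):
            j = i
            while j < len(values) and values[j] == values[i]:
                j += 1  # extend the current run
            out += self._RUN.pack(values[i], j - i)
            i = j
        return bytes(out)

    def decode(self, data: bytes) -> list[int]:
        values: list[int] = []
        for value, length in self._RUN.iter_unpack(data):
            values.extend([value] * length)
        return values


# Readers look encodings up by the ID recorded in the file's metadata.
REGISTRY: dict[str, Encoding] = {}


def register(encoding: Encoding) -> None:
    REGISTRY[encoding.encoding_id] = encoding


register(RunLengthEncoding())

if __name__ == "__main__":
    blob = REGISTRY["rle-v1"].encode([7, 7, 7, 1, 1])
    print(len(blob))                       # 32: two runs x 16 bytes
    print(REGISTRY["rle-v1"].decode(blob))  # [7, 7, 7, 1, 1]
```

The design point is that adding a new encoding means adding a new class and registering it under a new ID; readers that know the ID can decode, and readers that don’t need some fallback.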

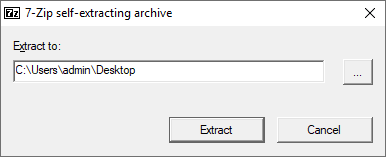

That’s right baby—we’re bringing back 7zip:

I’m sure the authors of this paper would say that their design brings many additional benefits over Parquet, many extra ideas that make it a better choice for modern data systems, but everyone I’ve talked to has gotten hung up on the “we embed a Wasm decoder in the data files themselves” thing, so I think it’s fair to say that’s the part that has captured the most imaginations.

Now, one thing that should be made clear is that the data is also decodable without the Wasm: a reader with the right native decoder never needs to look at it. The Wasm is only there to solve the problem of “someone is trying to consume your file but doesn’t have the correct native decoder.” When I first heard the idea, I thought it was about specializing encodings to the particular data file, or something like that, but that’s not the case: it’s about forward compatibility (as the name of the format would suggest).
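The “native first, embedded decoder as a fallback” behavior can be sketched as a small dispatch function. This is a minimal sketch under stated assumptions: real F3 embeds Wasm modules, whereas here a plain Python callable stands in for the embedded Wasm so the example runs without a Wasm runtime, and `ColumnChunk`, `NATIVE_DECODERS`, and `"plain-u8"` are hypothetical names.

```python
# Hypothetical sketch of the reader-side dispatch: prefer a native decoder when
# one is installed, otherwise fall back to the decoder shipped in the file.
# Real F3 embeds Wasm modules; here a plain Python callable stands in for the
# embedded Wasm so the example runs without a Wasm runtime. Names are made up.
from dataclasses import dataclass
from typing import Callable

Decoder = Callable[[bytes], list[int]]


@dataclass
class ColumnChunk:
    encoding_id: str           # recorded in the file's metadata
    payload: bytes             # encoded column data
    embedded_decoder: Decoder  # stand-in for the Wasm binary in the file


# Decoders this reader was built with, keyed by encoding ID.
NATIVE_DECODERS: dict[str, Decoder] = {}


def decode_column(chunk: ColumnChunk) -> tuple[list[int], str]:
    """Return (values, path), where path is "native" or "embedded"."""
    native = NATIVE_DECODERS.get(chunk.encoding_id)
    if native is not None:
        return native(chunk.payload), "native"
    # Forward-compatibility path: this reader predates the encoding, so use
    # the decoder that travels with the data (slower in real F3, but it works).
    return chunk.embedded_decoder(chunk.payload), "embedded"


def plain_u8(data: bytes) -> list[int]:
    """Toy encoding: one unsigned byte per value."""
    return list(data)


if __name__ == "__main__":
    chunk = ColumnChunk("plain-u8", bytes([3, 1, 4]), embedded_decoder=plain_u8)
    print(decode_column(chunk))  # ([3, 1, 4], 'embedded')
    NATIVE_DECODERS["plain-u8"] = plain_u8
    print(decode_column(chunk))  # ([3, 1, 4], 'native')
```

Either path yields the same values; the only thing that changes when a native decoder is installed is which code does the work.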

There are a couple of obvious objections to this idea that I think are worth going into a bit more, but if you’ve worked with Parquet in a large organization, I’d be interested to hear your thoughts on the whole deal.

Embedded decoders turn an error into slowness

F3 claims:

> The decoding performance slowdown of Wasm is minimal (10–30%) compared to a native implementation.
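To put that range in concrete terms: if a scan takes $T_{\text{native}}$ with a native decoder and $s$ is the fractional slowdown, the quoted figure puts $s$ between 0.10 and 0.30. The 60-second scan below is a made-up example, not a number from the paper.

$$
T_{\text{Wasm}} = (1 + s)\,T_{\text{native}}, \qquad 0.10 \le s \le 0.30
$$

$$
T_{\text{native}} = 60\ \text{s} \;\Rightarrow\; 66\ \text{s} \le T_{\text{Wasm}} \le 78\ \text{s}
$$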

I think there are two major schools of thought on how computer systems should handle this kind of problem, and I myself have bounced between them at times. One school says “I want to be alerted as soon as something breaks,” and the other says “systems should degrade gracefully,” where “gracefully” can mean basically whatever you want it to. Here, it means things getting slower when one of your upstream data producers starts using a new feature you don’t support and you start falling back to the Wasm decoder.

Again, I waffled a bit on this one, but I think it’s basically fine. If this slowdown is indeed a problem for the consumer of the data, there’s an easy solution: install the correct native decoder, and everything gets better. Organizationally, it can be a way to put pressure on a downstream system without actually breaking your contract with it. I’m reminded of the anecdote about a team at Google that wanted to deprecate a method and added a sleep call whose duration they doubled every week until every consumer of the library had stopped using it. It’s safer than just breaking things (though, you know, not as safe as never changing anything; it’s a tradeoff).

I think in practice most teams using a system like this would have an alert fire when they fall back to Wasm decoding, and would make resolving those situations some kind of priority.
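One way such an alert could be wired up is to count fallbacks per encoding, warn the first time each one happens, and page once the count crosses a threshold. This is a minimal sketch: the logger name, the threshold of 100, and the function names are all assumptions, and a real deployment would more likely export a metric to its monitoring system and alert on that.

```python
# Hypothetical sketch: count fallbacks to the embedded (Wasm) decoder and log a
# warning the first time each encoding falls back. The logger name, threshold,
# and function names are assumptions; a real deployment would more likely
# export a metric to its monitoring system and alert on that.
import logging
from collections import Counter

logger = logging.getLogger("f3_reader")
fallback_counts: Counter = Counter()


def record_decode(encoding_id: str, used_fallback: bool) -> None:
    """Call once per decoded column chunk."""
    if not used_fallback:
        return
    fallback_counts[encoding_id] += 1
    if fallback_counts[encoding_id] == 1:
        # Warn once per encoding rather than once per column chunk.
        logger.warning(
            "falling back to embedded decoder for encoding %r; "
            "consider installing a native decoder",
            encoding_id,
        )


def encodings_to_page(threshold: int = 100) -> list[str]:
    """Encodings whose fallback count has reached the paging threshold."""
    return sorted(e for e, n in fallback_counts.items() if n >= threshold)


if __name__ == "__main__":
    logging.basicConfig(level=logging.WARNING)
    for _ in range(150):
        record_decode("shiny-v2", used_fallback=True)
    record_decode("plain-u8", used_fallback=False)
    print(dict(fallback_counts))  # {'shiny-v2': 150}
    print(encodings_to_page())    # ['shiny-v2']
```

This keeps the “degrade gracefully” behavior for the data path while still giving the “alert me when something breaks” school a signal to act on.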

Is what you’re decoding into future-proof as well?

F3 decodes into Arrow. This is fine. There’s far less variation in encoding strategies for in-memory data than for on-disk data. There is some, but for the most part in-memory data is just contiguous arrays. I admittedly don’t know a whole lot about this area, though. The main case I can think of is that compressing in-memory data is a somewhat common technique for turning a memory-bound problem into a CPU-bound one, and I don’t know how Arrow enables this (or whether it does).
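One common flavor of that in-memory compression is dictionary encoding: store each distinct value once and replace the column with small integer codes, trading a lookup per access for far fewer bytes moved. For what it’s worth, the Arrow columnar format does define a dictionary-encoded layout. The sketch below is plain Python using the standard-library `array` module, not Arrow’s API, and the 8-bit code width is an assumption that caps it at 256 distinct values.

```python
# Minimal dictionary-encoding sketch in plain Python (NOT the Arrow API).
# Assumption: codes are unsigned 8-bit, so at most 256 distinct values.
from array import array


def dictionary_encode(values: list[str]) -> tuple[list[str], array]:
    """Return (dictionary, codes): each distinct value stored once, plus
    one small integer code per row pointing into the dictionary."""
    index: dict[str, int] = {}
    dictionary: list[str] = []
    codes = array("B")  # one unsigned byte per row
    for v in values:
        code = index.get(v)
        if code is None:
            code = len(dictionary)
            if code > 255:
                raise ValueError("more than 256 distinct values; use a wider code type")
            index[v] = code
            dictionary.append(v)
        codes.append(code)
    return dictionary, codes


def dictionary_decode(dictionary: list[str], codes: array) -> list[str]:
    """Expand codes back into the original values."""
    return [dictionary[c] for c in codes]


if __name__ == "__main__":
    col = ["us-east", "us-east", "eu-west", "us-east", "eu-west"]
    d, codes = dictionary_encode(col)
    print(d)            # ['us-east', 'eu-west']
    print(list(codes))  # [0, 0, 1, 0, 1]
    print(dictionary_decode(d, codes) == col)  # True
```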

There’s actually a lot more interesting material in the F3 paper than just this, and maybe we’ll talk about it going forward, but I think this is an interesting idea! On one hand, it’s kind of heavyweight to need an entire Wasm runtime on hand just to decode your data, but on the other hand, maybe having a whole Parquet decoder is also heavyweight. I’m not sure!