tete_escape/Shutterstock.com

Summary: Most L&D teams stop training evaluation at Level 1, the “happy sheet.” AI is changing that. Discover how AI helps measure behavior, business impact, and ROI, turning training data into proof of real organizational value.

Stop Chasing Smile Sheets, Start Proving ROI With AI

For decades, the “happy sheet” has been the comfortable, familiar couch of the Learning and Development (L&D) world. It’s easy to sink into, requires little effort, and gives us a warm, fuzzy feeling. We send out our post-training surveys, breathe a sigh of relief at the 4.5/5 average satisfaction score, and file the report away as evidence of a job well done.

But in the quiet moments, a nagging question persists for every L&D professional: Did any of it actually matter?

Did those five-star reviews translate into improved performance for sales teams in the field? Did that meticulously crafted compliance course genuinely reduce risk incidents?

We comfort ourselves with completion rates and smiley faces, all while knowing that the real conversations in the boardroom are about revenue, market share, operational efficiency, and risk mitigation: conversations we often struggle to join with concrete data.

Moving Toward Implementation

The barrier has never been a lack of will or understanding. Seasoned L&D leaders know the Kirkpatrick and Phillips models intimately. The barrier has been implementation.

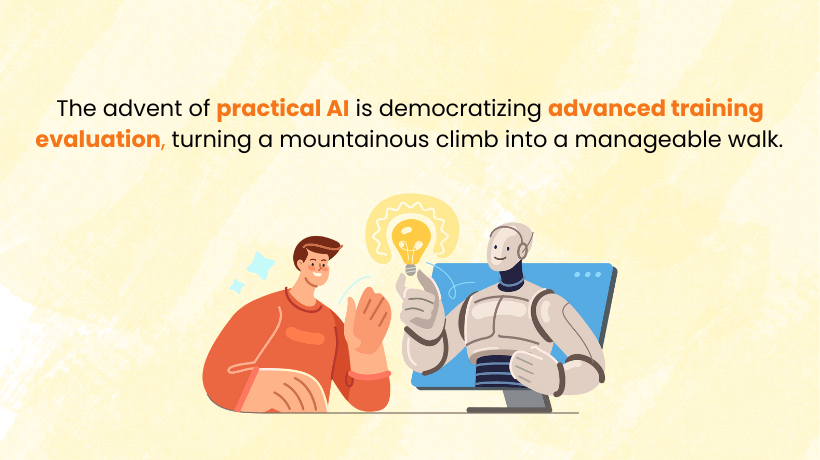

Moving beyond Level 1 (Reaction) and Level 2 (Learning) to assess Level 3 (Behavior), Level 4 (Results), and Level 5 (ROI) has historically been painted as a complex, time-consuming, and expensive endeavour, a luxury reserved for well-funded, enterprise-wide initiatives.

That barrier no longer exists. The advent of practical Artificial Intelligence (AI) is democratizing advanced training evaluation, turning what was once a mountainous climb into a manageable walk. This article dismantles the myths of difficulty and provides a practical roadmap for using AI to finally prove the undeniable value of your L&D programs.

Image by CommLab India

The High Cost Of Staying In Your Comfort Zone

Continuing to rely primarily on happy sheets isn’t just a methodological choice; it’s a strategic risk for the L&D function.

- **The credibility gap:** When we report on satisfaction alone, we inadvertently position L&D as a cost centre, a provider of feel-good activities, rather than a strategic partner driving business outcomes. This makes our budgets vulnerable during economic downturns.

- **The misalignment trap:** Without measuring on-the-job application and business impact, we cannot truly know if our training is solving the business problems it was designed to solve. We might be efficiently delivering brilliant training on the wrong things.

- **The continuous improvement blind spot:** Happy sheets tell us that learners liked the coffee and the facilitator. They don’t tell us why a high-performing employee failed to apply a new skill back on the job, or which specific custom eLearning module actually led to a change in process.

The goal is not to abandon Level 1 evaluation, but to see it for what it is: the starting point, not the finish line. The real story unfolds after the training ends.

Demystifying The “Hard” Part: Tackling Core Implementation Challenges

The objections to moving up the evaluation ladder are valid, but they are no longer insurmountable. Let’s address the two biggest hurdles.

**1. “We don’t have the time or resources for complex evaluation.”**

The traditional image of Level 3 evaluation involves L&D professionals shadowing employees, conducting endless interviews, and manually analyzing mountains of performance data. This is simply not scalable.

AI flips this model. Instead of you doing the heavy lifting, AI-powered tools can automate the data collection and initial analysis, surfacing insights for you to act upon. What might once have been a 40-hour manual process can become a few hours of configuring tools and interpreting their outputs.

**2. “We don’t know how to isolate the impact of training from other business factors.”**

This is the holy grail of evaluation and the core of Jack Phillips’s Level 4 and 5 analyses. If sales go up, was it the new training program, the new marketing campaign, or a competitor’s misstep?

Phillips’s methodology provides a robust structure for this, outlining techniques such as control groups, trend-line analysis, and expert estimation. The challenge has been that executing these techniques manually is complex and statistically daunting for many L&D teams.
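
To make one of these techniques concrete, here is a minimal sketch of trend-line analysis: fit a straight line to the pre-training figures, project it forward, and treat the gap between actual and projected values as the estimated training effect. The sales numbers and function names are hypothetical, and the sketch assumes the pre-training trend would have continued unchanged and that nothing else shifted during the period.

```python
def fit_trend(values):
    """Least-squares line through (0, v0), (1, v1), ...; returns (slope, intercept)."""
    n = len(values)
    xs = range(n)
    mean_x = sum(xs) / n
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, values))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def trend_line_effect(pre, post):
    """Average gap between actual post-training values and the projected pre-training trend."""
    slope, intercept = fit_trend(pre)
    start = len(pre)  # the first post-training period continues the same time axis
    projected = [intercept + slope * (start + i) for i in range(len(post))]
    gaps = [actual - expected for actual, expected in zip(post, projected)]
    return sum(gaps) / len(gaps)


# Hypothetical monthly sales (in $k): six months before and three months after training.
pre_training = [100, 102, 104, 106, 108, 110]
post_training = [118, 121, 123]

effect = trend_line_effect(pre_training, post_training)
print(f"Average monthly lift over the projected trend: {effect:.1f}k")
```

If a marketing campaign or pricing change landed in the same window, the gap would include its effect too, which is why a control group is the stronger option when one is available.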

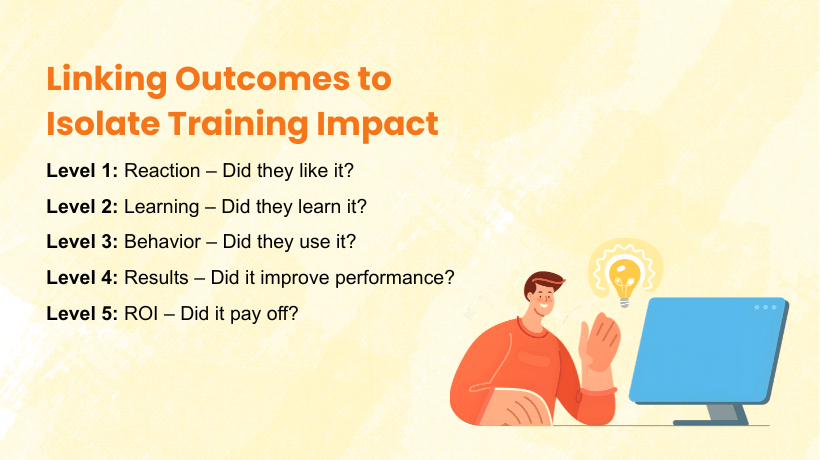

The Broken Chain: Linking Outcomes To Isolate Impact

A fundamental strength of Phillips’s model is its insistence on creating a chain of evidence. You cannot credibly claim a financial return (Level 5) without proving a business result (Level 4). You can’t claim a business result without observing a behavior change (Level 3). This sequential logic is our most powerful diagnostic tool.

When the expected business result doesn’t materialize, this chain helps us identify exactly where the link broke:

- Level 5 (ROI)? Was the monetary value of the Level 4 result miscalculated, or did the program costs balloon?

- Level 4 (Results)? Did the trained behaviors (Level 3) not translate into organizational performance, perhaps due to a lack of supportive systems or leadership?

- Level 3 (Behavior)? Did learners who acquired the skills (Level 2) fail to apply them on the job due to a lack of opportunity, fear, or no accountability?

- Level 2 (Learning)? Did learners who reacted positively (Level 1) actually not acquire the intended knowledge or skill?

Image by CommLab India

AI’s role is to automate the data collection across this entire chain, making it easier to pinpoint the breakage and take corrective action. It turns Phillips’s rigorous model from a theoretical ideal into a practical, ongoing process.
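
The diagnostic logic of the chain is simple enough to sketch in a few lines. The example below is illustrative only: the level names follow the models discussed above, while the `evidence` structure and the pilot data are hypothetical. It walks the chain from the bottom up and reports the first level where the target was missed.

```python
# Levels in the order the evidence must hold, from Reaction up to ROI.
CHAIN = ["Reaction", "Learning", "Behavior", "Results", "ROI"]


def first_broken_link(evidence):
    """Return the lowest level whose target was missed, or None if the chain holds.

    `evidence` maps a level name to True (target met) or False (target missed).
    A level with no data counts as missed, because nothing above it can be claimed.
    """
    for level in CHAIN:
        if not evidence.get(level, False):
            return level
    return None


# Hypothetical pilot: learners liked the course and passed the assessment,
# but follow-up data shows the skill is not being used on the job.
pilot = {"Reaction": True, "Learning": True, "Behavior": False, "Results": False}
print(first_broken_link(pilot))
```

In this made-up case the break is at Level 3, so the corrective action lies in the work environment (opportunity, accountability, manager support) rather than in the course content.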

The AI-Powered Evolution: From Theory To Practical Application

So, how does this work in practice? Here’s how AI concretely enables evaluation at each level of the Phillips-Kirkpatrick chain.

For Level 3 (Behavior) – The “Are They Using It?” [Monitor]

How it was done: manual surveys months later, sporadic manager feedback.

How AI Does It

**Natural Language Processing (NLP):** AI can analyze transcripts from customer service calls, internal communications (such as Slack or Teams), and project documentation to identify the use of newly trained skills, terminology, and processes. Did the sales team start using the new value-selling framework language?

**Skill inference platforms:** Tools can analyze activity data from work systems (e.g., CRM, ERP) to infer skill application. For example, they can detect if an employee is using a newly trained feature in the software or following a newly taught QA checklist.

**Automated check-ins:** AI chatbots can conduct personalized, automated check-ins with learners weeks after training. They can ask questions such as, “What part of the negotiation training have you found most challenging to apply?” and analyze the open-text responses for common themes and barriers.
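
As a rough illustration of the transcript idea, the sketch below measures how often trained terminology shows up in call transcripts before and after a course. It uses plain keyword matching, which is a far simpler stand-in for a real NLP model, and the phrases and transcripts are invented for the example.

```python
import re

# Hypothetical phrases taught in a value-selling course.
TRAINED_TERMS = ["business outcome", "cost of inaction", "value driver"]


def usage_rate(transcripts, terms=TRAINED_TERMS):
    """Fraction of transcripts that mention at least one trained term (case-insensitive)."""
    if not transcripts:
        return 0.0
    pattern = re.compile("|".join(re.escape(t) for t in terms), re.IGNORECASE)
    hits = sum(1 for text in transcripts if pattern.search(text))
    return hits / len(transcripts)


# Invented call snippets from before and after the training.
before = [
    "Our product has many features you will like.",
    "Let me walk you through the pricing tiers.",
]
after = [
    "What business outcome are you trying to reach this year?",
    "Let's estimate the cost of inaction if nothing changes.",
    "Here is the pricing sheet.",
]

print(f"Before training: {usage_rate(before):.0%}")
print(f"After training:  {usage_rate(after):.0%}")
```

A rising usage rate only shows that the vocabulary is appearing, not that it is being used well, so it works best as a prompt for closer review rather than as proof of behavior change.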

For Level 4 (Results) And Isolation – The “Is It Making A Difference?” [Analyst]

How it was done: manually correlating data, attempting complex statistical isolation techniques that were time-consuming and often questioned.

How AI Does It

This is where AI supercharges the Phillips methodology.

Predictive analytics and isolation: AI can run analyses that many L&D teams lack the statistical bandwidth for. Given data on training participation and other potential factors (marketing campaigns, economic indicators, etc.), Machine Learning models can estimate the contribution of the training to the outcome separately from those factors. This gives the isolation step a data-driven basis, supplementing expert estimation with a calculated estimate of impact.

Data visualization dashboards: Tools such as Power BI or Tableau, fed by these AI models, can create real-time dashboards that visually link the training intervention to departmental KPIs, while also acknowledging other influencing factors.

Image by CommLab India

For Level 5 (ROI) – The “What’s The Financial Return?” [Business Partner]

How it was done: complex, one-off calculations that were often dismissed.

How AI Does It

Automated calculation engines: By integrating with HRIS and finance systems, AI can help automate the ROI calculation. It can track the monetizable outcomes (the isolated Level 4 impact) and weigh them against the fully loaded cost of the training program, providing a continuous, data-driven view of financial return, exactly as prescribed by the Phillips model.
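
The arithmetic behind that calculation is short. In the Phillips model, the benefit-cost ratio is program benefits divided by program costs, and ROI (%) is net program benefits (benefits minus costs) divided by program costs, times 100. The sketch below applies both formulas; the dollar figures and function names are hypothetical.

```python
def benefit_cost_ratio(benefits, costs):
    """Program benefits divided by fully loaded program costs."""
    if costs <= 0:
        raise ValueError("costs must be positive")
    return benefits / costs


def roi_percent(benefits, costs):
    """Net program benefits as a percentage of fully loaded program costs."""
    if costs <= 0:
        raise ValueError("costs must be positive")
    return (benefits - costs) / costs * 100


# Hypothetical figures: the monetized, isolated Level 4 impact and the fully loaded cost.
isolated_benefits = 240_000
fully_loaded_costs = 150_000

bcr = benefit_cost_ratio(isolated_benefits, fully_loaded_costs)
roi = roi_percent(isolated_benefits, fully_loaded_costs)
print(f"BCR: {bcr:.2f}, ROI: {roi:.0f}%")
```

The benefits figure should be the isolated portion of the Level 4 result only. Feeding in the whole improvement overstates the return.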

From Theory To Practice: A Glimpse Of Impact

At CommLab India, we’ve seen this transition from myth to reality. In one instance, a global financial client needed to prove the impact of a new regulatory compliance training program. Happy sheet scores were strong, but the real question was about risk reduction.

**The challenge:** Isolate the training’s impact on reducing procedural errors (the Level 4 result) from other control measures implemented at the same time, to ultimately calculate ROI.

**The AI-enabled solution:** We used an AI-powered platform to analyze internal audit reports and compliance ticketing data before and after the training. Using NLP, the system identified and categorized specific types of errors mentioned in the reports.

The AI model then compared the error-rate trend of the trained group against that of a control group scheduled for later training. Control-group comparison is a key isolation technique from the Phillips playbook.

**The result:** The analysis revealed a statistically significant drop in the category of errors directly addressed by the training module for the trained group, while other error types remained constant. This provided powerful, isolated evidence of the training’s effect on reducing business risk (Level 4).

By assigning a monetary value to the risk avoided (e.g., potential fines, operational losses), we were then able to credibly calculate the program’s ROI (Level 5), moving far beyond the initial “satisfaction” scores.
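
For readers who want to see the shape of a control-group comparison, here is a minimal difference-in-differences sketch. It is not the platform or model used in the engagement above. The error counts are invented, and the approach assumes both groups would have followed the same trend without training.

```python
from statistics import mean


def diff_in_diff(trained_before, trained_after, control_before, control_after):
    """Change in the trained group's mean minus the change in the control group's mean."""
    trained_change = mean(trained_after) - mean(trained_before)
    control_change = mean(control_after) - mean(control_before)
    return trained_change - control_change


# Hypothetical monthly counts of the targeted error type.
trained_before = [20, 22, 21]
trained_after = [12, 11, 13]
control_before = [19, 21, 20]
control_after = [18, 19, 17]

effect = diff_in_diff(trained_before, trained_after, control_before, control_after)
print(f"Estimated change attributable to training: {effect:+.1f} errors per month")
```

Subtracting the control group's change removes whatever affected both groups, such as the other control measures rolled out at the same time. The sketch does not test statistical significance, which a real analysis would need before reporting the result.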

Your AI-Powered Evaluation Checklist: How To Get Started Now

This doesn’t require a massive budget or a complete overhaul. Start small, think big, and scale fast.

Phase 1: Lay The Foundation (This Quarter)

- Identify one strategic program: Choose one high-visibility, high-cost, or business-critical training program to pilot. Don’t boil the ocean.

- Define business KPIs: Partner with the business leader. Ask: “If this training is wildly successful, what will we see differently in the business in 90 days?” Get agreement on 1–2 measurable outcomes (e.g., reduce time-to-resolution by 10%, increase software adoption rate of Feature X by 25%).

- Audit your data: Which systems hold the data for these KPIs (e.g., CRM, ERP, helpdesk software)? Who owns access? Start these conversations early.

Phase 2: Select And Implement Tools (Next Quarter)

- **Explore integrated features:** Does your existing LMS or LXP have built-in analytics, survey tools, or AI-powered insights you aren’t using?

- **Evaluate point solutions:** Research AI tools for surveys (e.g., Qualtrics), conversation analytics (e.g., Cresta, Gong), or skills inference. Check whether a trial or demo is available before committing budget.

- **Configure your pilot:** Set up automated post-training check-ins. Create a simple dashboard in Power BI or even Excel that starts tracking your chosen KPI against the training timeline.
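
Before building a dashboard, the core comparison can be prototyped in a few lines. The sketch below splits a KPI log at the training date and reports the percent change in the average. The readings, the rollout date, and the function name are all hypothetical.

```python
from datetime import date
from statistics import mean

# Hypothetical weekly readings: (week start, average time-to-resolution in hours).
kpi_log = [
    (date(2024, 1, 1), 10.0),
    (date(2024, 1, 8), 10.4),
    (date(2024, 1, 15), 9.6),
    (date(2024, 1, 22), 9.0),
    (date(2024, 1, 29), 8.8),
    (date(2024, 2, 5), 9.2),
]
TRAINING_DATE = date(2024, 1, 20)  # assumed rollout date


def pre_post_change(log, training_date):
    """Percent change in the KPI's mean from before to after the training date."""
    pre = [value for day, value in log if day < training_date]
    post = [value for day, value in log if day >= training_date]
    if not pre or not post:
        raise ValueError("need readings on both sides of the training date")
    return (mean(post) - mean(pre)) / mean(pre) * 100


change = pre_post_change(kpi_log, TRAINING_DATE)
print(f"Time-to-resolution changed by {change:+.1f}% after training")
```

A simple before-and-after view like this shows movement in the KPI, not its cause, so treat it as the starting point for the isolation work described earlier.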

Phase 3: Analyze, Report, And Scale (Ongoing)

- **Report correlations without overclaiming causation:** Tell the data story as you observed it. “Following the training rollout, we observed a 15% increase in feature adoption alongside a 5% increase in customer satisfaction scores in that cohort.”

- **Share insights, not just data:** Tell the story of the barriers to application discovered in your check-ins. This makes you a strategic partner in problem solving.

- **Refine and expand:** Use the learnings from your pilot to refine your approach and gradually apply it to other critical programs.

Image by CommLab India

It’s Time To Change The Conversation

The era of being held back by perceived complexity is over. Artificial Intelligence has provided the tools to dismantle the barriers that have kept L&D from proving its true worth. The question is no longer “Is it possible to measure impact?” but “Do we have the courage to know the answer, and the agility to act on it?”

Moving beyond happy sheets is no longer a theoretical best practice; it is an operational imperative. It’s how we shift the conversation from “How did they like it?” to “How did it help us win?”

It’s how we transform L&D from a cost centre into the most vital engine for growth and performance in the modern organization. The tools are here. The path is clear. The time to start is now.

CommLab India

Since 2000, CommLab India has been helping global organizations deliver impactful training. We provide rapid solutions in eLearning, microlearning, video development, and translations to optimize budgets, meet timelines, and boost ROI.