Hello all! Welcome once again to a progress update, to players both old and new, veterans and newcomers alike!

First of all thank you to everyone who has made the DLC launch a success - and to those who left comments and feedback. You seem to be really enjoying it, so Thank You!

Since the last release I’ve been hard at work on the next update to Super Video Golf. However, while most updates concentrate on adding new features, right now I’ve been doing my best to optimise the game, particularly at a GPU level. While adding new features is great, every new feature brings with it a little more overhead and, unfortunately, some players with lower spec hardware are beginning to get left behind. I decided it was time to take a look and see just what I could do to improve performance and, as it turns out, it was rather a lot 🤔

This post, then, is going to take a relatively deep dive into what I’ve changed and may also get a little technical. If this isn’t your thing then you might want to skip this one - however if you’re interested in how the sausage is made, read on…

Where to begin?

Super Video Golf has always been very much ‘GPU bound’ - that is, the hardest working part of the game has been the graphics card. In the early days the game was so simple on a technical level that it didn’t matter too much - almost any GPU could chew through the vertices that were being fed to it. As the game has grown more complex over the years, however, it has become more important to optimise the data sent to the GPU so that it can be processed more quickly.

To avoid just throwing up a bunch of numbers on the screen I’m going to try using a warehouse analogy - so bear with me. Apologies up front if it just ends up sounding daft 😅

We’ll start with the GPU’s memory, aka VRAM. Consider this as a large warehouse - although the warehouse can vary in size based on any particular graphics card. It has a loading door around the back where a delivery truck can pull up and drop off a bunch of packages in various sizes. This truck represents the game when it’s loading - and the packages are the images, shaders and 3D meshes used to display the game, being brought by the truck from wherever they were stored on your hard drive.

These packages get placed on a variety of shelves and stands throughout the warehouse, waiting to be taken to the front counter - or in other words displayed on screen - by our GPU who, in this analogy, takes the form of a friendly custodian, whose job it is to walk the aisles and fetch packages from storage (the things we want to draw) and bring them to the counter (display them on screen).

Size and Locality

Before I started optimising the game many of the packages were far larger than they needed to be. While our custodian could fetch the data with a bit of effort, it was heavy work. Packages were also strewn all over the place as if they were just dumped in the first place that would fit. Worse still, smaller warehouses (GPUs with less VRAM) would fill up so much that it was difficult for the custodian to move around. Some packages might even get left out in the back alley!

This meant I had two things to tackle off the bat:

Size:

Drawables such as 3D and 2D meshes are made up of vertex data - vertices are the points which make up the mesh and are joined together to form triangles. A single vertex can have a variety of properties, depending on its use, and each property can be stored in a particular way, or data format.

As an example a typical mesh might have a vertex layout with the following properties:

- Position - the 2D or 3D position at which this vertex should be drawn

- Colour - the colour of the vertex which may also affect the colour of the associated texture when it’s drawn

- UV Coordinate - this tells our vertex which part of the associated texture should be drawn

When I started out (some 4 years ago now!) I created all vertices equal. They were all represented by a series of floating point numbers: 3 for the X,Y and Z coordinates for the position, 4 for the Red, Green, Blue and Alpha parts of the colour and 2 for the U and V coordinates.

Each floating point number takes up 4 bytes in memory, so each vertex has a memory requirement of

(3 + 4 + 2) * 4 bytes = 36 bytes in total.

While this seems like a relatively small number at first, a 3D mesh may have thousands of vertices. It may also have many more vertex properties, such as a mesh with skeletal animation (bone weights, bone indices, normal vectors etc…). When we consider all this as part of a package in our warehouse it starts to become quite hefty, and hard work for our custodian to move around.

Fortunately there are other data types in which we can store our properties, and effectively compress the vertex data by reducing its size. For instance the UVs can be stored as 16bit (2 byte) values instead of 32bit (4 bytes) which immediately halves the amount of memory needed for the UV properties. Even better than that it turns out that colour values can easily be represented in a single byte for each Red, Green, Blue and Alpha channel. A decent reduction in size of 4:1 😁

Reducing the size of position data is a bit more complicated - so let’s just assume we’ve done our best for this example. If we look at our new vertex size we have the 12 bytes for position as before, but now colour is a mere 4 bytes, as is the UV property. This brings our total size down from 36 to 20 bytes per vertex - about 56% of its original size. Our custodian (the GPU) can now move these lighter packages around more easily - and even pick up two or three at a time, so it’s a good start!
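To make the arithmetic concrete, here is a minimal C++ sketch of the two layouts. It is not the game's actual code: the struct and function names are made up for illustration, and it assumes the colour and UV values are stored as unsigned normalised integers (the post only says 1 byte per colour channel and 16 bits per UV component, so half floats would be another option for the UVs).

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

// The original layout described above: every property is a 32 bit float.
struct FatVertex
{
    float position[3]; // X, Y, Z
    float colour[4];   // R, G, B, A
    float uv[2];       // U, V
};
static_assert(sizeof(FatVertex) == 36, "9 floats * 4 bytes");

// Hypothetical packed layout: position is untouched, colour uses one
// byte per channel and each UV component is a 16 bit value.
struct PackedVertex
{
    float position[3];
    std::uint8_t colour[4];
    std::uint16_t uv[2];
};
static_assert(sizeof(PackedVertex) == 20, "12 + 4 + 4 bytes");

// Maps a float in [0, 1] to an 8 bit unsigned normalised value.
inline std::uint8_t toUnorm8(float v)
{
    assert(std::isfinite(v));
    v = std::min(std::max(v, 0.f), 1.f);
    return static_cast<std::uint8_t>(std::lround(v * 255.f));
}

// Maps a float in [0, 1] to a 16 bit unsigned normalised value.
// Assumption: the UVs never tile outside of the 0 - 1 range.
inline std::uint16_t toUnorm16(float v)
{
    assert(std::isfinite(v));
    v = std::min(std::max(v, 0.f), 1.f);
    return static_cast<std::uint16_t>(std::lround(v * 65535.f));
}

// Converts one vertex from the float layout to the packed layout.
inline PackedVertex pack(const FatVertex& in)
{
    PackedVertex out{};
    for (int i = 0; i < 3; ++i) out.position[i] = in.position[i];
    for (int i = 0; i < 4; ++i) out.colour[i] = toUnorm8(in.colour[i]);
    for (int i = 0; i < 2; ++i) out.uv[i] = toUnorm16(in.uv[i]);
    return out;
}
```

With OpenGL, integer attributes like these can be flagged as normalised when the vertex layout is described, so the shader still receives ordinary 0 - 1 floats and doesn't need to change.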

Using this technique I went through all of the various mesh formats throughout the game with a fine toothed comb, and reduced all of the vertex data in size as much as I reasonably could.

(It should be noted that, depending on use case, vertex layouts vary wildly and the amount of reduction which can be done varies as much. There are also different techniques available depending on which property you’re working on. If you’re interested in more details check out the OpenGL wiki page on the topic.)

Locality

The second consideration is locality. What do I mean by that? Although our packages in our warehouse have been made more compact and easier to move around, they’re somewhat scattered around the warehouse itself. It stands to reason that many of these packages are related to each other - for instance all the images which make up a particular menu, or all the meshes which make up the 3D background in the game. Even if the packages are easier to carry now, our custodian is going to have a hard time if they have to keep climbing over or walking around all the other packages which are in the way. It makes more sense that, when we unload the packages from the truck, we store related packages close together - on the same shelf if you like - so that they can be easily accessed in as few trips as possible. In other words, related packages should be *local* to each other.

To do this we can take advantage of OpenGL’s buffer storage mechanism - think of a buffer as a shelf in the warehouse. Before optimisation every mesh was just given a small buffer - whichever the graphics driver had to hand, and the mesh was placed in it. Now, when the game loads, it specifically requests a series of large buffers - each a whole wall of shelving - and then as the packages come in from the truck they are examined and placed in the relevant storage location to group them together by relevance. As a result when we switch from, say, the main menu to the driving range in the game, both the menu and the driving range have their own area in the warehouse. Our custodian can concentrate on just a small area relevant to the active part of the game, bringing as many packages as possible to the counter swiftly (and with style 🎩) - with much less of a chance of tripping over a stray shader or a box of textures 😅
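The post doesn't show how the large buffers are managed, but the general idea of handing out space from one big block can be sketched with a simple 'bump' allocator. Everything here is hypothetical (the `SharedBuffer` name, the default alignment of 4) and it only tracks offsets on the CPU - a real version would also upload each mesh to the matching range of an OpenGL buffer object.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical stand-in for one large GPU buffer (a wall of shelving).
// Each area of the game, such as the menu or the driving range, would
// own one of these so that its meshes end up next to each other.
class SharedBuffer
{
public:
    static constexpr std::size_t npos = static_cast<std::size_t>(-1);

    explicit SharedBuffer(std::size_t capacity) : m_capacity(capacity) {}

    // Reserves `size` bytes directly after the previous allocation,
    // rounded up to `alignment`. Returns the byte offset of the new
    // range, or npos if the buffer is full.
    std::size_t allocate(std::size_t size, std::size_t alignment = 4)
    {
        assert(alignment != 0);
        const std::size_t start = (m_used + alignment - 1) / alignment * alignment;
        if (start > m_capacity || size > m_capacity - start)
        {
            return npos;
        }
        m_used = start + size;
        return start;
    }

    // Number of bytes handed out so far, including alignment padding.
    std::size_t used() const { return m_used; }

private:
    std::size_t m_capacity = 0;
    std::size_t m_used = 0;
};
```

Because every allocation follows on from the last one, meshes loaded for the same part of the game sit in one contiguous run of memory rather than wherever the driver happened to find space.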

Making the mesh data, particularly, compact and well sorted immediately showed a performance improvement - but is that all I could do to optimise performance? Of course not 😁

Image Compression

Textures are large blocks of contiguous memory. They often contain image data (but not always, we’ll touch on that below) used to colour the meshes which are drawn on screen, either as 2D menu items or 3D objects. When we use images on our computers or phones they are usually stored in some sort of compressed format such as png or jpg to take up less storage space. However, in order to display them on screen, they have to be decompressed fully into GPU memory. This means that even though a small 2kb png file might not take up much storage space on your hard drive it’ll take up more shelf space in our warehouse once it’s moved to VRAM. This is because graphics cards like to work on many small blocks of an image in parallel (see this Mythbusters video for a great explanation) but compression formats like png and jpg need to be processed sequentially, making it unreasonable to decompress them in good time on a GPU.

It doesn’t matter how compressed a 512x512 8bit RGBA image is on disk it will always uncompress to:

512x512x4(bytes per pixel, RGBA) = 1,048,576 bytes (approx 1mb).

Switch to a 1024x1024 image and it jumps in size to 4mb! You can see how having many images in a game can suddenly take up a lot of space in memory. Fortunately there is a special type of compression suited to processing images in blocks, handily titled BC or Block Compression. Block compression is a complex topic and there are many variants (check out this article if you’re interested in the technical details) however it’s relatively easy to implement in a game engine with open source programming libraries out there available for the job.
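For a feel of the numbers, this small C++ sketch compares uncompressed and block compressed sizes. The function names are made up for this example. The block sizes are those of the standard BC formats, which store each 4x4 pixel block in either 8 bytes (BC1) or 16 bytes (BC3, BC7 and others). The post doesn't say which variant the game uses, so treat the choice as an assumption.

```cpp
#include <cassert>
#include <cstddef>

// Size in bytes of an uncompressed 8 bit RGBA texture, without mipmaps.
inline std::size_t rgba8Size(std::size_t width, std::size_t height)
{
    return width * height * 4;
}

// Size in bytes of a block compressed texture, without mipmaps.
// The image is split into 4x4 pixel blocks, with partial blocks at the
// edges rounded up to a whole block.
// bytesPerBlock is 8 for BC1 and 16 for BC3 or BC7.
inline std::size_t blockCompressedSize(std::size_t width,
                                       std::size_t height,
                                       std::size_t bytesPerBlock)
{
    assert(bytesPerBlock == 8 || bytesPerBlock == 16);
    const std::size_t blocksWide = (width + 3) / 4;
    const std::size_t blocksHigh = (height + 3) / 4;
    return blocksWide * blocksHigh * bytesPerBlock;
}
```

For the 512x512 image above, `rgba8Size(512, 512)` gives the same 1,048,576 bytes, while `blockCompressedSize(512, 512, 16)` gives 262,144 bytes and `blockCompressedSize(512, 512, 8)` gives 131,072 bytes - a quarter and an eighth of the uncompressed size respectively.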

In the case of Super Video Golf I used the libktx library to add support for loading compressed textures, and nvidia’s Texture Tools to update the game’s existing assets. This means that, for the same reasons above (size and locality), image textures are now compressed into smaller, manageable blocks to sit on the warehouse shelves and, by the very nature of image data, all sat in a continuous, data-local, row.

Win number 2!

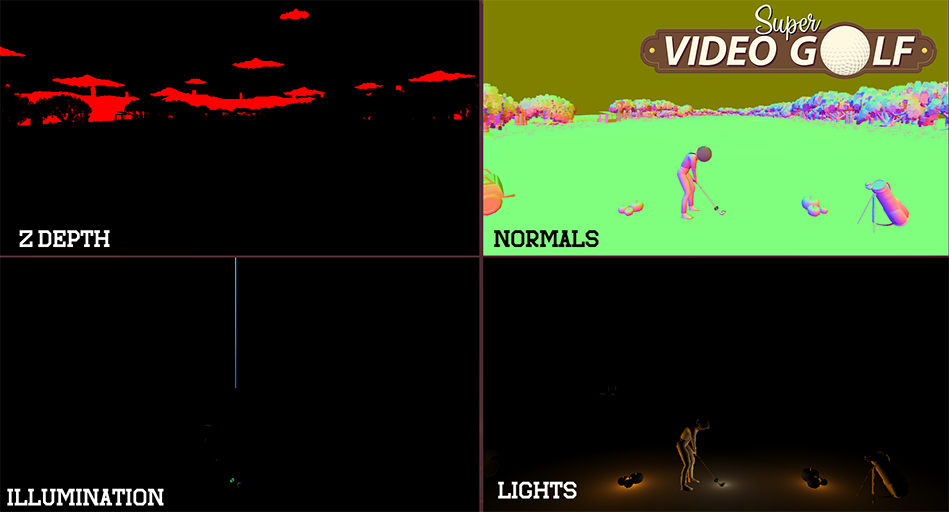

As I hinted above, not all textures are necessarily images. Super Video Golf uses a series of ‘multi-render targets’ or MRTs to store rendering information in textures, which are written by the GPU. In particular effects such as the lighting when playing golf at night are processed via a series of render targets:

and then combined into the final image you see on screen.

I’ll avoid going into details as to exactly why this is - however you can read about the technique (also known as deferred shading) at Learn OpenGL.

The crux of the matter is that, as these textures are writable, they are unavailable for Block Compression, and each of these layers, before optimisation, was set to 4 channels with 32 bits (4 bytes) per channel. For a full screen effect at 1920x1080 with layers for position, colour, normals and lighting this adds up to:

- 1920x1080

- x4 (channels)

- x4 (bytes per channel)

- x4 (layers)

- = 132,710,400 bytes - using a whopping 133mb of GPU memory!
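The same sum can be written as a small C++ helper, which makes it easy to try other resolutions or layer formats. This is only a sketch: `Layer` and `renderTargetSize` are names invented for this example, and it ignores any padding or alignment a graphics driver might add.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Describes the pixel format of one layer of a render target.
struct Layer
{
    std::size_t channels;
    std::size_t bytesPerChannel;
};

// Total bytes used by all of the layers at the given resolution.
inline std::size_t renderTargetSize(std::size_t width,
                                    std::size_t height,
                                    const std::vector<Layer>& layers)
{
    assert(!layers.empty());
    std::size_t bytesPerPixel = 0;
    for (const Layer& layer : layers)
    {
        bytesPerPixel += layer.channels * layer.bytesPerChannel;
    }
    return width * height * bytesPerPixel;
}
```

Passing four layers of `{4, 4}` at 1920x1080 reproduces the 132,710,400 bytes in the list above.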

Throw in the fact that there are MRTs for not only the main scene but also the ‘ball flight’ window and minimap view (a somewhat ridiculous 4480x2560 image - yes the real optimisation here is to take a different approach to zooming 🤦‍♂️) it’s suddenly very obvious where much of the GPU memory was being used 😅

With a bit of head scratching it’s actually possible to apply some of the vertex data reduction techniques on the texture. For instance the colour data (such as the illumination values or lighting) doesn’t need 4 bytes per channel, just one byte, so there’s a gain to be had there simply by changing the format of the colour layer and even reducing the channel count from 4 to 3. The layer containing the normals can also be reduced in precision as a normal only represents a direction (and has a unit length of one) - and so will also fit nicely in an 8-bit channel without perceptible loss of accuracy.
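As an illustration of why 8 bits per component is enough for a normal, here is a hedged C++ sketch of a common packing scheme: each component is remapped from -1..1 to 0..255, and the vector is renormalised after unpacking. The post doesn't say exactly which texture format or mapping the game uses, so the names and the mapping here are assumptions.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

struct Vec3 { float x, y, z; };
struct PackedNormal { std::uint8_t x, y, z; };

// Maps one component from [-1, 1] to [0, 255].
inline std::uint8_t packComponent(float v)
{
    assert(v >= -1.f && v <= 1.f);
    return static_cast<std::uint8_t>(std::lround((v * 0.5f + 0.5f) * 255.f));
}

// Maps one component from [0, 255] back to [-1, 1].
inline float unpackComponent(std::uint8_t v)
{
    return (static_cast<float>(v) / 255.f) * 2.f - 1.f;
}

inline PackedNormal packNormal(Vec3 n)
{
    return { packComponent(n.x), packComponent(n.y), packComponent(n.z) };
}

// Unpacks and renormalises, as quantising nudges the length away from 1.
inline Vec3 unpackNormal(PackedNormal p)
{
    const Vec3 n{ unpackComponent(p.x), unpackComponent(p.y), unpackComponent(p.z) };
    const float length = std::sqrt(n.x * n.x + n.y * n.y + n.z * n.z);
    return { n.x / length, n.y / length, n.z / length };
}
```

Before renormalising, each component can be off by at most half a quantisation step, or roughly 0.004, which is a tiny change in direction for lighting purposes.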

As it happens it’s also possible to recreate the position data from only the z-depth using a shader on the GPU, so the position layer can be reduced from 4 channels to just one, and even converted to 16 bit from 32bit - reducing the position layer to one eighth its previous size as we’re now only storing the z position. Nice!
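The reconstruction itself is just the perspective projection run backwards. The game does this in a shader which isn't shown here, so the following is a CPU-side C++ sketch of the maths only, under a few assumptions: a symmetric OpenGL style camera looking down the negative Z axis, and a stored depth which is the linear distance along that axis. All of the names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

struct Vec2 { float x, y; };
struct Vec3 { float x, y, z; };

// Hypothetical symmetric perspective camera.
struct Camera
{
    float tanHalfFovY; // tan(vertical field of view / 2)
    float aspect;      // width / height
};

// Projects a view space position to normalised device coordinates,
// where x and y run from -1 to 1 across the screen.
inline Vec2 toNdc(const Camera& cam, Vec3 viewPos)
{
    assert(viewPos.z < 0.f); // must be in front of the camera
    const float distance = -viewPos.z;
    return { viewPos.x / (distance * cam.tanHalfFovY * cam.aspect),
             viewPos.y / (distance * cam.tanHalfFovY) };
}

// Rebuilds the view space position of a pixel from its screen location
// (as normalised device coordinates) and the single stored depth value.
inline Vec3 reconstructPosition(const Camera& cam, Vec2 ndc, float linearDepth)
{
    return { ndc.x * linearDepth * cam.tanHalfFovY * cam.aspect,
             ndc.y * linearDepth * cam.tanHalfFovY,
             -linearDepth };
}
```

The X and Y of a pixel are already implied by where it is on screen, which is why only the depth needs to be kept in the texture.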

There are some other potential optimising techniques available to use - such as combining mask images with colour ones into Array Textures which would reduce the number of state changes, or using a z-prepass which I may still experiment with. However, for the effort required, it feels like I’m approaching the point of diminishing returns.

In conclusion

So… does this give a perceptible boost to the game’s performance? I think so, but it’s hard to tell 😅 I actually have a limited amount of hardware to test the current version on, and compare it to the 1.21 version of Super Video Golf.

On an older PC with a GTX 1070 GPU and an Intel processor from 2013 I can now play the game at a crisp 1440p without much of a hitch. On the Steam Deck I can increase the tree quality to high and get a solid 60fps which I couldn’t before, and playing a round in night mode no longer makes the fans work as hard as they were. I haven’t measured it but the lower power consumption ought to make the battery last a little longer too. I don’t have a dock, or even a TV to test the Steam Deck at 1080p or higher though, so I don’t know if there was any improvement there. Hopefully it’ll perform well even if you do have to switch the tree quality to low.

So perhaps, dear reader, assuming you’ve made it this far (congratulations if you did!), you could tell me how the optimised version compares to the current 1.21.2 version in your experience? It’s currently available on the beta branch on Steam - open the game’s properties, go to the beta tab and select the beta branch from the drop down menu. I’d be very interested to hear! Drop any comments you have over on the Discussions, Group Chat, or Discord. Thanks!

*However…*

With all that being said there’s still more planned for the 1.22 update - including some new features! Unfortunately I’ve already waffled on for far too long, so I’ll have to save the details of what else I have planned for a future post.

Until then though, as always,

Happy golfing! ⛳🏌️

“Janitor” (https://skfb.ly/6Z9GD) by Víctu is licensed under Creative Commons Attribution (http://creativecommons.org/licenses/by/4.0/).

“PSX Storage Shelves & Cardboard Boxs” (https://skfb.ly/oWpno) by Drillimpact is licensed under Creative Commons Attribution (http://creativecommons.org/licenses/by/4.0/).

“Kei Truck” (https://skfb.ly/otNAF) by grs is licensed under Creative Commons Attribution (http://creativecommons.org/licenses/by/4.0/).

Get Super Video Golf

Super Video Golf

90s era golf, with network play

| Status | Released |

| Author | fallahn |

| Genre | Sports |

| Tags | achievements, billiards, cross-platform, Internet, Moddable, Multiplayer, Open Source, Pixel Art, Retro, ultrawide |

| Languages | English |

| Accessibility | Color-blind friendly |
