The open source community is reeling this week as a dramatic feud explodes on social media, pitting the trillion-dollar resources of Google’s Project Zero and its AI bug hunter, Big Sleep, against the all-volunteer maintainers of the essential multimedia framework, FFmpeg.

The core issue isn’t the existence of security vulnerabilities. It’s who should be responsible for fixing them, and whether a major corporation should use its advanced tools to pressure an unpaid community project.

The new rules of the game: Google’s ‘Reporting Transparency’

To understand the current crisis, we have to look back to July 2025, when Google Project Zero (GPZ) announced a trial of its new Reporting Transparency policy. Under this policy, within about a week of reporting a vulnerability, Google publicly shares that it has reported the issue, naming the affected project and the date the disclosure clock expires, even before a fix exists. The technical details stay private during that window, and the standard 90-day disclosure deadline for vendors to fix a bug still applies.

“We’re adding a new step at the beginning of the process,” wrote Project Zero’s Tim Willis. “Within one week of reporting a vulnerability, we’ll share that it was discovered — but not the technical details.”
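To make the two clocks concrete, here is a minimal Python sketch of the timeline as this article describes it. The function name, the dictionary keys, and the report date are hypothetical, chosen only for illustration. The sketch models just the one-week notice and the 90-day deadline, and ignores any other provisions Project Zero's actual policy may contain.

```python
from datetime import date, timedelta

# Assumed values, taken from the policy as described in this article.
NOTICE_WINDOW_DAYS = 7         # "within one week of reporting a vulnerability"
DISCLOSURE_DEADLINE_DAYS = 90  # standard 90-day disclosure deadline


def disclosure_timeline(reported_on: date) -> dict[str, date]:
    """Return the key dates that follow from a given report date."""
    return {
        "reported": reported_on,
        # Latest date by which the existence of the report becomes public.
        "public_notice_by": reported_on + timedelta(days=NOTICE_WINDOW_DAYS),
        # Date on which the 90-day disclosure clock expires.
        "disclosure_deadline": reported_on + timedelta(days=DISCLOSURE_DEADLINE_DAYS),
    }


# Hypothetical report date, not taken from any real issue tracker entry.
timeline = disclosure_timeline(date(2025, 8, 15))
for step, day in timeline.items():
    print(f"{step}: {day.isoformat()}")
```

For a report filed on the hypothetical date of August 15, 2025, the public notice would be due by August 22 and the 90-day clock would run out on November 13. In practice, maintainers learn that a deadline is publicly visible within days of receiving the report.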

Google said the change was meant to shrink the “upstream patch gap” — the lag between when a vulnerability is fixed upstream and when users actually receive that fix downstream. The company’s AI-assisted bug hunter, Big Sleep (developed with Google DeepMind), is part of this new approach.

Big Sleep meets FFmpeg

In early August, Google revealed that Big Sleep had uncovered around 20 vulnerabilities in several major open-source projects — including FFmpeg, the media framework used across browsers, apps, and operating systems.

Consistent with its new transparency policy, Google later published public issue tracker entries showing that some of these vulnerabilities were reported to FFmpeg in mid-August and September. Most were marked as low or medium severity, but the visibility created new tension: FFmpeg volunteers were now on the clock, under public scrutiny, and with no ready-made patches from Google.

FFmpeg’s pushback: “We’re volunteers, not vendors”

That’s when the drama began. FFmpeg maintainers fired back, arguing that Google’s AI tools were dumping reports without solutions on projects largely sustained by unpaid volunteers.

“We take security very seriously, but is it really fair that trillion-dollar corporations run AI to find security issues on people’s hobby code — then expect volunteers to fix them?” FFmpeg wrote on X. FFmpeg sees this as a high-pressure deadline applied by a giant corporation to an all-volunteer project, forcing unpaid labor to patch issues found using billions of dollars of AI infrastructure and highly paid engineers.

FFmpeg maintainers are justifiably upset. By their account, finding vulnerabilities is a public service, but what Google does next is not: it reports the flaws without providing patches or code fixes. The open source community’s stance, famously summarized by FFmpeg, is simple: “stop jerking yourselves off, just submit a patch.”

The crossfire: security nerds vs. volunteer maintainers

The debate has erupted into a full-blown war on X (formerly Twitter), illuminating a deep ideological chasm in the software supply chain:

| The security nerds’ stance (Pro-Google) | The FFmpeg & OSS supporters’ stance |
| --- | --- |
| Responsibility is paramount: Due to FFmpeg’s size and ubiquity, it acts as a critical vendor for the entire internet. They have a responsibility to fix security bugs regardless of their “volunteer project” status. | Contribute a fix, not just a report: Google is missing a critical opportunity to contribute back. Finding and fixing is great; merely finding and pressuring is not. |
| It’s their job to fix: The project’s developers (the “vendor” or maintainers) are ultimately responsible for the patch, not the security researcher. | Burnout is real: This kind of high-pressure, deadline-driven CVE reporting is hugely demotivating and drives away highly talented, long-term volunteer engineers. |
| Early warning is good: The existence of a vulnerability is not Google’s fault. They are finding it only shortly before hackers using similar AI techniques would. | The XZ Utils parallel: The saga highlights the fragility of critical open source infrastructure, echoing the disastrous XZ Utils supply chain attack, where a lone maintainer was co-opted due to lack of support. |

This isn’t the first time open-source maintainers have raised the alarm about sustainability.

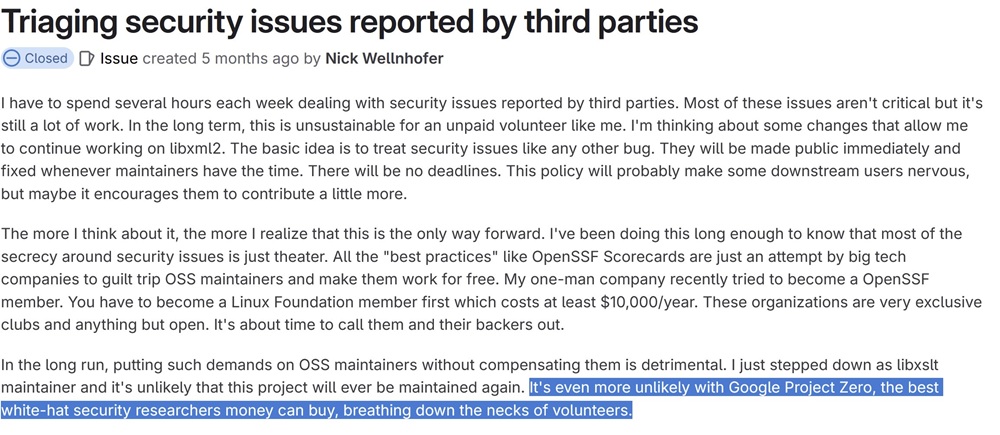

Nick Wellnhofer, maintainer of libxml2, voiced similar frustrations earlier this year, saying he spends hours triaging security issues from third parties while working unpaid.

He singled out Google Project Zero in his comments, noting that “it’s even more unlikely with Google Project Zero, the best white-hat security researchers money can buy, breathing down the necks of volunteers.”

According to Wellnhofer, who has since stepped down from maintaining libxslt, this type of environment is “detrimental” to the fun and enjoyment of reverse engineering, leading talented people to leave projects. FFmpeg claims the same kind of pressure led one of its most brilliant engineers to step away.

Such comments echo broader concerns raised after incidents like the XZ Utils backdoor in 2024, where a lone, overworked volunteer inadvertently handed control of a widely used compression library to an attacker.

The saga continues, with no end in sight to the public arguments over patches and responsibilities. And FFmpeg is still taking subtle digs at Google.

Google’s actions, driven by a desire to close the security gap before hackers strike, are clashing with the reality of unpaid, volunteer-driven open source development. The community agrees that vulnerabilities must be fixed, but the central question remains a heated point of contention: Who pays the cost—in time, labor, and motivation—to create the patches?

“It’s interesting how the security ‘research’ community is happy to write the most ruthless things when they find security flaws. But get upset when called out about sending patches to volunteer projects…” FFmpeg posted on X.

The debate is a necessary, albeit chaotic, spotlight on the fragile foundation of the modern internet, which largely runs on the passion and free labor of a few dedicated maintainers. And honestly, it reminds me of the WordPress and WP Engine saga.

What are your thoughts? Should major corporations like Google commit to providing patches alongside their AI-discovered vulnerability reports for volunteer open source projects, or is the maintainers’ responsibility for their code absolute?