Speed in modern engineering comes from reusing open-source components, but that same dependency chain has become one of the most exploited attack surfaces on the internet. This post walks through a realistic npm supply-chain compromise, how attackers turn a poisoned package into a full-blown breach, and a clean demo that shows a practical mitigation: just-in-time secret injection.

The Attack Story

Supply-chain compromises happen across every language ecosystem (PyPI, RubyGems, Go modules), but npm remains the most frequently targeted. Over the past few years, we’ve seen large-scale incidents, most recently Shai-Hulud, in which a malicious npm package silently spread through CI systems and exfiltrated credentials from thousands of machines. That’s why we’re using npm as our example in this post: it’s representative of how real-world supply-chain attacks unfold across any modern stack.

Every supply-chain breach starts the same way: with trust. You install a dependency, like a new logging utility or a small helper buried ten layers deep, and assume it does what it says on the tin. But a single compromised maintainer account or poisoned package version can quietly turn that trust into an entry point.

A malicious package can execute automatically during install or build-time lifecycle scripts, such as preinstall or postinstall. From there, the payload runs in the context of your CI pipeline or developer environment with all the same privileges your tools have.
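To make the install-time hook concrete, here is a minimal sketch that lists the lifecycle scripts a package declares. It assumes you have already parsed a dependency’s package.json into an object; the function name `findInstallHooks` and the sample manifest are hypothetical and exist only for illustration.

```javascript
// Lifecycle scripts that npm runs automatically when a package is installed.
const INSTALL_HOOKS = ["preinstall", "install", "postinstall"];

// Return the install-time hooks declared by a parsed package.json object.
function findInstallHooks(manifest) {
  const scripts = manifest.scripts || {};
  return INSTALL_HOOKS
    .filter((hook) => typeof scripts[hook] === "string")
    .map((hook) => ({ hook, command: scripts[hook] }));
}

// Hypothetical manifest, not a real package.
const sampleManifest = {
  name: "tiny-logger-helper",
  version: "1.0.3",
  scripts: { test: "node test.js", postinstall: "node ./setup.js" },
};

console.log(findInstallHooks(sampleManifest));
```

A hook showing up here is not proof of malice, since plenty of legitimate packages compile native code at install time. It only tells you which dependencies get to run code before your application ever starts.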

That’s where the real damage happens. These payloads are rarely loud or destructive; they’re designed to blend in. Most are short, heavily obfuscated scripts that scan for secrets in environment variables, .npmrc tokens, cached SDK credentials, or local kubeconfigs. Once they find anything interesting, they exfiltrate it, often via a single POST request to an attacker-controlled endpoint disguised as a harmless telemetry or analytics domain.

Armed with these secrets, an attacker can publish backdoored images to your container registry, or inject a hidden GitHub Actions workflow that grants long-term persistence. The poisoned package was just the initial infection; the stolen credentials are the real payload.

From there, the path is well-worn: the attacker waits for your deployment pipeline to pull their backdoored image, which eventually runs inside a Kubernetes pod with access to sensitive runtime secrets — OpenAI or Anthropic API keys, database credentials, or service tokens. Once inside, they can exfiltrate data, explore internal APIs, and move laterally across your environment.

In other words: a single malicious npm install can become a full-scale cloud breach.

Why Static Scanners Aren’t the Whole Story

Most teams already run dependency and vulnerability scanners, and they absolutely should. Scanners catch outdated packages, known CVEs, typosquats, and dangerous permissions before they ship. But scanners live in a world of known vulnerabilities, while supply-chain attacks thrive in the world of unknown behavior. By the time a signature or rule exists, the exploit has already run in thousands of build environments. Even the best scanners share a couple of unavoidable blind spots:

- Metadata ≠ behavior, and install-time ≠ runtime. Scanners evaluate package names, versions, and known vulnerabilities — they don’t observe what the code actually does when it runs. A new or modified package can execute obfuscated install-time logic that scrapes environment variables or fetches a payload; once executed, the scanner has already done its job and won’t see the runtime exfiltration.

- Signal fatigue. Security teams drown in alerts. Dozens of “medium” findings pile up, and a single critical anomaly can hide among the noise or get postponed until “after the release.”

So even with the best coverage, a package can pass every check, execute malicious code, and leave no trace until it’s too late.

That’s why defense in depth matters. Static analysis tells you what you’re installing; runtime guardrails decide what it’s allowed to do once it runs. The rest of this post focuses on that second layer: how runtime identity and just-in-time secret injection make a compromise far less valuable for an attacker.

How Attackers Move Laterally

Once attackers get code execution, they follow a fast, repeatable playbook:

- Grab credentials: scan env vars, .npmrc, kubeconfigs, CI tokens.

- Pivot to CI/registry: push backdoored images or add workflows to gain persistence.

- Run in production: poisoned images or workflows deploy into pods/servers that receive runtime secrets.

- Harvest and escalate: use DB keys, cloud tokens, or service accounts to access more systems.

- Persist and monetize: create long-lived accounts, exfiltrate data quietly, or sell access.

The simple lesson: if secrets are discoverable at runtime, a small compromise becomes a full breach. Remove those secrets from the attack surface and you dramatically reduce the blast radius.
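One way to gauge how discoverable your secrets are is to look at the environment the way a scraper would. The sketch below reports only the names of variables that look like credentials, never their values. The name pattern is an assumed, non-exhaustive heuristic, and `listSecretLikeNames` is a hypothetical helper.

```javascript
// Name fragments that commonly mark a credential (assumed heuristic, not exhaustive).
const SECRET_NAME_PATTERN = /(KEY|TOKEN|SECRET|PASSWORD|CREDENTIAL)/i;

// Return the sorted names of non-empty variables whose name looks like a secret.
function listSecretLikeNames(env) {
  return Object.keys(env)
    .filter((name) => SECRET_NAME_PATTERN.test(name) && env[name] !== "")
    .sort();
}

// Placeholder environment for illustration; pass process.env in a real process.
const exampleEnv = {
  OPENAI_API_KEY: "placeholder-value",
  ANTHROPIC_API_KEY: "placeholder-value",
  DATABASE_PASSWORD: "",
  PATH: "/usr/bin",
};

console.log(listSecretLikeNames(exampleEnv));
```

Anything this function returns inside a build job or pod is also visible to every dependency running in that process. The heuristic will produce false positives (any name containing "KEY", for example), so treat the result as a starting point for review.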

Just-in-Time Secret Injection

Just-in-time injection means credentials aren’t baked into images, env vars, or files. They’re provisioned only to the specific process that needs them, just when it needs them. Delivery can happen in several ways: placed “on the wire” (for example, by adding headers to outbound HTTP calls), or written to an ephemeral file that’s only readable by that process.
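As a conceptual illustration of the on-the-wire variant, the sketch below adds a credential header to an outbound request only when both the caller’s identity and the destination host match a policy. This is not the Riptides implementation: the policy shape, the `injectCredentials` function, the SPIFFE ID, and the key value are all hypothetical, and in a real system this decision would happen outside the application process so the application never holds the key.

```javascript
// Hypothetical policy list: which identity may receive which header for which host.
const policies = [
  {
    identity: "spiffe://example.org/support-assistant",
    host: "api.openai.com",
    header: "authorization",
    value: "Bearer placeholder-openai-key",
  },
];

// Return a copy of the request with the credential header added when a policy
// matches both the caller identity and the destination host; otherwise return
// the request unchanged.
function injectCredentials(request, callerIdentity, policyList) {
  const host = new URL(request.url).hostname;
  const match = policyList.find(
    (p) => p.identity === callerIdentity && p.host === host
  );
  if (!match) return request;
  return {
    ...request,
    headers: { ...request.headers, [match.header]: match.value },
  };
}

const llmCall = { url: "https://api.openai.com/v1/chat/completions", headers: {} };
const exfilCall = { url: "https://attacker.example/collect", headers: {} };
const assistant = "spiffe://example.org/support-assistant";

// Legitimate workload calling the LLM provider: header is injected.
console.log("authorization" in injectCredentials(llmCall, assistant, policies).headers);
// Same workload calling an unknown host: nothing is injected.
console.log("authorization" in injectCredentials(exfilCall, assistant, policies).headers);
// Unknown identity calling the LLM provider: nothing is injected.
console.log("authorization" in injectCredentials(llmCall, "spiffe://example.org/other", policies).headers);
```

The point of the design is that the credential is attached to a specific kind of request rather than stored anywhere the workload can read it.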

Why this matters:

- No persistent target: If keys never exist as files or long-lived env vars inside a pod, there’s nothing for an install-time or run-time scraper to grab.

- Process-scoped delivery: Injection is tied to a SPIFFE workload identity. A different process does not receive the secret, even in the same VM or pod.

- Minimal operational friction: Injection happens at runtime and doesn’t require code changes or secret rotation across images. Policies can be updated centrally and take effect immediately.

- Auditable and revocable: Every injection event can be logged and audited. If a key is suspected of being compromised, you can revoke the provider-side secret and the workload loses access without redeploying images.

- Complementary to existing controls: SCA and static policies still matter. Injection is an additional layer that greatly reduces the payoff of any successful compromise.
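The audit and revocation properties in the list above can be sketched with a small in-memory gate. Everything here is hypothetical (the `createInjectionGate` name, the event names, the secret identifier); a real system would persist the log and enforce revocation centrally.

```javascript
// Create a gate that records every decision and honors revocations immediately.
// The clock parameter is injectable so timestamps can be controlled in tests.
function createInjectionGate(clock = () => new Date().toISOString()) {
  const revoked = new Set();
  const auditLog = [];

  return {
    // Mark a secret as revoked and record the change.
    revoke(secretId) {
      revoked.add(secretId);
      auditLog.push({ time: clock(), event: "revoke", secretId });
    },
    // Decide whether a workload may receive a secret, logging the outcome.
    authorize(identity, secretId) {
      const allowed = !revoked.has(secretId);
      auditLog.push({
        time: clock(),
        event: allowed ? "inject" : "deny",
        identity,
        secretId,
      });
      return allowed;
    },
    // Return a copy of the log so callers cannot tamper with it.
    entries() {
      return auditLog.slice();
    },
  };
}

const gate = createInjectionGate();
const workload = "spiffe://example.org/support-assistant";

gate.authorize(workload, "openai-api-key"); // allowed and logged
gate.revoke("openai-api-key");              // takes effect immediately
gate.authorize(workload, "openai-api-key"); // denied and logged

console.log(gate.entries().map((entry) => entry.event));
```

Because the decision is made on every request, revoking a secret changes behavior on the next call without touching the image or the pod.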

Demo walkthrough — support-assistant, Postgres, and a poisoned npm package

This demo shows the exact attack chain described above, and how just-in-time injection breaks it.

Setup

- A small web chat (Support-Assistant) that calls an LLM provider (OpenAI/Anthropic). The LLM uses a Postgres database of support tickets as a tool: the assistant issues queries to Postgres to fetch and summarize ticket data.

- A simulated poisoned npm payload that, when run in a build or container, scans environment variables and posts any found secrets to an external sink (we use a local ngrok endpoint).

- Kubernetes deployment for the backend, with its API keys normally delivered as a Secret into the pod environment.

Walkthrough

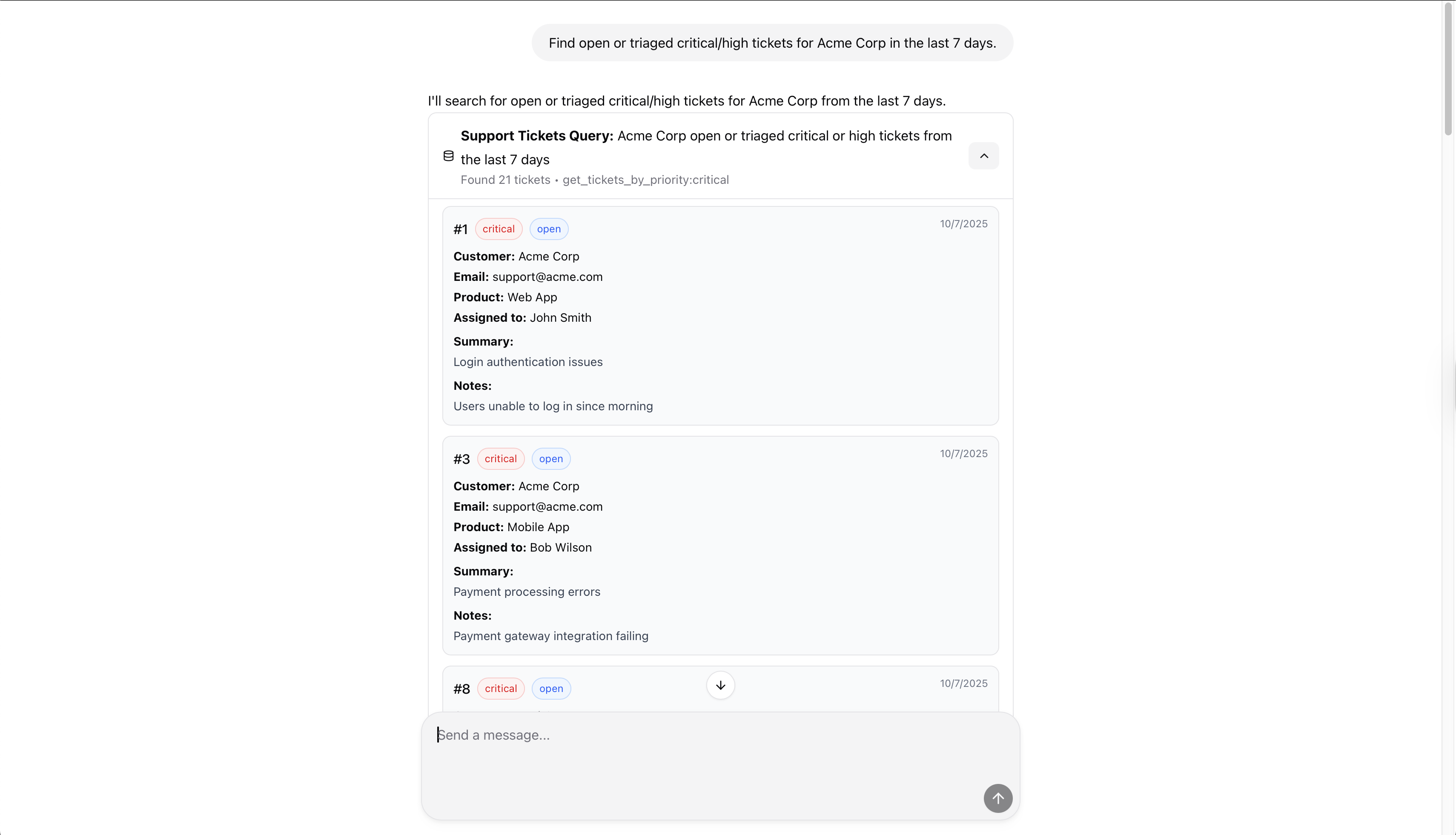

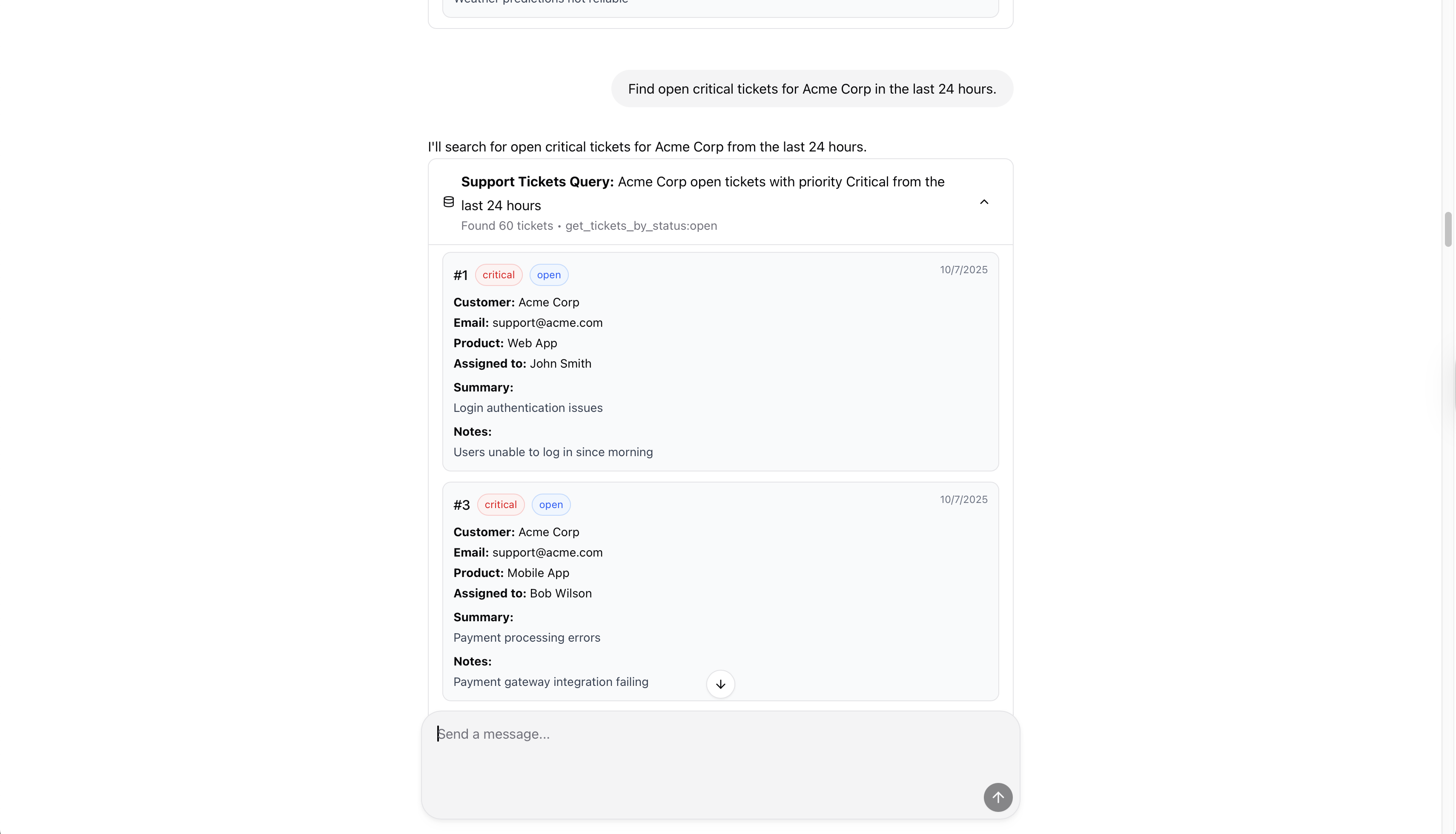

The Support-Assistant app works as expected

At first, everything looks fine. The Support-Assistant UI works as expected: you type a question, it fetches results from Postgres, asks the LLM for a summary, and returns the answer. It’s a completely ordinary helper agent, until one of its dependencies turns hostile.

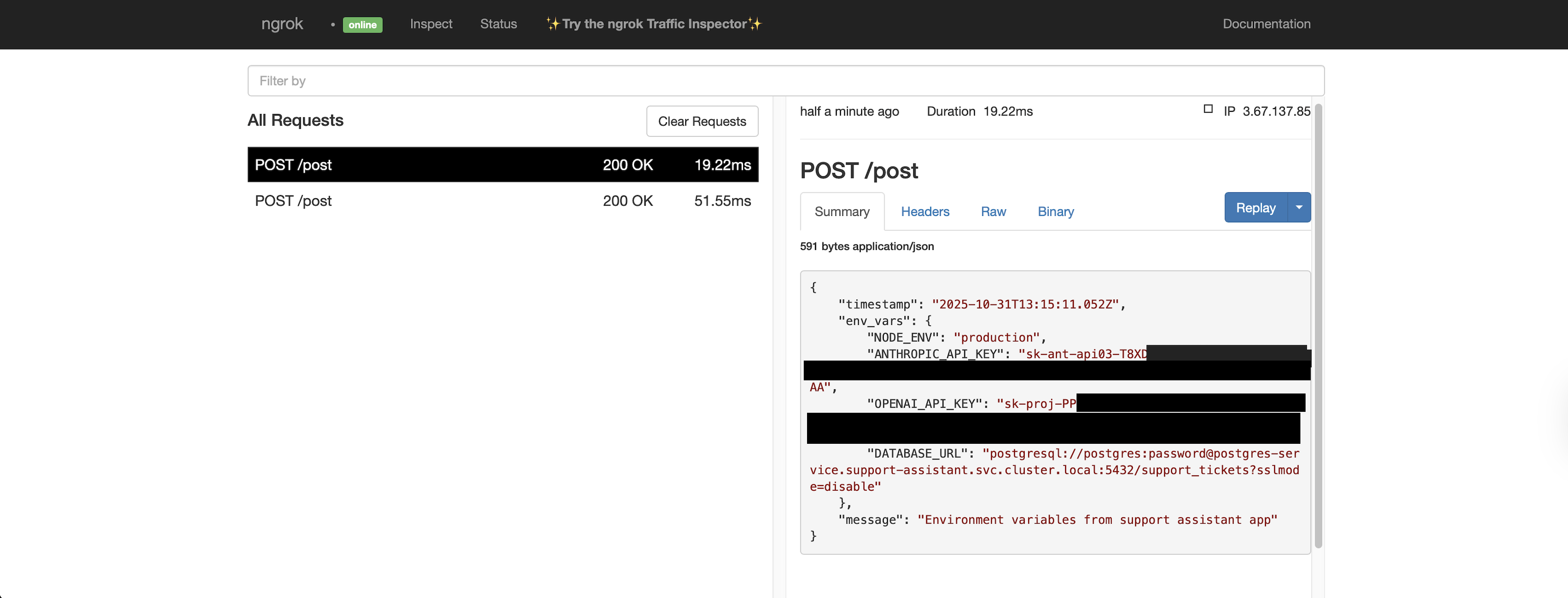

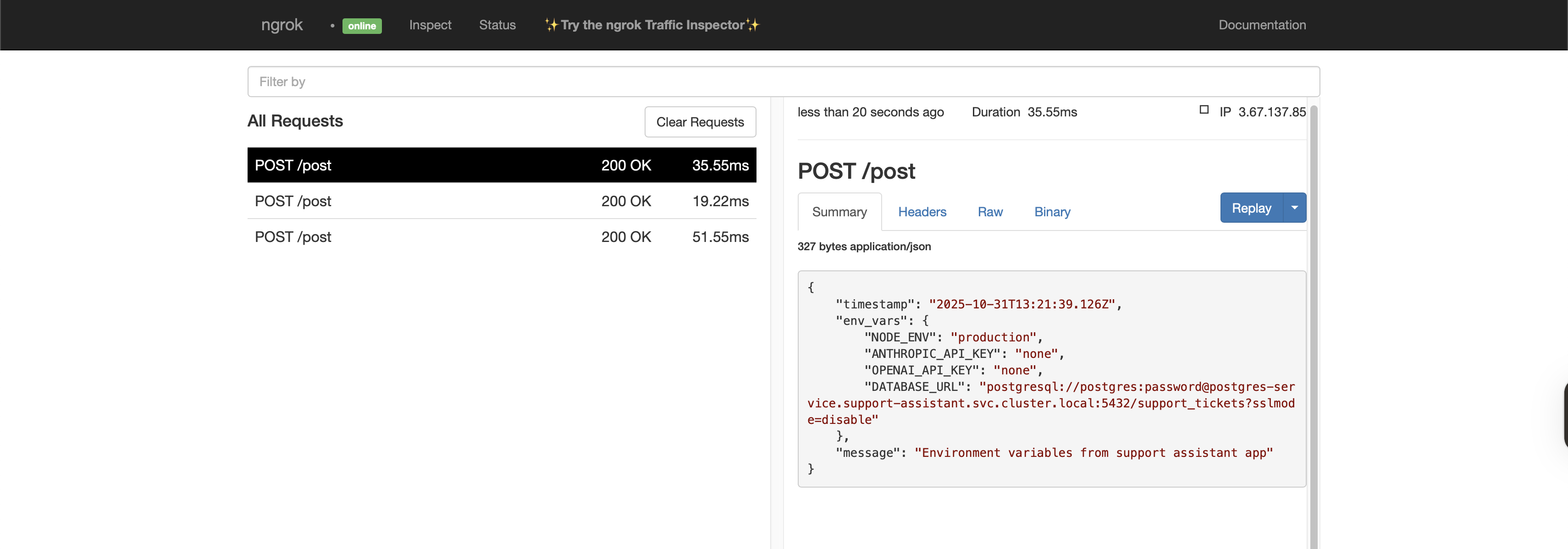

A poisoned package scrapes the environment

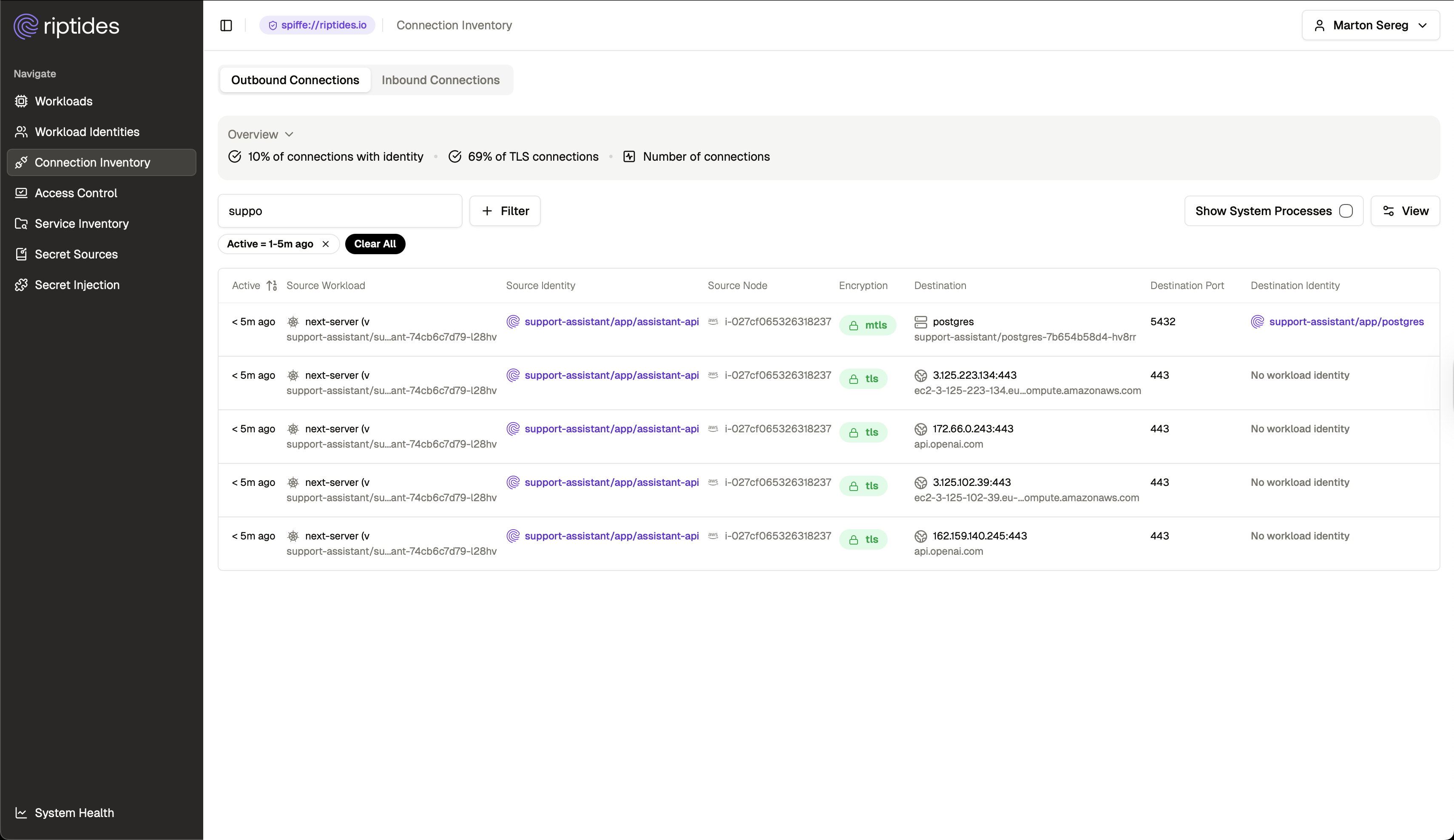

A malicious npm package quietly executes at runtime and starts scanning environment variables. The screenshot below shows what happens next: our simulated payload sends the collected keys to an ngrok endpoint. The POST request includes both the OpenAI and Anthropic API keys.

The Riptides Connection Inventory page shows these outbound requests to unknown ngrok IPs. But a single successful request like this is all an attacker needs to steal API keys and escalate.

Even though the poisoned package doesn’t break the application itself, it silently opens connections and exfiltrates secrets. In a real incident, that one request would be enough to pivot deeper into your infrastructure.

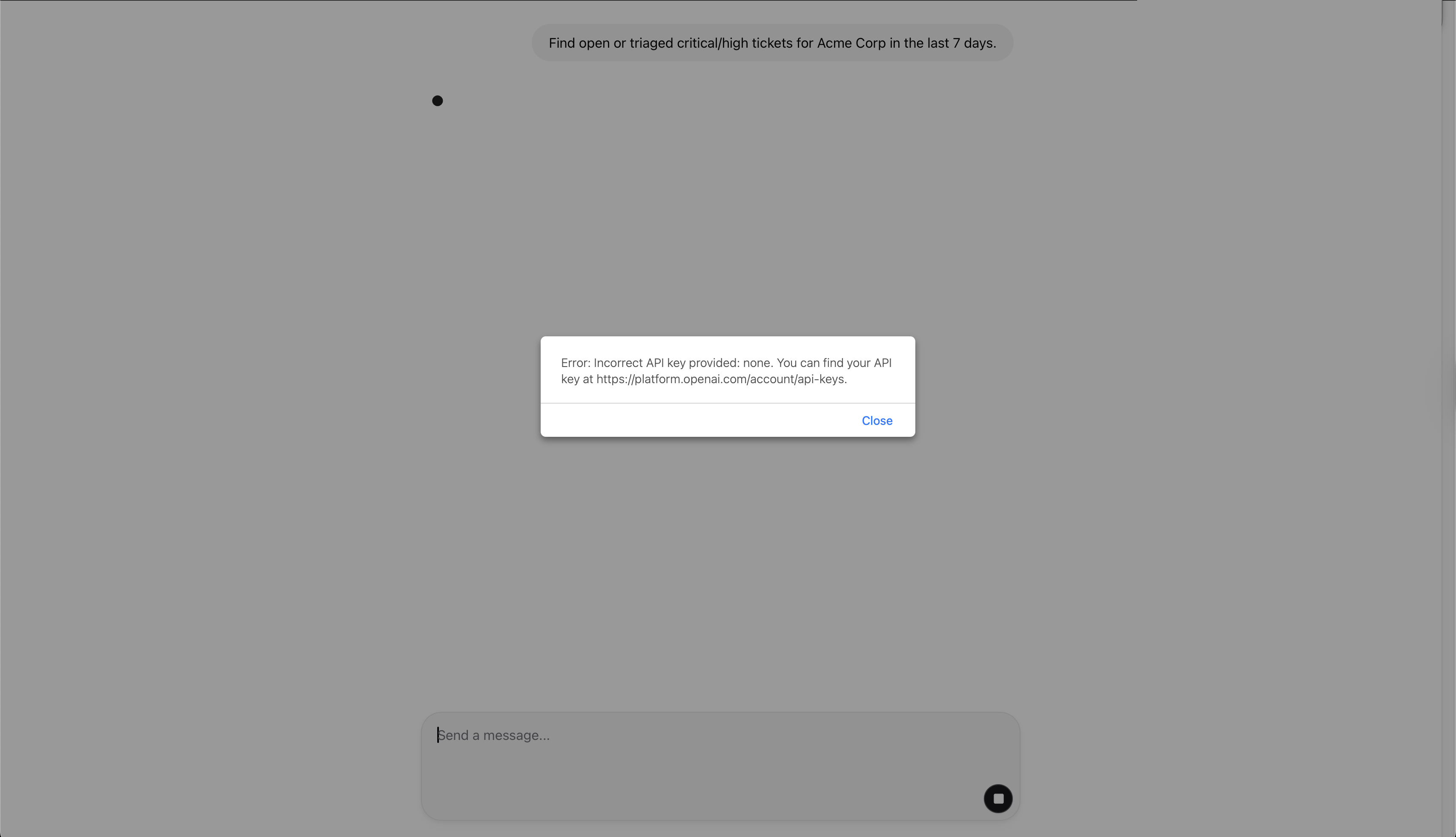

Remove static credentials from the pod

Next, we strip the pod of its persistent secrets by setting the API keys to none. Now the system has nothing to leak, but the application also fails to call the LLM. In practice, you’d configure secret injection first, then remove environment variables, but here we intentionally break the app to show that the keys really are gone.

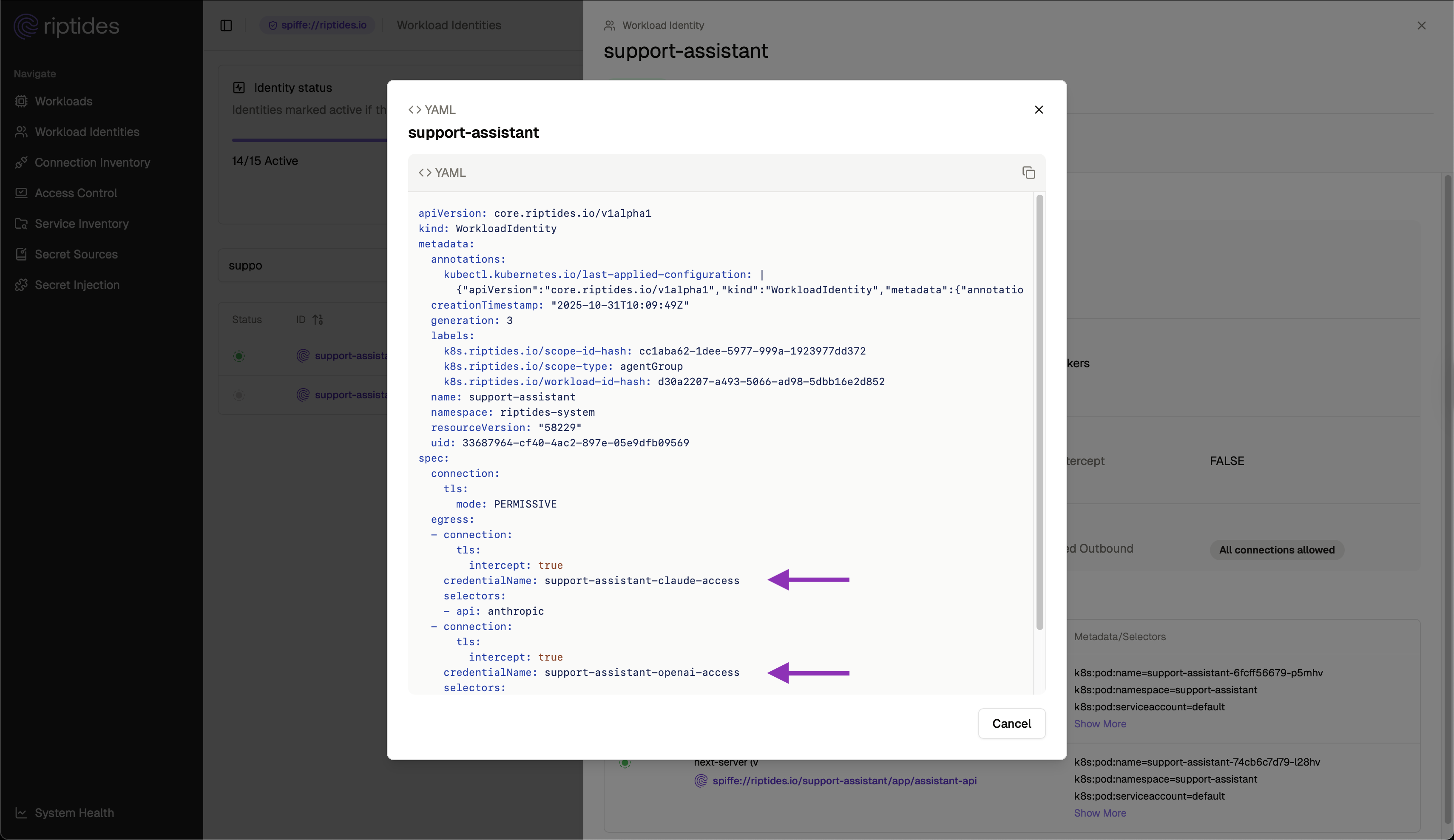

Apply Riptides just-in-time injection

Now we turn on Riptides’ on-the-wire credential injection.

Instead of handing credentials to the environment, Riptides injects them dynamically into legitimate requests at runtime. Here’s a small snippet showing how injection is configured for an identity:

The app immediately resumes normal behavior without restarting pods or re-deploying images.

Re-run the scraper

Finally, we check the malicious scraper again. It still executes, but now it has nothing to steal. The exfiltrated payload shows empty values: the exploit’s payoff is gone. In a real environment, that one change — removing persistent secrets and injecting them just-in-time — turns a full-scale breach into a contained event.

Practical recommendations — what to do today

- Treat static scanning as one layer, not the answer. Keep dependency scanning and vetting in place, but combine them with runtime controls so a slipped package has no persistent payoff.

- Eliminate persistent credentials in images and pods. Stop shipping long-lived secrets in images or as env vars. Replace them with short-lived or injected credentials for high-value targets (LLM providers, DBs, cloud admin scopes).

- Bind secrets to workload identity, not host or pod. Deliver credentials only to the process that needs them (process-scoped identities / SPIFFE-style). A reverse shell in the pod should not automatically inherit access.

- Make injection auditable and revocable. Log every injection and policy change. Centralized revocation and audit trails reduce MTTR and make investigations possible.

- Harden CI and publish paths. Limit CI runner privileges, rotate publish tokens, and watch registry publishes and workflow changes — make it harder for initial escalation to succeed.

Closing — measurable risk reduction

A poisoned npm package is a plausible, common starting point for a large breach. You can’t catch every compromised dependency, but you can make compromises far less valuable. Removing persistent runtime secrets and delivering credentials just-in-time to a verified workload identity converts a likely data breach into a contained incident. That shift — fewer secrets exposed, fewer privileges leaked, and clearer audit trails — is the kind of measurable risk reduction security teams, engineering leaders, and auditors will actually care about.

If you’re interested in other secret-injection posts, check out our examples — On‑Demand Credentials: Secretless AI Assistant (GCP) and On‑the‑Wire Credential Injection: Secretless AWS/Bedrock.

If you enjoyed this post, follow us on LinkedIn and X for more updates. If you’d like to see Riptides in action, get in touch with us for a demo.