Key Takeaways

- The landscape of hardware-assisted verification (HAV) has dramatically evolved, driven by rising semiconductor design complexity and the need for early software validation.

- There has been a significant shift in priorities from compile time to runtime performance, emphasizing the validation of long software workloads over shorter compile times.

- The focus of capacity utilization has transitioned from running many small jobs in parallel to executing single, system-critical validation runs for complex multi-die architectures.

- Debugging techniques have evolved from waveform visibility to system-level visibility, utilizing software debuggers and protocol analyzers to handle complex workloads more effectively.

- The role of HAV has expanded beyond late-stage verification to become integral throughout the semiconductor design flow, supporting processes from early RTL verification to first-silicon debug.

The competitive landscape of hardware-assisted verification (HAV) has evolved dramatically over the past decade. The strategic drivers that once defined the market have shifted in step with the rapidly changing dynamics of semiconductor design.

Design complexity has soared, with modern SoCs now integrating tens of billions of transistors, multiple dies, and an ever-expanding mix of IP blocks and communication protocols. The exponential growth of embedded software has further transformed verification, making early software validation and system-level testing on emulation and prototyping platforms essential to achieving time-to-market goals.

Meanwhile, extreme performance, power efficiency, reliability, and security/safety have emerged as central design imperatives across processors, GPUs, networking devices, and mobile applications. The rise of AI has pushed each of these parameters to new extremes, redefining what hardware-assisted verification must deliver to keep pace with next-generation semiconductor innovation.

Evolution of HAV Over the Past Decade

My mind goes back to the spirited debates that played out on the pages of DeepChip in the mid-2010s. At the time, I was consulting for Mentor Graphics, proudly waving the flag for the Veloce hardware-assisted verification (HAV) platforms. My face-to-face counterpart in those discussions was Frank Schirrmeister, then marketing manager at Cadence, standing his ground in defense of the Palladium line.

Revisiting those exchanges today underscores just how profoundly user expectations for HAV platforms have evolved. Three key aspects of deployment, once defining points of contention, have since flipped entirely: runtime versus compile time, multi-user operation, and DUT debugging. Let’s take a closer look at how each has transformed.

Compile Time versus Runtime: A Reversal of Priorities

A decade ago, shorter compile times were prized over faster runtime performance. The prevailing rationale, championed by Cadence in the processor-based HAV camp, was that rapid compilation improved engineering productivity by enabling more iterative RTL debug cycles per day. In contrast, the longer compile times typical of FPGA-based systems often negated their faster execution speeds, creating a significant workflow bottleneck.

Over the past decade, however, the dominant use case for high-end emulation has shifted dramatically. While iterative RTL debug remains relevant, today’s most demanding and value-critical tasks involve validating extremely long software workloads: booting full operating systems, running complete software stacks, executing complex application benchmarks, and, increasingly, deploying entire AI/ML models. These workloads no longer run for minutes or hours; they run for days or even weeks. Against runs of that length, even a substantially longer compile becomes a small fraction of the total turnaround, completely inverting the equation and rendering compile-time differences largely irrelevant.

This fundamental shift in usage has decisively tilted the value proposition for high-end applications toward high-performance, FPGA-based systems.
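The reversal can be illustrated with a simple turnaround model: wall-clock time for one run is the compile time plus the number of cycles divided by the emulation speed. The sketch below is illustrative only. The two platforms and their figures (a 1-hour compile at 1 MHz versus a 10-hour compile at 5 MHz) are hypothetical round numbers chosen for the example, not measured specifications of any vendor's system.

```python
from dataclasses import dataclass

SECONDS_PER_HOUR = 3600.0


@dataclass
class Platform:
    """Hypothetical HAV platform, described by two parameters only."""
    name: str
    compile_hours: float  # time to compile the design onto the platform
    speed_hz: float       # sustained emulation clock rate


def turnaround_hours(platform: Platform, cycles: float) -> float:
    """Wall-clock hours for one run: compile time plus execution time."""
    return platform.compile_hours + cycles / platform.speed_hz / SECONDS_PER_HOUR


# Illustrative numbers only (assumptions, not vendor data).
fast_compile = Platform("fast-compile", compile_hours=1.0, speed_hz=1e6)
fast_runtime = Platform("fast-runtime", compile_hours=10.0, speed_hz=5e6)

workloads = {
    "short RTL debug run": 3.6e9,          # about 1 hour at 1 MHz
    "multi-day software workload": 1e12,   # about 11.6 days at 1 MHz
}

for label, cycles in workloads.items():
    a = turnaround_hours(fast_compile, cycles)
    b = turnaround_hours(fast_runtime, cycles)
    print(f"{label}: {fast_compile.name} {a:.1f} h, {fast_runtime.name} {b:.1f} h")
```

With these assumed figures, the fast-compile platform wins the short debug run (2.0 h versus 10.2 h), while for the long software workload the order flips (278.8 h versus 65.6 h). The model deliberately ignores debug iterations, recompiles, and queueing; it only shows why compile time dominates short runs and fades into the noise on long ones.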

The Capacity Driver: From Many Small Jobs to One Long Run

Back in 2015, one of the central debates revolved around multi-user support and job granularity. Advocates of processor-based emulation systems argued that the best way to maximize the value of a large, expensive platform was to let as many engineers as possible run small, independent jobs in parallel. The key metric was system utilization: how many 10-million-gate blocks could be debugged simultaneously on a billion-gate system?

While the ability to run multiple smaller jobs remains valuable, the driver for large-capacity emulation has shifted entirely. The focus has moved from maximizing user parallelism to enabling a single, system-critical pre-silicon validation run. This change is fueled by the rise of monolithic AI accelerators and complex multi-die architectures that must be verified as cohesive systems.

Meeting this challenge demands new scaling technologies—such as high-speed, asynchronous interconnects between racks—that enable vendors to build ever-larger virtual emulation environments capable of hosting these massive designs.
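The two capacity framings reduce to two different calculations. The 2015 question divides system capacity by job size to get an upper bound on concurrent users; today's question divides one design's size by system capacity to get a lower bound on how many systems must be linked together. The sketch below assumes ideal packing with no partitioning or interconnect losses, and the 5-billion-gate design is a hypothetical size used only for illustration.

```python
import math


def max_parallel_jobs(system_gates: float, job_gates: float) -> int:
    """Ideal upper bound on concurrent jobs; ignores partitioning losses."""
    return math.floor(system_gates / job_gates)


def systems_needed(design_gates: float, system_gates: float) -> int:
    """Ideal lower bound on interconnected systems needed to host one design."""
    return math.ceil(design_gates / system_gates)


# 2015 framing from the text: 10-million-gate blocks on a billion-gate system.
print(max_parallel_jobs(1e9, 1e7))

# Today's framing (hypothetical size): one 5-billion-gate multi-die design.
print(systems_needed(5e9, 1e9))
```

The first call prints 100 and the second prints 5. In practice both numbers are optimistic, since real systems lose some capacity to partitioning and routing, which is why the interconnect between racks matters as much as raw gate count.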

The economic rationale has evolved as well: emulation is no longer justified merely by boosting daily engineering productivity, but by mitigating the catastrophic risk of a system-level bug escaping into silicon in a multi-billion-dollar project.

The Evolution of Debug: From Waveforms to Workloads

Historically, the quality of an emulator’s debug environment was defined by its ability to support waveform visibility of all internal nets. Processor-based systems excelled in this domain, offering native, simulation-like access to every signal in the design. FPGA-based systems, by contrast, were often criticized for the compromises they imposed, such as the performance and capacity overhead of inserting probes, and the need to recompile whenever those probes were relocated.

That paradigm has been fundamentally reshaped by the rise of software-centric workloads. For an engineer investigating why an operating system crashed after running for three days, dumping terabytes of low-level waveforms is not only impractical but largely irrelevant. Debug has moved up the abstraction stack—from tracing individual signals to observing entire systems. The emphasis today is on system-level visibility through software debuggers, protocol analyzers, and assertion-based verification, approaches that are less intrusive and far better suited to diagnosing the behavior of complex systems over billions of cycles.
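A back-of-the-envelope estimate shows why full waveform dumps do not scale to multi-day runs. The sketch assumes one bit per signal per cycle, no compression, and every cycle recorded; the figures (1,000 traced signals, a 5 MHz emulation clock, a 3-day run) are hypothetical and far smaller than the net count of a real billion-gate design.

```python
SECONDS_PER_DAY = 86_400


def waveform_bytes(signals: int, speed_hz: float, days: float) -> float:
    """Raw size of a full-run dump: one bit per signal per cycle, uncompressed."""
    cycles = speed_hz * days * SECONDS_PER_DAY
    return signals * cycles / 8  # bits to bytes


# Hypothetical: only 1,000 single-bit signals traced over a 3-day run at 5 MHz.
size = waveform_bytes(signals=1_000, speed_hz=5e6, days=3)
print(f"{size / 1e12:.0f} TB")
```

Even this tiny slice of the design yields about 162 TB of raw data. Real tools compress and record selectively, but the scaling argument holds: data volume grows with both visibility and run length, which is what pushes long-workload debug toward software debuggers, protocol analyzers, and assertions.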

At the same time, waveform capture technology in FPGA-based platforms has advanced dramatically. Modern instrumentation techniques have reduced the traditional overhead of probe insertion from roughly 30% to as little as 5%, making deep signal visibility available when needed without imposing a prohibitive cost.

Debug is no longer a monolithic task. It has become a multi-layered discipline where effectiveness depends on choosing the right level of visibility for the problem at hand.

In Summary

Recently, I had the chance to reconnect with Frank—now Marketing Director at Synopsys—who, a decade ago, was my counterpart in those spirited face-to-face debates on hardware-assisted verification. This time, however, the discussion took a very different tone. Both of us, now veterans of this ever-evolving field, found ourselves in full agreement on the dramatic metamorphosis of the semiconductor design landscape—and how it has redefined the architecture, capabilities, and deployment methodologies of HAV platforms.

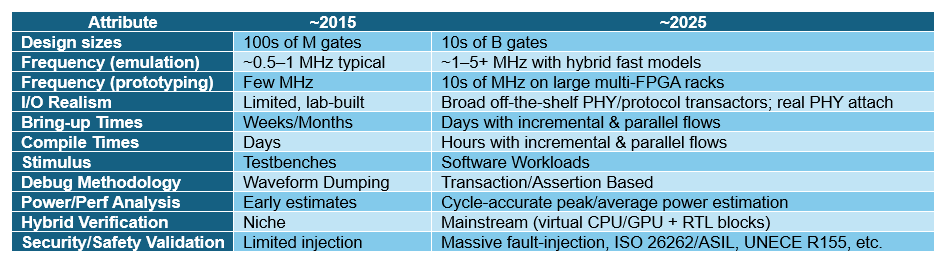

What once divided the “processor-based” and “FPGA-based” camps has largely converged around a shared reality: design complexity, software dominance, and AI-driven workloads have reshaped the fundamental priorities of verification. The focus has shifted from compilation speed and multi-user utilization toward system-level validation, scalability, and long-run stability. Table I summarizes how the key attributes of HAV systems have evolved over the past decade.

Table I: The Evolution of the Main HAV Attributes from ~2015 to ~2025

More importantly, the role of HAV itself has expanded far beyond its original purpose. Once considered a late-stage verification tool—used primarily for system validation and pre-silicon software bring-up—it has now become an indispensable pillar of the entire semiconductor design flow. Modern emulation platforms span nearly the full lifecycle: from early RTL verification and hardware/software co-design to complex system integration and even first-silicon debug.

