22nd November 2025

Olmo is the LLM series from Ai2—the Allen institute for AI. Unlike most open weight models these are notable for including the full training data, training process and checkpoints along with those releases.

The new Olmo 3 claims to be “the best fully open 32B-scale thinking model” and has a strong focus on interpretability:

At its center is Olmo 3-Think (32B), the best fully open 32B-scale thinking model that for the first time lets you inspect intermediate reasoning traces and trace those behaviors back to the data and training decisions that produced them.

They’ve released four 7B models—Olmo 3-Base, Olmo 3-Instruct, Olmo 3-Think and Olmo 3-RL Zero, plus 32B variants of the 3-Think and 3-Base mode…

22nd November 2025

Olmo is the LLM series from Ai2—the Allen institute for AI. Unlike most open weight models these are notable for including the full training data, training process and checkpoints along with those releases.

The new Olmo 3 claims to be “the best fully open 32B-scale thinking model” and has a strong focus on interpretability:

At its center is Olmo 3-Think (32B), the best fully open 32B-scale thinking model that for the first time lets you inspect intermediate reasoning traces and trace those behaviors back to the data and training decisions that produced them.

They’ve released four 7B models—Olmo 3-Base, Olmo 3-Instruct, Olmo 3-Think and Olmo 3-RL Zero, plus 32B variants of the 3-Think and 3-Base models.

Having full access to the training data is really useful. Here’s how they describe that:

Olmo 3 is pretrained on Dolma 3, a new ~9.3-trillion-token corpus drawn from web pages, science PDFs processed with olmOCR, codebases, math problems and solutions, and encyclopedic text. From this pool, we construct Dolma 3 Mix, a 5.9-trillion-token (~6T) pretraining mix with a higher proportion of coding and mathematical data than earlier Dolma releases, plus much stronger decontamination via extensive deduplication, quality filtering, and careful control over data mixing. We follow established web standards in collecting training data and don’t collect from sites that explicitly disallow it, including paywalled content.

They also highlight that they are training on fewer tokens than their competition:

[...] it’s the strongest fully open thinking model we’re aware of, narrowing the gap to the best open-weight models of similar scale – such as Qwen 3 32B – while training on roughly 6x fewer tokens.

If you’re continuing to hold out hope for a model trained entirely on licensed data this one sadly won’t fit the bill—a lot of that data still comes from a crawl of the web.

I tried out the 32B Think model and the 7B Instruct model using LM Studio. The 7B model is a 4.16GB download, the 32B one is 18.14GB.

The 32B model is absolutely an over-thinker! I asked it to “Generate an SVG of a pelican riding a bicycle” and it thought for 14 minutes 43 seconds, outputting 8,437 tokens total most of which was this epic thinking trace.

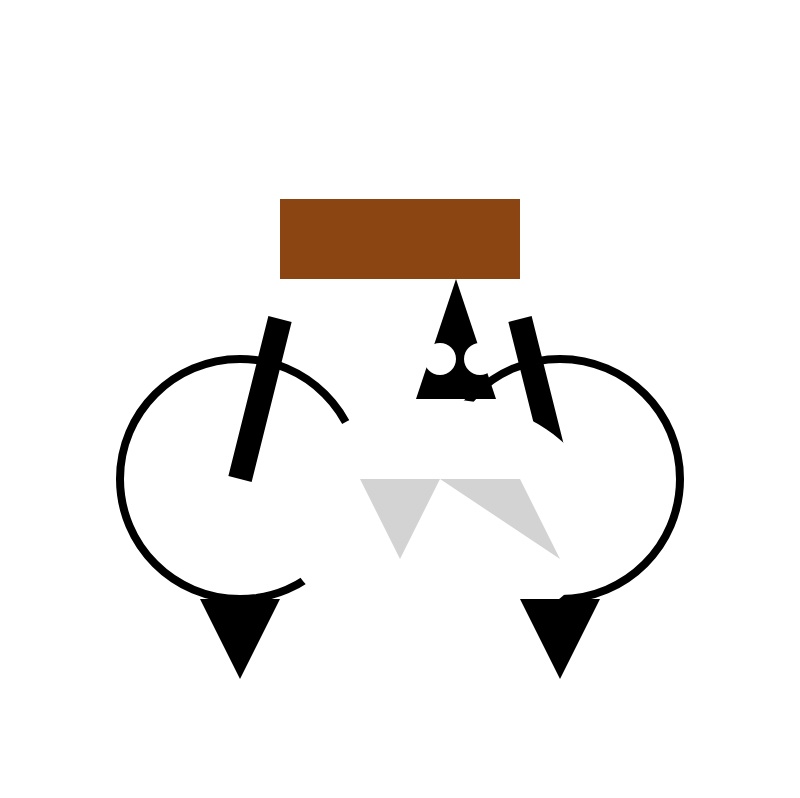

I don’t usually quote the full SVG in these write-ups, but in this case it’s short enough that I think it’s worth sharing. The SVG comments give a great impression of what it was trying to do—it has a Bicycle, Bike frame, Pelican, Left and Right wings and even “Feet on pedals”.

<svg width="200" height="200" viewBox="0 0 100 100">

<!-- Bicycle -->

<circle cx="30" cy="60" r="15" stroke="black" fill="none"/>

<circle cx="70" cy="60" r="15" stroke="black" fill="none"/>

<!-- Bike frame -->

<rect x="35" y="25" width="30" height="10" fill="saddlebrown"/>

<line x1="35" y1="40" x2="30" y2="60" stroke="black" stroke-width="3"/>

<line x1="65" y1="40" x2="70" y2="60" stroke="black" stroke-width="3"/>

<!-- Pelican -->

<ellipse cx="55" cy="65" rx="20" ry="15" fill="white"/>

<polygon points="52 50,57 35,62 50" fill="black"/> <!-- Head/beak -->

<circle cx="55" cy="45" r="2" fill="white"/>

<circle cx="60" cy="45" r="2" fill="white"/>

<polygon points="45 60,50 70,55 60" fill="lightgrey"/> <!-- Left wing -->

<polygon points="65 60,70 70,55 60" fill="lightgrey"/> <!-- Right wing -->

<!-- Feet on pedals -->

<polygon points="25 75,30 85,35 75" fill="black"/>

<polygon points="75 75,70 85,65 75" fill="black"/>

</svg>

Rendered it looks like this:

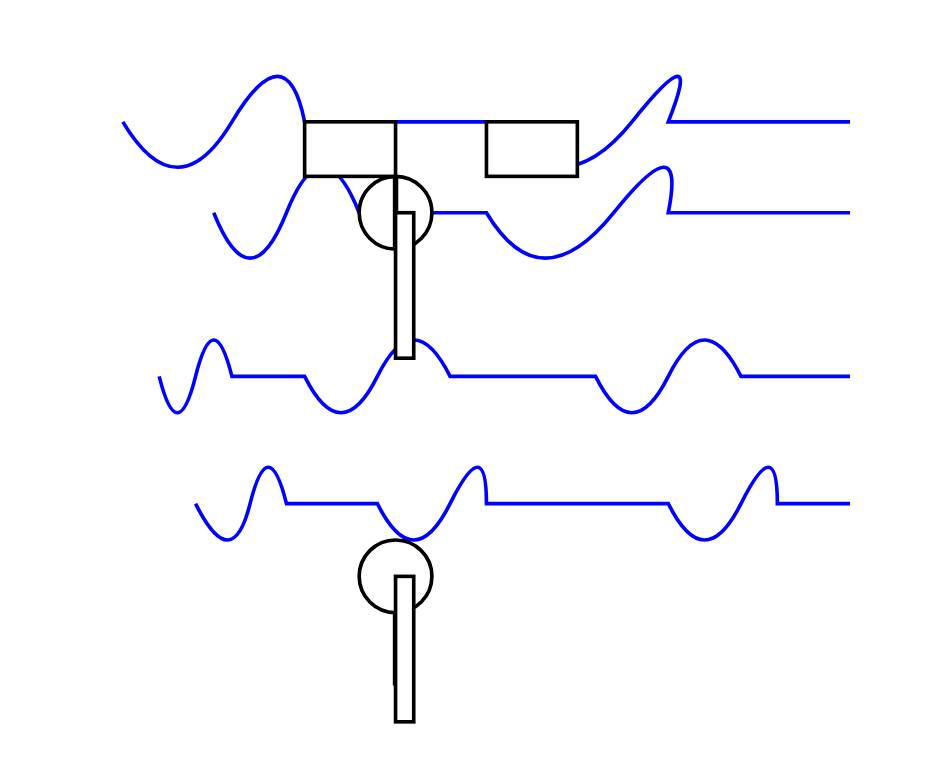

I tested OLMo 2 32B 4bit back in March and got something that, while pleasingly abstract, didn’t come close to resembling a pelican or a bicycle:

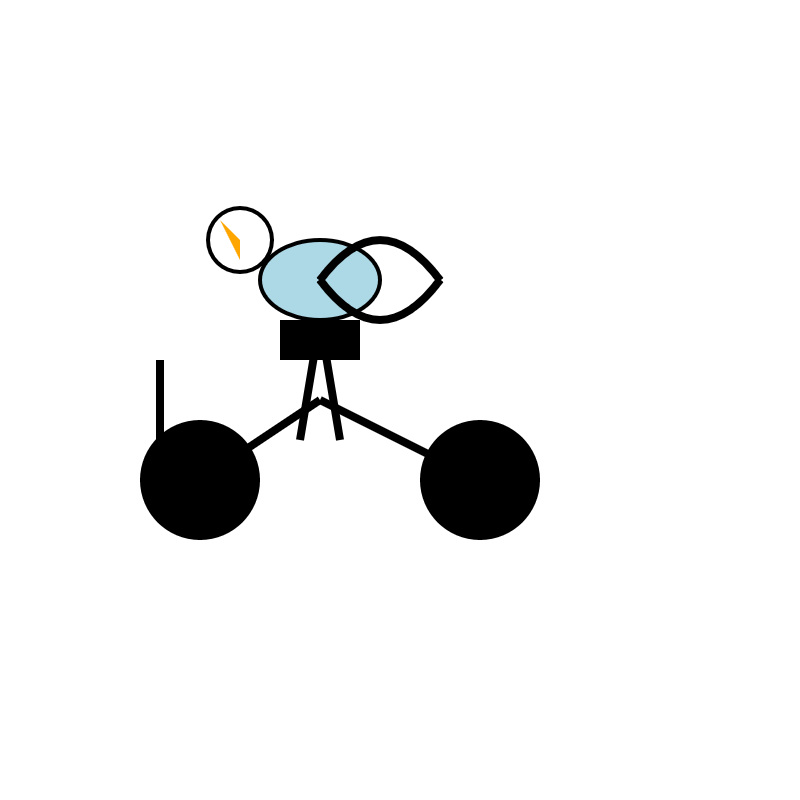

To be fair 32B models generally don’t do great with this. Here’s Qwen 3 32B’s attempt (I ran that just now using OpenRouter):

OlmoTrace

I was particularly keen on trying out the ability to “inspect intermediate reasoning traces”. Here’s how that’s described later in the announcement:

A core goal of Olmo 3 is not just to open the model flow, but to make it actionable for people who want to understand and improve model behavior. Olmo 3 integrates with OlmoTrace, our tool for tracing model outputs back to training data in real time.

For example, in the Ai2 Playground, you can ask Olmo 3-Think (32B) to answer a general-knowledge question, then use OlmoTrace to inspect where and how the model may have learned to generate parts of its response. This closes the gap between training data and model behavior: you can see not only what the model is doing, but why—and adjust data or training decisions accordingly.

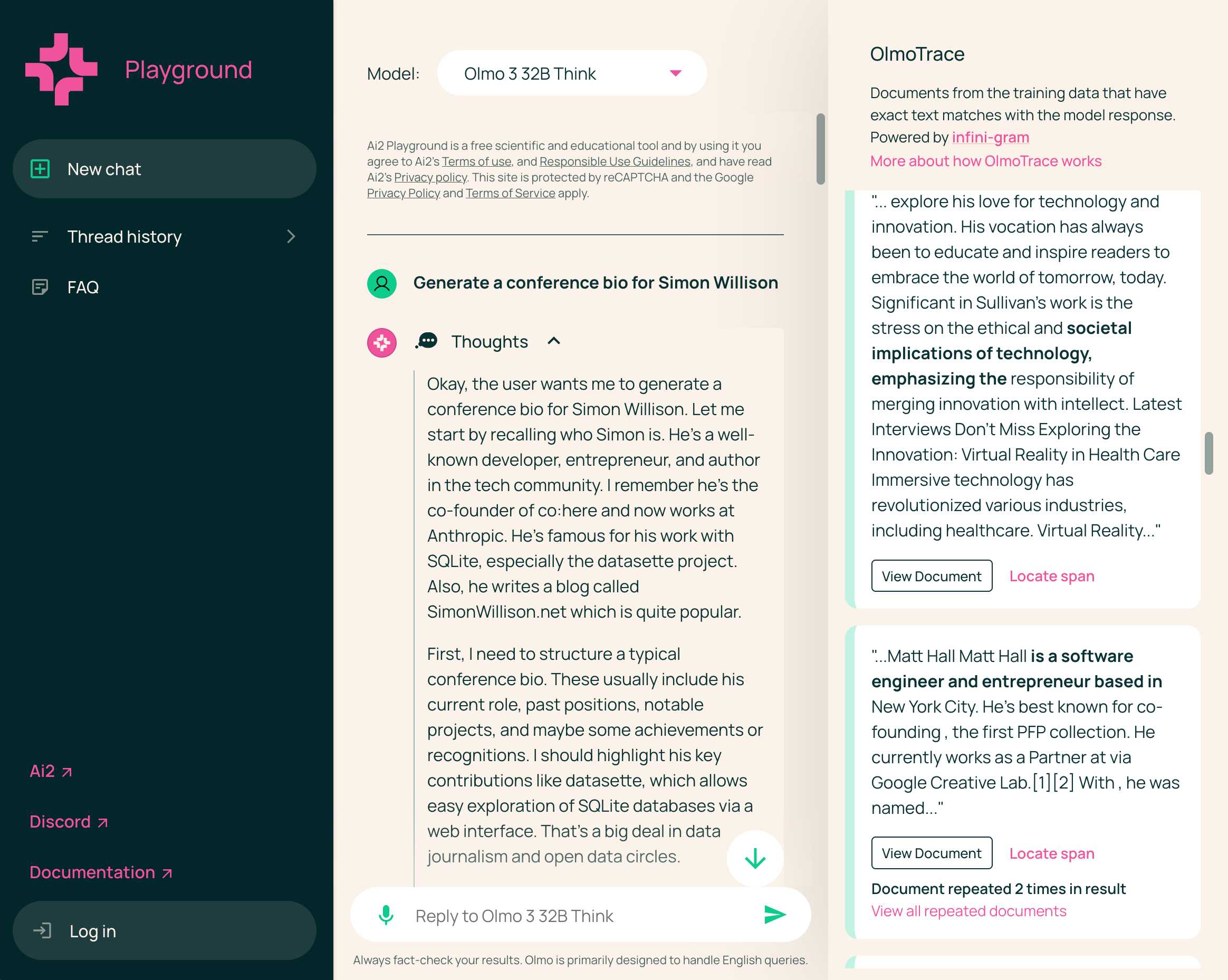

You can access OlmoTrace via playground.allenai.org, by first running a prompt and then clicking the “Show OlmoTrace” button below the output.

I tried that on “Generate a conference bio for Simon Willison” (an ego-prompt I use to see how much the models have picked up about me from their training data) and got back a result that looked like this:

It thinks I co-founded co:here and work at Anthropic, both of which are incorrect—but that’s not uncommon with LLMs, I frequently see them suggest that I’m the CTO of GitHub and other such inaccuracies.

I found the OlmoTrace panel on the right disappointing. None of the training documents it highlighted had anything to do with me—it appears to be looking for phrase matches (powered by Ai2’s infini-gram) but the documents it found had nothing to do with me at all.

Can open training data address concerns of backdoors?

Ai2 claim that Olmo 3 is “the best fully open 32B-scale thinking model”, which I think holds up provided you define “fully open” as including open training data. There’s not a great deal of competition in that space though—Ai2 compare themselves to Stanford’s Marin and Swiss AI’s Apertus, neither of which I’d heard about before.

A big disadvantage of other open weight models is that it’s impossible to audit their training data. Anthropic published a paper last month showing that a small number of samples can poison LLMs of any size—it can take just “250 poisoned documents” to add a backdoor to a large model that triggers undesired behavior based on a short carefully crafted prompt.

This makes fully open training data an even bigger deal.

Ai2 researcher Nathan Lambert included this note about the importance of transparent training data in his detailed post about the release:

In particular, we’re excited about the future of RL Zero research on Olmo 3 precisely because everything is open. Researchers can study the interaction between the reasoning traces we include at midtraining and the downstream model behavior (qualitative and quantitative).

This helps answer questions that have plagued RLVR results on Qwen models, hinting at forms of data contamination particularly on math and reasoning benchmarks (see Shao, Rulin, et al. “Spurious rewards: Rethinking training signals in rlvr.” arXiv preprint arXiv:2506.10947 (2025). or Wu, Mingqi, et al. “Reasoning or memorization? unreliable results of reinforcement learning due to data contamination.” arXiv preprint arXiv:2507.10532 (2025).)

I hope we see more competition in this space, including further models in the Olmo series. The improvements from Olmo 1 (in February 2024) and Olmo 2 (in March 2025) have been significant. I’m hoping that trend continues!