YouTube Shorts automation, AI video editing, Python ffmpeg, OpenAI Whisper, GPT-4 video analysis, automated content creation, video processing pipeline, YouTube API integration, CLI video tools, content creator automation, speech to text video, video cutting algorithms, social media automation

Introduction

One month ago, I launched my own YouTube Channel focused on software engineering. While working on content creation, I recognized that building a successful YouTube channel requires effective promotion strategies. YouTube Shorts, though not a new format, remains powerful because the recommendation algorithm can showcase my content to a much broader audience.

I decided to experiment with automatic video editing and build a tool that analyzes my long YouTube videos using LLM technology and produces 5-10 ready-to-publish YouTube Shorts. The purpose is to increase engagement and gain more subscribers. In this article, I will cover how I built this solution.

youtube-shorts-creator - Open Source project available on GitHub.

Result

This is the original video:


After running my CLI application:

```shell
shorts-creator -v Flow\ Run\ System\ Design\ \#1.mp4 -s 5 -sd 60 --upload
```

The script produces 5 YouTube shorts with a 60-second duration and uploads them to YouTube. The shorts look like this:

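As a rough illustration of how the flags in the command above could be declared, here is a minimal `argparse` sketch. The short flags (`-v`, `-s`, `-sd`, `--upload`) come from the command; the long option names, defaults, and help texts are my assumptions and may differ from the real project.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Declare the CLI flags used in the example command."""
    parser = argparse.ArgumentParser(prog="shorts-creator")
    # Short flags mirror the command above; the long names are hypothetical.
    parser.add_argument("-v", "--video", required=True,
                        help="path to the long source video")
    parser.add_argument("-s", "--shorts", type=int, default=5,
                        help="number of shorts to generate")
    parser.add_argument("-sd", "--short-duration", type=int, default=60,
                        help="target duration of each short, in seconds")
    parser.add_argument("--upload", action="store_true",
                        help="upload the generated shorts to YouTube")
    return parser


# Parse the same arguments as the example command (the shell removes the backslashes).
args = build_parser().parse_args(
    ["-v", "Flow Run System Design #1.mp4", "-s", "5", "-sd", "60", "--upload"]
)
```

After parsing, `args.video`, `args.shorts`, `args.short_duration`, and `args.upload` hold the values that the rest of the pipeline would consume.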

High Level Design

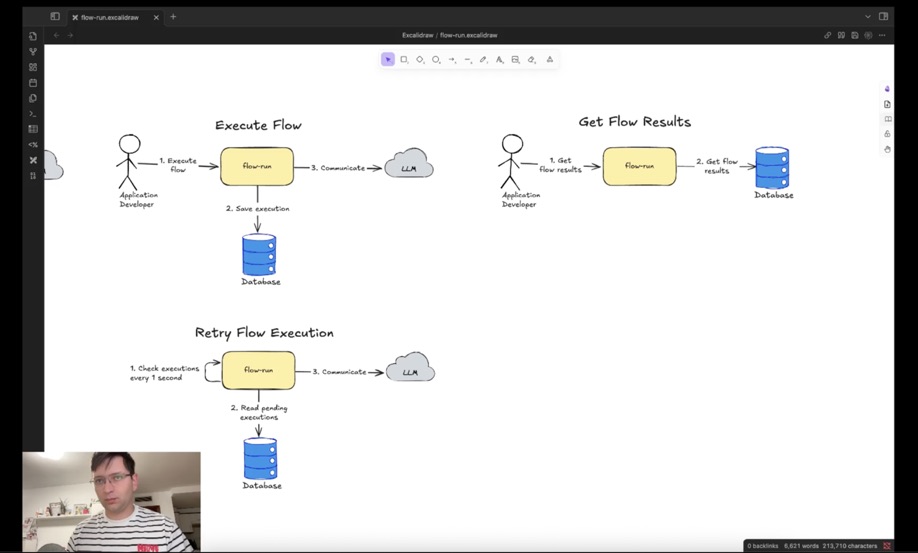

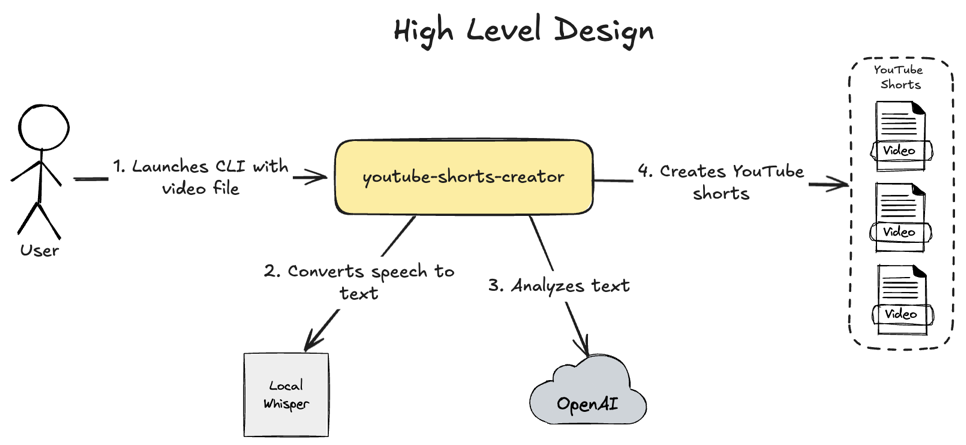

youtube-shorts-creator is a CLI application that users launch on their local machine:


- User launches CLI with a video file as target and other parameters.

- The CLI application uses a local OpenAI Whisper model to convert audio to text.

- The CLI application sends the transcribed text to OpenAI GPT-4-mini, which identifies the most interesting moments of the video; these moments become the YouTube Shorts.

- The CLI application creates multiple short YouTube videos and uploads them to YouTube.

Technology Stack

Originally, when I started this project, I thought I would edit videos programmatically. After a quick search, I found there are basically two approaches:

- Use a wrapper for ffmpeg, which is written in C.

- Use a language-native library.

I didn’t want to use a wrapper over a C library because wrappers tend to drift from the underlying library’s implementation and sometimes add performance issues.

After quick research, I found that there’s a PyMovie library in Python. That’s why I decided to use Python to build this application. However, during development, I realized that the performance of the PyMovie library was very poor.

That’s why I stopped using PyMovie and started using an ffmpeg wrapper, which provided excellent performance and many video editing possibilities that were difficult or impossible with PyMovie.

When I speak about video editing performance, it’s not about P99 of 50ms or something near real-time, which is typical in my day job. It’s about editing videos that take 40 minutes or 15 minutes. With PyMovie, editing a 1-minute video required waiting 5 minutes on my MacBook Pro. With ffmpeg, it took around 1 minute to edit a video of the same duration.

So my final technology stack was:

- Python

- Local OpenAI Whisper - runs perfectly on MacBook Pro, so no reason to pay for API

- OpenAI GPT-4-mini - good balance between quality and price

- ffmpeg - all video manipulations

At the end of the project, I can say that I chose Python only because of PyMovie, which was eventually removed. If I were to start this project again, I would use Go instead of Python, but Python solved my problem perfectly.

Detailed Design

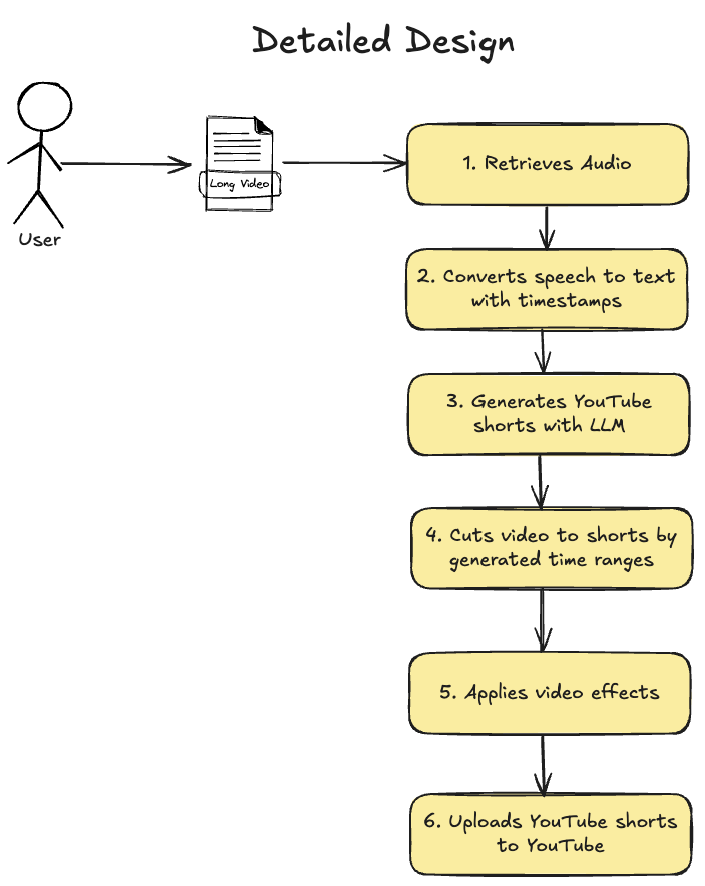

This is the detailed algorithm for YouTube shorts generation from my long video:


Let’s dive deeper into each step separately.

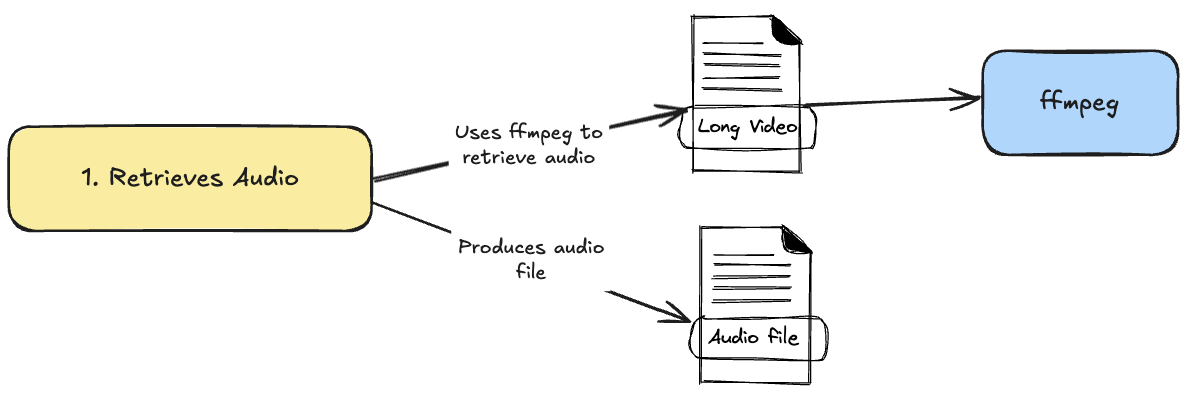

1. Retrieves Audio


Using ffmpeg, my application retrieves audio from a long video. Code is available on GitHub. The audio file is the result of this step.
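To make this step concrete, here is a minimal sketch of how the audio track could be extracted by calling the `ffmpeg` binary from Python. The function names, the WAV output, and the 16 kHz mono settings are assumptions chosen because they suit speech recognition; the project’s actual code on GitHub may do this differently.

```python
import subprocess
from pathlib import Path


def build_extract_audio_cmd(video: Path, audio: Path, sample_rate: int = 16000) -> list[str]:
    """Build the ffmpeg argument list that writes the audio track to a WAV file."""
    return [
        "ffmpeg", "-y",           # overwrite the output file if it exists
        "-i", str(video),         # input video
        "-vn",                    # drop the video stream
        "-ac", "1",               # downmix to mono
        "-ar", str(sample_rate),  # resample (16 kHz is assumed here)
        "-c:a", "pcm_s16le",      # uncompressed 16-bit PCM
        str(audio),
    ]


def extract_audio(video: Path, audio: Path) -> Path:
    """Run ffmpeg and return the path of the extracted audio file."""
    subprocess.run(build_extract_audio_cmd(video, audio), check=True)
    return audio
```

Keeping the command builder separate from the `subprocess` call makes the arguments easy to inspect and test without running ffmpeg.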

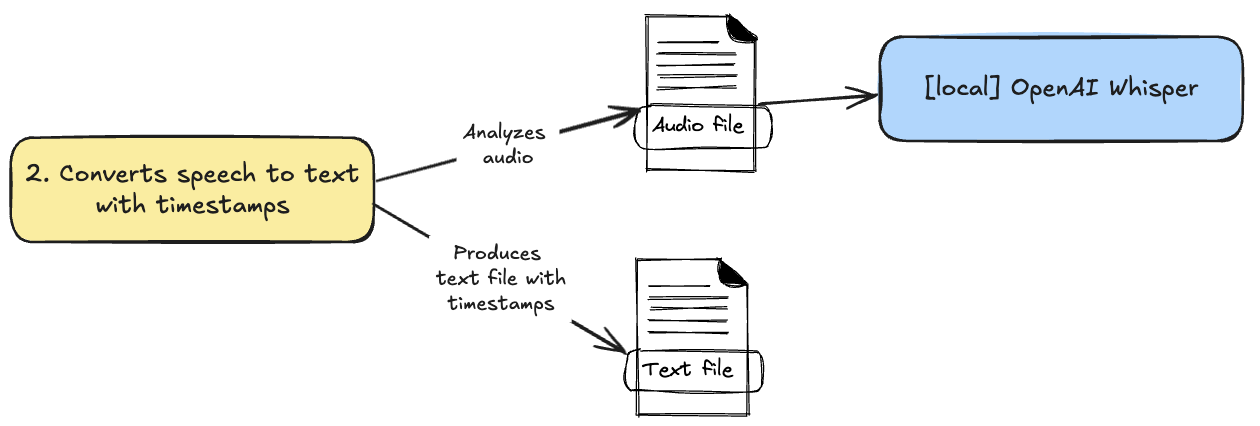

2. Converts Speech to Text with Timestamps


Next, I use a local OpenAI Whisper model to convert speech from the retrieved audio to text. This text will be used to generate YouTube shorts from the best moments of a video. The key point here is that it’s not enough to convert speech to text - we need to store timestamps for each word to efficiently generate YouTube short videos. Code is available on GitHub.

The result of this step is a JSON file with timestamps and transcriptions of the video:

```json
{
  "language": "en",
  "duration_seconds": 30.0,
  "segments": [
    {
      "start_time": 0.82,
      "end_time": 6.94,
      "text": "Hello everyone, my name is Vitaliy Anchar and I am senior software engineer. This is my new"
    },
    {
      "start_time": 7.96,
      "end_time": 9.62,
      "text": "video series about"
    },
    {
      "start_time": 10.24,
      "end_time": 13.08,
      "text": "software development during which I will develop"
    },
    {
      "start_time": 13.6,
      "end_time": 21.16,
      "text": "my new open source project and record each step on the YouTube video. I am doing YouTube video recording"
    },
    {
      "start_time": 21.16,
      "end_time": 28.6,
      "text": "without any editing. So enjoy my video because you will see how really software engineer works."
    }
  ]
}
```

Of course, the transcription contains errors, such as a misspelling of my name, but the quality is good enough for the LLM to handle in the next step.
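The sketch below shows one way a Whisper result could be mapped to the JSON layout shown above. It assumes the `openai-whisper` package, whose `transcribe` method returns a dictionary with `language` and `segments` (each segment has `start`, `end`, and `text`). The function names are hypothetical, and `duration_seconds` is passed in because the transcription result itself does not carry the total video length.

```python
from typing import Any


def to_transcript(result: dict[str, Any], duration_seconds: float) -> dict[str, Any]:
    """Convert a Whisper-style result into the transcript layout shown above."""
    segments = [
        {
            "start_time": round(seg["start"], 2),
            "end_time": round(seg["end"], 2),
            "text": seg["text"].strip(),  # Whisper segments usually start with a space
        }
        for seg in result["segments"]
    ]
    return {
        "language": result["language"],
        "duration_seconds": duration_seconds,
        "segments": segments,
    }


def transcribe(audio_path: str, model_name: str = "base") -> dict[str, Any]:
    """Run a local Whisper model; the model size is an assumption."""
    import whisper  # imported lazily so to_transcript() works without the package

    model = whisper.load_model(model_name)
    # word_timestamps=True adds per-word timing under each segment's "words" key.
    return model.transcribe(audio_path, word_timestamps=True)
```

A call such as `to_transcript(transcribe("talk.wav"), duration_seconds=30.0)` would then produce a dictionary that can be written out with `json.dump`.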

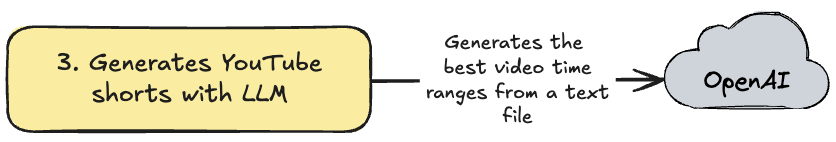

3. Generates YouTube Shorts with LLM


At this step, I send the JSON file with the transcription and timestamps to the OpenAI GPT-4-mini model, together with a prompt that asks it to generate time ranges for YouTube shorts following YouTube shorts best practices. The prompt is available on GitHub.

The result is YouTube shorts metadata that will be used to upload videos to YouTube and time ranges that will be used to cut long videos into short ones (test output):

```json
{
  "shorts": [
    {
      "title": "Watch Unedited Dev Work",
      "subscribe_subtitle": "Subscribe for raw dev videos",
      "start_segment_index": 3,
      "end_segment_index": 4,
      "description": "Hook: I'm recording my open-source project completely unedited — so you can see exactly how a software engineer works. In this short clip I explain that I'll record each development step and publish it raw, no cuts, no polish. Watch to get an honest behind-the-scenes look at real development, learn the process, and see decisions as they happen. If you like raw dev process, follow the series and subscribe for more full-length episodes and step-by-step builds. #software #opensource #programming #dev",
      "estimated_duration": "15 seconds",
      "tags": [
        "software development",
        "software engineer",
        "open source",
        "open-source",
        "programming",
        "coding",
        "developer",
        "dev",
        "software",
        "raw footage",
        "unedited",
        "behind the scenes",
        "dev process",
        "build in public",
        "live coding",
        "how I code",
        "coding tips",
        "programming tutorial",
        "opensource project",
        "software engineering",
        "tech",
        "code",
        "software developer life",
        "vitaliy anchar",
        "developer workflow",
        "recording unedited"
      ],
      "speech": [
        {
          "start_time": 13.6,
          "end_time": 21.16,
          "text": "my new open source project and record each step on the YouTube video. I am doing YouTube video recording"
        },
        {
          "start_time": 21.16,
          "end_time": 28.6,
          "text": "without any editing. So enjoy my video because you will see how really software engineer works."
        }
      ],
      "start_time": 13.6,
      "end_time": 28.6
    }
  ],
  "total_shorts_found": 1,
  "analysis_summary": "Selected segments 3–4 (15s) because they form a complete, self-contained message: the creator is building an open-source project and recording every step unedited. This provides an immediate hook (unedited/raw), clear value (behind-the-scenes dev process), and high engagement potential (curiosity, comments, shares). Duration well under 40.5s and strong CTA opportunity to subscribe for the full, raw series."
}
```
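In the output above, `start_segment_index` 3 and `end_segment_index` 4 line up with a `start_time` of 13.6 and an `end_time` of 28.6, which matches zero-based indexing into the transcript segments. A minimal sketch of how such indices could be resolved to a time range is shown below; the function name and the validation are my assumptions, not the project’s code.

```python
def resolve_time_range(transcript: dict, start_index: int, end_index: int) -> tuple[float, float]:
    """Turn zero-based segment indices into a (start_time, end_time) pair."""
    segments = transcript["segments"]
    # Reject indices the LLM may have hallucinated outside the transcript.
    if not 0 <= start_index <= end_index < len(segments):
        raise ValueError(f"invalid segment range {start_index}..{end_index}")
    return segments[start_index]["start_time"], segments[end_index]["end_time"]


# Segment timings copied from the transcript example earlier in the article.
transcript = {
    "segments": [
        {"start_time": 0.82, "end_time": 6.94},
        {"start_time": 7.96, "end_time": 9.62},
        {"start_time": 10.24, "end_time": 13.08},
        {"start_time": 13.6, "end_time": 21.16},
        {"start_time": 21.16, "end_time": 28.6},
    ]
}
start, end = resolve_time_range(transcript, 3, 4)
```

Deriving the times from indices, rather than trusting timestamps typed out by the model, keeps every cut aligned with a real segment boundary.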

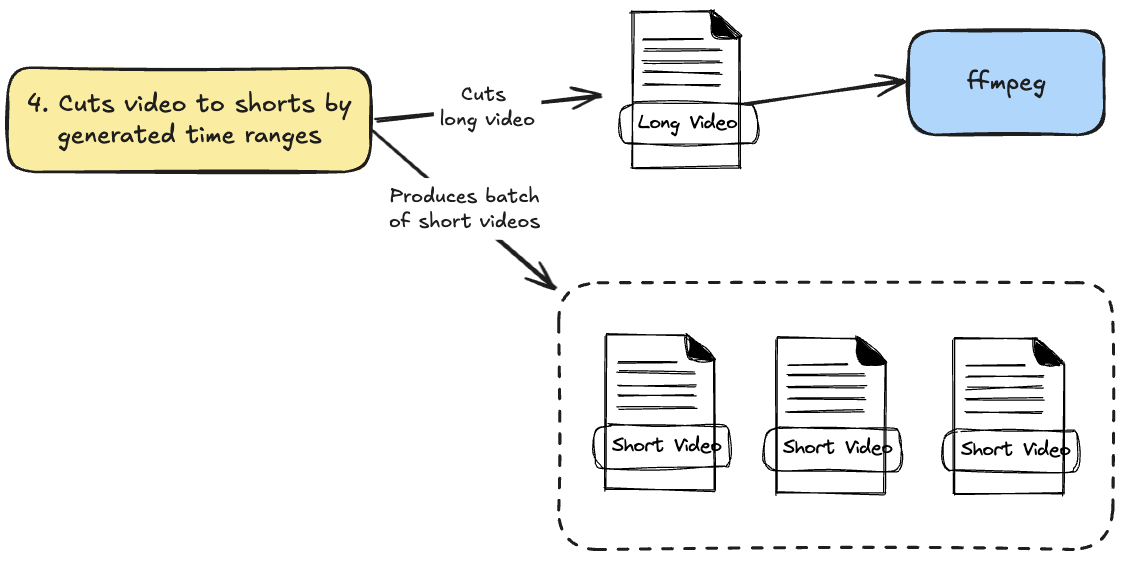

4. Cuts Video to Shorts by Generated Time Ranges


At this step, I use the output of the LLM analysis to cut the long video into short ones with ffmpeg. Code is available on GitHub.
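A cut like this can be expressed as a single ffmpeg invocation. The sketch below builds one from a start and end time; the function name and the codec choices (`libx264`, `aac`) are assumptions for illustration and not necessarily what the project uses.

```python
def build_cut_cmd(source: str, output: str, start_time: float, end_time: float) -> list[str]:
    """Build the ffmpeg argument list that extracts [start_time, end_time] from source."""
    if end_time <= start_time:
        raise ValueError("end_time must be greater than start_time")
    duration = end_time - start_time
    return [
        "ffmpeg", "-y",
        "-ss", f"{start_time:.3f}",  # seek in the input before decoding
        "-i", source,
        "-t", f"{duration:.3f}",     # keep only this many seconds
        # Re-encode instead of stream-copying so the cut is not tied to keyframes.
        "-c:v", "libx264",
        "-c:a", "aac",
        output,
    ]


cmd = build_cut_cmd("long.mp4", "short_0.mp4", 13.6, 28.6)
```

The resulting list can be passed to `subprocess.run(cmd, check=True)` once per short.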

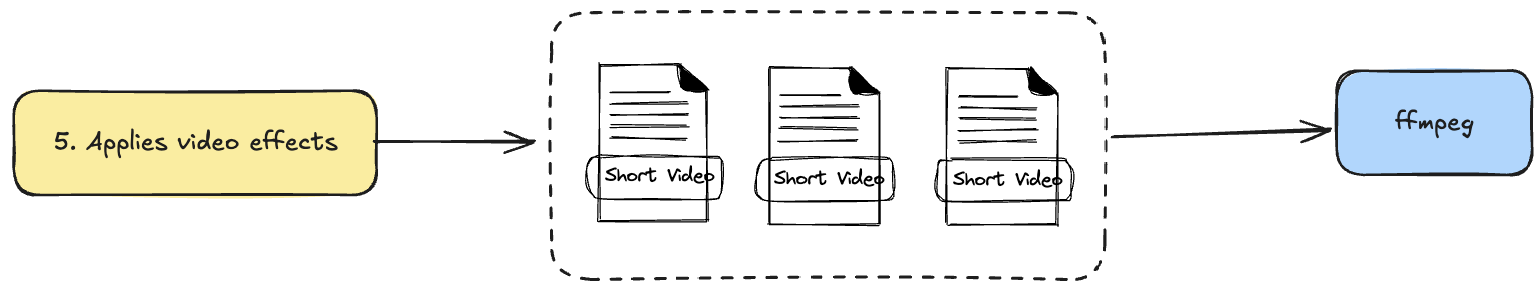

5. Applies Video Effects

This is the most challenging part of the application - applying video effects with ffmpeg. Claude Code was unable to build the video effects for me, so I spent some time reading about ffmpeg to work out the right arguments and how to combine several effects.

I also used the Strategy pattern here to make effects pluggable and to allow different effect strategies for different videos. Currently, I support only one strategy, BASIC:

```python
[
    AudioNormalizationEffect(target_lufs=-14.0, peak_limit=-1.0),
    VideoRatioConversionEffect(target_w=1080, target_h=1920),
    TextEffect(text=short.title, text_align="top"),
    CaptionsEffect(
        youtube_short=short,
        output_dir=data_dir,
        short_index=short_index,
        debug=debug,
    ),
    BlurFilterStartVideoEffect(blur_strength=20, duration=1.0),
    IncreaseVideoSpeedEffect(speed_factor=speed_factor, fps=30),
]
```

which works pretty well for me.

Current effects:

- Normalize audio by increasing its volume if needed.

- Change video ratio from original to YouTube shorts format.

- Add title to video at the top.

- Add captions that highlight during speaking.

- Add blur effect at the beginning.

- Increase video speed by 1.35 times.

As I mentioned, each effect is an implementation of the Strategy pattern, so the code is flexible:

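```python
from abc import ABC, abstractmethod

# Stream is the stream type provided by the ffmpeg wrapper in use.


class VideoEffect(ABC):
    """Strategy interface: every effect transforms the stream it receives."""

    @abstractmethod
    def apply(self, video_stream: Stream) -> list[Stream]:
        pass


class IncreaseVideoSpeedEffect(VideoEffect):
    def __init__(self, speed_factor: float, fps: int):
        self.speed_factor = speed_factor
        self.fps = fps

    def apply(self, video_stream: Stream) -> list[Stream]:
        v = video_stream.video
        a = video_stream.audio
        # Dividing the presentation timestamps by the factor speeds up the video.
        v = v.filter("setpts", f"PTS/{self.speed_factor}")
        # Change the audio tempo by the same factor so it stays in sync.
        a = a.filter("atempo", self.speed_factor)
        # Force a constant frame rate and a widely supported pixel format.
        v = v.filter("fps", fps=self.fps)
        v = v.filter("format", "yuv420p")
        return [a, v]
```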

The full code is available on GitHub. The result of this step is edited YouTube shorts that are ready to upload to YouTube.
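To illustrate how pluggable effects can be combined into one ffmpeg run, here is a simplified, dependency-free sketch. It is not the project’s implementation: instead of wrapper streams, each hypothetical effect appends plain ffmpeg filter expressions (`loudnorm`, `scale`, `pad`, `setpts`, `fps`, `atempo`), which are then joined into `-vf` and `-af` arguments. All class and function names here are assumptions.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass, field


@dataclass
class FilterChains:
    """Collects ffmpeg filter expressions for the video and audio streams."""
    video: list[str] = field(default_factory=list)
    audio: list[str] = field(default_factory=list)


class FilterEffect(ABC):
    """Hypothetical strategy interface: each effect appends its own filters."""

    @abstractmethod
    def apply(self, chains: FilterChains) -> None:
        ...


class LoudnessEffect(FilterEffect):
    def __init__(self, target_lufs: float, peak_limit: float):
        self.target_lufs = target_lufs
        self.peak_limit = peak_limit

    def apply(self, chains: FilterChains) -> None:
        # loudnorm: I is the integrated loudness target, TP the true-peak limit.
        chains.audio.append(f"loudnorm=I={self.target_lufs}:TP={self.peak_limit}")


class VerticalRatioEffect(FilterEffect):
    def __init__(self, width: int, height: int):
        self.width = width
        self.height = height

    def apply(self, chains: FilterChains) -> None:
        w, h = self.width, self.height
        # Fit the frame inside the target size, then pad it to exactly w x h.
        chains.video.append(f"scale={w}:{h}:force_original_aspect_ratio=decrease")
        chains.video.append(f"pad={w}:{h}:(ow-iw)/2:(oh-ih)/2")


class SpeedEffect(FilterEffect):
    def __init__(self, speed_factor: float, fps: int):
        self.speed_factor = speed_factor
        self.fps = fps

    def apply(self, chains: FilterChains) -> None:
        chains.video.append(f"setpts=PTS/{self.speed_factor}")
        chains.video.append(f"fps={self.fps}")
        chains.audio.append(f"atempo={self.speed_factor}")


def build_filter_args(effects: list[FilterEffect]) -> list[str]:
    """Apply every effect in order and return the ffmpeg filter arguments."""
    chains = FilterChains()
    for effect in effects:
        effect.apply(chains)
    args: list[str] = []
    if chains.video:
        args += ["-vf", ",".join(chains.video)]
    if chains.audio:
        args += ["-af", ",".join(chains.audio)]
    return args


filter_args = build_filter_args([
    LoudnessEffect(target_lufs=-14.0, peak_limit=-1.0),
    VerticalRatioEffect(width=1080, height=1920),
    SpeedEffect(speed_factor=1.35, fps=30),
])
```

Because the caller only depends on the `apply` method, adding or reordering effects means changing the list, not the code that assembles the command.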

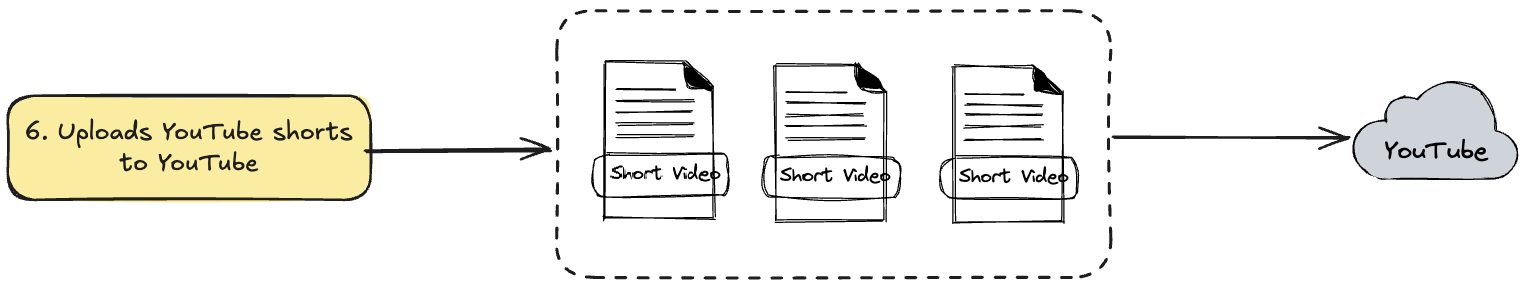

6. Upload YouTube Shorts to YouTube


At this final step, the application uploads a batch of videos to YouTube with metadata generated by LLM. I also need to experiment with YouTube tags afterward to improve SEO, but that’s another story. Code is available on GitHub.
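As a sketch of what the upload could look like with the YouTube Data API v3 and the `google-api-python-client` package, the code below maps the LLM-generated metadata to a `videos.insert` request body. The function names, the `private` default, and category `28` (Science & Technology) are assumptions; OAuth setup is omitted, and the project’s real upload code may differ.

```python
from typing import Any


def build_upload_body(short: dict[str, Any], privacy_status: str = "private",
                      category_id: str = "28") -> dict[str, Any]:
    """Map LLM-generated short metadata to a videos.insert request body."""
    return {
        "snippet": {
            "title": short["title"][:100],  # YouTube titles are limited to 100 characters
            "description": short["description"],
            "tags": list(short["tags"]),
            "categoryId": category_id,
        },
        "status": {"privacyStatus": privacy_status},
    }


def upload_short(youtube: Any, video_path: str, body: dict[str, Any]) -> dict[str, Any]:
    """Upload one file; `youtube` is an authorized client for the "youtube" v3 API."""
    # Imported lazily so build_upload_body() works without the Google client installed.
    from googleapiclient.http import MediaFileUpload

    request = youtube.videos().insert(
        part="snippet,status",
        body=body,
        media_body=MediaFileUpload(video_path, resumable=True),
    )
    return request.execute()


body = build_upload_body({
    "title": "Watch Unedited Dev Work",
    "description": "Hook: I'm recording my open-source project completely unedited.",
    "tags": ["software development", "open source"],
})
```

Uploading as `private` first leaves room to review each short before publishing it.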

All code for the application described here is open source and available on GitHub.

Conclusions

In this article, I described my latest fun project that converts long videos into batches of small YouTube shorts. It was a very interesting project where I learned how to edit videos with ffmpeg and convert speech to text effectively.

The project successfully automates the entire pipeline from video analysis to YouTube upload, saving hours of manual work while maintaining quality output. The use of local OpenAI Whisper ensures cost-effective transcription, while GPT-5-mini provides intelligent content analysis for optimal short selection.

Key takeaways from this project:

- ffmpeg proved to be significantly more performant than Python-native video libraries

- The Strategy pattern enables flexible video effect application

- Local AI models can be highly effective for specific use cases

- Automation of content creation pipelines can dramatically improve productivity

Thanks for reading, and see you in my next article! Also check out my YouTube channel, which has excellent videos: YouTube.