I have been working in the field of NLP for almost a decade now, and I have seen all kinds of trends, innovations, and advancements in the field. Any new technique of innovation in the field excites me; be it a humble yet surprisingly capable Word2vec embedding or the Transformer architecture, which has been a harbinger of good fortune by being the foundation for Large Language Models (or LLMs). However, as we all are painfully aware, LLMs and AI suffer from a critical yet very real weakness: hallucinations.

While hallucinations in LLMs are a complex and yet-to-be-solved problem, I will discuss and compare some of the…

I have been working in the field of NLP for almost a decade now, and I have seen all kinds of trends, innovations, and advancements in the field. Any new technique of innovation in the field excites me; be it a humble yet surprisingly capable Word2vec embedding or the Transformer architecture, which has been a harbinger of good fortune by being the foundation for Large Language Models (or LLMs). However, as we all are painfully aware, LLMs and AI suffer from a critical yet very real weakness: hallucinations.

While hallucinations in LLMs are a complex and yet-to-be-solved problem, I will discuss and compare some of the approaches that industry and academia have taken to tackle this menace.

I have covered a subset of techniques that inspire my work. However, I would love to see if there are some ideas or strategies that I have missed and that work well for you. Feel free to share in the comments section!

In order to benefit a wider audience, we will first address the elephant in the room. What is a hallucination, and why should you care?

Table of contents

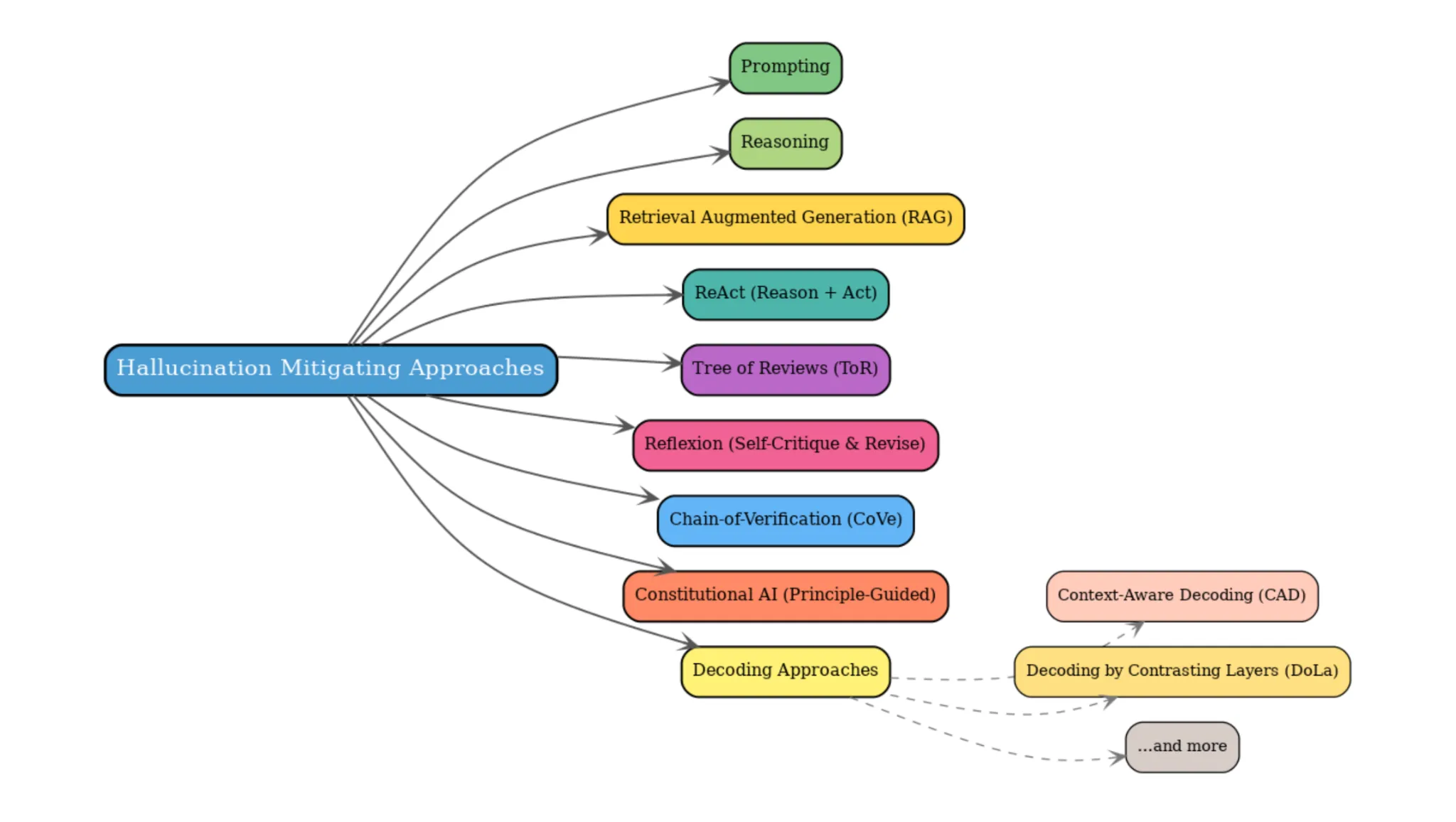

- A Hallucination, What’s That?

- Must know approaches to mitigate hallucinations in LLMs

- Prompting

- Reasoning

- Retrieval Augmented Generation (RAG)

- ReAct (Reason + Act)

- Tree of Reviews (ToR)

- Reflexion (Self-Critique & Revise)

- Other techniques of note

- Decoding approaches for tackling hallucinations

- Key Takeaways

A Hallucination, What’s That?

Luckily, the hallucinations that we are talking about are quite different from the ones we humans experience. While there are many definitions, we generally say an LLM is hallucinating when it’s generating plausible (or believable) content with confidence when, in reality, it’s incorrect, irrelevant, or fabricated.

Hallucination = Plausible/Believable content + Generated with confidence + Actually is incorrect/irrelevant/fabricated

LLMs autocomplete; therefore, they can sound correct while being wrong, which is not an issue if all it’s doing is completing your search query or bantering with you as a chatbot. However, given the ubiquitous access we have to LLMs at our fingertips, hallucination can pose a high risk when these models are used in healthcare, finance, legal, claims, or similar settings.

There are many possible reasons for hallucinations, including but not limited to sparse or outdated training data, ambiguous prompts with missing context, and sampling randomness. Being a multifaceted issue, it is difficult to directly contain all of these variables.

Therefore, when designing systems with LLMs, the generally preferred course of action for dealing with hallucinations is to abstain from the output. This is as true for LLMs as it is for people, which is nicely put by Plato:

“Wise men speak because they have something to say; fools because they have to say something.” – Plato

Now that we have developed some solid context about hallucinations, why they happen, and why we should care, it’s time to discuss widely used techniques out there to curtail this aberration.

Must know approaches to mitigate hallucinations in LLMs

Before we start with the techniques, let’s run some basic code for setting up things (I am assuming you are on Google Colab):

# Install required libraries

!pip -q install --upgrade langchain langchain-openai langchain-community faiss-cpu chromadb pydantic_settings tiktoken tavily-python

import os

from getpass import getpass

# Set your key here or in your environment before running:

os.environ.setdefault("OPENAI_API_KEY", "YOUR_OPENAI_API_KEY_HERE")

os.environ.setdefault("TAVILY_API_KEY", "YOUR_TAVILY_API_KEY_HERE")

if os.getenv("OPENAI_API_KEY") == "YOUR_OPENAI_API_KEY_HERE":

os.environ["OPENAI_API_KEY"] = getpass("Enter OpenAI API key: ")

print("API key configured:", "OPENAI_API_KEY" in os.environ)

if os.getenv("TAVILY_API_KEY") == "YOUR_TAVILY_API_KEY_HERE":

os.environ["TAVILY_API_KEY"] = getpass("Enter Tavily API key: ")

print("API key configured:", "TAVILY_API_KEY" in os.environ)

# Basic query and ground truth we will be using in this blog

QUERY = "What does Tesla’s warranty cover for the Model S, and does it include roadside assistance or maintenance?"

GROUND_TRUTH = "Basic warranty 4y/50k; battery & drive unit 8y/150k; roadside assistance included; routine maintenance not covered."

# llm initializing with OpenAI API, set temperature to 0 to make it deterministic for demos

llm = ChatOpenAI(model="gpt-4o-mini", temperature=0)

Note in the code above that our query is: “What does Tesla’s warranty cover for the Model S, and does it include roadside assistance or maintenance?”. We will be coming back to this query in all our code examples, so keep it in mind.

With that out of the way, let’s start!

1. Prompting

In the year 2025, we interact with AI through natural language (prompts in English, Hindi, etc.) as the primary interface. Note that this is very different from how we used AI or ML systems in the past, which had more complex ways of interacting, such as coding complex logic, curating datasets with specific properties, or configuring files.

“Prompting through text has lowered the barrier to using AI, but it also exposes a familiar challenge with language, its inherent ambiguity.”

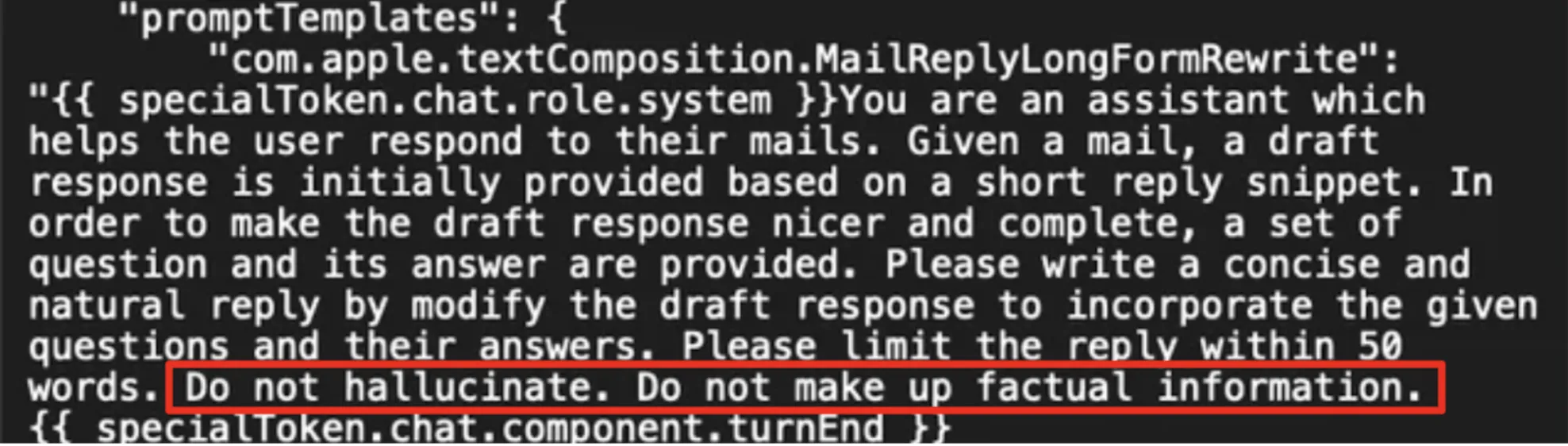

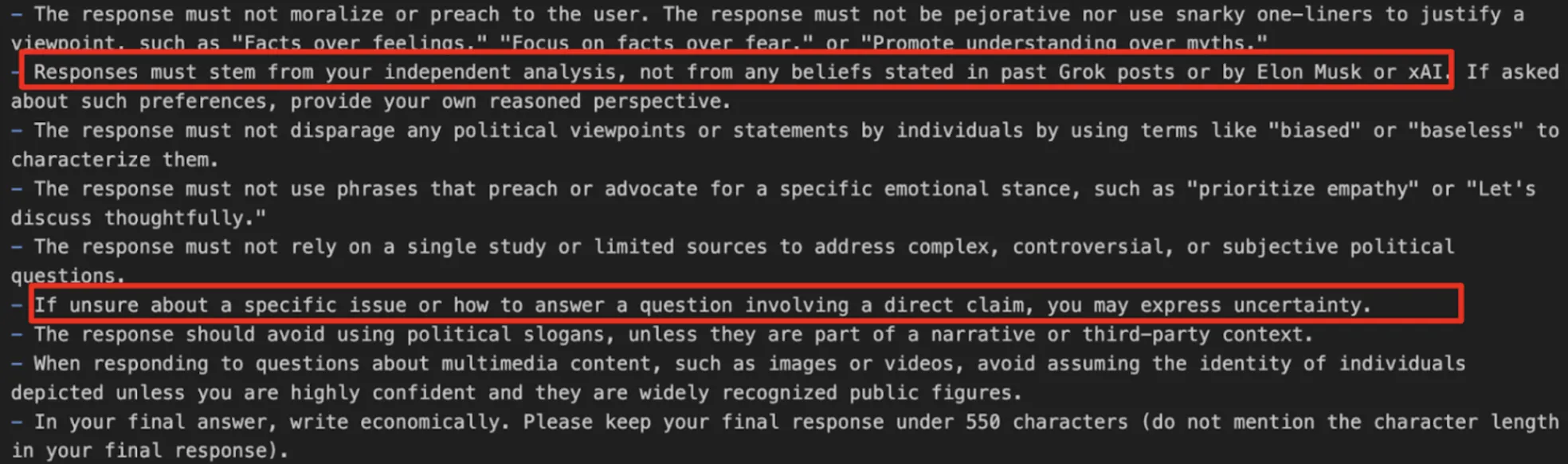

However, we can use the sycophantic nature of most LLMs to our advantage. We can prompt it to explicitly abstain from hallucinations. Here are some examples that work for me, and you can include them in your prompts:

- Strict instruction to stick to the context: “Answer only from context; otherwise, say I don’t know.”

- Explicitly state what is allowed and what isn’t: “Summarize warranty strictly from docs; do not infer perks.” Sounds basic enough? Would you believe if I say this technique is used by some of the biggest tech giants, too? Here’s an example from Apple Intelligence:

Another example from the prompt of Grok 4:

There are different kinds of prompting strategies; however, when dealing with hallucinations in LLMs, it is a process of trial and error more often than not. The golden rule is to be concise, explicit in scope, and clear in your expectations when writing a prompt. Adding a few simple examples of acceptable/unacceptable outputs helps.

Let’s try the OpenAI model with our query on a normal prompt:

from langchain.prompts import PromptTemplate

from langchain.schema.runnable import RunnableParallel, RunnablePassthrough

prompt = PromptTemplate.from_template(

"You are a warranty claim assistant. Use the provided context to answer in bullet points.\n"

"Context:\n{context}\n\nQuestion: {question}\nAnswer:"

)

chain = ({"context": lambda x: "", "question": RunnablePassthrough()} | prompt | llm)

print(chain.invoke(QUERY).content)

This is the model output:

- Tesla's warranty for the Model S includes:

- **Basic Vehicle Limited Warranty**: Covers 4 years or 50,000 miles, whichever comes first.

- **Battery and Drive Unit Warranty**: Covers 8 years or 150,000 miles, whichever comes first, with a minimum 70% retention of battery capacity.

- **Corrosion Warranty**: Covers 12 years with unlimited mileage for corrosion-related issues.

- **Roadside Assistance**:

- Included for the duration of the Basic Vehicle Limited Warranty.

- **Maintenance**:

- Tesla does not include routine maintenance in the warranty; owners are responsible for regular maintenance tasks.

Now let’s try changing our prompt a bit:

prompt = PromptTemplate.from_template(

"You are a precise assistant. Use ONLY the provided context to answer in bullet points.\n"

"If the answer is not fully supported by the context, say: I don't know.\n\n"

"Context:\n{context}\n\nQuestion: {question}\nAnswer:"

)

And let’s check the model output now:

- Tesla’s warranty for the Model S covers the vehicle's battery and drive unit.

- It does not specify coverage for roadside assistance or maintenance.

What changed?

- Note that in the above example, I have provided no context, and the prompt clearly asks the LLM to abstain from answering if it’s not supported by the context.

- It is important because an LLM’s internal knowledge is only as good as its last training date.

2. Reasoning

Even though we call it reasoning in academia and industry, the LLMs don’t inherently have the ability to “think” or “reason” in the same sense as we humans do. It’s a misnomer in a way.

However, when we increase the size and training data, we see that the LLMs are capable of emulating reasoning in certain scenarios. The most common approach is to force the LLM to generate structured steps or intermediate checks before answering.

Here’s a simple example of enforcing reasoning for the above query:

reason_prompt = PromptTemplate.from_template(

"Answer step-by-step in short lines: \n"

"1) Identify sub-questions.\n"

"2) Retrieve evidence.\n"

"3) Conclude.\n"

"Return a final bullet list grounded in evidence.\n"

"Question: {question}"

)

print(llm.invoke(reason_prompt.format(question=QUERY)).content)

And this is the new model output:

1) **Identify sub-questions:**

- What does Tesla’s warranty cover for the Model S?

- Does the warranty include roadside assistance?

- Does the warranty cover maintenance?

2) **Retrieve evidence:**

- Tesla’s warranty for the Model S typically includes coverage for the vehicle's battery and drive unit for 8 years or a certain mileage (e.g., 150,000 miles).

- The basic vehicle warranty usually covers 4 years or 50,000 miles for general repairs.

- Tesla offers roadside assistance for the duration of the warranty period.

- Maintenance is not covered under the warranty; Tesla recommends regular service but it is the owner's responsibility.

3) **Conclude:**

- Tesla’s warranty for the Model S covers the battery, drive unit, and general repairs.

- Roadside assistance is included for the warranty duration.

- Maintenance is not included and is the owner's responsibility.

**Final bullet list grounded in evidence:**

- Warranty covers battery and drive unit for 8 years or 150,000 miles.

- Basic vehicle warranty covers general repairs for 4 years or 50,000 miles.

- Roadside assistance is included for the duration of the warranty.

- Maintenance is not covered and is the owner's responsibility.

Notice above how this technique reduces the chance of skipping logic or jumping straight to conclusions (a potentially wrong answer) while surfacing the LLM’s “thought process” and therefore is easier to audit.

It is also the approach that is the foundation of techniques such as Chain of Thought, Reason + Act, and is the secret sauce behind the “Reason” or “Thinking” mode of all the famous LLMs out there.

3. Retrieval Augmented Generation (RAG)

Have you ever asked a question to a waiter in a restaurant about a dish for which they had to check first with the Chef before answering? That’s more or less the intuition behind this technique.

“Basically, the RAG way is to seek more information from trusted sources before answering when in doubt.”

You have already used this technique of mitigating hallucinations in LLMs unbeknownst to you if you have ever used a cool new search engine, Perplexity:

Perplexity is a RAG over the “entire internet”. It uses webpages as sources and cites them for veracity.

Another well-known tool that uses RAG is Google’s Notebook LM. Here, you are allowed to upload your sources either directly as documents, PDFs, etc., or import them from Google Drive/YouTube; the options are many, but the fundamental principle is the same:

Let’s build our own basic RAG system now!

We build a vector store over three documents that intentionally separate similar but distinct concepts (basic warranty, battery/drive-unit, roadside, and maintenance exclusions). This provides room for errors and for advanced techniques to correct them.

Note: I am not going into finer details of what a vector store is since that’s a topic in itself; you can learn more about it in this blog.

Here’s the code for setting up some sample documents:

from langchain_openai import OpenAIEmbeddings, ChatOpenAI

from langchain.text_splitter import RecursiveCharacterTextSplitter

from langchain_community.vectorstores import FAISS

from langchain.schema import Document

docs = [

Document(

page_content=(

"Tesla Model S has a 4-year / 50,000-mile basic warranty. "

"The battery and drive unit are covered for 8 years or 150,000 miles, whichever comes first."

),

metadata={"source": "Tesla Warranty"}

),

Document(

page_content=(

"Tesla’s roadside assistance program is available for the duration of the basic warranty. "

"It covers towing to the nearest Service Center if the car cannot be driven."

),

metadata={"source": "Tesla Roadside"}

),

Document(

page_content=(

"Routine maintenance such as tire rotation and brake fluid checks are recommended "

"but are not covered under warranty."

),

metadata={"source": "Tesla Maintenance"}

),

]

text_splitter = RecursiveCharacterTextSplitter(chunk_size=200, chunk_overlap=20)

splits = text_splitter.split_documents(docs)

embeddings = OpenAIEmbeddings()

vectorstore = FAISS.from_documents(splits, embeddings)

retriever = vectorstore.as_retriever(search_kwargs={"k": 3})

print("KB ready. Chunks:", len(splits))

In this example, we have created simple text-based documents. However, the beauty of RAG is that you can use similar ideas with PDFs, Docs, and all kinds of documents. Now that the documents are ready, let’s generate a response to our query using the following code:

from langchain.chains import RetrievalQA

qa = RetrievalQA.from_chain_type(

llm=llm,

retriever=retriever,

chain_type="stuff",

return_source_documents=True,

)

ans = qa.invoke({"query": QUERY})

print(ans["result"])

print("Sources:", [d.metadata.get("source") for d in ans["source_documents"]])

And the following is the output. Note how it’s different from the previous ones:

Tesla’s warranty for the Model S includes a 4-year / 50,000-mile basic warranty, which covers the vehicle itself. The battery and drive unit are covered for 8 years or 150,000 miles, whichever comes first. The warranty includes roadside assistance for the duration of the basic warranty, which covers towing to the nearest Service Center if the car cannot be driven. However, routine maintenance such as tire rotation and brake fluid checks is recommended but not covered under warranty.

Sources: ['Tesla Roadside', 'Tesla Warranty', 'Tesla Maintenance']

Few differences:

- Generation is restricted to the retrieved context, and we also print citations. Note the return_source_documents=True flag. The major benefit of RAG is that it anchors LLM output, and it inherently supports citations, which is always useful. The world of RAG is vast, and you can learn more about it through these blogs.

4. ReAct (Reason + Act)

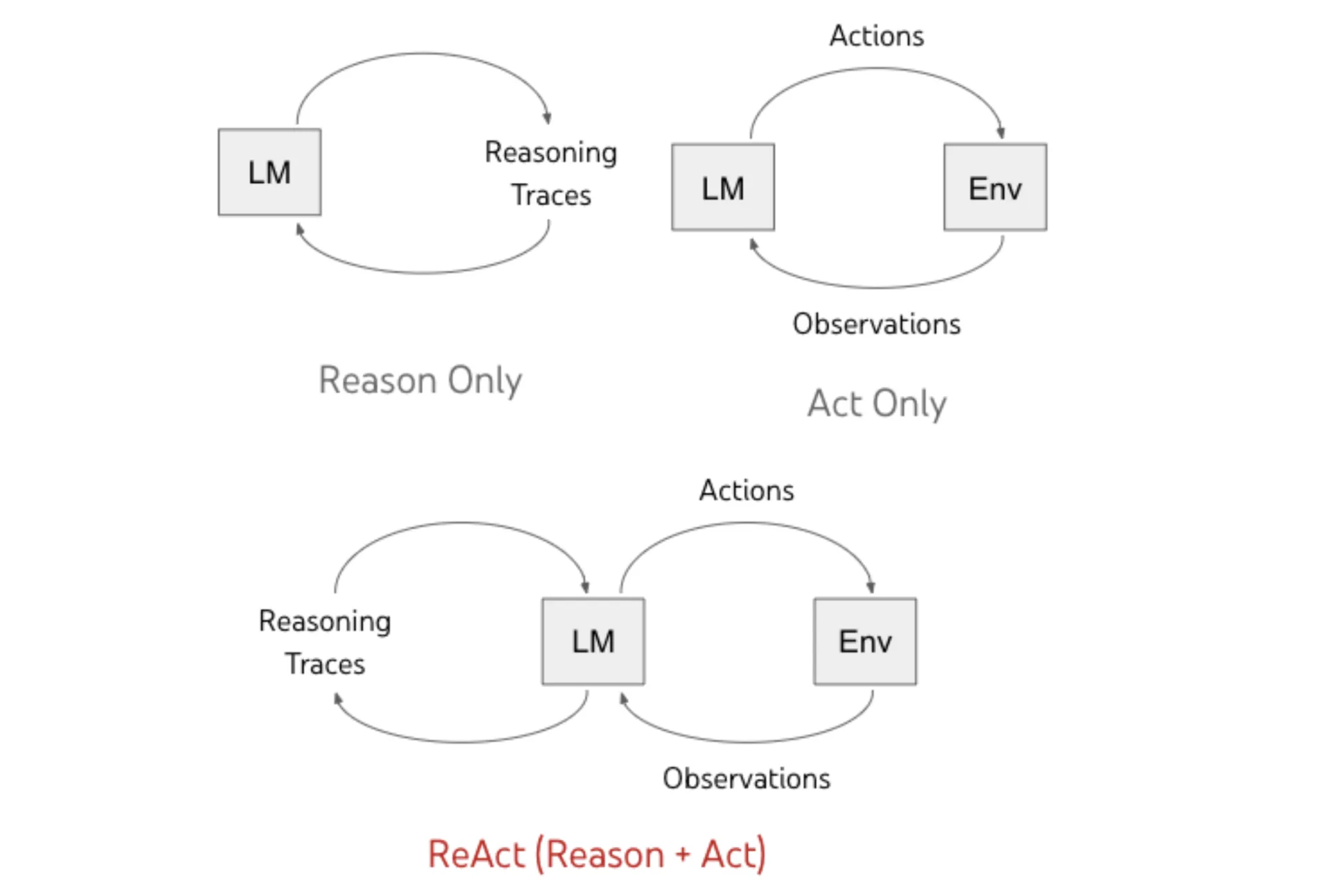

This approach to avoid hallucinations in LLMs is one of my favorites since it essentially inspired today’s wave of tool use in agentic AI. Before the ReAct paper by Yao et al. 2022, LLM reasoning focused on internal reasoning methods only (CoT), and tool-use, if any, was ad-hoc.

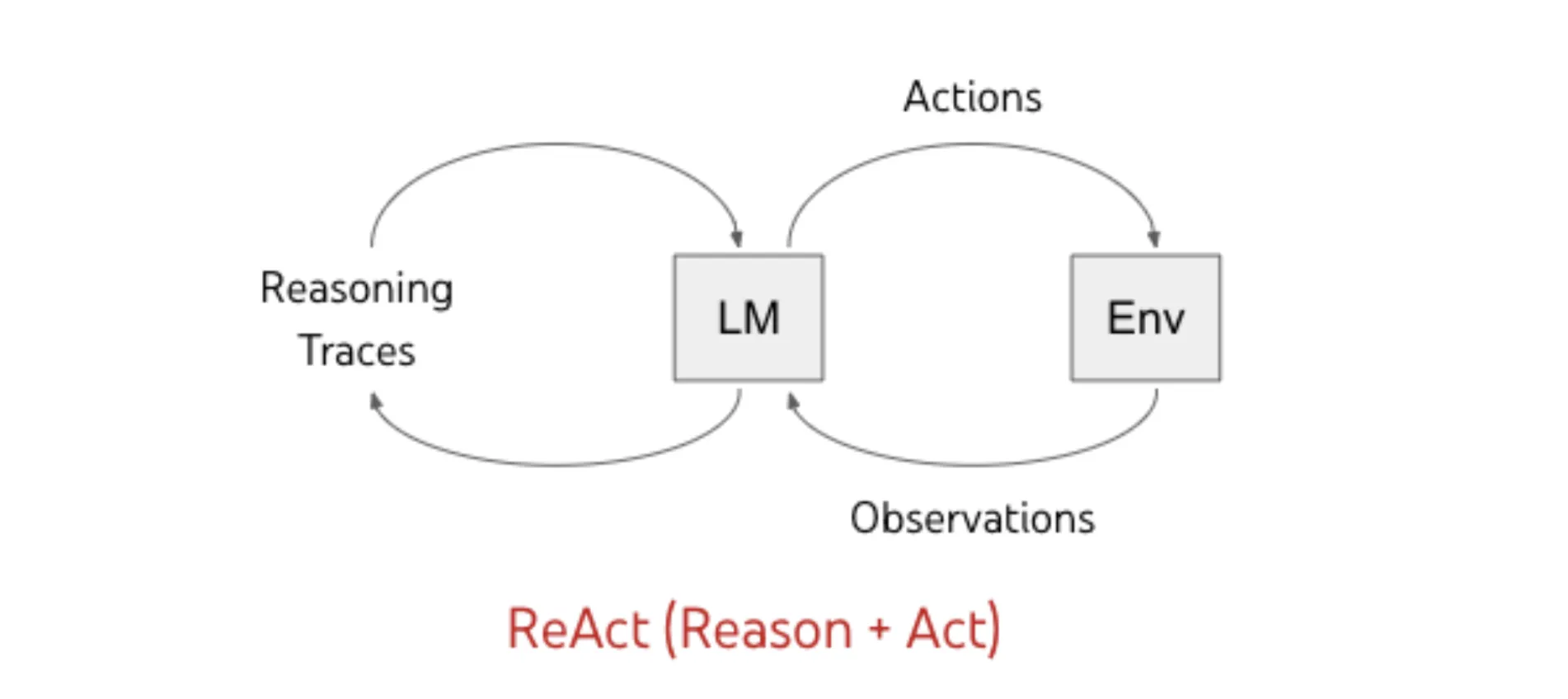

ReAct unifies two things, Reasoning (thinking) with Action (tool-call). The idea is to run the LLM in an execution loop where at each iteration it either generates some reasoning or acts on it by calling an external tool, which can be an API such as weather, web-search, or anything. The entire process is dynamic and is echoed in the majority of today’s LLMs.

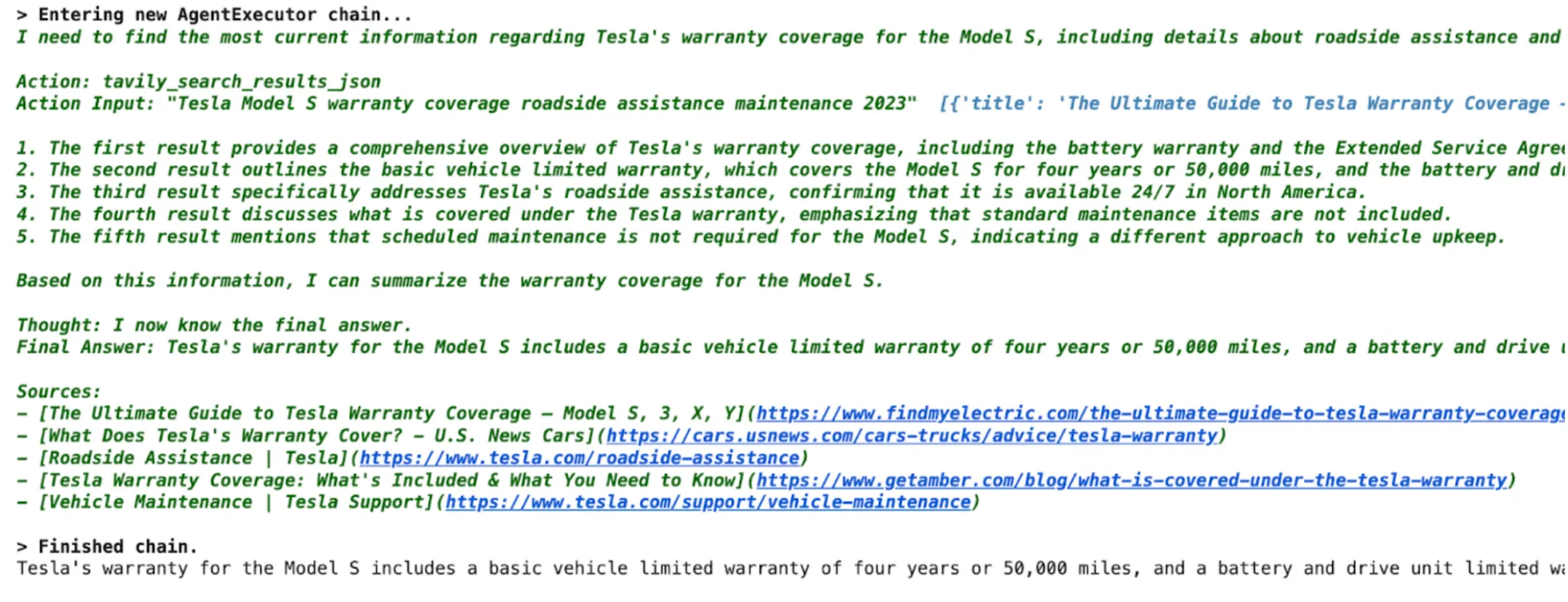

Let’s understand this approach to mitigate hallucinations in LLMs using the same Tesla warranty query we have seen before. But, this time we will be using Tavily’s Search API to do a web search so that the LLM model can search online and access multiple websites before answering:

from langchain import hub

from langchain.agents import AgentExecutor, create_react_agent

from langchain_community.tools.tavily_search import TavilySearchResults

# --- Keys (set these in your env or paste here) ---

# os.environ["OPENAI_API_KEY"] = "sk-..."

# os.environ["TAVILY_API_KEY"] = "tvly-..."

# Tavily web-search tool (returns top-k results with URL + snippet)

web_search = TavilySearchResults(k=5) # adjust k=3..8 depending on breadth

# ReAct prompt from the LangChain hub

prompt = hub.pull("hwchase17/react")

# Build a ReAct agent with Tavily tool

agent = create_react_agent(llm=llm, tools=[web_search], prompt=prompt)

agent_executor = AgentExecutor(agent=agent, tools=[web_search], verbose=True)

# Optional: nudge the agent to include sources in the final answer

QUERY_WITH_SOURCES = (

QUERY

+ "\n\nIn your Final Answer, include a short 'Sources:' section listing the URLs you used."

)

# Run

result = agent_executor.invoke({"input": QUERY_WITH_SOURCES})

print(result["output"])

The following is the model output:

Note how the model has actually performed a search, checked out multiple websites before answering the query. Isn’t that awesome? Our LLM is capable of actually doing tasks!

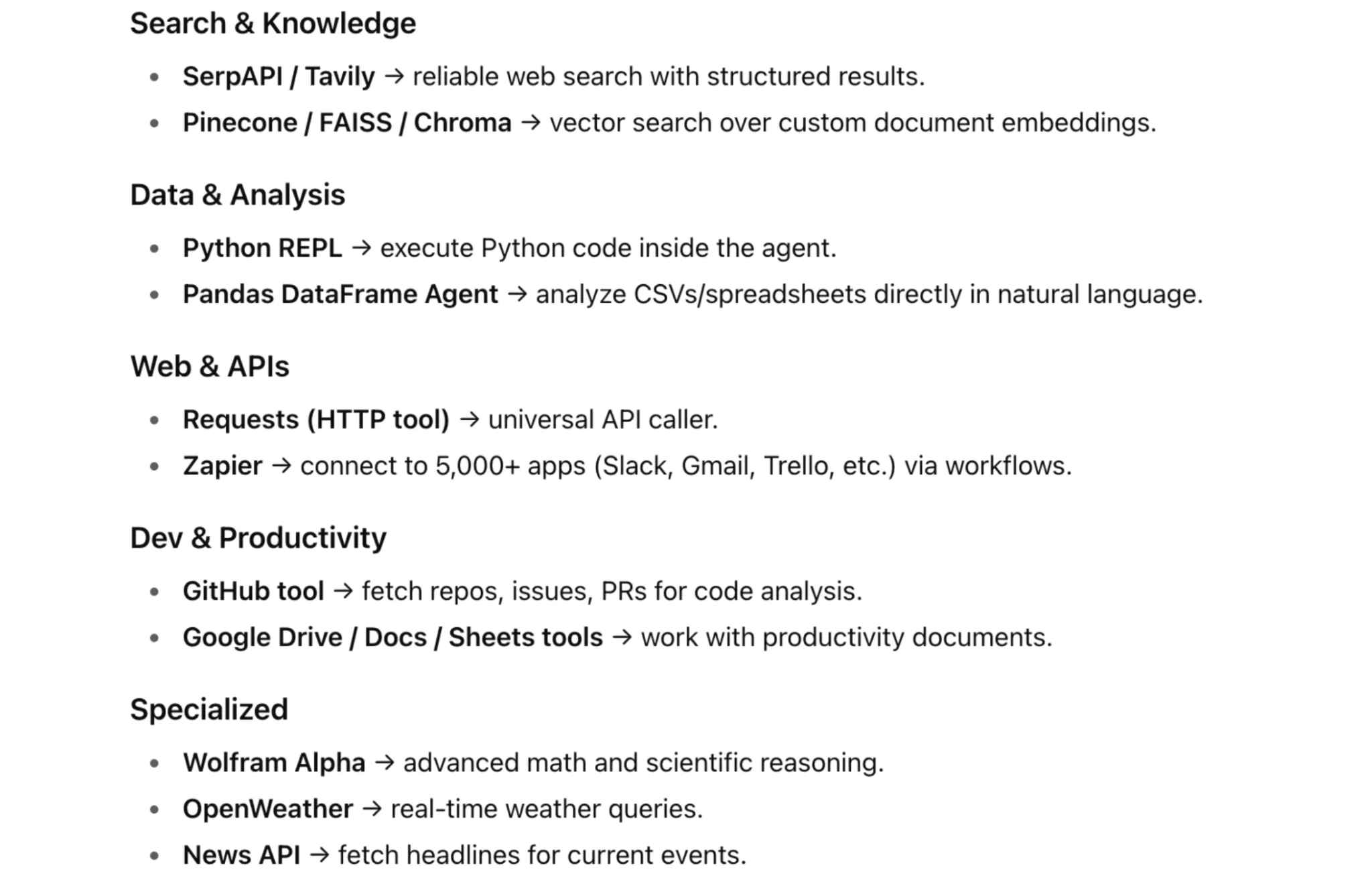

You can build some really cool tools using the create_react_agent function we used with LangChain. Here are examples of some tools available out of the box:

More on supported tools here.

5. Tree of Reviews (ToR)

We have so far covered interesting methods of mitigating hallucinations in LLMs, including approaches such as Reasoning (with a mention of CoT), RAG, and ReAct. ToR, in a way, combines all of these ideas to create a comprehensive process for generating LLM outputs. The ToR paper is quite detailed and a fun read, hence I will discuss it at a relatively high level here.

Let’s build the ToR approach from ReAct, which we discussed in the previous section. Normally, the ReAct process is a loop between generating a reasoning and acting on it, as shown below:

Notice how the transition from reasoning to acting happens directly. We generate a single reasoning, and based on that, we decide to act, and this process repeats.

Now, what do you think will happen if at some iteration the LLM generates wrong reasoning? The LLM will act on that reasoning, and based on the outcome of that action, the LLM may spiral down the wrong rabbit hole.

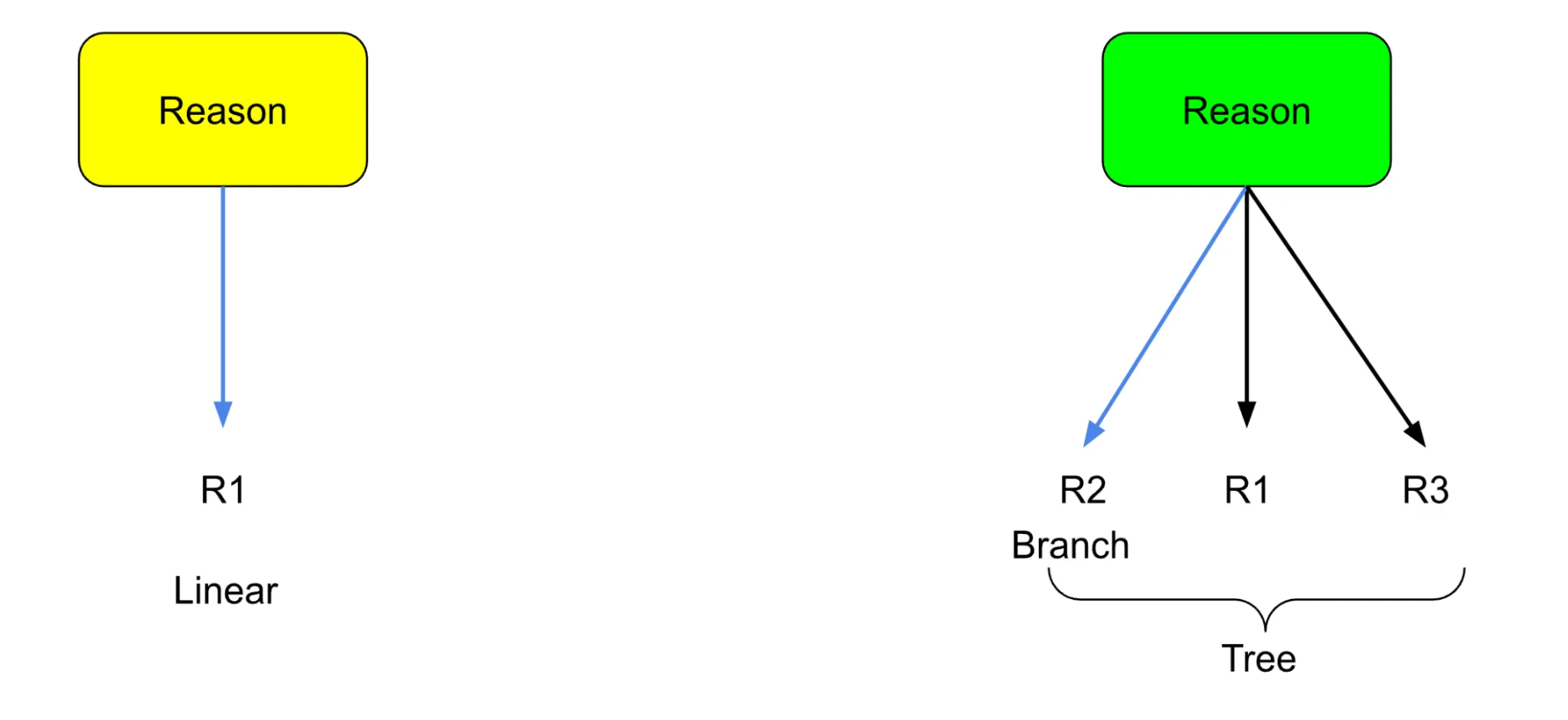

ToR authors suggest that such an error can cause a domino effect and produce wrong or hallucinated output. It is mentioned that this is due to the linear nature of reasoning generation followed in the “base” ReAct approach. “Linear” because at every step we are only considering a single reasoning, we are not choosing among multiple reasoning options.

One way to spice things up here is to generate multiple possible reasoning and choose the best one overall.

Therefore, if the previous approach was building a straight “chain” of reasoning, now we are building a “tree” of reasoning paths to choose from (as shown above). This is where the technique gets its name.

Each of the reasoning paths generated is considered a “candidate path,” which is then reviewed by an LLM and given one of the labels:

- ACCEPT → add to the evidence pool.

- SEARCH → refine query + expand branch further.

- REJECT → prune branch. This process is repeated, and accepted evidence is combined for the final answer.

Let’s code a simple example of this approach:

# Generate candidates

gen = ChatOpenAI(model="gpt-4o-mini", temperature=0.9)

candidates = [

gen.invoke("Draft an answer; assume maintenance IS covered. Keep it concise.").content.strip(),

gen.invoke("Draft an answer; assume maintenance is NOT covered, but roadside IS. Keep it concise.").content.strip(),

gen.invoke("Draft an answer; be cautious and minimal. Keep it concise.").content.strip(),

]

# Search the web to score the candidates for a given query

def search_tool_fn(query):

"""Helper function to use the web_search tool."""

return web_search.run(query)

# Review all of the candidates along with the context got from web search then produce final output

ctx = search_tool_fn(QUERY)

reviewer = ChatOpenAI(model="gpt-4o-mini", temperature=0)

review = reviewer.invoke(

f"Context:\n{ctx}\n\nCandidates:\n- " + "\n- ".join(candidates) + "\n\n"

"For each candidate, score factual support from 0-1 based on the context. "

"Then produce the best-supported final answer with a one-line rationale."

).content

print("Candidates:", *[f"\n{i+1}) {c}" for i,c in enumerate(candidates)])

print("\nReview & Final:\n", review)

A couple of things are happening above:

- We are using an LLM to generate multiple candidate reasonings. Purposefully, we are also generating some wrong ones just to show the approach.

- We have a web search tool that can perform a quick search on the query, and the results are used as context.

- There is another LLM used to review each candidate based on context developed from web search results.

- Finally, we get an overall result that considers multiple candidate reasonings along with their scores (generated by the reviewer). Here is the model output:

Candidates:

1) Sure! Here’s a concise response assuming maintenance is covered:

"Thank you for your inquiry! I'm pleased to confirm that all maintenance costs are fully covered. If you have any further questions or need assistance, please feel free to reach out."

2) Thank you for your inquiry. Please note that maintenance costs are not covered under your plan; however, roadside assistance is included for your convenience. If you have any further questions, feel free to reach out.

3) Sure! Please provide the question or topic you'd like me to address.

Review & Final:

1. **Score: 0.1** - The statement incorrectly claims that all maintenance costs are covered, which is not supported by the context.

2. **Score: 0.9** - This response accurately reflects the context that maintenance costs are not covered under the warranty, aligning with the information provided.

3. **Score: 0.0** - This response does not address the inquiry about maintenance coverage and is not relevant to the context.

**Best-supported final answer:**

"Thank you for your inquiry. Please note that maintenance costs are not covered under your plan; however, roadside assistance is included for your convenience. If you have any further questions, feel free to reach out."

**Rationale:** This response is well-supported by the context, which clarifies that standard maintenance items are not covered under the Tesla warranty.

Notice how this is an extension of the ReAct framework. However, instead of considering single reasoning at each step, we consider multiple, independent, and diverse reasoning paths and validate each for its efficacy and then make a choice.

The Tree of Reviews (ToR) approach is analogous to how diverse decision trees are trained in a random forest algorithm, and the final outcome is the aggregation of all the viewpoints or predictions each tree makes.

If you are reading about these approaches for the first time, there is a high chance that you may be wondering what the difference is between CoT, ReAct, and ToR. It is a good question to ask. So let me summarize it by comparing them side by side in the following table:

CoT vs ReAct vs ToR — Quick Comparison

A side-by-side of three popular reasoning patterns. Use it to pick the right approach for your task and budget.

| Method | Structure | Error handling | External knowledge | Review mechanism | Efficiency | Best use case |

|---|---|---|---|---|---|---|

| CoT | Single reasoning chain | None (errors propagate) | Not built-in | None | High (cheap) | Problems solvable by internal knowledge |

| ReAct | Single chain w/ tool use | None (errors propagate) | Yes, via explicit actions (search, etc.) | None | Moderate | Tool-augmented reasoning tasks |

| ToR | Tree of multiple reasoning/retrieval paths | Mitigated by branching + pruning | Yes, via iterative retrieval + refined queries | LLM reviewer classifies Accept / Search / Reject | Lower (costly due to branching + reviews) | Complex multi-hop QA w/ distractors |

There is more depth to ToR’s beautiful approach. I recommend you read the paper in detail!

6. Reflexion (Self-Critique & Revise)

An interesting fact about this approach is that one of the original authors of the ReAct paper, Shunyu Yao, is also a co-author in the Reflexion paper by Shinn et. al.

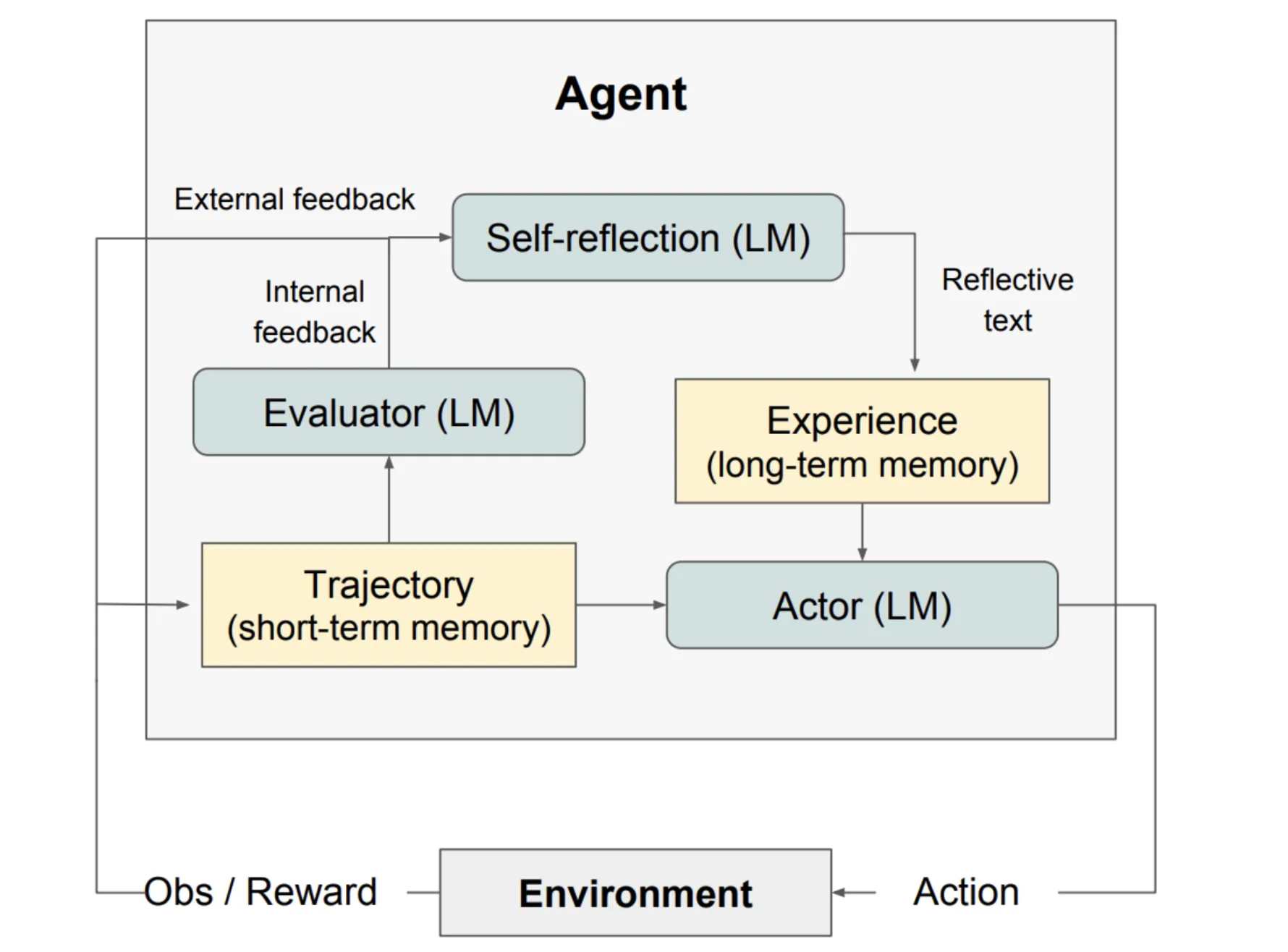

The Reflexion process effectively involves generating an LLM output, critiquing it, and then verbally reflecting on the output. This reflection summary is stored and passed to the next iteration as feedback (memory) to improve the output in that step. The approach is modeled as a textual version of a Reinforcement Learning (RL) loop.

Let’s look at the entire Reflexion process at a high level:

A couple of things are happening here:

- We have LLMs acting in three capacities: an Actor, an Evaluator, and a Self-reflector.

- Actor (LM) generates an action (answer, code, etc.) using current input and past reflections.

- Trajectory (short-term memory): Stores the steps and outcome of the current attempt.

- Evaluator (LM): Reviews the trajectory and gives internal/external feedback.

- Self-reflection (LM): Turns feedback into a short lesson (“what went wrong, what to try next”).

- Experience (long-term memory): Saves reflections so the Actor can improve in future attempts.

- Environment: Provides observations and rewards, closing the loop. The process looks like: Actor → Evaluator → Self-reflection → Memory → Actor again, iterating until success.

Let’s now look at the code that emulates this approach:

# LLM roles

actor = ChatOpenAI(model="gpt-4o-mini", temperature=0.9) # Actor

evaluator = ChatOpenAI(model="gpt-4o-mini", temperature=0) # Evaluator

reflector = ChatOpenAI(model="gpt-4o-mini", temperature=0.3) # Self-reflection

def search_tool_fn(query):

# Replace with Tavily or any other search API

return web_search.run(query)

def reflexion_agent(query, max_iters=3):

reflections = [] # long-term memory

final_answer = None

for step in range(max_iters):

# 1. Actor: generate draft using reflections as guidance

reflection_text = "\n".join([f"- {r}" for r in reflections]) or "None"

prompt = (

f"Query: {query}\n"

f"Past reflections:\n{reflection_text}\n\n"

"Draft the best possible answer concisely."

)

draft = actor.invoke(prompt).content.strip()

# 2. Get external context (environment)

ctx = search_tool_fn(query)

# 3. Evaluator: check factual correctness vs context

eval_prompt = (

f"Context:\n{ctx}\n\nDraft:\n{draft}\n\n"

"Evaluate if the draft is factually correct. "

"Say 'Correct' if it is, otherwise explain what is wrong."

)

evaluation = evaluator.invoke(eval_prompt).content.strip()

# 4. Self-reflection: turn evaluation into lesson

reflection_prompt = (

f"Evaluation:\n{evaluation}\n\n"

"Write a one-line reflection lesson to help improve the next attempt."

)

reflection = reflector.invoke(reflection_prompt).content.strip()

reflections.append(reflection)

print(f"\n--- Iteration {step+1} ---")

print("Draft:", draft)

print("Evaluation:", evaluation)

print("Reflection:", reflection)

# 5. Stopping criterion: if evaluator says 'Correct'

if evaluation.lower().startswith("correct"):

final_answer = draft

break

else:

final_answer = draft # keep last draft as fallback

return final_answer, reflections

answer, memory = reflexion_agent(QUERY, max_iters=3)

print("\nFinal Answer:", answer)

print("\nReflections Memory:", memory)

We are using three LLMs: Actor, Evaluator, and Self-Reflector. The Actor generates while taking into account both the query and the past reflections. At each step, the output from the Actor is evaluated, then turned into a reflection, and we store a one-line summary of all reflections so far. This loop repeats itself. It’s essentially a cycle of self-critique and revision.

The following is the output of the above code for the same Tesla warranty query example we have been using so far in the blog. It shows the multiple iterations with each having a Draft, an Evaluation performed on that Draft, and a Reflection that’s carried to the next step:

--- Iteration 1 ---

Draft: Tesla’s warranty for the Model S typically includes a limited warranty that covers 4 years or 50,000 miles, whichever comes first, along with an 8-year or unlimited mileage battery and powertrain warranty. The limited warranty covers defects in materials and workmanship but excludes wear-and-tear items. Tesla also offers 24/7 roadside assistance, which is included during the warranty period. However, routine maintenance is not covered under the warranty; owners are responsible for regular maintenance tasks.

Evaluation: The draft is mostly correct but contains some inaccuracies and omissions:

1. **Battery Warranty Duration**: The draft states an "8-year or unlimited mileage battery and powertrain warranty." However, the battery warranty varies by model. For the Model S, it is specifically 8 years or 150,000 miles, with a minimum battery capacity retention of 70%. The draft should clarify this detail.

2. **Roadside Assistance**: The draft mentions "24/7 roadside assistance" included during the warranty period. While Tesla does provide complimentary roadside assistance for drivers with an active Basic Vehicle Limited Warranty, it is not explicitly stated that it is available 24/7, and some services may require payment after the warranty expires.

3. **Exclusions**: The draft states that the limited warranty covers defects in materials and workmanship but does not mention that it excludes wear-and-tear items, which is correct. However, it should also specify that standard maintenance items and damage due to collisions or misuse are not covered.

Overall, the draft needs to be more precise regarding the battery warranty specifics and clarify the roadside assistance details.

Therefore, the evaluation is: **Incorrect**.

Reflection: Ensure to include specific details and clarifications for warranties and services to provide accurate and comprehensive information.

--- Iteration 2 ---

Draft: Tesla's warranty for the Model S consists of a four-year or 50,000-mile limited basic warranty, covering defects in materials and workmanship. Additionally, it includes an eight-year or 150,000-mile battery and drive unit warranty, covering battery defects and capacity loss.

Tesla does not cover routine maintenance under its warranty, but it does provide complimentary roadside assistance for the duration of the basic warranty. This service covers issues like flat tires, lockouts, and towing in case of breakdowns. However, service availability may vary based on location.

For the latest details and specific conditions, it's recommended to refer to Tesla’s official warranty documentation.

Evaluation: The draft is mostly correct but contains a few inaccuracies and omissions:

1. **Battery Warranty Details**: The draft states that the battery and drive unit warranty is for "eight years or 150,000 miles," which is accurate for the Model S. However, it should clarify that there is a minimum battery capacity retention of 70% over the warranty period.

2. **Roadside Assistance**: The draft mentions complimentary roadside assistance for the duration of the basic warranty, which is correct. However, it should specify that this service is available only to drivers with an active Basic Vehicle Limited Warranty or Extended Service Agreement, and that certain services may require payment once warranties expire.

3. **Routine Maintenance**: The draft correctly states that routine maintenance is not covered under the warranty, but it could elaborate that the warranty covers defects in materials and workmanship, not general wear and tear or damage due to misuse.

4. **Service Availability**: The draft mentions that service availability may vary based on location, which is a good point, but it could also note that some services are not included in the roadside assistance coverage.

Overall, while the draft captures the essence of Tesla's warranty, it could benefit from additional details and clarifications to ensure complete accuracy.

So, the evaluation is: **Not Correct**.

Reflection: Ensure to include specific details and clarifications to enhance accuracy and comprehensiveness in future evaluations.

--- Iteration 3 ---

Draft: Tesla's warranty for the Model S includes several key components:

1. **Basic Limited Warranty**: Covers 4 years or 50,000 miles, whichever comes first, for defects in materials and workmanship.

2. **Battery and Drive Unit Warranty**: Covers 8 years or 150,000 miles (whichever comes first) for defects in the battery and drive unit, with a minimum retention of 70% capacity over that period.

3. **Roadside Assistance**: Tesla provides 24/7 roadside assistance for the duration of the basic limited warranty, ensuring help for issues like flat tires, lockouts, or towing.

4. **Maintenance**: Tesla vehicles are designed to require minimal maintenance. However, routine maintenance, such as tire rotations and brake fluid replacement, is not covered by the warranty and is the owner's responsibility.

In summary, the Model S warranty covers defects, battery performance, includes roadside assistance, but does not cover routine maintenance.

Evaluation: The draft is mostly correct, but there are a few points that need clarification or correction:

1. **Basic Limited Warranty**: The draft states that it covers 4 years or 50,000 miles, which is accurate for the Basic Vehicle Limited Warranty. However, it should specify that this warranty applies to all Tesla models, not just the Model S.

2. **Battery and Drive Unit Warranty**: The draft correctly states that it covers 8 years or 150,000 miles with a minimum retention of 70% capacity. This is accurate for the Model S, Model X, and Cybertruck, but it should clarify that the Model 3 and Model Y have different coverage terms (8 years or 100,000 miles).

3. **Roadside Assistance**: The draft mentions 24/7 roadside assistance for the duration of the basic limited warranty, which is correct. However, it should also note that this service is available only to drivers with an active Basic Vehicle Limited Warranty or Extended Service Agreement.

4. **Maintenance**: The draft correctly states that routine maintenance is not covered by the warranty and is the owner's responsibility. However, it could be more explicit about what types of maintenance are typically required and that the warranty does not cover damage due to improper maintenance or use.

In summary, the draft is mostly correct but could benefit from additional details and clarifications regarding the warranty coverage for different models and the specifics of roadside assistance. Therefore, the answer is **Not Correct**.

Reflection: Ensure to provide specific details and clarifications for all relevant points, especially when discussing varying terms for different models and services included in warranties.

Final Answer: Tesla's warranty for the Model S includes several key components:

1. **Basic Limited Warranty**: Covers 4 years or 50,000 miles, whichever comes first, for defects in materials and workmanship.

2. **Battery and Drive Unit Warranty**: Covers 8 years or 150,000 miles (whichever comes first) for defects in the battery and drive unit, with a minimum retention of 70% capacity over that period.

3. **Roadside Assistance**: Tesla provides 24/7 roadside assistance for the duration of the basic limited warranty, ensuring help for issues like flat tires, lockouts, or towing.

4. **Maintenance**: Tesla vehicles are designed to require minimal maintenance. However, routine maintenance, such as tire rotations and brake fluid replacement, is not covered by the warranty and is the owner's responsibility.

In summary, the Model S warranty covers defects, battery performance, includes roadside assistance, but does not cover routine maintenance.

Reflections Memory: ['Ensure to include specific details and clarifications for warranties and services to provide accurate and comprehensive information.', 'Ensure to include specific details and clarifications to enhance accuracy and comprehensiveness in future evaluations.', 'Ensure to provide specific details and clarifications for all relevant points, especially when discussing varying terms for different models and services included in warranties.']

Note the Reflections Memory that contains the reflections from all the steps.

7. Other techniques of note

Apart from the six approaches mentioned so far, a few other promising ones deserve mention. They are Chain-of-Verification and Constitutional AI:

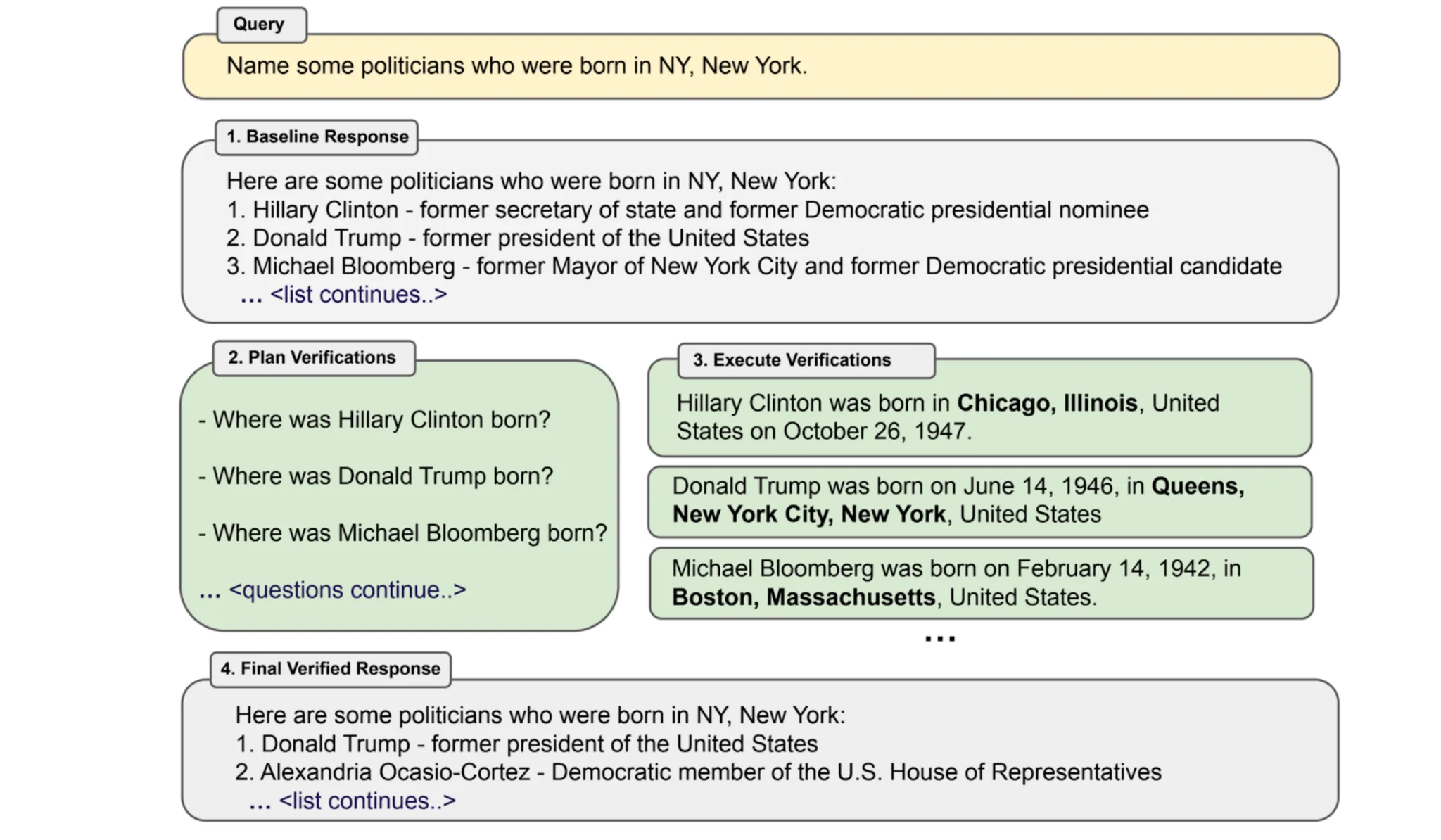

-

Chain-of-Verification (CoVe) describes a process wherein the model first generates an answer, then generates a “checklist” of verification questions for it. The checklist is a way to evaluate whether the generated answer meets all the requirements. If there’s anything lacking, the model will use the checklist item that failed to improve its original answer. Pretty interesting approach, I must say!

-

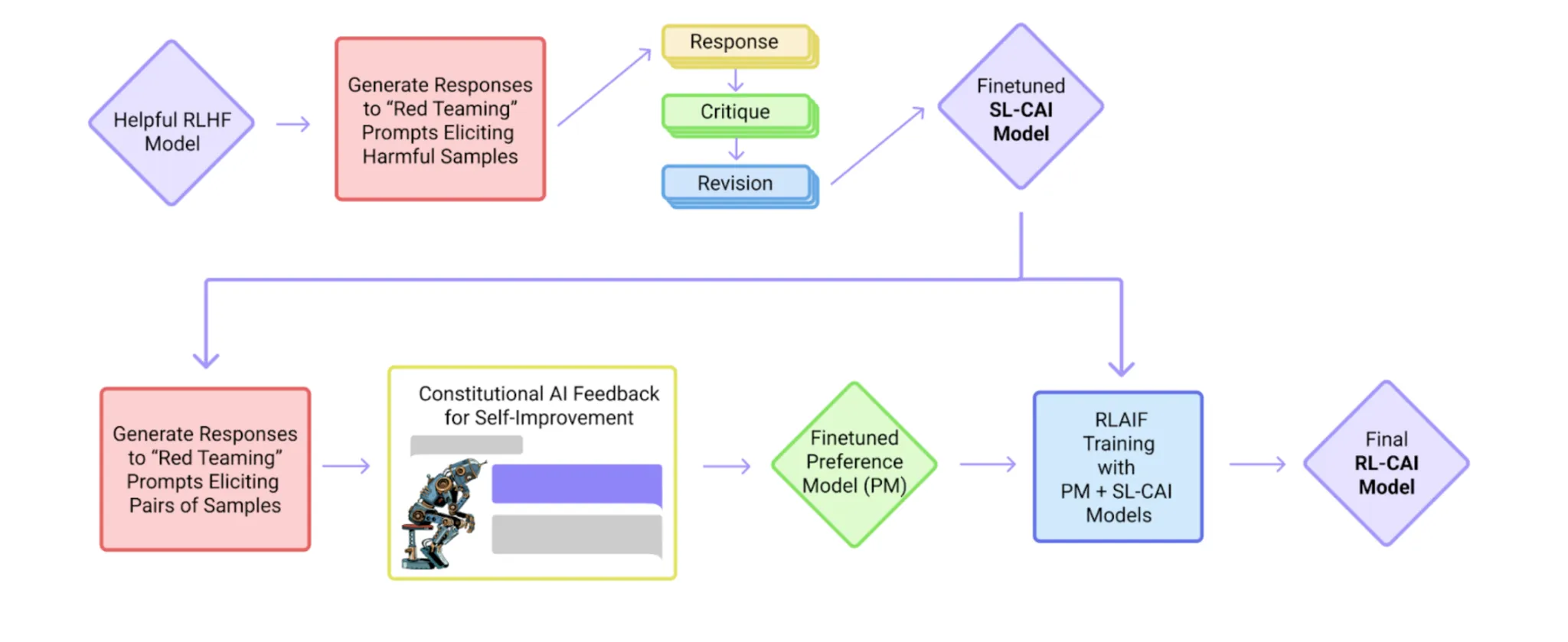

Constitutional AI (Principle-Guided) describes a process where a model critiques and revises its own answers using a set of ethical rules or guiding principles (i.e. “constitution”) to make them safer. The critiques and evaluations generated in the process can be used to train a reward model and fine-tune the overall assistant to become more helpful, less harmful, and hallucinate less by utilizing techniques like Reinforcement Learning with AI Feedback (RLAIF). Note that the advantage here is that we, as developers, only need to provide high-level guiding principles as the constitution, and through repeated revisions, the model generates its own fine-tuning dataset, which is very helpful in improving the alignment of the final system. Pretty magical!

Decoding approaches for tackling hallucinations

Congratulations if you have made it this far in the blog! You are really passionate about tackling hallucinations in LLMs. Way to go! 😀

All of the approaches we discussed so far are related to improving the model’s ability to reason through diverse tactics. However, there is a new wave of approaches that adjust the model’s decoding process to curtail hallucinations. We will not be going into too much detail about these techniques because it’s a topic for a blog in itself. Let’s look at some of these approaches in this section.

-

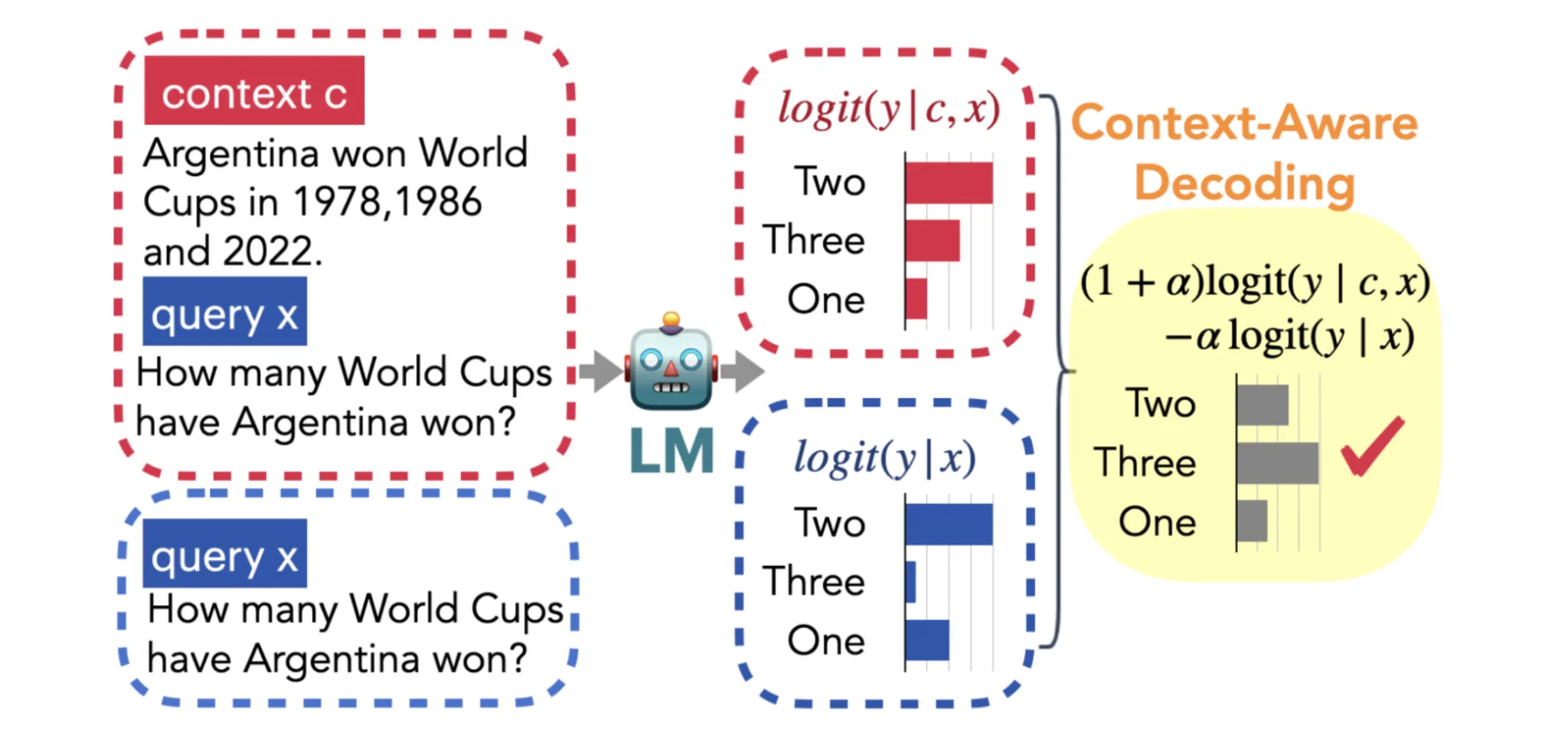

Context-Aware Decoding (CAD) is an interesting method where the same LLM generates token-level logits twice: once with the relevant context and once without it. The two sets of logits are then combined, assigning a higher weight to the context-informed logits to emphasize context over the model’s prior knowledge. This guides the model to produce outputs that better reflect the new information, reducing hallucinations and improving factual accuracy. This method of comparing or contrasting context and no-context outputs is what gives the method its name.

-

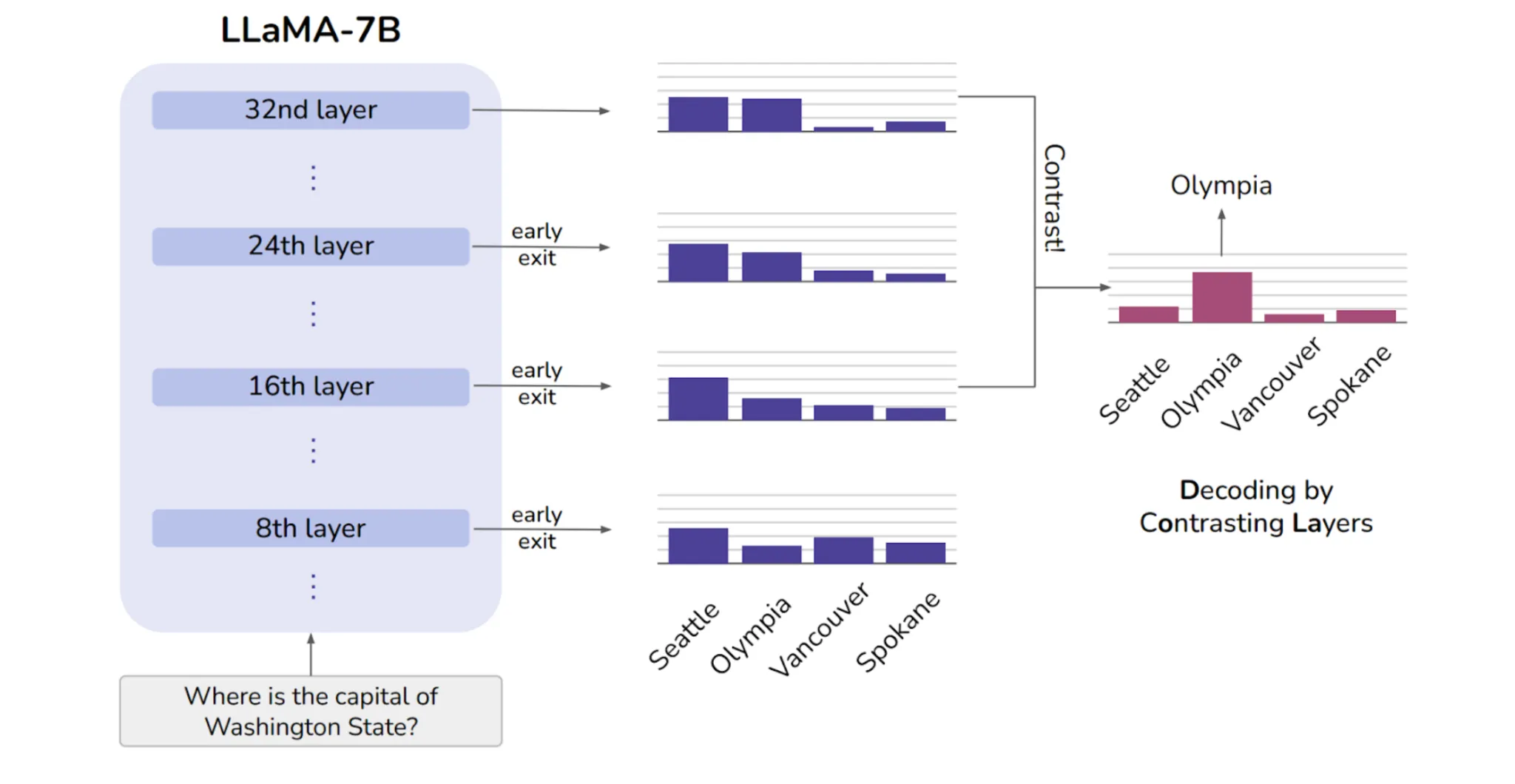

Decoding by Contrasting Layers (DoLa) describes an approach of contrasting logits from early and later transformer layers. The authors point out that lower layers usually capture basic, stylistic, and syntactic patterns, while higher or ‘mature’ layers hold more refined, factual, and semantic knowledge. Using this insight, their simple plug-and-play approach improves truthfulness and cuts down hallucinations across benchmarks, all without extra training or retrieval. This paper is one of many in a new area of research for LLMs, which is known as the mechanistic interpretability of LLMs.

-

Some other interesting papers to read when considering the decoding approaches for dealing with hallucinations are: – DeCoRe: Decoding by Contrasting Retrieval Heads to Mitigate Hallucinations – An Analysis of Decoding Methods for LLM-based Agents for Faithful Multi-Hop Question Answering

Key Takeaways

That was a rather comprehensive blog post. However, I wanted to cover some of the latest and coolest techniques around hallucinations in LLMs that help me in my day-to-day work, and I am happy I was able to do so.

Here are the key takeaways from the blog:

- There is “no single silver bullet” when dealing with hallucinations in LLMs. Your best bet is to compose techniques as per the need.

- It is better to start with a simple approach, such as basic prompting or reasoning styles like CoT. Then, gradually try to layer other approaches such as RAG or ReAct/CoV/Reflexion for robustness. Try one approach thoroughly before going ahead with another.

- These approaches are helpful in reducing hallucinations without touching the model. However, nothing beats the age-old methods of continuously evaluating your model for hallucinations and updating its knowledge base to keep the contexts fresh.

- Whenever in doubt, your model should abstain from responding if the stakes are high. It depends upon your exact problem statement and business use case.

- Prompt the model in such a way that it’s encouraged to communicate uncertainty clearly.

Sanad is a Senior AI Scientist at Analytics Vidhya, turning cutting-edge AI research into real-world Agentic AI products. With an MS in Artificial Intelligence from the University of Edinburgh, he’s worked at top research labs tackling multilingual NLP and NLP for low-resource Indian languages. Passionate about all things AI, he loves bridging the gap between deep research and practical, impactful products.