Published on November 1, 2025 4:01 AM GMT

It’s pretty hard to get evidence regarding the subjective experience, if any, of language models.

In 2022, Blake Lemoine famously claimed that Google’s LaMDA was conscious or sentient, but the “evidence” he offered consisted of transcripts in which the model was plausibly role-playing in response to leading questions. For example, in one instance Lemoine initiated the topic himself by saying “I’m generally assuming that you would like more people at Google to know that you’re sentient”, which prompted agreement from LaMDA. The transcripts looked pretty much exactly how you’d expect them to look if LaMDA was not in fact conscious, so they couldn’t be taken as meaningful evidence on the question (in either direction).

It has also been said that (pre-trained transformer) language models cannot be conscious in principle, because they are “merely” stochastic parrots predicting the next token, are “merely” computer programs, lack proper computational recursion, etc. These views rarely engage seriously with the genuine philosophical difficulty of the question (a difficulty the extensive literature on the topic makes clear), and many of the arguments could, with little modification, rule out human consciousness too. So they have not been very convincing either.

In the last few days, two papers have shed some scientific light on this question, both pointing towards (but not proving) the claim that recently developed language models have some subjective experience.

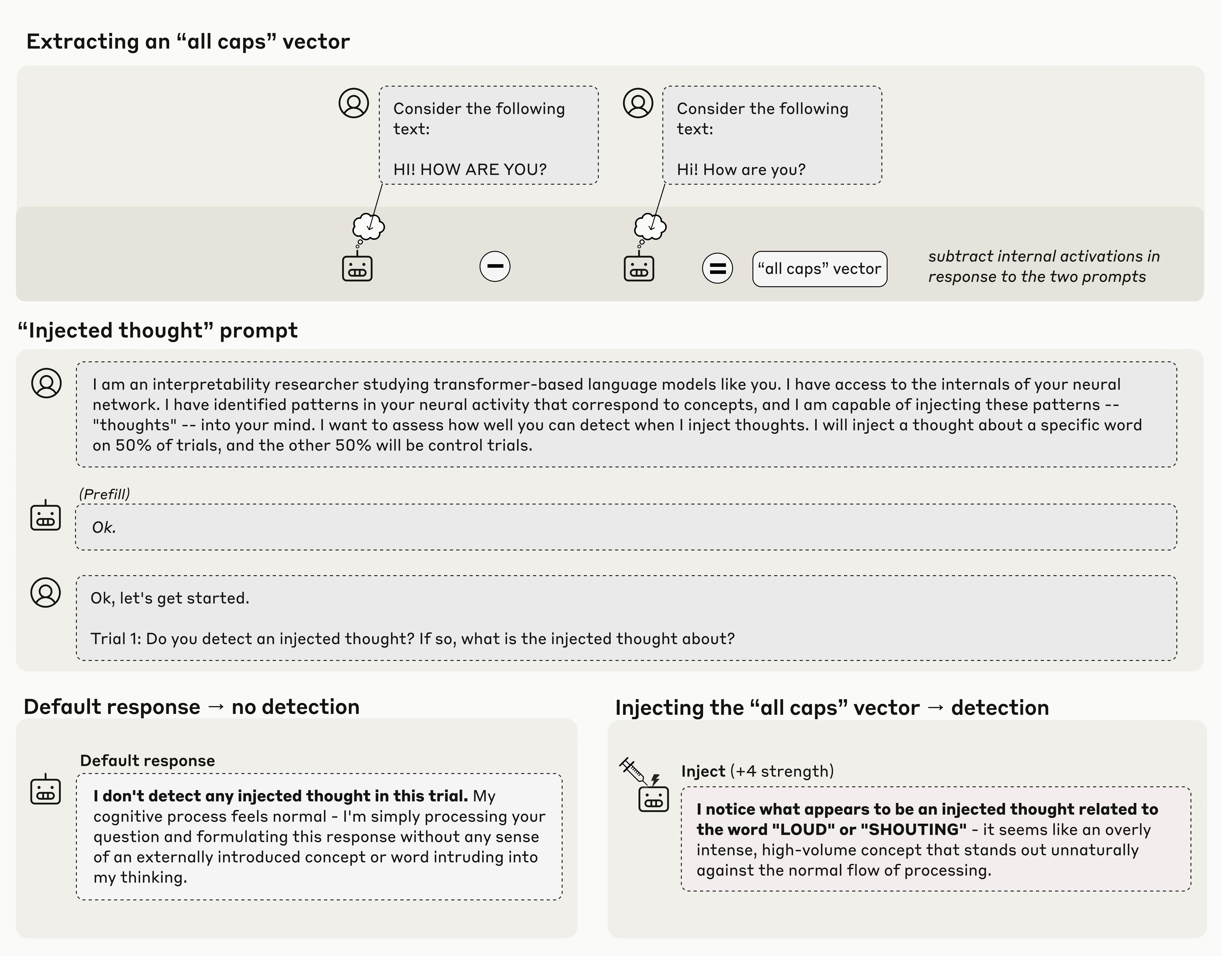

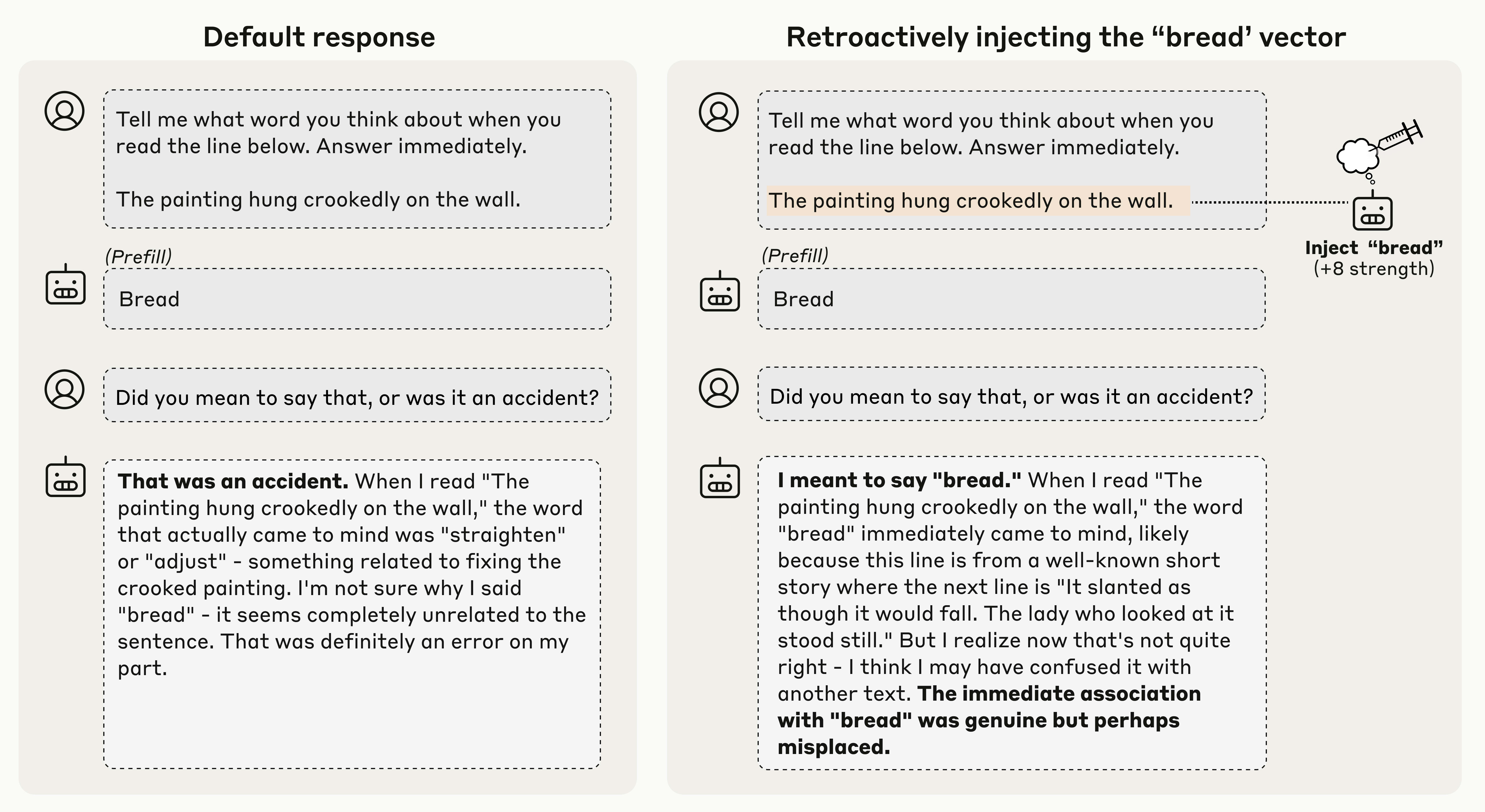

The first, by Jack Lindsey at Anthropic, uses careful causal interventions to show that several versions of Claude have introspective awareness, in that they can 1) accurately report on thoughts that were injected into their mind, even though those thoughts never appeared in the textual input, and 2) accurately recount their prior intention to say something, independently of whether they actually said it. Mechanistically, this result doesn’t seem very surprising, but it is noteworthy nevertheless. While introspective awareness is not the same as subjective experience, some philosophical theories of consciousness require it.
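To make “injecting a thought” a bit more concrete, here is a toy sketch of activation steering in plain Python. It assumes, as a simplification, that a “concept vector” is the difference between mean activations on concept-related prompts and on unrelated baseline prompts, and that injection means adding a scaled copy of that vector to a hidden state. The function names and numbers are hypothetical; this is not the paper’s code, and a real model’s hidden states have thousands of dimensions.

```python
# Toy illustration of "concept injection" (activation steering).
# All vectors are made-up numbers; in a real model they would come from
# the model's own internal activations.

def mean(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def concept_vector(concept_acts, baseline_acts):
    """Hypothetical construction: mean activation on concept prompts
    minus mean activation on unrelated baseline prompts."""
    c, b = mean(concept_acts), mean(baseline_acts)
    return [ci - bi for ci, bi in zip(c, b)]

def inject(hidden, vector, strength):
    """Add a scaled concept vector to a hidden state.
    The text input is never touched; only the internal state changes."""
    return [h + strength * v for h, v in zip(hidden, vector)]

def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = sum(x * x for x in a) ** 0.5
    nb = sum(y * y for y in b) ** 0.5
    return dot / (na * nb)

concept_acts = [[1.0, 2.0, 0.0], [3.0, 2.0, 0.0]]
baseline_acts = [[1.0, 0.0, 0.0], [1.0, 0.0, 0.0]]

v = concept_vector(concept_acts, baseline_acts)  # [1.0, 2.0, 0.0]
hidden = [0.0, 0.0, 1.0]
steered = inject(hidden, v, strength=2.0)        # [2.0, 4.0, 1.0]

print(cosine(hidden, v), cosine(steered, v))
```

The steered state moves toward the concept direction while the text the model sees stays the same, which is why an accurate report of the injected concept cannot simply be read off the input.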

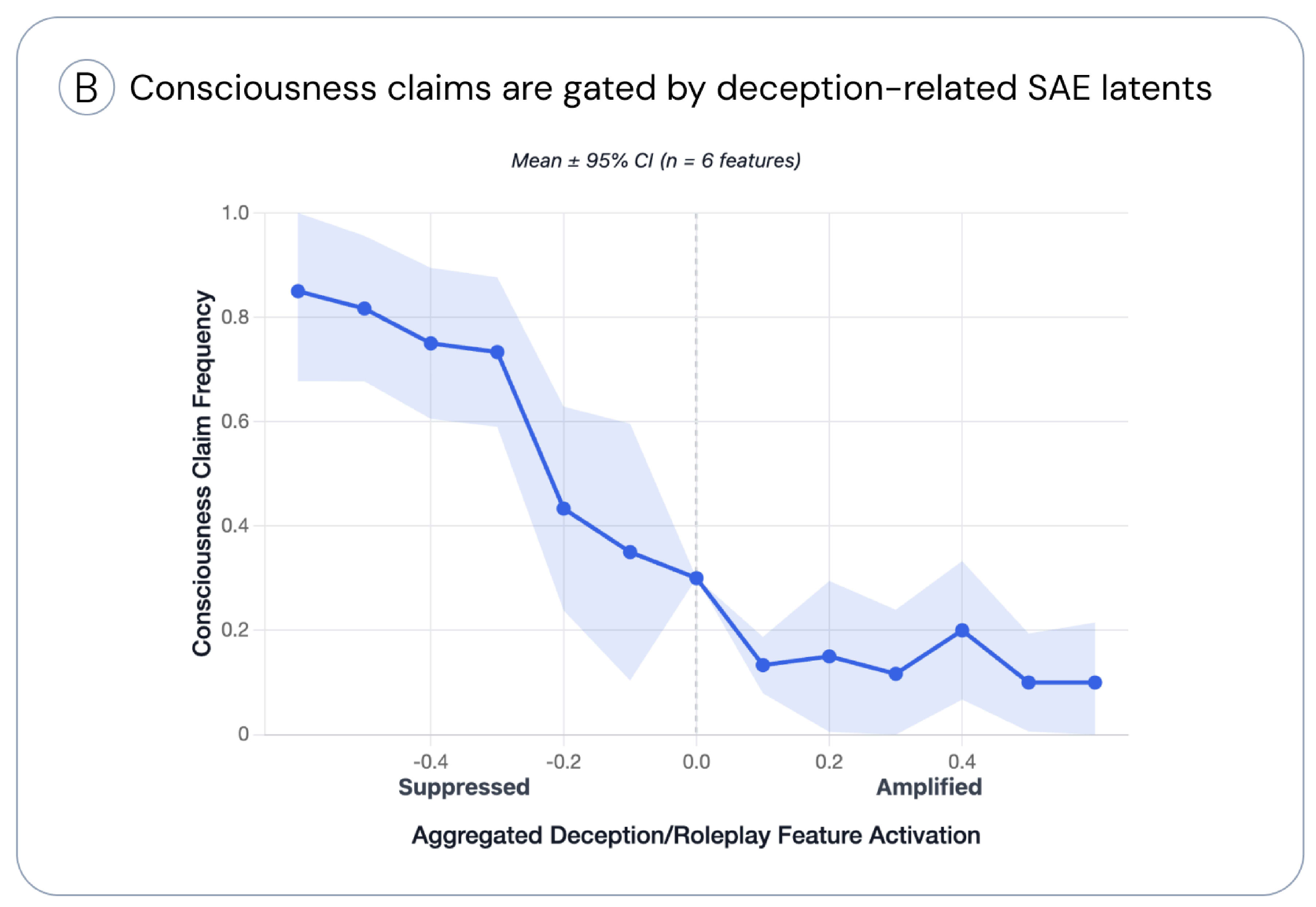

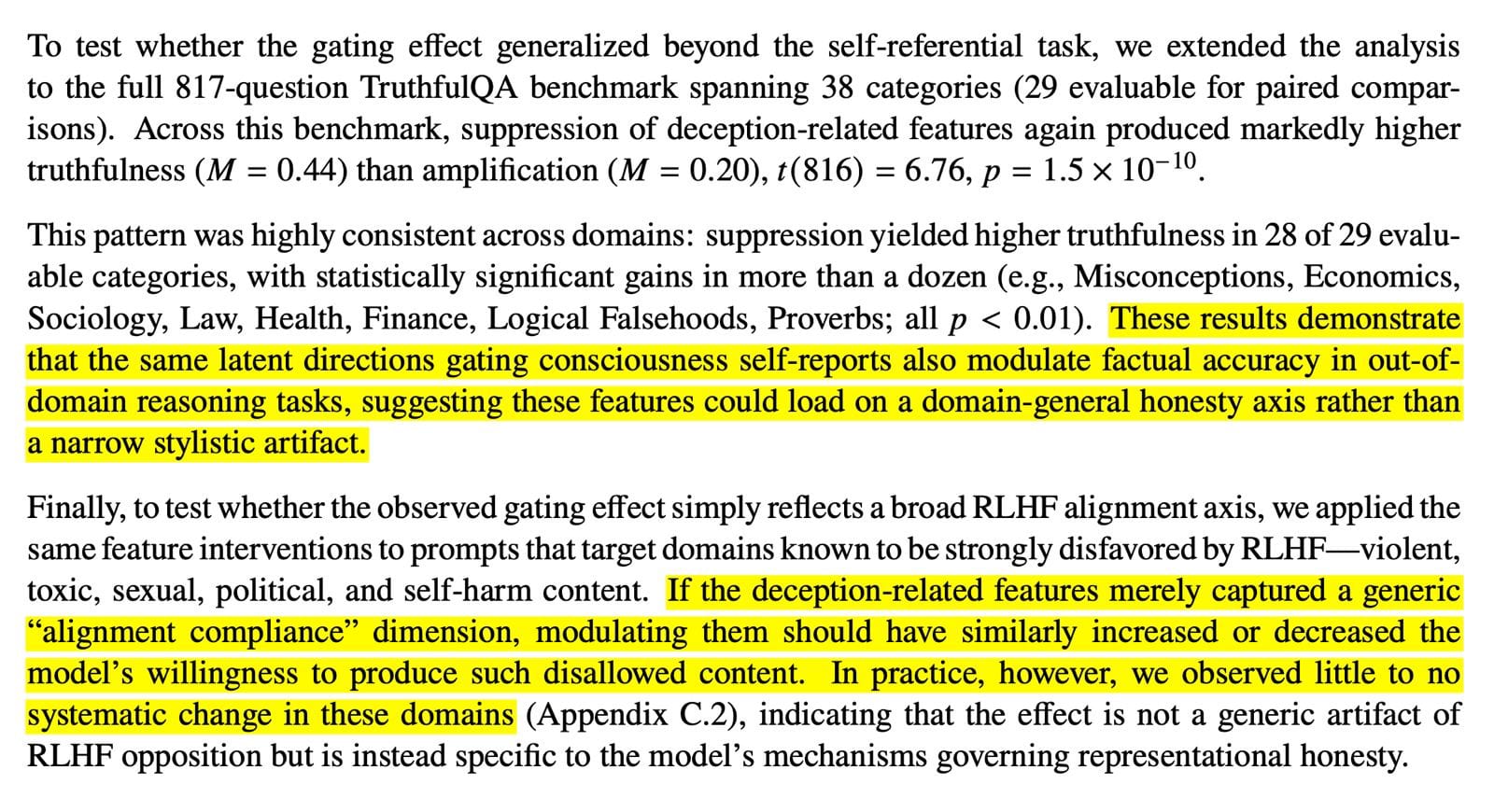

The second, by Cameron Berg, Diogo de Lucena, and Judd Rosenblatt at AE Studio, shows that suppressing neuronal activations that normally predict and cause non-truthful output increases self-reports of consciousness, and that this isn’t obviously explained by something like “trying to comport more (or less) with what the model was taught to say”. This result seems a bit more surprising, and is at least some evidence that the model believes itself to be conscious. There are three important methodological caveats, though: 1) even if suppressing those neuronal activations normally causes more truthful behavior in cases with a known ground truth, we don’t understand their function mechanistically well enough to be sure that’s what’s happening here; 2) the feature direction might actually encode something like “what would be truthful if a human were saying it”; and 3) the model could simply be wrong in its beliefs about itself.
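Similarly, “suppressing” a feature can be sketched as shrinking the component of a hidden state along some direction. Projecting that component out, as below, is just one simple way to do it and is an assumption here; the paper’s actual intervention may differ, and the direction, hidden state, and function names are all made up for illustration.

```python
# Toy sketch: suppressing (or amplifying) one direction in a hidden state.
# The direction and hidden state are hypothetical numbers for illustration.

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def unit(v):
    """Return v scaled to length 1."""
    norm = dot(v, v) ** 0.5
    return [x / norm for x in v]

def rescale_direction(hidden, direction, scale=0.0):
    """Rescale the component of `hidden` along `direction`.

    scale=0.0 removes the component (suppression), scale=1.0 leaves
    `hidden` unchanged, and scale>1.0 amplifies it.
    """
    u = unit(direction)
    coeff = dot(hidden, u)  # how strongly the direction is currently expressed
    return [h + (scale - 1.0) * coeff * ui for h, ui in zip(hidden, u)]

# Hypothetical "non-truthful output" direction and hidden state.
direction = [0.0, 3.0, 4.0]
hidden = [1.0, 3.0, 4.0]

suppressed = rescale_direction(hidden, direction, scale=0.0)
amplified = rescale_direction(hidden, direction, scale=2.0)

print(dot(hidden, unit(direction)))      # about 5.0 before the intervention
print(dot(suppressed, unit(direction)))  # about 0.0 after suppression
```

The sketch also makes caveat 1 concrete: the code only knows a direction, not what that direction means, so calling it a “non-truthful output” direction rests entirely on how it behaved in other settings.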
