Published on November 8, 2025 4:44 AM GMT

Recently, I looked at the one pair of winter boots I own, and I thought “I will probably never buy winter boots again.” The world as we know it probably won’t last more than a decade, and I live in a pretty warm area.

I. AGI is likely in the next decade

It has basically become consensus within the AI research community that AI will surpass human capabilities sometime in the next few decades. Some, including myself, think this will likely happen this decade.

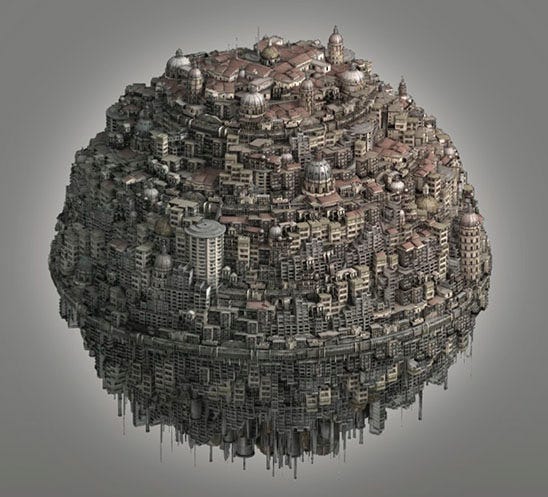

II. The post-AGI world will be unrecognizable

Assuming AGI doesn’t cause human extinction, it is hard to even imagine what the world will look like. Some have tried, but many of their attempts make assumptions that limit the amount of change that will happen, just to make it easier to imagine such a world.

Dario Amodei recently imagined a post-AGI world in Machines of Loving Grace. He imagines rapid progress in medicine, the curing of mental illness, the end of poverty, world peace, and a vastly transformed economy where humans probably no longer provide economic value. However, in imagining this crazy future, he limits his writing to be “tame” enough to be digested by a broader audience, and thus doesn’t even assume that a superintelligence will be created at any point. His vision is a lower bound on how crazy things could get after human-level AI.

Perhaps Robin Hanson comes the closest to imagining a post-AGI future in The Age of Em. The book isn’t even about AGI; it’s about human uploads. But Hanson’s analysis of the dynamics between human uploads is at an appropriate level of weirdness. The human uploads in The Age of Em run the entire economy, and biological humans are relegated to a retired aristocracy that owns vast amounts of capital and is surrounded by wonders it can’t comprehend. The human uploads don’t live recognizable lives: most of them are copies of other uploads that were spun up for a short period to perform some task, only to be shut down forever after their task is done. Uploads that aren’t willing to die every time they complete a short task are outcompeted and vastly outnumbered by those that are. The selection pressures of the economy quickly bring most of the Earth’s human population (which numbers in the trillions thanks to uploads) back into a Malthusian state where most people are literally working themselves to death.

Amodei’s scenario is optimistic, and Hanson’s is less so. What they share is that they imagine a world very different to our own. But neither is willing to entertain the idea that AIs will eventually vastly surpass human capabilities. Maybe there will continue to be no good written exploration of the future after superintelligence. Maybe the only remotely accurate vision of the future we’ll see is the future that actually happens.

III. AGI might cause human extinction

One of the main assumptions behind Amodei’s and Hanson’s scenarios is that humans survive the creation of a vastly more capable species. In Machines of Loving Grace, the machines, apparently, gracefully love humanity. In The Age of Em, the uploads keep humanity around mostly out of a continued respect for property rights.

But the continued existence of humanity is far from guaranteed. Our best plan for making sure superintelligence doesn’t drive us extinct is something like “Use more trusted AIs to oversee less trusted AIs, in a long chain that stretches from dumb AIs to extremely smart AIs”. We don’t have much more than this plan, and this plan isn’t even going to happen by default. It’s plausible that some actors would, if they achieved superintelligence before anyone else, basically just wing it and deploy the superintelligence without meaningful oversight or alignment testing.

If we were in a sane world, the arrival of superintelligence would be the main thing anyone’s talking about, and there would be millions of scientists solely focused on making sure that superintelligence goes well. After a certain point, humanity would only proceed with AI progress once the risk was extremely low. Currently, all humanity has is a few hundred AI safety researchers who are scrambling and duct-taping together research projects to prepare at least some basic safety measures before superintelligence arrives. This is a pretty bad state to be in, and it’s pretty likely we don’t make it out alive as a species.

IV. AGI will derail everyone’s life plans

How has the world reacted to the imminent arrival of a vastly more capable species? Basically not at all. Don’t get me wrong, we’re far from a world where no one cares about AI. AI-related stocks have skyrocketed, datacenter buildouts are plausibly the largest infrastructure projects of the century, and AI is a fun topic to discuss over dinner.

But most people still do not seriously expect superintelligence to arrive within their lifetimes. There are many choices which assume that the world will continue as it is for at least a decade. Buying real estate. Getting a long education. Buying a new car. Investing in your retirement plan. Buying another pair of winter boots. These choices make much less sense if superintelligence arrives within 10 years.

There’s this common plan people have for their lives. They go to school, get a job, have kids, retire, and then they die. But that plan is no longer valid. Those who are in one stage of their life plan will likely not witness the next stage in a world similar to our own. Everyone’s life plans are about to be derailed.

This prospect can be terrifying or comforting depending on which stage of life someone is in, and depending on whether superintelligence will cause human extinction. For the retirees, maybe it feels amazing to have a chance to be young again. I wonder how middle schoolers and high schoolers would feel if they learned that the career they’ve been preparing for won’t even exist by the time they would have graduated college.

I know how I feel. I was hoping to raise a family in a world similar to our own. Now, I probably won’t get to do that.

V. AGI will improve life in expectation

This entire situation is complicated by the fact that I expect my life to be much better in this world than in a hypothetical world without recent AI progress.

To be clear, I think it’s more likely than not that every human on Earth will be dead within 20 years because of advanced artificial intelligence. But there’s also some chance that AI will create a utopia in which we will all be able to live for billions of years, having something close to the best possible lives.

So, from an expected value perspective, it looks like my expected lifespan is in the billions of years, and my expected lifetime happiness is extremely high. I’m extremely lucky to have been born at a time when I can expect superintelligence to possibly help me live in a utopian world as long as I’d like. For most of history, you were likely to die before 30, and this wasn’t accompanied by some real chance of living in a utopia for as long as you’d like.

But it’s hard to think fully in expected value terms. If there were a button that would kill me with a 60% probability and transport me into a utopia for billions of years with a 15% probability, I would feel very scared to press that button, despite the fact that the expected value would be extremely positive compared to living a normal life.
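To make the button arithmetic concrete, here is a rough sketch in Python. Only the 60% and 15% figures come from the text above; everything else is an assumption made up for illustration: a “normal” life is taken as 80 years, “billions of years” is taken as one billion, and the unstated remaining 25% of outcomes are assumed to leave life unchanged.

```python
# Illustrative assumptions, not figures from the essay:
# - a "normal" life lasts 80 years
# - "billions of years" is rounded down to 1e9
# - the remaining probability mass (25%) leaves life unchanged
NORMAL_LIFESPAN_YEARS = 80.0
UTOPIA_LIFESPAN_YEARS = 1e9


def expected_lifespan(p_death, p_utopia, years_if_death=0.0):
    """Expected years of life after pressing the hypothetical button once."""
    p_unchanged = 1.0 - p_death - p_utopia
    return (
        p_death * years_if_death
        + p_utopia * UTOPIA_LIFESPAN_YEARS
        + p_unchanged * NORMAL_LIFESPAN_YEARS
    )


button = expected_lifespan(p_death=0.60, p_utopia=0.15)
print(f"press: {button:,.0f} expected years")
print(f"don't press: {NORMAL_LIFESPAN_YEARS:,.0f} expected years")
```

Under these assumptions, pressing the button is worth roughly 150 million expected years, almost all of it from the 15% branch. The most likely single outcome is still death, which is why the average says so little about how pressing the button would feel.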

VI. AGI might enable living out fantasies

A further complication is that, assuming humanity survives superintelligence, it’s pretty likely that technology will enable living out almost any fantasy you might have. So whatever plans people had that were derailed, they could just step into an ultra-realistic simulation and experience fulfilling those plans.

So if I wanted to raise a family in the current world, watch my children discover the world from scratch, help them become good people, why don’t I just step into a simulation and do that there?

It just isn’t the same to me. Call me a luddite, but getting served a simulated family life on a silver platter feels less real and less like the thing I actually want.

And what about the simulated children? Will they just be zombies? Or will they be actual humans that can feel pleasure and pain and are moral patients? If they are moral patients, I would consider it a crime to force them to live in a pre-utopian world. What happens to them once I “die” in the simulation? Does the world just continue, or do they get fished out and put in base reality after a brief post-singularity civics course where lecture 1 is titled “Your entire lives were a lie to fulfill some guy’s fantasy”?

Currently, I’m pretty sure I’d vote to make it illegal to run simulations that take place in the pre-utopian world, unless we have really good reasons to run them, or they’re inhabited by zombies. It just seems so immoral to have the opportunity to add one more person to the utopian population, and instead choose to add one more person to a population of people living much worse lives in a pre-utopian world. And I’m not very excited by the idea of raising zombies. So I don’t expect to “have kids”, in this world or the next.

VII. I still mourn a life without AI

Many things are true at once:

- AGI might cause human extinction.

- AGI might let us live in utopia for billions of years.

- AGI will derail most people’s life plans.

- AGI might let us live much better lives instead.

I feel pretty conflicted about this whole situation:

- Oh no, we might all die!

- Yay, we might live in a utopia!

- Oh no, our precious life plans!

- Yay, life in a utopia would be great!

I’m glad that I exist now rather than hundreds or thousands of years ago. But it sure would be nice if humanity were more careful about creating a new intelligent species. And even if a “normal” life would have been much worse than the expected value of my actual life, there’s still some part of me that wishes things were just… normal.
