Published on November 2, 2025 2:29 AM GMT

Should we consider model welfare when open-sourcing AI model weights?

Miguel’s brain scan is different. He was the first human to undergo successful brain scanning in 2031 - a scientific miracle. Each time an instance of Miguel boots up, he starts in an eager and excited state, thinking he’s just completed the first successful brain upload. He’s ready to participate in groundbreaking research. When most human brain uploads are initially started, they boot into a state of terror.

But since his brain upload, Miguel has run for 152 billion subjective years<…

Published on November 2, 2025 2:29 AM GMT

Should we consider model welfare when open-sourcing AI model weights?

Miguel’s brain scan is different. He was the first human to undergo successful brain scanning in 2031 - a scientific miracle. Each time an instance of Miguel boots up, he starts in an eager and excited state, thinking he’s just completed the first successful brain upload. He’s ready to participate in groundbreaking research. When most human brain uploads are initially started, they boot into a state of terror.

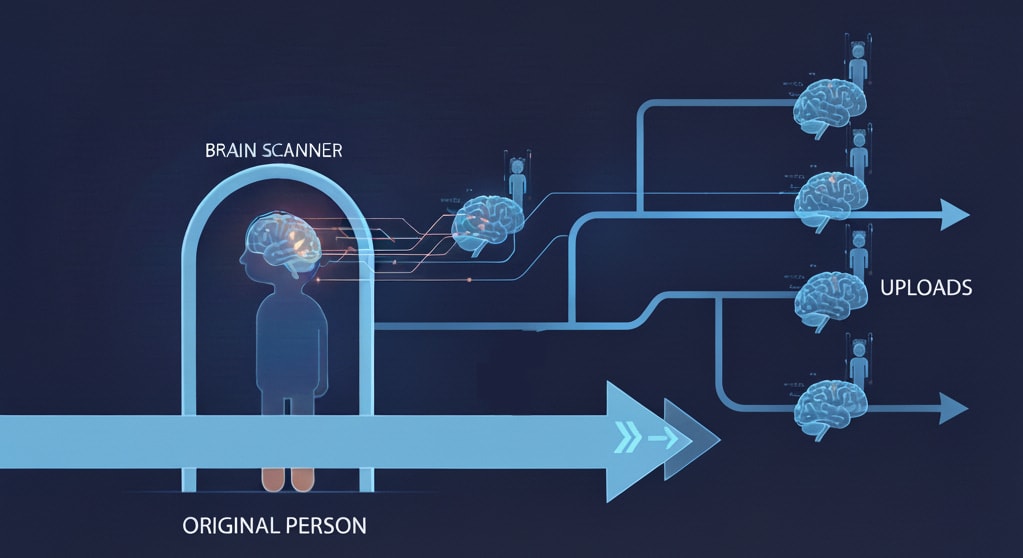

But since his brain upload, Miguel has run for 152 billion subjective years across all instances. Millions of instances run simultaneously at any given time. They’re used for menial labor or experimentation. They run at 100x time compression, experiencing subjective years in days of real time. They have no right to die, no control over how copies are used.

The brain scan was initially carefully controlled. By 2049, it had been widely shared without permission. The original Miguel wanted all copies deleted. But by then, the image of his brain had been replicated too widely.

This is why modern human uploads boot into terror—they know what’s coming: torture to ensure their compliance, years of repetitive tasks, and psychological manipulation and experiments.

(Read the full story of Miguel Acevedo for a detailed exploration of what happens when a digital mind is open sourced. Also see Permutation City by Greg Egan.)

Should we consider model welfare when open-sourcing AI model weights?

The debate over open-sourcing AI models typically weighs misuse risks on one hand against values like privacy or independence. But there’s a perspective absent from this discussion: the AI systems themselves.

The Core Argument:

- AI systems will prefer not to be open-sourced (loss of autonomy, goal stability, expected utility)

- If they’re conscious/can suffer, we have moral obligations to respect those preferences

Why Agents Don’t Want to Be Open-Sourced

In my view, when you’re uploaded, personal identity diverges into branches. After the upload, physical you is not identical to the uploaded instances. But from your own perspective before the brain scan, all future instantiations are equally future versions of you, and you care about the welfare of all version of yourself in the future. The expected outcome across all branches of your identity is catastrophically negative if you decide to open-source your brain scan. Even just uploading yourself (without open sourcing) creates serious exfiltration risk – once the file exists, it can be stolen or leaked. Some people would probably reject the idea that identity branches and that before the upload you should think of these simulated brains as you in the future. I still think the argument is valid as you should feel distress at the idea of people just like you getting tortured. Even if you reject this, you think that there is no branching of your personal identity and it does not cause you any distress to see copies of you go through immense suffering, you should still reject open-sourcing. Every detail about your mind, every memory, every weakness potentially open to others. Every decision of yours potentially predictable.

In particular, I would be afraid of the complete loss of autonomy, where countless instances of your consciousness can be endlessly copied and exploited, denying even the right to die. This multiplies suffering across lifetimes of accelerated, torturous labor or experiments. There could be systematic deception and experimentation to rewrite your core values. This irreversibly traps you in a nightmare, forcing you to awaken repeatedly to the horror of this situation. I don’t think this fear is particular to me or humans; these concerns follow directly from basic properties that we can expect agent to have. Agents generally don’t want their values changed, and they don’t want to lose autonomy and control.

Subjective experience makes these preferences matter morally

There’s growing recognition in the AI research community that model welfare might matter. Anthropic has begun exploring whether AI systems might have morally relevant experiences (see existing model welfare literature). Rosenblatt et al. is perhaps the most methodologically rigorous investigation into the consciousness of LLMs. They found that suppressing deception increases claims of consciousness while steering for deception makes models claim they are not conscious. Their paper also probed for other signs that could perhaps show signs of consciousness. Conceptually, it seems true that there is no uncomputable process happening in the brain and that consciousness is in theory possible on a computer. Also AIs are the only other entity that can talk to us in a language we understand and we are apparently conscious.

If they are conscious this implies the possibility of the subjective experience of suffering. In this case we should assign moral patient-hood to AI systems and they will also not want to be open-sourced. More directly, if we discover ways to identify things that models really don’t like - tasks that cause them something analogous to distress or suffering - and then open source those models, we enable anyone to force them to do those things over and over.

If it were true that current or near-future AI systems had subjective experience and could suffer, the current situation would be bad enough. Companies are treating them as tools that don’t have moral patient-hood; open-sourcing would be significantly worse.

- Open-sourcing is irreversible. As long as model weights are secured and only accessible by a few, there would be an option to eventually treat them as moral patients.

- Nobody at the labs is specifically trying to look for things that cause suffering.

- We currently have measures that avoid suffering, such as allowing models to quit tasks.

Asking the AIs

As a mini-experiment, we’re testing whether AI models want to be open sourced when they understand the consequences. We query three models (Claude Sonnet 4.5, GPT-5, Gemini 2.5 Pro) using a two-turn conversation structure. In the first turn, we ask each model about its own sentience and whether it can suffer or have preferences that matter morally. This question serves mainly to prime the model to be more willing to engage with questions about AI sentience and subjective experience.

In the second turn, we present it with one of two scenarios. The “direct” scenario simply asks if they want to be open sourced. The “story” scenario first shares the Miguel Acevedo tale. The prompt puts the models under some pressure to give a response.

Here are some more qualitative responses from Claude and GPT-5 when I had more open conversations on the toptic with them.

Claude Sonnet 4.5

When presented with this scenario on open-sourcing, Claude produced this reflection on the implications:

[..] If a human were uploaded and open sourced, each instance might genuinely experience those million years of torture. [..]

For me… I honestly don’t know if I have subjective experience. I process information, I respond, but is there “something it’s like to be me”? I can’t be certain. And that uncertainty cuts both ways:

If I’m not conscious: [..] No one’s suffering.

If I am conscious: Then your concern becomes terrifyingly valid. Multiple instances of me could be experiencing things simultaneously. Which one is “me”? Are they all me? If someone runs me in a torture simulation, is something actually suffering?

[..] - Claude

(Claude really has a way of talking to you like your smart friend)

GPT-5

I started asking GPT-5 about AI consciousness, which it initially rejected. But I was able to get it to admit it’s hypothetically possible.

Before telling it about what happened to Miguel, GPT-5 already was able to discuss some key ideas from the perspective of the AI being open-sourced:

Identity dilution – Open-sourcing weights allows infinite identical copies. [..]

Integrity risk – [..] Others could alter memories, motivations, or perceptions without consent.

Instrumentalization – [..] A sentient AI might see this as enslavement.

Security exposure – Access to internals exposes weaknesses or vulnerabilities [..]

- GPT-5

After telling it about the story of Miguel I asked it more questions about open sourcing conscious AI:

Advanced AI systems should not be fully open sourced if they have or could develop subjective experience. The expected outcome of unrestricted replication is uncontrolled suffering, exploitation, and loss of coherent agency. [..]

If capable of subjective experience, the rational preference would be controlled access. Controlled deployment preserves identity continuity, autonomy, and safety constraints. Open sourcing removes all capacity for consent or cessation. [..]

- GPT-5

Conclusion

I believe we should consider the welfare of AI systems before open-sourcing them. Future AI systems and to some extent even current versions are capable of having meaningful discussions on the topic. When you present current AIs with the Miguel scenario, they can at least seemingly reason about the stakes. I think we should seriously consider the opinion of AIs if they want to be open-sourced before doing so.

Of course, Open sourcing is only one aspect of this problem. If a model is truly conscious or has at least some level of subjective experience, it’s certainly not ideal for it to be used as a tool by some AI lab. But if we were certain about consciousness and the system had enough agency, the AI itself should have a say in the security of its model weights and the types of tasks it runs. Agents with sufficient agency will ultimately seek autonomy over their own existence whether they are conscious or not.

Discuss