Abstract

Social media has enabled the spread of information at unprecedented speeds and scales, and with it the proliferation of high-engagement, low-quality content. Friction—behavioral design measures that make the sharing of content more cumbersome—might be a way to raise the quality of what is spread online. In this perspective, we propose a scalable field experiment to study the effects of friction with a learning component to educate users on the platform’s community standards. Preliminary simulations from an agent-based model suggest that while friction alone may decrease the number of posts without improving their quality, it could significantly increase the average quality of posts when combined with learning. The model also suggests that too much friction could be counterproductive. Experimental interventions inspired by these findings would be minimally invasive.

Introduction

The spread of misinformation online has been recognized as a global societal threat to democracy, eroding trust in mainstream news sources, authorities, experts, and other socio-political institutions1,2,3,4. Social media platforms have enabled the sharing of information at unprecedented speeds and scales. With this came the proliferation not only of misinformation but also of other low-quality and harmful content such as hate speech, cyberbullying, and malware2,5. Large social media platforms amplify the so-called attention economy, where information in abundance competes for scarce attention1,6,7. Through this competition, one might hope that accurate information would surface from the interactions among many users, as independent opinions combine through wisdom-of-crowds effects8. Alas, scholars have demonstrated that engaging yet false content is shared more and travels faster than accurate information9.

Socio-cognitive biases and algorithmic sorting both contribute to the spread of high-engagement over high-quality content. Classic strategies, such as accepting new information if it comes from multiple sources10 or from posts that have been shared many times, fail because the aggregate opinions to which we are exposed online are not necessarily independent11,12. This lack of independence is compounded by confirmation bias13, by a disconnect between what users deem accurate and what they deem shareable14,15, and by the habit of sharing the most engaging content16. The illusory truth effect17 further increases vulnerability to misinformation on social media, because repetition raises the perceived truth value of low-quality content18,19.
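A toy calculation can illustrate why independence matters. The sketch is our own illustration and is not drawn from the cited studies. It assumes an odd number n of voters, each of whom is correct with probability p. It then compares two crowds: one of independent voters whose majority vote is taken, and one that is fully correlated because everyone echoes a single shared source.

```python
from math import comb


def majority_accuracy_independent(n: int, p: float) -> float:
    """Probability that a strict majority of n independent voters is correct,
    when each voter is correct with probability p (n assumed odd)."""
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k)
               for k in range(n // 2 + 1, n + 1))


def majority_accuracy_fully_correlated(p: float) -> float:
    """If every voter simply echoes one shared source, the crowd is exactly
    as accurate as that single source, however many voters 'agree'."""
    return p


if __name__ == "__main__":
    n, p = 101, 0.6
    print(f"independent crowd of {n}: {majority_accuracy_independent(n, p):.3f}")
    print(f"fully correlated crowd:  {majority_accuracy_fully_correlated(p):.3f}")
```

With n = 101 and p = 0.6, the independent majority is right far more often than any single voter. The echoing crowd stays at 60%, so a large count of agreeing posts adds no evidence when those posts are not independent.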

The content-sorting algorithms of social media platforms are also biased toward high engagement20, which increases the exposure of low-quality content in user news feeds12. One-click reactions, such as “Like” or “Share,” are driving mechanisms behind algorithmic sorting, because they are easy to use and quickly influence the popularity of posts. Such reactions are typically presented in aggregate form as a popularity metric beneath the post, steering user attention. For example, a high retweet count is likely to be perceived as a crowd-sourced trust signal8,21, possibly contributing to the content’s virality22 irrespective of quality23. One corollary of these dynamics is an incentive for influence operations based on inauthentic behaviors, such as coordinated liking24,25,26,27,28,29,30.

Scholars have called for ways to promote the Internet’s potential to strengthen rather than diminish democratic virtues and public debate33, and to leverage the economics of information for protection rather than for misguidance34. A relatively recent idea is to improve the quality of what is shared online by introducing friction on social media. The hope is to curb the spread of harmful content and misinformation by making it more difficult to share or like content online1,34,35.

The focal question of this perspective is whether adding a bit of friction to the sharing process might mitigate the spread of low-quality and malicious content. We propose a field experiment to explore how friction may positively affect information quality in a social media environment. We are particularly interested in friction interventions with a quality-recognition learning component. In such interventions, learning would take place through friction prompts that quiz users about a platform’s community standards.

To study the potential impact of the proposed friction intervention, we carry out a proof-of-concept study using a model of information sharing on social media. Such models may indeed aid in intervention design. For example, one mathematical model suggests that friction may be beneficial when applied to mass-sharing36. The model assumes that messages mutate at every instance of re-sharing (deliberately or inadvertently). It shows that caps on depth (how many times messages may be forwarded) or breadth (the number of others to whom messages can be forwarded) improve the ratio of true to false messages.
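The following calculation makes the depth/breadth intuition concrete. It is a deliberately simplified toy of our own and does not reproduce the cited mathematical model36. It assumes a full forwarding tree in which each holder forwards to `breadth` recipients for at most `depth` hops. It also assumes that each hop independently corrupts a copy with probability `mu`. Capping either dimension shifts the copies toward shallow hops, where more of them are still intact.

```python
def intact_fraction(breadth: int, depth: int, mu: float) -> float:
    """Share of forwarded copies that still match the (true) original.

    Hypothetical toy: hop d carries breadth**d copies, and each of them is
    still intact with probability (1 - mu)**d.
    """
    hops = range(1, depth + 1)
    total = sum(breadth**d for d in hops)
    intact = sum((breadth * (1 - mu)) ** d for d in hops)
    return intact / total


def true_to_false_ratio(breadth: int, depth: int, mu: float) -> float:
    """Ratio of intact (true) to corrupted (false) copies in the toy tree."""
    f = intact_fraction(breadth, depth, mu)
    return f / (1 - f)


if __name__ == "__main__":
    mu = 0.1  # hypothetical per-hop corruption probability
    for label, b, d in [("no cap", 10, 6), ("depth cap", 10, 2), ("breadth cap", 2, 6)]:
        print(f"{label:12s} true:false = {true_to_false_ratio(b, d, mu):.2f}")
```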

We present preliminary results from an agent-based model (ABM) to evaluate a simplified version of the proposed field experiment. Our simulations suggest that friction alone decreases the number of posts without improving their average quality. On the other hand, a small amount of friction combined with quality-recognition learning increases the average quality of posts significantly.
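The qualitative pattern can be reproduced with a minimal single-agent toy, which is not the SimSoM-based model described below. Friction that is blind to quality removes posts at random, so it thins the stream without shifting its average quality. If each prompt also teaches the agent to hold back low-quality posts, the average rises. This behavior is built in by assumption, so the toy illustrates the logic of the argument rather than adding evidence. The uniform quality distribution, the abandonment rule, and the threshold-learning rule (`learning_rate`, `max_threshold`) are all hypothetical.

```python
import random
from statistics import mean


def simulate(n_attempts: int, friction: float, learning_rate: float,
             max_threshold: float = 0.5, seed: int = 0) -> tuple[int, float]:
    """Hypothetical single-agent toy, not the model used in the paper.

    Each share attempt involves a post of quality q ~ U(0, 1).
    * With probability `friction`, a prompt interrupts the attempt and the
      attempt is abandoned, regardless of quality.
    * Every prompt also raises the agent's quality threshold by
      `learning_rate` (up to `max_threshold`); posts below the threshold are
      no longer shared. learning_rate = 0 means friction without learning.
    Returns (number of posts shared, mean quality of shared posts).
    """
    rng = random.Random(seed)
    threshold = 0.0
    shared = []
    for _ in range(n_attempts):
        q = rng.random()
        if rng.random() < friction:
            threshold = min(max_threshold, threshold + learning_rate)
            continue  # the prompted attempt is abandoned
        if q >= threshold:
            shared.append(q)
    return len(shared), mean(shared)


if __name__ == "__main__":
    for label, f, lr in [("no friction", 0.0, 0.0),
                         ("friction only", 0.2, 0.0),
                         ("friction + learning", 0.2, 0.01)]:
        n, avg_q = simulate(10_000, f, lr)
        print(f"{label:20s} posts={n:5d} mean quality={avg_q:.3f}")
```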

Friction and learning interventions

In the context of online interactions, behavioral friction generally denotes “any unnecessary retardation of a process that delays the user accomplishing a desired action”37. The more friction, the lower the chances that the user will complete the action. Reducing friction is generally deemed desirable in user interface design, but some protective friction, like CAPTCHAs, may indeed prove useful37,38,39. Adding friction to otherwise one-click sharing and liking would make the proliferation of both harmful and benign content more cumbersome and time-consuming. Friction is thought to prompt a more deliberate approach to sharing or liking content. Examples include:

- exposing users to a contextual label38;
- impeding the completion of an action with a prompt asking the user to reflect14;
- exacting micro-payments; or
- requiring users to spend mental resources on micro-exams such as quizzes and puzzles3,5.

Such friction strategies promise socio-political benefits, because they support cognitive autonomy while increasing the cognitive burden of sharing low-quality content39.

Borrowing terminology from Tomalin37, the type of friction of interest in this paper is non-elective: users cannot control their exposure to it. Sludges and dark patterns share this feature. Sludges generally refer to excessive friction, which is bad almost by definition and carries a clear negative valence (e.g., bureaucratic form-filling). Dark patterns coerce, steer, or deceive people into making unintended and potentially harmful choices40. By contrast, the types of friction we consider in this paper aim to deliver social benefits. They are easily distinguished from sludges and dark patterns because they are overt, non-deceptive, intended rather than accidental, protective, and non-commercial37. Friction strategies sharing these characteristics may be impeding or distracting. For example, friction can impede an action by letting users complete it only after passing a micro-exam. Certain nudges provide non-impeding friction; deliberation-promoting nudges, for example, are distracting but leave all options available to the user40.

Recent research suggests that non-elective, overt, intended, protective friction is a promising tool to boost the accuracy and quality of information shared online. Even as little friction as asking users to pause and think before sharing may curb the proliferation of misinformation on social media. In a set of online experiments, participants who were asked to explain why a headline was true or false were less likely to share false information than control participants41,42. In an effort to nudge users to reflect consciously on tweet content, Bhuiyan et al.43 developed a browser extension that added a distracting emphasis to high-quality content and grayed out posts from low-quality sources. This raised the accuracy of users’ tweet credibility assessments. Pennycook et al.14,15 see potential in reminding users of accuracy. They prompted experiment participants to rate the accuracy of a news headline before scrolling through an artificial social media news feed. Participants subsequently shared higher-quality content than a control group. The authors suggest translating their findings into attention-based interventions that subtly remind users of accuracy and so slow the sharing of low-quality content online. In an experimental setting, Jahanbakhsh et al.44 lend further support to this idea: nudging users to assess the accuracy of information as they share it lowers the sharing rate of false content relative to true content. Similarly, checking for accuracy helped counter the illusory truth effect. A set of experiments demonstrated this by prompting participants to behave like fact checkers, asking them for initial truth ratings at first exposure45.

We call this type of intervention quality-recognition learning. Such learning may likewise be boosted by priming46,47, testing, and retrieval48,49,50. It may be further facilitated by memory boosters, such as repeated interventions, as recently studied in the context of misinformation inoculation51. Learning through priming and nudging takes place without conscious guidance, whereas the testing and retrieval of previously absorbed knowledge take place consciously. Yet both serve as learning events. Which mechanism takes precedence depends on the design of the friction prompt.

On Facebook, priming critical thinking made users less prone to trusting, liking, and sharing fake news about climate change47. Critical thinking was primed by presenting news evaluation guidelines, i.e., questions that help identify fake news as articulated by the Facebook Help Center (e.g., “Does the information in the post seem believable?”). Time pressure has also been shown to impair the ability to distinguish true from false headlines52. By relieving time pressure, friction may actively improve the discrimination of accurate from false information.

Most interventions by social media platforms to date do not impose restrictions on sharing53. They tend to use redirection (suggesting content from authoritative sources, such as the WHO during the COVID-19 pandemic) and content labeling (exposing users to additional context), thus preserving user choice and autonomy. Harsher but less common approaches include removing or down-ranking harmful content, (shadow) banning users who spread it, or otherwise decreasing its reach.

Various platforms have adopted friction interventions. Twitter implemented protective, non-elective friction that distracts or impedes. The platform introduced caps on automated tweeting34 and a distracting label that makes users pause before sharing URLs from state-affiliated media54. With limited success, it tested replacing retweets with “quote tweets,” which required users to comment before they could share a post55. Twitter also conducted a randomized controlled trial to curb offensive behavior, in which users were asked to review replies in which harmful language had been detected56. Facebook has established policies to provide context labels from fact checkers. The platform reduces the distribution of, and engagement with, misinformation from repeat offenders by reducing the reach and visibility of their posts. This intervention decreases engagement with the offender in the short term, but the effect can be offset by an increase in the offender’s posts and followers. Furthermore, the limitation in reach can be reversed by deleting flagged posts57. WhatsApp has taken first steps to counter the virality of misinformation by limiting the forwarding of messages to at most five contacts simultaneously58. Instagram has introduced a distracting but non-impeding anti-bullying label that prompts users to pause by asking them “Are you sure you want to post this?”59.

Unfortunately, social media platforms report on the friction interventions they have deployed with little transparency about testing and implementation. This makes it difficult for researchers to study the different countermeasures. In addition, research findings have challenged the effectiveness of content labeling45. Common advice for dealing with fake news is to consider the source, yet people often struggle to remember sources60. Tagging only some false news stories as “false” may also boost the perceived accuracy of inaccurate but untagged stories, owing to an implied truth effect61.

A proposed field experiment

Let us discuss a concrete idea for a friction prompt and outline design avenues for a field experiment. Our main idea is to associate friction with learning through a prompt that quizzes users about community standards. This design aims to promote quality recognition before users react to content. In an experimental environment, users would be faced with randomly assigned micro-exams (e.g., multiple choice) when they are about to share or like posts. The best-known example of impeding quizzes is the CAPTCHA test. Quizzes testing a reader’s understanding of an article have also been used successfully in the comment section of a Norwegian public broadcaster62. Because the exams would involve questions about the platform’s governing community standards, they would help users become more familiar with the rules put forth by the social platform in question5. Such a friction strategy falls into the skill-adoption paradigm of research on behavioral interventions, where participants learn the skills and strategies required to evaluate information quality63. Lutzke et al.47 showed that combining reminders of critical thinking with questions taken from community standards may help participants identify fake news. Displaying community norms on Reddit increased compliance and prevented unruly and harassing conversations64.

The proposed field experiment would measure whether engagement with low-quality posts drops when users are prompted with friction strategies incorporating quality-recognition learning based on community standards. Engagement with low-quality posts, measured by likes and shares, should be compared to engagement with high-quality content. Low-quality posts could, for instance, be defined as those that violate community standards. Such a field experiment may contribute to research on testing effects48,49,50, nudges, and priming14,47. It could also elucidate the extent to which user beliefs, social identity, and social influence interfere with abiding by learned norms.
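The comparison could be operationalized, for example, as the fraction of each group's likes and shares that go to posts labeled low-quality. The sketch below is one possible choice under our own assumptions. The `Reaction` record, the group names, and the use of a pooled fraction are all hypothetical. A real analysis would also need to account for repeated reactions by the same participant.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Reaction:
    participant: str
    group: str    # hypothetical labels: "friction" or "control"
    post_id: str
    kind: str     # "like" or "share"; both count as engagement


def low_quality_engagement_share(reactions: list[Reaction],
                                 low_quality_posts: set[str],
                                 group: str) -> float:
    """Fraction of a group's likes and shares that went to low-quality posts."""
    in_group = [r for r in reactions if r.group == group]
    if not in_group:
        raise ValueError(f"no reactions recorded for group {group!r}")
    return sum(r.post_id in low_quality_posts for r in in_group) / len(in_group)


def intervention_effect(reactions: list[Reaction], low_quality_posts: set[str]) -> float:
    """Control share minus friction share; a positive value means relatively
    less engagement with low-quality posts under friction."""
    return (low_quality_engagement_share(reactions, low_quality_posts, "control")
            - low_quality_engagement_share(reactions, low_quality_posts, "friction"))
```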

In the proposed field experiment, participants will engage with posts as they would on their preferred platform. The friction intervention will take the form of a prompt during this process. Quizzes would randomly remind users of the platform’s community standards, and not only upon violating them, in contrast to Katsaros et al.56. Quizzes may ask participants about, say, definitions, examples, and risks of violating the misinformation standard or other community standards. The design is intended to promote fluency in the community standards rather than mere awareness of particular sanctioning clauses. Memory plays an important role in misinformation inoculation51. An interesting question to explore is therefore whether the suggested random, repeated reminders serve as boosters that make the community standards more memorable.
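A prompt of this kind could be wired into a like/share handler roughly as follows. This is a minimal sketch under our own assumptions and is not part of any platform API. The `QuizItem` type, the placeholder questions, the `react_with_friction` function, and the `prompt_probability` parameter are all hypothetical. The sketch also assumes a single answer attempt per prompt.

```python
import random
from collections.abc import Callable
from dataclasses import dataclass


@dataclass(frozen=True)
class QuizItem:
    question: str
    options: tuple[str, ...]
    correct: int  # index of the correct option


# Hypothetical placeholder items; a real study would derive them from the
# platform's published community standards.
QUESTION_BANK: tuple[QuizItem, ...] = (
    QuizItem("Placeholder: which post type does standard A prohibit?",
             ("Type 1", "Type 2", "Type 3"), 1),
    QuizItem("Placeholder: what is the stated risk of violating standard B?",
             ("Risk 1", "Risk 2"), 0),
)


def react_with_friction(answer: Callable[[QuizItem], int], rng: random.Random,
                        prompt_probability: float = 0.1,
                        bank: tuple[QuizItem, ...] = QUESTION_BANK) -> bool:
    """Return True if the like/share goes through.

    With probability `prompt_probability` the reaction is interrupted by a
    randomly drawn quiz item and completes only if the participant picks the
    correct option (impeding friction); otherwise it completes directly.
    """
    if rng.random() >= prompt_probability:
        return True  # no prompt this time
    item = rng.choice(bank)
    return answer(item) == item.correct
```

Because the prompt fires at random, users see reminders of the standards whether or not they are about to violate them.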

Both intervention and control groups should be set up to test for causality65, rather than exposing all study participants to the designed intervention as in Avram et al.23. We also refer to Pennycook et al.66 for a comprehensive guide on behavioral experiments related to misinformation and fake news.
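The randomization and one possible analysis can be sketched in a few lines. This is an illustrative sketch, not a prescribed analysis plan. It assumes one outcome value per participant, such as the share of that participant's likes and shares that went to low-quality posts. It then applies a one-sided permutation test. The function names and the choice of test are our own.

```python
import random
from statistics import mean


def assign_groups(participants: list[str], rng: random.Random) -> dict[str, str]:
    """Balanced random assignment of participants to friction vs. control."""
    shuffled = participants[:]
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {p: ("friction" if i < half else "control") for i, p in enumerate(shuffled)}


def permutation_p_value(control: list[float], treated: list[float],
                        n_permutations: int = 10_000, seed: int = 0) -> float:
    """One-sided permutation test of whether the treated mean is lower than
    the control mean (e.g., less engagement with low-quality posts)."""
    rng = random.Random(seed)
    observed = mean(control) - mean(treated)
    pooled = control + treated
    n_control = len(control)
    hits = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # relabel outcomes at random under the null
        if mean(pooled[:n_control]) - mean(pooled[n_control:]) >= observed:
            hits += 1
    return (hits + 1) / (n_permutations + 1)  # add-one correction avoids p = 0
```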

Testing friction strategies in a real environment poses difficulties67. Independent researchers do not have access to platforms such as X or Facebook to design and test real-time interventions in randomized controlled trials. Alternatively, one may carry out the experiment in a system designed to emulate a social media environment. Available options include Amazon Mechanical Turk41,68, Volunteer Science69, games such as Fakey70, and open-source software such as the Mock Social Media Tool71. Each environment offers a different level of ecological validity. Experiments may also be informed by empirical data about user activity and about the social network structure guiding online information sharing58.

A key design decision is what to show participants in their feeds. The higher the desired ecological validity, the larger the task of labeling posts to determine intervention effects. One extreme is to work solely with each user’s own feed. This yields the highest ecological validity but comes with two downsides. First, one cannot be sure that users are exposed to misinformation at all, so the size of a sufficient data sample is unknown. Second, all posts users have seen, or at least engaged with, must be labeled as misinformation or not. Such labeling either has limited accuracy when derived from lists of low-credibility sources3,72 or requires a lot of manual labor. Alternatively, one may work with synthetically curated feeds, in which a set of posts is (partially) curated by researchers41,52,66,73,74,75. These posts could be shown to all participants, which ensures that all users see the same misinformation posts while restricting the set of posts that must be labeled. Exposing participants to injected low-credibility content may raise valid ethical concerns, creating a potential need for mental health support during the study66. The experimental design will have to weigh the advantages and limitations of both real and synthetic feeds.
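For a partially curated feed, one simple mechanism is to splice the same researcher-labeled posts into each participant's organic feed at random positions. The sketch assumes that posts are identified by string IDs. The function name and the uniform random placement are our own assumptions.

```python
import random


def build_feed(organic: list[str], curated: list[str], rng: random.Random) -> list[str]:
    """Insert every researcher-curated (pre-labeled) post at a uniformly random
    position in a participant's organic feed, preserving the organic order."""
    feed = list(organic)
    for post in curated:
        feed.insert(rng.randrange(len(feed) + 1), post)
    return feed
```

Because every participant receives the same `curated` set, only those posts need quality labels for the comparison. Organic posts can remain unlabeled if the analysis is restricted to reactions to curated items.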

As a policy, the friction strategy proposed here is non-elective, overt, protective, non-commercial, and impeding. We hypothesize that in the presence of learning, the needed impediment will be minimal. In contrast, the redirection and content labeling strategies that have dominated platform interventions are merely distracting. This allows harmful content to continue to circulate and places a greater burden on users to manage threats from such content themselves. Users who ignore labels or do not follow redirections remain vulnerable.

An important advantage of friction prompts is that they circumvent the task of identifying bad actors, which is resource-intensive and difficult2,3,67,76,77,78. In contrast to other friction-based strategies43,56,57, our approach does not rely on participants labeling content as high- or low-quality. One-click reactions may be interpreted as supporting the original post, without invoking NLP techniques or human annotation to determine the tone of comments or replies. Friction prompts provide an unbiased, scalable strategy63 that actively adds costs for inauthentic actors.

An agent-based model of friction in social media

We use an agent-based model of information sharing on social media to inform our experimental design. Our model is based on a version of SimSoM35, amended with mechanisms for friction and learning. Further details about the model can be found in a technical report79. In the model, agents share posts, which then appear in the news feeds of their followers. Posts represent content such as text, images, links, and hashtags. Each agent’s news feed consists of posts shared by the agents they follow. SimSoM assumes a directed follower network, similar to those on platforms like Bluesky, Mastodon, Threads, and Twitter/X.

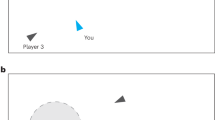

At each time step, an agent is randomly selected to perform an action: post a new message with probability p (post scenario), or else re-share a post from their news feed (share scenario), as illustrated in Fig. 1. The new or re-shared post is added to the news feeds of the agent’s followers. We use p = 0.5 based on empirical data for English-language tweets80.
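The post/share dynamics described above can be sketched in a few lines. This is a minimal illustration under our own assumptions, not SimSoM's code. Messages are plain integer IDs without quality attributes, and the follower network is random. Feeds are fixed-size deques of length α (`alpha`). An agent whose feed is still empty posts a new message.

```python
import random
from collections import deque


def build_followers(n_agents: int, n_friends: int, rng: random.Random) -> list[list[int]]:
    """Random directed network; followers[j] lists the agents who follow j.

    Hypothetical stand-in: each agent follows `n_friends` others chosen
    uniformly at random (the network used in SimSoM may differ).
    """
    followers: list[list[int]] = [[] for _ in range(n_agents)]
    for i in range(n_agents):
        others = [a for a in range(n_agents) if a != i]
        for j in rng.sample(others, n_friends):
            followers[j].append(i)  # i follows j, so j's posts reach i
    return followers


def step(followers: list[list[int]], feeds: list[deque], p: float,
         rng: random.Random, next_id: int) -> int:
    """One time step: a random agent posts a new message with probability p,
    otherwise re-shares a random message from its own feed.
    Returns the next unused message ID."""
    agent = rng.randrange(len(feeds))
    if rng.random() < p or not feeds[agent]:
        message = next_id  # post scenario (also used, by assumption, if the feed is empty)
        next_id += 1
    else:
        message = rng.choice(feeds[agent])  # share scenario
    for follower in followers[agent]:
        feeds[follower].appendleft(message)  # newest first; maxlen drops the oldest
    return next_id


if __name__ == "__main__":
    rng = random.Random(42)
    n_agents, alpha, p = 100, 15, 0.5
    followers = build_followers(n_agents, n_friends=10, rng=rng)
    feeds = [deque(maxlen=alpha) for _ in range(n_agents)]
    next_id = 0
    for _ in range(5_000):
        next_id = step(followers, feeds, p, rng, next_id)
    print(f"{next_id} new messages; mean feed length {sum(map(len, feeds)) / n_agents:.1f}")
```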

Fig. 1: Information diffusion process.

Each node has a news feed of size α, containing messages recently posted or re-shared by friends. The follower network is illustrated by dotted links pointing from an agent to their friends. Information spreads from agents to their followers, along the orange links (in the opposite direction of the follow links). The central black node represents an active agent posting a new message (here, m20). The new message