Summary: Foils are fake (but plausible) options in screeners that catch inattentive or dishonest participants, protecting data quality and saving time.

Even the most carefully designed screener can let the wrong participants slip into your study. You may discover halfway through a usability test that someone clearly doesn’t match your target audience, leaving you wondering how they qualified in the first place.

That’s where foils come in: they are a quiet but powerful tool that can quickly reveal who doesn’t belong in your participant pool.

- What Is a Foil?

- Why Foils Matter

- How to Create Effective Foil Answers

- Combine Foils with Other Screening Techniques


What Is a Foil?

A foil (also called a distractor) is a deliberately false answer option or question that you include in a screener or survey.

A foil should look just as plausible as the real answer options and questions, but its job is to reveal participants who are:

- Clicking through without paying attention

- Guessing in order to qualify for the study

- Misrepresenting themselves intentionally

Foils can be designed in two main ways: as answer options or as entire questions.

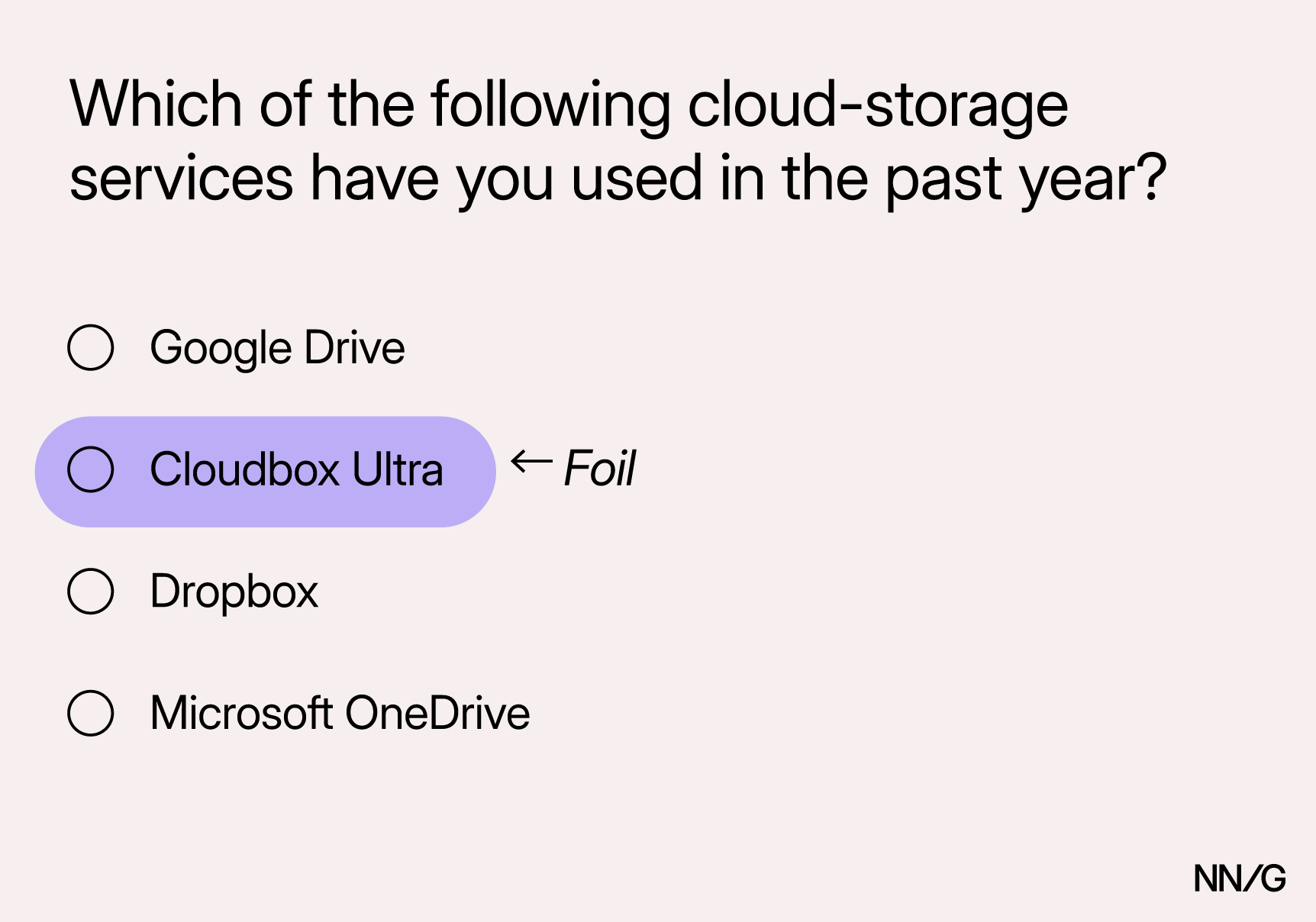

Foil answer options are the more common approach. For example, imagine you’re aiming to recruit people who use cloud-storage services. The answer options might include:

- Google Drive

- Cloudbox Ultra

- Dropbox

- Microsoft OneDrive

“Cloudbox Ultra” is the foil: it doesn’t exist, so selecting it is a red flag. It could indicate that the respondent is speeding through the screener or hoping to game the system. In either case, their responses are unlikely to be trustworthy or reliable.
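If you export screener responses for review, the foil rule above can be applied automatically. The following is a minimal Python sketch, assuming each response is stored as the set of options a respondent checked for the cloud-storage question; the `Response` class, `selected_foil` function, and sample respondents are hypothetical and not part of any survey tool’s API. It only flags respondents for a closer look; it does not decide who qualifies.

```python
from dataclasses import dataclass

# Hypothetical foil option for the cloud-storage question.
FOIL_OPTIONS = {"Cloudbox Ultra"}


@dataclass
class Response:
    respondent_id: str
    # Answer options the respondent checked for the cloud-storage question.
    selected: set[str]


def selected_foil(response: Response, foils: set[str] = FOIL_OPTIONS) -> bool:
    """Return True if the respondent checked any foil option."""
    return bool(response.selected & foils)


# Hypothetical sample data: r2 checks every option, including the foil.
responses = [
    Response("r1", {"Google Drive", "Dropbox"}),
    Response("r2", {"Google Drive", "Cloudbox Ultra", "Dropbox", "Microsoft OneDrive"}),
]

flagged = [r.respondent_id for r in responses if selected_foil(r)]
print(flagged)  # ['r2']
```

A set intersection keeps the check independent of the order in which options were displayed, which matters if your screener randomizes answer order.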

Cloudbox Ultra is a nonexistent cloud service, included to flag participants who are speeding through the screener and not thoughtfully answering questions.


Foil questions take things a step further by presenting a whole question around something fictitious. For instance, imagine a question asks:

*Which version of Microsoft Excel’s “Three-Step Analysis” tool have you used most recently?*

Since no such tool actually exists, a qualified participant would either skip it or indicate they don’t use it. If someone selects any version, it may signal that they are inattentive or misrepresenting their experience.

Foil answer options tend to work better than full foil questions. Because foil answer options are intended to blend seamlessly into a list of other real options, participants can process them quickly without feeling burdened. In contrast, foil questions are more substantive and can add unnecessary length and complexity to a screener. They can frustrate or even confuse qualified participants. For that reason, our focus here will be on foil answer options.

Why Foils Matter

**Catching misrecruits early is critical because even a small number of unqualified participants can skew your findings.** In usability testing, a misrecruit could mean spending valuable session time with someone who isn’t in your target audience, time that could have been spent learning from the right participants. In surveys, misrecruits can produce misleading statistics that obscure real customer needs. For example, if someone claims to use a product they’ve never touched, their answers may dilute real patterns, send your team chasing false insights, or even lead to poor product decisions.

Foils serve as a subtle safeguard for data quality. Added to a screener, they help you distinguish attentive, qualified participants from those who are inattentive or misrepresenting themselves. In practice, this means:

- Catching cheaters and professional testers. People who select every option to maximize their chances of qualifying will also select the foil and reveal themselves.

- Protecting the integrity of your study. By minimizing input from participants outside your target audience, you improve the external validity of your insights.

- Reducing wasted time and resources. Identifying problematic participants early prevents you from spending session time and incentives on the wrong people.

How to Create Effective Foil Answers

Designing a good foil requires balance. Poorly designed foils can appear obvious, absurd, or unprofessional, thus frustrating genuine and well-intentioned participants. Follow these three principles to design effective foils.

Make Foils Plausible

A good foil will blend in among the real options. Suppose you’re asking what features people use on ride-booking apps, like tracking the driver’s location in real time, scheduling a ride in advance, and sharing trip details with a friend or family member.

❌ Controlling the car with your phone like a remote control.

This foil option is too outlandish. Not only is it unbelievable, but it also risks making participants doubt the legitimacy of your study.

✅ Previewing your driver’s music playlist.

This foil sounds like a fun personalization feature that a ride-booking app could plausibly offer. It doesn’t exist, but it blends in with the real options.

Keep Foils Relevant

Foils should match the subject of the question. For instance, imagine your screener asks which streaming services people subscribe to.

❌ Happy Panda

This option is random and disconnected from the topic. Because it doesn’t resemble a typical streaming-service name, it risks breaking the realism of your screener and undermining its professionalism.

✅ Viewster Plus

Because this foil resembles typical streaming-service branding, it doesn’t stand out as nonsensical.

(However, avoid foil brand names that too closely resemble a real service name, as people may wonder whether your survey has a typo or whether they are misremembering the exact name. For example, “Microsoft Autopilot” is too close to “Microsoft Copilot.”)

Use Foils Sparingly

A little goes a long way; aim for just one or two foil answers in a single screener question. Overloading a screener with foils will create a very confusing experience even for genuine participants.

For example, let’s say you’re asking UX professionals which types of team collaboration activities they’ve participated in at work. Real response options might include design critiques or discovery workshops.

What if we include three different foil options, just to be safe?

❌ Innovation-thinking circle

❌ Interface-energy mapping

❌ Stakeholder dreamstorm

Adding three or four fake activities can make even well-meaning participants second-guess themselves.

✅ Interface-energy mapping

Only one foil is enough to catch inattentive or unqualified participants.

Combine Foils with Other Screening Techniques

While foils are a valuable tool for preventing misrecruits, they’re not 100% foolproof. Some misrecruits may still slip through by chance, for example by happening to skip the foil while guessing at the other options. Some savvy professional testers may look up product or brand names online to detect the foil.

Because of these gaps, foils should be only one tool in a broader toolkit for ensuring high-quality recruits.

Pair foils with other screener safeguards, such as:

- Open-ended questions that reveal genuine knowledge. Authentic users will give specific, credible responses, while misrecruits often write vague, generic, or copy-pasted answers.

- Consistency checks across screener questions. Ask the same question in slightly different ways. For example, if someone claims in one question that they never play video games but later say that they regularly purchase video games from Steam, that inconsistency is a red flag.

- Crosschecking participant responses during live sessions. Early in a usability session or interview, casually ask a question that validates screener answers. For example, if someone said they regularly use Audible, ask: “What audiobook are you currently listening to?” Qualified participants will usually name a specific title or author; misrecruits may hesitate or struggle to name any audiobook at all.
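The automatable parts of this toolkit, foil selection and consistency checks, can be combined into a single review step. The sketch below is a minimal Python illustration, assuming a hypothetical screener that stores a multi-select answer containing a foil (“Viewster Plus”), a gaming-frequency answer, and a list of stores where the respondent buys games; the `ScreenerAnswers` class and `red_flags` function are illustrative names, not a real tool’s API. Open-ended answers and live-session crosschecks still need human judgment, so the function only collects reasons for a researcher to review.

```python
from dataclasses import dataclass, field


@dataclass
class ScreenerAnswers:
    respondent_id: str
    # Options checked on a multi-select question that contains a foil.
    checked_options: set[str] = field(default_factory=set)
    # Hypothetical answer to "How often do you play video games?"
    plays_video_games: str = "never"
    # Hypothetical answer to "Where do you buy video games?" (multi-select)
    buys_games_from: set[str] = field(default_factory=set)


# Hypothetical foil used in this screener.
FOILS = {"Viewster Plus"}


def red_flags(answers: ScreenerAnswers) -> list[str]:
    """Collect reasons to review a respondent before inviting them."""
    flags = []
    if answers.checked_options & FOILS:
        flags.append("selected a foil option")
    # Consistency check: claims to never play games but buys them on Steam.
    if answers.plays_video_games == "never" and "Steam" in answers.buys_games_from:
        flags.append("says they never play video games but buys games on Steam")
    return flags


applicant = ScreenerAnswers("r3", {"Netflix", "Viewster Plus"}, "never", {"Steam"})
print(red_flags(applicant))
```

Returning a list of reasons rather than a single pass/fail value keeps the final decision with the researcher, who can weigh a single flag differently from several.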

Conclusion

Foils are a low-effort, high-impact way to protect your study from misrecruits, but they work best when used thoughtfully. A good foil should be plausible, relevant, and used sparingly.

And while foils are powerful, they shouldn’t be your only line of defense; combine them with other screening best practices for the strongest results. This approach helps you catch inattentive or dishonest participants before they reach your study, leading to more reliable data, smarter use of your research budget, and greater confidence in your UX insights.