Every time you pop an idle thought into ChatGPT or another AI interface, it triggers a far-flung journey. Your query—“What is this rash?” perhaps—races to a hulking data center in a place like Abilene, Texas, where it’s routed to the right stack of servers.

The traffic directors of all this activity are a series of high-bandwidth network switches—devices that coordinate data between servers—made by companies like Broadcom. The AI wave has reshaped Broadcom’s semiconductor business; its switches are now designed to support the high-capacity needs of massive AI data centers, and its custom AI accelerator line is a fast-growing source of revenue.

Patrick Kulp

“Over the last three years, things have changed pretty dramatically with AI,” Peter Del Vecchio, product line manager for Broadcom’s Tomahawk family of data center switches, told us. “For example, we announced [Tomahawk 5] in August of 2022, and the main market at that time was just general data center networking. ChatGPT was announced three months later, and pretty much everything changed.”

Fast-forward three years: Broadcom has inked a deal with OpenAI to jointly build 10 gigawatts’ worth of AI accelerators over the coming years, tapping both Broadcom’s custom AI accelerators and its networking infrastructure. It’s one of a flurry of infrastructure partnerships that OpenAI has forged over the past several months.

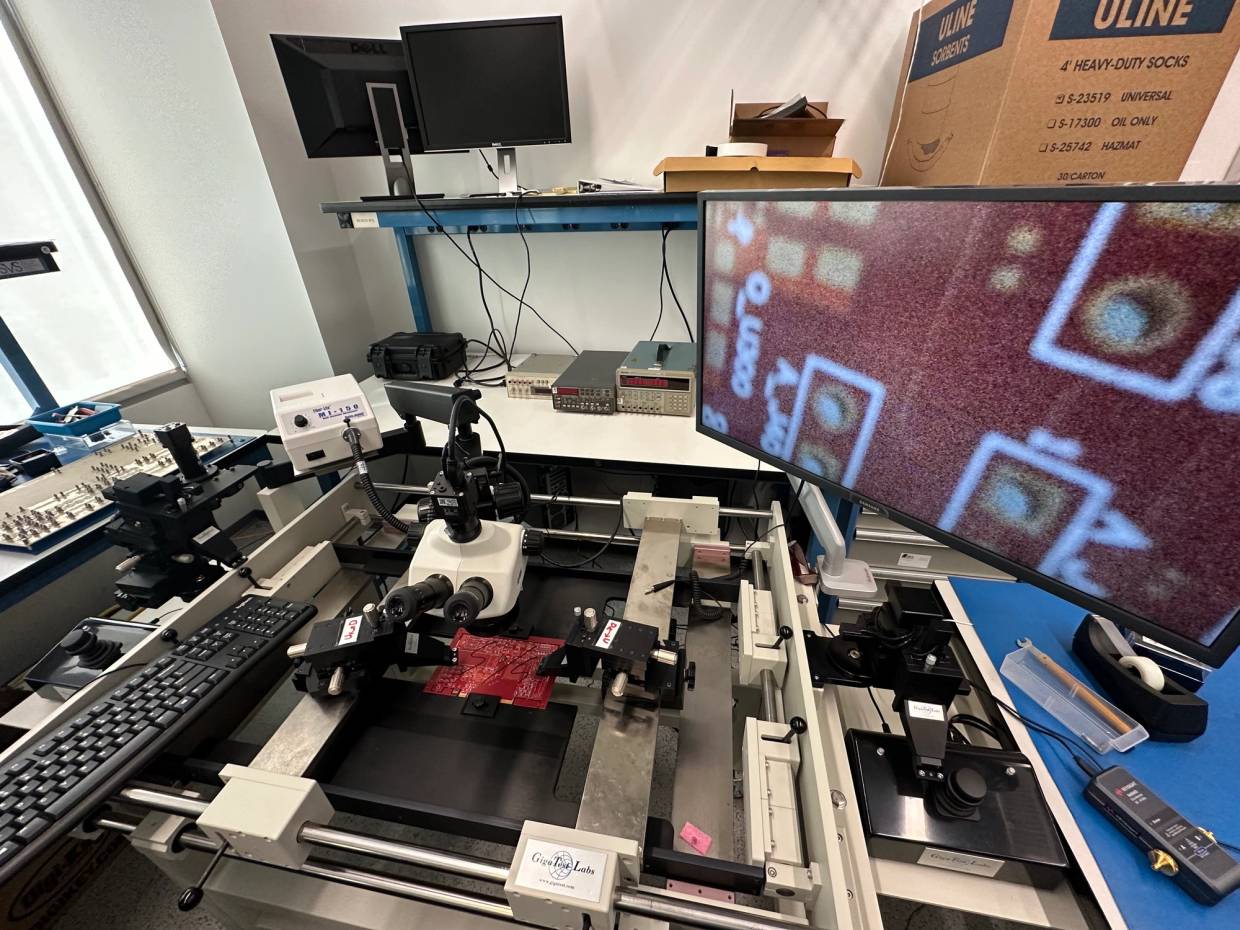

On the heels of this new deal, Tech Brew took a behind-the-scenes tour of Broadcom’s network switch R&D labs, where engineers carry out the multiyear process of building and testing these devices.

On the ground

The labs are situated in the ground-floor wing of a nondescript office building in Broadcom’s San Jose, California, campus. The rooms are crowded with workbenches and scattered with specialized devices. Thick bundles of wires and cooling pipes thread through ceiling vents to drape down to each workstation.

“This is basically our factory, where we’re building these things and testing them and characterizing them, all in that room,” David Hollis, principal mechanical and thermal engineer at Broadcom, said. “We’ll have technicians, engineers, program managers all coalesce around this hub and monitor where we’re at with all the build…Essentially, it’s a one-stop shop for all these systems to build, assemble, debug, and deploy.”


Around 1,400 employees work on Broadcom’s switch team, about half of them focused on the software side, according to Del Vecchio.

Switch at birth

Switches take around three years to develop from initial design to release, so engineers at the beginning of the process don’t necessarily know what kinds of demands the AI world will eventually place on them, Del Vecchio said.

“We try to build quite a bit of flexibility in there, and we can make some tweaks along the way,” he said. “[But] the basic architecture is going to be pretty much locked in a year and a half, two years before we actually sample to customers.”

In one lab, a team uses machines that rapidly heat or cool chips through attached hose-like appendages. Some of Broadcom’s chips advertise extreme durability; they’re designed to withstand the subzero chill or scorching heat of somewhere like a remote cell tower. That room is where engineers make sure those claims hold up.


“These devices can reject up to about 1,500 watts of power over a very small area,” Hollis said. “This system is super-cooling a non-conductive fluid…You don’t want to get this fluid on your hand.”


The cool factor

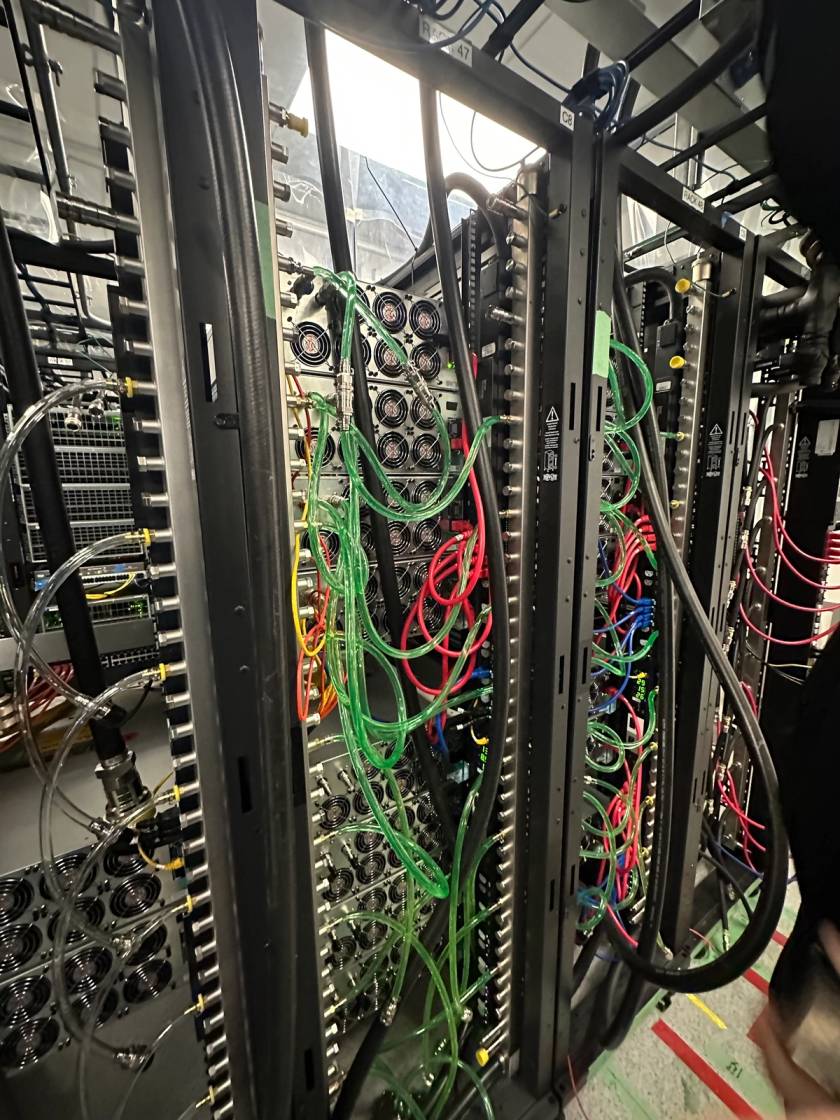

Temperature is a major concern across the whole lab, especially right now. Broadcom is in the middle of an upgrade to a liquid cooling system that will circulate water and coolant through overhead hoses in a closed loop. The space is a bit messy as a result; engineers keep telling us that it’s usually much tidier.

“We’re still going through some of the transition,” Greg Barsky, who manages hardware R&D for Broadcom’s Core Switching Group, shouts over the roar of hundreds of fans.

We’re standing amid columns of stacked servers festooned with Ethernet cables and translucent hoses at the ready for that transition. For now, ear protection is required to enter this small data center, whose jet-engine din is loosely contained by a border of plastic flaps. The data center powers development and quality assurance for the software offered with the switches.


The switch to liquid cooling will ultimately make the lab more power-efficient than the current fans. “Liquid is always going to be better to move heat, and it’s going to enable, eventually, smaller data centers and less power consumption because, well, fans [use] a lot of power,” Hollis said.

Data centers are increasingly making this switch, as air cooling can no longer handle the heat generated by the hardware running higher-powered AI workloads.

Massive scale

Much of what has changed about Broadcom’s switch business over the last few years is a result of the unprecedented scale of new AI data centers, Del Vecchio said. The constant increase in compute needs creates new networking challenges when models are sprawled across different parts of a data center or even multiple distant buildings.

“These models are so large and there’s so much training that goes on with them that [they’re] having problems finding power from the power companies, so what they’ll do is say, ‘OK, I’ll get this data center over here, and 60 miles away, I’ll put in another data center,’” Del Vecchio said. “Whereas before, it was that you [would] have a model that would run on one GPU…Now, people are saying, ‘OK, now I’ve got to scale up or scale out to maybe on the order of 32,000 to 64,000 GPUs within a building. And now I need to connect multiple buildings together in order to be able to do this training.’ The scale of these things, the amount of power is just insane.”

Keep your eyes peeled for part two of this feature in the coming days for an in-depth discussion of the big bets Broadcom is making with its custom AI chip business.