Intro

In my previous post I explained how to compile and link Rust modules for the x86 target, showing how to print a simple message from Rust to the xv6 console.

In this guide I expand on that framework and implement interrupt-driven networking in the xv6 kernel: a single BSD-style TCP socket, with the network stack written entirely in Rust on top of a small C driver for the Intel e1000 network card.

Unlike last time, this project is built on the public xv6 source rather than my school's private xv6 fork, so I can share the full repo.

Finally, I demonstrate it by telling ChatGPT 5.1 which syscalls it has access to and having it write a userspace HTTP server.

e1000 xv6 Driver layer

There are a lot of great guides explaining how the e1000 works and how to interface with it in xv6.

On a very basic level:

- You first initialize the card by writing a series of values to its PCI configuration space and its memory-mapped registers.
- On the internet you'll find very extensive docs explaining exactly what everything does, but ChatGPT does a good job of sparing you from reading through every register definition.
- When the e1000 NIC receives a packet, it writes it into the next buffer of a circular descriptor ring (the RX ring) and raises an interrupt.
- When you want to transmit a packet, you place it in the next slot of a separate ring (the TX ring) and advance the ring's tail register so the card knows it is there.

The kernel therefore has to map the card's registers into its address space (MMIO), allocate both rings in memory, and give the card their physical addresses.

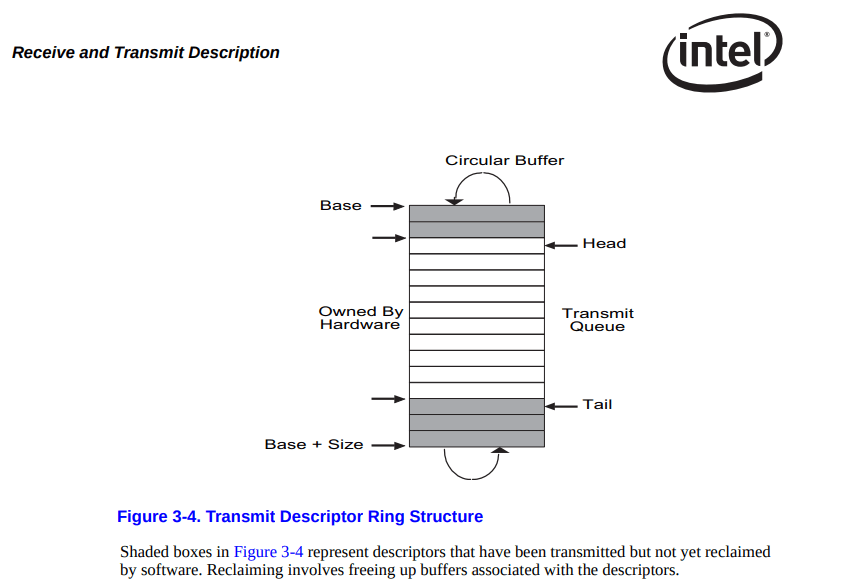

From the docs linked above
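To make the head/tail idea concrete, here is a small, hardware-free model of a TX-style descriptor ring in Rust. It is a simplified sketch, not the e1000's register interface: the "NIC" advances `head` as it finishes descriptors, the driver advances `tail` as it queues new ones, the slots in [head, tail) belong to the card, and one slot is always left unused so that `head == tail` can only mean "empty". `RingModel` and its methods are hypothetical names.

```rust
/// Hardware-free model of a TX-style descriptor ring (illustrative only).
/// Slots in [head, tail) are owned by the NIC; the driver owns the rest.
struct RingModel<const N: usize> {
    head: usize, // advanced by the "NIC" as it completes descriptors
    tail: usize, // advanced by the driver as it queues descriptors
}

impl<const N: usize> RingModel<N> {
    const fn new() -> Self {
        Self { head: 0, tail: 0 }
    }

    /// Number of descriptors currently handed to the NIC.
    fn in_flight(&self) -> usize {
        (self.tail + N - self.head) % N
    }

    /// Full when advancing tail would make it equal head (one slot stays free).
    fn is_full(&self) -> bool {
        (self.tail + 1) % N == self.head
    }

    /// Driver side: queue one descriptor. Returns false if the ring is full.
    fn push(&mut self) -> bool {
        if self.is_full() {
            return false;
        }
        self.tail = (self.tail + 1) % N;
        true
    }

    /// NIC side: complete one descriptor. Returns false if nothing is pending.
    fn complete(&mut self) -> bool {
        if self.head == self.tail {
            return false;
        }
        self.head = (self.head + 1) % N;
        true
    }
}
```

The driver in this post doesn't track `head` itself: `e1000_tx` checks each descriptor's DD ("descriptor done") status bit instead, which answers the same "is this slot free?" question without reading the head register.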

I implemented this with four main functions:

e1000_init(void)

- Reads the card's PCI configuration, maps its registers, and writes the values that put the card in the right mode.

e1000_tx(data, len)

- Given an Ethernet frame and its length, copies it into the next free slot of the TX ring and hands it to the card.

e1000_rx_poll(buf, len)

- Checks the RX ring; if a packet has arrived, copies it into buf.

e1000_intr(void)

- The interrupt handler: when an RX interrupt arrives, it passes control to the Rust network stack.
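On the Rust side, these C functions are called through FFI and their integer return codes have to be interpreted. As a minimal sketch (the names `RxPollFn`, `rx_poll_safe` and `fake_rx_poll` are hypothetical, not from the repo), `e1000_rx_poll`'s convention of a negative value for "no packet", 0 for "dropped fragment" and a positive frame length can be folded into an `Option`. `fake_rx_poll` only stands in for the C driver so the example runs on a host.

```rust
use core::ffi::c_int;

/// Signature of the C driver's `int e1000_rx_poll(uchar *buf, int maxlen)`.
type RxPollFn = unsafe extern "C" fn(*mut u8, c_int) -> c_int;

/// Calls an e1000_rx_poll-style function and returns the frame length,
/// or None when no complete frame was copied (return value <= 0).
fn rx_poll_safe(poll: RxPollFn, buf: &mut [u8]) -> Option<usize> {
    // Refuse buffers whose length doesn't fit in a C int.
    let max = c_int::try_from(buf.len()).ok()?;
    // SAFETY: `buf` is valid for `max` bytes and the callee writes at most `max`.
    let n = unsafe { poll(buf.as_mut_ptr(), max) };
    if n > 0 {
        Some(n as usize)
    } else {
        None
    }
}

/// Host-side stand-in for the C driver: always "receives" a 3-byte frame.
unsafe extern "C" fn fake_rx_poll(buf: *mut u8, maxlen: c_int) -> c_int {
    let frame = [0xde_u8, 0xad, 0xbe];
    let n = core::cmp::min(frame.len(), maxlen.max(0) as usize);
    // SAFETY: the caller guarantees `buf` is valid for `maxlen` bytes.
    unsafe { core::ptr::copy_nonoverlapping(frame.as_ptr(), buf, n) };
    n as c_int
}
```

In the kernel, `poll` would simply be the `extern "C"` declaration of `e1000_rx_poll`.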

Init e1000

```c
void
e1000_init(void)
{
  ioapicenable(IRQ_E1000, 0);

  // Read BAR0 of the e1000 (bus 0, slot 3) to find its MMIO region
  uint bus = 0;
  uint slot = 3;
  uint bar0 = pci_config_read32(bus, slot, 0, 0x10);
  uint mmio_pa = bar0 & ~0xF;          // low 4 bits of a memory BAR are flags
  uint pa_page = mmio_pa & ~(PGSIZE - 1);
  void *va_page = (void*)P2V(pa_page);
  uint mmio_size = 0x4000;             // 4 pages; all registers used below are < 0x4000
  int perm = PTE_W | PTE_P;

  if(mappages(kpgdir, va_page, mmio_size, pa_page, perm) < 0){
    cprintf("e1000: mappages failed for mmio_pa=0x%x\n", mmio_pa);
    return;
  }

  // Reload CR3 so the CPU picks up the new mapping
  lcr3(V2P(kpgdir));

  e1000_regs = (volatile uint *)P2V(mmio_pa);
  cprintf("e1000: BAR0=0x%x mmio_pa=0x%x regs=%p\n", bar0, mmio_pa, e1000_regs);

  uint status = e1000_read_reg(E1000_STATUS);
  cprintf("e1000: status=0x%x\n", status);

  // Enable bus mastering + memory space on the PCI device
  uint cmd = pci_config_read32(bus, slot, 0, 0x04);
  ushort cmd_lo = cmd & 0xFFFF;
  // Bit 1 = Memory Space Enable, Bit 2 = Bus Master Enable
  cmd_lo |= 0x0002; // MSE
  cmd_lo |= 0x0004; // BME
  cmd = (cmd & 0xFFFF0000) | cmd_lo;
  pci_config_write32(bus, slot, 0, 0x04, cmd);
  cprintf("e1000: PCI CMD=0x%x\n", cmd_lo);

  // Init TX ring: every descriptor starts with DD set, i.e. free
  int i;
  for(i = 0; i < TX_RING_SIZE; i++){
    tx_ring[i].addr_lo = V2P(tx_bufs[i]);
    tx_ring[i].addr_hi = 0;
    tx_ring[i].length = 0;
    tx_ring[i].cso = 0;
    tx_ring[i].cmd = E1000_TXD_CMD_RS | E1000_TXD_CMD_IFCS;
    tx_ring[i].status = E1000_TXD_STAT_DD; // mark free
    tx_ring[i].css = 0;
    tx_ring[i].special = 0;
  }
  e1000_write_reg(E1000_TDBAL, V2P(tx_ring));
  e1000_write_reg(E1000_TDBAH, 0);
  e1000_write_reg(E1000_TDLEN, sizeof(tx_ring));
  e1000_write_reg(E1000_TDH, 0);
  e1000_write_reg(E1000_TDT, 0);
  tx_tail = 0;

  // Init RX ring: every descriptor is owned by the NIC
  for(i = 0; i < RX_RING_SIZE; i++){
    rx_ring[i].addr_lo = V2P(rx_bufs[i]);
    rx_ring[i].addr_hi = 0;
    rx_ring[i].length = 0;
    rx_ring[i].csum = 0;
    rx_ring[i].status = 0; // owned by NIC
    rx_ring[i].errors = 0;
    rx_ring[i].special = 0;
  }
  e1000_write_reg(E1000_RDBAL, V2P(rx_ring));
  e1000_write_reg(E1000_RDBAH, 0);
  e1000_write_reg(E1000_RDLEN, sizeof(rx_ring));
  e1000_write_reg(E1000_RDH, 0);
  e1000_write_reg(E1000_RDT, RX_RING_SIZE - 1);
  rx_next = 0;

  // Configure transmit control
  uint tctl = 0;
  tctl |= E1000_TCTL_EN;                   // enable TX
  tctl |= E1000_TCTL_PSP;                  // pad short packets
  tctl |= (0x10 << E1000_TCTL_CT_SHIFT);   // collision threshold = 16
  tctl |= (0x40 << E1000_TCTL_COLD_SHIFT); // collision distance = 64
  e1000_write_reg(E1000_TCTL, tctl);

  // Configure inter-packet gap
  uint tipg = 0;
  tipg |= E1000_TIPG_IPGT;
  tipg |= (E1000_TIPG_IPGR1 << E1000_TIPG_IPGR1_SHIFT);
  tipg |= (E1000_TIPG_IPGR2 << E1000_TIPG_IPGR2_SHIFT);
  e1000_write_reg(E1000_TIPG, tipg);

  // Configure receive control
  uint rctl = 0;
  rctl |= E1000_RCTL_EN;      // enable RX
  rctl |= E1000_RCTL_BAM;     // accept broadcast
  rctl |= E1000_RCTL_UPE;     // accept all unicast
  rctl |= E1000_RCTL_MPE;     // accept all multicast
  rctl |= E1000_RCTL_SZ_2048; // 2K buffers
  rctl |= E1000_RCTL_SECRC;   // strip CRC
  e1000_write_reg(E1000_RCTL, rctl);

  // Interrupts: mask all, clear anything pending, then unmask the causes we handle
  e1000_write_reg(E1000_IMC, 0xffffffff); // mask all
  (void)e1000_read_reg(E1000_ICR);        // clear pending
  e1000_write_reg(E1000_IMS,
                  E1000_IMS_RXT0 |        // RX timer / normal receive
                  E1000_IMS_RXO |         // RX overrun (optional)
                  E1000_IMS_RXDMT0 |      // RX desc low watermark
                  E1000_IMS_TXDW);        // TX descriptor write-back

  // debug: read back RCTL to confirm
  uint rctl_read = e1000_read_reg(E1000_RCTL);
  cprintf("e1000: RCTL=0x%x\n", rctl_read);

  (void)e1000_test_send; // reference it to silence the unused-function warning
  // e1000_test_send(); // optional

  cprintf("e1000: initialized\n");

  // Initialize Rust+smoltcp stack now that NIC is ready.
  rust_net_init();
}
```
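The PCI part of `e1000_init` is plain bit manipulation, which is easy to check in isolation. Here is a minimal Rust sketch of the same three steps (the function names are hypothetical; the bit positions follow the PCI specification: bits 1 and 2 of the command register enable memory space and bus mastering, and the low 4 bits of a memory BAR are flags rather than address bits). `PGSIZE` is assumed to be xv6's 4096.

```rust
const PGSIZE: u32 = 4096;
const PCI_CMD_MSE: u16 = 1 << 1; // Memory Space Enable
const PCI_CMD_BME: u16 = 1 << 2; // Bus Master Enable

/// Physical MMIO base from a memory BAR: clear the 4 flag bits.
fn bar_to_mmio_pa(bar0: u32) -> u32 {
    bar0 & !0xF
}

/// Round a physical address down to its page, like `mmio_pa & ~(PGSIZE - 1)`.
fn page_base(pa: u32) -> u32 {
    pa & !(PGSIZE - 1)
}

/// Takes the 32-bit dword at config offset 0x04 (command register in the low
/// half, status register in the high half), sets MSE and BME in the command
/// half, and passes the status half through unchanged.
fn enable_mmio_and_dma(cmd_dword: u32) -> u32 {
    let cmd_lo = (cmd_dword as u16) | PCI_CMD_MSE | PCI_CMD_BME;
    (cmd_dword & 0xFFFF_0000) | cmd_lo as u32
}
```

One subtlety shared by the C code and this sketch: the upper half is the PCI status register, whose error bits are write-1-to-clear, so writing back the value just read clears any error bits that happened to be set. That is harmless during initialization.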

Transmit frame

```c
int
e1000_tx(const void *data, int len)
{
  if(len <= 0 || len > PKT_BUF_SIZE)
    return -1;

  int tdt = e1000_read_reg(E1000_TDT);
  struct e1000_tx_desc *d = &tx_ring[tdt];
  cprintf("e1000_tx: tdt=%d status=0x%x\n", tdt, d->status);

  // DD clear means the NIC hasn't finished with this slot yet
  if((d->status & E1000_TXD_STAT_DD) == 0){
    cprintf("e1000_tx: ring full at %d\n", tdt);
    return -1;
  }

  memmove(tx_bufs[tdt], data, len);
  d->length = len;
  d->status = 0;
  d->cmd = E1000_TXD_CMD_RS | E1000_TXD_CMD_EOP | E1000_TXD_CMD_IFCS;

  // Advancing TDT hands the descriptor to the NIC
  tdt = (tdt + 1) % TX_RING_SIZE;
  e1000_write_reg(E1000_TDT, tdt);
  cprintf("e1000_tx: queued len=%d new TDT=%d\n", len, tdt);
  return 0;
}
```
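The core of `e1000_tx` is: check the DD bit of the descriptor at the tail, copy the frame, fill in the command, clear the status and advance the tail. Below is a hardware-free Rust sketch of that logic, with the descriptor reduced to the fields the function touches. The bit values (EOP = bit 0, IFCS = bit 1 and RS = bit 3 of the command byte; DD = bit 0 of the status byte) are taken from Intel's 8254x developer manual and assumed to match the driver's `E1000_TXD_*` macros; `PKT_BUF_SIZE = 2048`, `TxRing` and `nic_done` are hypothetical.

```rust
const TXD_CMD_EOP: u8 = 1 << 0; // end of packet
const TXD_CMD_IFCS: u8 = 1 << 1; // NIC appends the Ethernet FCS (CRC)
const TXD_CMD_RS: u8 = 1 << 3; // report status: NIC sets DD when done
const TXD_STAT_DD: u8 = 1 << 0; // descriptor done
const PKT_BUF_SIZE: usize = 2048; // assumed per-slot buffer size

/// The subset of a legacy TX descriptor that e1000_tx touches.
#[derive(Clone, Copy)]
struct TxDesc {
    length: u16,
    cmd: u8,
    status: u8,
}

/// Descriptors plus one buffer per slot; `tail` mirrors the TDT register.
struct TxRing<const N: usize> {
    desc: [TxDesc; N],
    bufs: [[u8; PKT_BUF_SIZE]; N],
    tail: usize,
}

impl<const N: usize> TxRing<N> {
    fn new() -> Self {
        // Like e1000_init: every slot starts with DD set, i.e. free.
        let free = TxDesc { length: 0, cmd: 0, status: TXD_STAT_DD };
        Self { desc: [free; N], bufs: [[0; PKT_BUF_SIZE]; N], tail: 0 }
    }

    /// Same steps as e1000_tx; returns false if the frame is invalid or the
    /// NIC still owns the slot at the tail (ring full).
    fn tx(&mut self, frame: &[u8]) -> bool {
        if frame.is_empty() || frame.len() > PKT_BUF_SIZE {
            return false;
        }
        let t = self.tail;
        if self.desc[t].status & TXD_STAT_DD == 0 {
            return false;
        }
        self.bufs[t][..frame.len()].copy_from_slice(frame);
        self.desc[t].length = frame.len() as u16;
        self.desc[t].cmd = TXD_CMD_RS | TXD_CMD_EOP | TXD_CMD_IFCS;
        self.desc[t].status = 0; // the NIC now owns this slot
        self.tail = (t + 1) % N; // the real driver writes this value to TDT
        true
    }

    /// Simulates the NIC writing DD back after it has sent slot `i`.
    fn nic_done(&mut self, i: usize) {
        self.desc[i].status |= TXD_STAT_DD;
    }
}
```

Because RS is set on every descriptor, the card reports completion by setting DD, which is exactly what the next call checks before reusing the slot.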

Read frame

```c
int
e1000_rx_poll(uchar *buf, int maxlen)
{
  struct e1000_rx_desc *d = &rx_ring[rx_next];

  // DD clear: the NIC hasn't written a packet into this slot yet
  if((d->status & E1000_RXD_STAT_DD) == 0)
    return -1;

  int n = 0;
  if((d->status & E1000_RXD_STAT_EOP) == 0){
    cprintf("e1000: RX fragment, dropping (len=%d status=0x%x)\n",
            d->length, d->status);
    // n stays 0 -> caller won't use this packet
    goto rearm;
  }

  n = d->length;
  if(n > maxlen)
    n = maxlen;
  memmove(buf, rx_bufs[rx_next], n);

rearm:
  // Give the slot back to the NIC and advance
  d->status = 0;
  d->errors = 0;
  rx_next = (rx_next + 1) % RX_RING_SIZE;
  int rdt = (rx_next + RX_RING_SIZE - 1) % RX_RING_SIZE;
  e1000_write_reg(E1000_RDT, rdt);
  return n;
}
```
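The index arithmetic at the end of `e1000_rx_poll` is easy to get wrong. The NIC only fills descriptors from RDH up to, but not including, RDT, so writing RDT = rx_next - 1 (the slot just consumed) returns the previous slot to the card and keeps one slot as a gap; that is also why `e1000_init` starts RDT at `RX_RING_SIZE - 1`. A hardware-free Rust sketch of the whole function follows. `RxRing`, `nic_fill` and the 2048-byte buffer size are hypothetical, and the status bits (DD = bit 0, EOP = bit 1) are from Intel's 8254x manual, assumed to match the driver's `E1000_RXD_STAT_*` macros.

```rust
const RXD_STAT_DD: u8 = 1 << 0; // descriptor done: NIC wrote a packet here
const RXD_STAT_EOP: u8 = 1 << 1; // end of packet: not a fragment
const BUF_SIZE: usize = 2048; // assumed, matching RCTL's 2 KiB buffers

#[derive(Clone, Copy, Default)]
struct RxDesc {
    length: u16,
    status: u8,
}

struct RxRing<const N: usize> {
    desc: [RxDesc; N],
    bufs: [[u8; BUF_SIZE]; N],
    next: usize, // rx_next in the C driver
    rdt: usize,  // last value written to the RDT register
}

impl<const N: usize> RxRing<N> {
    fn new() -> Self {
        // As in e1000_init: RDT starts at N - 1, so the NIC may fill N - 1 slots.
        Self { desc: [RxDesc::default(); N], bufs: [[0; BUF_SIZE]; N], next: 0, rdt: N - 1 }
    }

    /// Simulates the NIC DMA-ing `frame` into slot `i` and setting its status.
    fn nic_fill(&mut self, i: usize, frame: &[u8], eop: bool) {
        self.bufs[i][..frame.len()].copy_from_slice(frame);
        self.desc[i].length = frame.len() as u16;
        self.desc[i].status = RXD_STAT_DD | (if eop { RXD_STAT_EOP } else { 0 });
    }

    /// Mirrors e1000_rx_poll: -1 if nothing arrived, 0 for a dropped
    /// fragment, otherwise the number of bytes copied into `out`.
    fn poll(&mut self, out: &mut [u8]) -> i32 {
        let i = self.next;
        if self.desc[i].status & RXD_STAT_DD == 0 {
            return -1;
        }
        let mut n = 0;
        if self.desc[i].status & RXD_STAT_EOP != 0 {
            n = (self.desc[i].length as usize).min(out.len());
            out[..n].copy_from_slice(&self.bufs[i][..n]);
        }
        // Re-arm the slot, advance, and publish the new tail.
        self.desc[i].status = 0;
        self.next = (i + 1) % N;
        self.rdt = (self.next + N - 1) % N; // equals i, the slot just consumed
        n as i32
    }
}
```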

Handle Interrupt

```c
void
e1000_intr(void)
{
  uint icr = e1000_read_reg(E1000_ICR);
  if(icr == 0)
    return;

  // RX-related interrupt?
  if(icr & (E1000_ICR_RXT0 | E1000_ICR_RXDMT0 | E1000_ICR_RXO)){
    // Let Rust+smoltcp pull packets with e1000_rx_poll() and handle them.
    cprintf("handling packet in rust\n");
    rust_net_poll();
  }

  if(icr & E1000_ICR_TXDW){
    cprintf("e1000: TXDW\n");
  }

  (void)e1000_debug_dump;
}
```
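Reading ICR both reports and clears the pending interrupt causes, which is why `e1000_intr` reads it exactly once and then tests bits on the saved value. A small Rust sketch of that dispatch decision follows; the bit positions (TXDW = bit 0, RXDMT0 = bit 4, RXO = bit 6, RXT0 = bit 7) come from Intel's 8254x manual and are assumed to match the driver's `E1000_ICR_*` macros, and `IntrWork`/`classify_icr` are hypothetical names.

```rust
const ICR_TXDW: u32 = 1 << 0; // TX descriptor written back
const ICR_RXDMT0: u32 = 1 << 4; // free RX descriptors below threshold
const ICR_RXO: u32 = 1 << 6; // RX overrun
const ICR_RXT0: u32 = 1 << 7; // RX timer: packet(s) received

/// What e1000_intr does for one ICR value.
#[derive(Debug, PartialEq, Eq)]
struct IntrWork {
    poll_rx: bool, // call rust_net_poll()
    tx_done: bool, // only logged ("e1000: TXDW")
}

/// Returns None for a spurious interrupt (ICR == 0: nothing pending on the NIC).
fn classify_icr(icr: u32) -> Option<IntrWork> {
    if icr == 0 {
        return None;
    }
    Some(IntrWork {
        poll_rx: icr & (ICR_RXT0 | ICR_RXDMT0 | ICR_RXO) != 0,
        tx_done: icr & ICR_TXDW != 0,
    })
}
```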

OS Layer

“Manual” Networking

I started the project by implementing my own TCP/IP stack, but I soon realized it would mean writing hundreds of lines of code for every protocol I wanted to support (ICMP and HTTP, to name a few): reading each spec and building every packet by hand. This is an OS-focused project, so that is not where I want to spend my time right now.

```rust
/// Inside the interrupt handler
...
match ethertype {
    0x0806 => {
        // ARP
        unsafe { puts_const(b"rust_net_rx: ARP frame\n\0") };
        arp_rx(payload, src, dst);
    }
    0x0800 => {
        // IPv4
        unsafe { puts_const(b"rust_net_rx: IPv4 frame\n\0") };
        ipv4_rx(payload, src, dst);
    }
    _ => {
        // unsupported ethertype, drop silently for now
    }
...

/// Example of a ~120-line function just to handle basic ARP packets.
/// Most of the function is just manually building the correct buffers.
fn arp_rx(payload: &[u8], src_mac: &[u8], _dst_mac: &[u8]) {
    // We assume Ethernet/IPv4 ARP, which is 28 bytes minimum.
    if payload.len() < 28 || src_mac.len() < 6 {
        return;
    }

    let htype = u16::from_be_bytes([payload[0], payload[1]]);
    let ptype = u16::from_be_bytes([payload[2], payload[3]]);
    let hlen = payload[4];
    let plen = payload[5];
    let oper = u16::from_be_bytes([payload[6], payload[7]]);

    // Only handle Ethernet (1) + IPv4 (0x0800), MAC len 6, IP len 4
    if htype != 1 || ptype != 0x0800 || hlen != 6 || plen != 4 {
        return;
    }

    // Layout:
    // [ 8..14] sender hw
    // [14..18] sender IP
    // [18..24] target hw
    // [24..28] target IP
    let sha = &payload[8..14]; // sender MAC
    let spa = &payload[14..18]; // sender IP
    let tpa = &payload[24..28]; // target IP
    ...
```

Above is a snippet of what the code would have looked like had I implemented everything myself. Hopefully you can see why this is outside the scope of this project.
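For a sense of what the elided part involves, here is a hedged sketch (not the author's code) of the reply half of such an ARP handler: given a 28-byte Ethernet/IPv4 ARP request asking for our IP, build the 28-byte reply payload by putting our addresses in the sender fields, the requester's in the target fields, and setting the opcode to 2. The offsets follow the layout in the comments above; the Ethernet header around the payload would still have to be built separately. `build_arp_reply` is a hypothetical name.

```rust
const ARP_REQUEST: u16 = 1;
const ARP_REPLY: u16 = 2;

/// Builds an ARP reply payload for an Ethernet/IPv4 ARP request aimed at
/// `my_ip`. Returns None if the packet isn't such a request.
fn build_arp_reply(req: &[u8], my_mac: [u8; 6], my_ip: [u8; 4]) -> Option<[u8; 28]> {
    if req.len() < 28 {
        return None;
    }
    let htype = u16::from_be_bytes([req[0], req[1]]);
    let ptype = u16::from_be_bytes([req[2], req[3]]);
    let oper = u16::from_be_bytes([req[6], req[7]]);
    if htype != 1 || ptype != 0x0800 || req[4] != 6 || req[5] != 4 || oper != ARP_REQUEST {
        return None;
    }
    if req[24..28] != my_ip[..] {
        return None; // the request is about someone else's IP
    }
    let mut rep = [0u8; 28];
    rep[0..8].copy_from_slice(&req[0..8]); // same htype/ptype/hlen/plen
    rep[6..8].copy_from_slice(&ARP_REPLY.to_be_bytes()); // oper = reply
    rep[8..14].copy_from_slice(&my_mac); // sender hw = us
    rep[14..18].copy_from_slice(&my_ip); // sender IP = us
    rep[18..24].copy_from_slice(&req[8..14]); // target hw = requester
    rep[24..28].copy_from_slice(&req[14..18]); // target IP = requester
    Some(rep)
}
```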

The smoltcp crate

After some research I came across smoltcp, a “standalone, event-driven TCP/IP stack that is designed for bare-metal, real-time systems”. Very importantly, this crate compiles with no_std, making it an excellent choice for xv6 development.

The simplicity of this crate is what drew me to it. A Device only needs to implement receive, transmit and capabilities (plus the small RxToken/TxToken types that receive and transmit hand out). That is really only two methods, since capabilities just describes what the device supports. With those in place, we have a fully functional TCP/IP stack. For my xv6 e1000 driver, the implementation looks like this:

```rust
impl Device for Xv6Device {
    type RxToken<'a> = Xv6RxToken<'a> where Self: 'a;
    type TxToken<'a> = Xv6TxToken<'a> where Self: 'a;

    fn capabilities(&self) -> DeviceCapabilities {
        let mut caps = DeviceCapabilities::default();
        caps.medium = Medium::Ethernet;
        caps.max_transmission_unit = MAX_FRAME_SIZE as usize;
        caps
    }

    fn receive(
        &mut self,
        _timestamp: Instant,
    ) -> Option<(Self::RxToken<'_>, Self::TxToken<'_>)> {
        self.rx_len = 0;
        // ↓ C function
        let n = unsafe { e1000_rx_poll(self.rx_buf.as_mut_ptr(), MAX_FRAME_SIZE as c_int) };
        if n <= 0 {
            return None; // no frame, or a dropped fragment
        }
        unsafe { puts_const(b"rust: smoltcp receive() got frame\n\0"); }
        self.rx_len = n as usize;
        let rx = Xv6RxToken {
            buffer: &self.rx_buf[..self.rx_len],
        };
        let tx = Xv6TxToken {
            buffer: &mut self.tx_buf[..],
        };
        Some((rx, tx))
    }

    fn transmit(&mut self, _timestamp: Instant) -> Option<Self::TxToken<'_>> {
        unsafe { puts_const(b"rust: smoltcp transmit() called\n\0"); }
        Some(Xv6TxToken {
            buffer: &mut self.tx_buf[..],
        })
    }
}

struct Xv6Device {
    rx_buf: [u8; MAX_FRAME_SIZE],
    rx_len: usize,
    tx_buf: [u8; MAX_FRAME_SIZE],
}

impl Xv6Device {
    const fn new() -> Self {
        Self {
            rx_buf: [0; MAX_FRAME_SIZE],
            rx_len: 0,
            tx_buf: [0; MAX_FRAME_SIZE],
        }
    }
}

struct Xv6RxToken<'a> {
    buffer: &'a [u8],
}

struct Xv6TxToken<'a> {
    buffer: &'a mut [u8],
}

impl<'a> RxToken for Xv6RxToken<'a> {
    fn consume<R, F>(self, f: F) -> R
    where
        F: FnOnce(&[u8]) -> R,
    {
        // Hand the received frame to smoltcp
        f(self.buffer)
    }
}

impl<'a> TxToken for Xv6TxToken<'a> {
    fn consume<R, F>(self, len: usize, f: F) -> R
    where
        F: FnOnce(&mut [u8]) -> R,
    {
        // Let smoltcp write the frame into our buffer, then pass it to the C driver
        let slice = &mut self.buffer[..len];
        let result = f(slice);
        unsafe {
            puts_const(b"rust: smoltcp TxToken::consume, sending frame\n\0");
            // ↓ C function
            e1000_tx(slice.as_ptr(), len as c_int);
        }
        result
    }
}
```

With this done, we have a working TCP/IP stack that we can use from the xv6 kernel. However, user programs still have no way to access it.

OS Syscall Layer

To give user programs access to the network, I implemented 5 syscalls:

- `int net_listen(int port);` Listen on TCP port `port`.
- `int net_accept(void);` Returns 1 if a connection is established, 0 if not yet, -1 on error.
- `int net_recv(void *buf, int n);` Returns bytes read, 0 if no data, -1 on error.
- `int net_send(void *buf, int n);` Returns bytes sent, 0 if it would block, -1 on error.
- `int net_close(void);` Close the current connection.

C Side

On the C side, these functions simply parse their arguments and pass control to the Rust module:

```c
int
sys_net_listen(void)
{
  int port;
  if(argint(0, &port) < 0)
    return -1;
  if(port < 0 || port > 65535)
    return -1;
  return rust_net_listen(port);
}

// int net_accept(void);
// returns 1 if a connection is established, 0 if not yet, -1 on error
int
sys_net_accept(void)
{
  return rust_net_accept();
}

// int net_recv(void *buf, int len);
int
sys_net_recv(void)
{
  int len;
  char *buf;

  // Fetch len first so argptr checks the whole [buf, buf+len) range
  // against the process's memory, not just its first byte
  if(argint(1, &len) < 0)
    return -1;
  if(len < 0)
    return -1;
  if(argptr(0, &buf, len) < 0)
    return -1;
  return rust_net_recv(buf, len);
}

// int net_send(void *buf, int len);
int
sys_net_send(void)
{
  int len;
  char *buf;

  // Same ordering as sys_net_recv: validate the full buffer range
  if(argint(1, &len) < 0)
    return -1;
  if(len < 0)
    return -1;
  if(argptr(0, &buf, len) < 0)
    return -1;
  return rust_net_send(buf, len);
}

// int net_close(void);
int
sys_net_close(void)
{
  rust_net_close();
  return 0;
}
```
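A detail worth checking in handlers like these: xv6's `argptr(n, &p, size)` only verifies that [p, p + size) lies inside the calling process's memory (`myproc()->sz`). If `size` is 0, only the start address is checked, and a large `len` could still let the kernel read or write past the end of user memory; passing the length as `size` (which means fetching it with `argint` first) closes that gap. The check itself amounts to the following Rust sketch, where `user_range_ok` is a hypothetical name and `proc_sz` plays the role of `myproc()->sz`.

```rust
/// Same test as xv6's argptr: [addr, addr + size) must lie inside [0, proc_sz).
/// checked_add guards against the end address wrapping around.
fn user_range_ok(addr: u32, size: i32, proc_sz: u32) -> bool {
    if size < 0 || addr >= proc_sz {
        return false;
    }
    match addr.checked_add(size as u32) {
        Some(end) => end <= proc_sz,
        None => false,
    }
}
```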

Rust Side

The real logic lives in the Rust module, and thanks to smoltcp these syscall handlers are still very light:

```rust
#[no_mangle]
pub extern "C" fn rust_net_listen(port: i32) -> i32 {
    unsafe {
        let sockets = match SOCKET_SET.as_mut() {
            Some(s) => s,
            None => return -1,
        };
        let handle = match TCP_HANDLE {
            Some(h) => h,
            None => return -1,
        };
        let socket = sockets.get_mut::<tcp::Socket>(handle);
        match socket.listen(port as u16) {
            Ok(()) => 0,
            Err(_) => -1,
        }
    }
}

#[no_mangle]
pub extern "C" fn rust_net_accept() -> i32 {
    unsafe {
        let sockets = match SOCKET_SET.as_mut() {
            Some(s) => s,
            None => return -1,
        };
        let handle = match TCP_HANDLE {
            Some(h) => h,
            None => return -1,
        };
        let socket = sockets.get_mut::<tcp::Socket>(handle);
        use smoltcp::socket::tcp::State;
        match socket.state() {
            State::Established => 1,
            State::Listen | State::SynReceived | State::SynSent => 0,
            // Every other state (e.g. Closed, CloseWait) is also reported as "not yet"
            _ => 0,
        }
    }
}

#[no_mangle]
pub extern "C" fn rust_net_recv(buf: *mut u8, len: i32) -> i32 {
    if buf.is_null() || len <= 0 {
        return -1;
    }
    unsafe {
        let sockets = match SOCKET_SET.as_mut() {
            Some(s) => s,
            None => return -1,
        };
        let handle = match TCP_HANDLE {
            Some(h) => h,
            None => return -1,
        };
        let socket = sockets.get_mut::<tcp::Socket>(handle);
        if !socket.may_recv() {
            return 0;
        }
        let max_len = len as usize;
        let mut out_n: i32 = 0;
        let res = socket.recv(|data| {
            let n = core::cmp::min(data.len(), max_len);
            if n > 0 {
                core::ptr::copy_nonoverlapping(data.as_ptr(), buf, n);
            }
            out_n = n as i32;
            // tell smoltcp we consumed n bytes; no extra result is needed
            (n, ())
        });
        if res.is_err() {
            -1
        } else {
            out_n
        }
    }
}

#[no_mangle]
pub extern "C" fn rust_net_send(buf: *const u8, len: i32) -> i32 {
    if buf.is_null() || len <= 0 {
        return -1;
    }
    unsafe {
        let sockets = match SOCKET_SET.as_mut() {
            Some(s) => s,
            None => return -1,
        };
        let handle = match TCP_HANDLE {
            Some(h) => h,
            None => return -1,
        };
        let socket = sockets.get_mut::<tcp::Socket>(handle);
        // Only send if TCP state/window allows
        if !socket.may_send() {
            return 0; // would block / not ready
        }
        let slice = core::slice::from_raw_parts(buf, len as usize);
        match socket.send_slice(slice) {
            Ok(n) => n as i32, // actual bytes queued
            Err(_) => -1,
        }
    }
}

#[no_mangle]
pub extern "C" fn rust_net_close() {
    unsafe {
        let sockets = match SOCKET_SET.as_mut() {
            Some(s) => s,
            None => return,
        };
        let handle = match TCP_HANDLE {
            Some(h) => h,
            None => return,
        };
        let socket = sockets.get_mut::<tcp::Socket>(handle);
        socket.close();
    }
}
```
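All five handlers repeat the same prologue: unwrap the global socket set, unwrap the handle, then act on the socket. One way to factor that out is a small generic helper. This is a hypothetical refactor, not code from the repo; to keep it runnable without the crate, plain types stand in for smoltcp's `SocketSet` and `SocketHandle`.

```rust
/// Runs `f` on the socket set and handle if both exist, otherwise returns
/// `on_missing` (e.g. -1 before the network stack has been initialized).
fn with_socket<S, H, R>(
    set: Option<&mut S>,
    handle: Option<H>,
    on_missing: R,
    f: impl FnOnce(&mut S, H) -> R,
) -> R {
    match (set, handle) {
        (Some(s), Some(h)) => f(s, h),
        _ => on_missing,
    }
}
```

In the kernel, a handler body could then shrink to roughly `with_socket(SOCKET_SET.as_mut(), TCP_HANDLE, -1, |set, h| { ... })`, still inside the existing `unsafe` block, with `set.get_mut::<tcp::Socket>(h)` inside the closure.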

Testing & Conclusion

On my Linux host, I then created a tap device:

```sh
sudo modprobe tun
sudo ip tuntap add dev tap0 mode tap user "$USER"
sudo ip addr add 10.0.3.1/24 dev tap0
```

and put it on the 10.0.3.0/24 network with the address 10.0.3.1. On the xv6 side I hardcoded an IP and MAC address, since I don't have a DHCP server running on my host:

```rust
const MY_MAC_BYTES: [u8; 6] = [0x02, 0xaa, 0xbb, 0xcc, 0xdd, 0xee];
const MY_IP_V4: Ipv4Address = Ipv4Address::new(10, 0, 3, 2);
```
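Two properties make these hardcoded values work on a private tap network, and both are easy to check. A first octet of 0x02 sets the "locally administered" bit and leaves the multicast bit clear, so the MAC is a valid unicast address that can't clash with a vendor-assigned one; and 10.0.3.2 sits in the same /24 as the host's 10.0.3.1, so no router is involved. A small Rust sketch of both checks (the helper names are hypothetical):

```rust
/// Bit 1 of the first octet: locally administered (not vendor-assigned).
fn is_locally_administered(mac: [u8; 6]) -> bool {
    mac[0] & 0x02 != 0
}

/// Bit 0 of the first octet: group (multicast) address.
fn is_multicast(mac: [u8; 6]) -> bool {
    mac[0] & 0x01 != 0
}

/// True if `a` and `b` share the first `prefix_len` bits (e.g. 24 for a /24).
fn same_subnet(a: [u8; 4], b: [u8; 4], prefix_len: u32) -> bool {
    assert!(prefix_len <= 32, "IPv4 prefix length must be <= 32");
    let mask = if prefix_len == 0 { 0 } else { u32::MAX << (32 - prefix_len) };
    (u32::from_be_bytes(a) & mask) == (u32::from_be_bytes(b) & mask)
}
```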

Finally, I asked ChatGPT to write a simple HTTP server using the syscalls above:

```c
// server.c, generated by ChatGPT 5.1
#include "types.h"
#include "stat.h"
#include "user.h"

// Returns 1 if the request body starts with "close"
// (after skipping leading spaces and newlines), 0 otherwise
static int
body_is_close(char *buf, int n)
{
  if(n <= 0) return 0;

  // find "\r\n\r\n" manually
  int i;
  for(i = 0; i+3 < n; i++){
    if(buf[i] == '\r' &&
       buf[i+1] == '\n' &&
       buf[i+2] == '\r' &&
       buf[i+3] == '\n')
    {
      // body begins after the blank line
      int body_start = i + 4;

      // skip spaces/newlines
      while(body_start < n &&
            (buf[body_start] == ' ' ||
             buf[body_start] == '\n' ||
             buf[body_start] == '\r'))
        body_start++;

      // check for "close"
      if(body_start + 5 <= n &&
         buf[body_start] == 'c' &&
         buf[body_start+1] == 'l' &&
         buf[body_start+2] == 'o' &&
         buf[body_start+3] == 's' &&
         buf[body_start+4] == 'e')
        return 1;

      return 0; // found body, but not "close"
    }
  }
  return 0; // didn't find end of headers
}

int
main(void)
{
  for(;;){
    // Put the TCP socket back into LISTEN state each iteration.
    // On first iteration the socket is Closed; later it is Closed again
    // after net_close().
    if(net_listen(80) < 0){
      printf(2, "httpd: net_listen(80) failed\n");
      exit();
    }
    printf(1, "httpd: listening on port 80\n");

    // Wait until the TCP connection reaches Established
    while(net_accept() == 0)
      sleep(10);
    printf(1, "httpd: connection established\n");

    char buf[512];
    int n = net_recv(buf, sizeof(buf));
    if(n > 0)
      printf(1, "httpd: received %d bytes\n", n);

    if(body_is_close(buf, n)){
      // Shutdown reply
      static const char hdr[] =
        "HTTP/1.0 200 OK\r\n"
        "Content-Length: 14\r\n"
        "Content-Type: text/plain\r\n"
        "\r\n";
      static const char body[] = "shutting down\n"; // 14 bytes
      net_send((void*)hdr, sizeof(hdr) - 1);
      net_send((void*)body, sizeof(body) - 1);
      net_close();
      printf(1, "httpd: close command received, exiting.\n");
      exit();
    } else {
      // Normal reply
      static const char hdr[] =
        "HTTP/1.0 200 OK\r\n"
        "Content-Length: 13\r\n"
        "Content-Type: text/plain\r\n"
        "\r\n";
      static const char body[] = "hi from user\n"; // 13 bytes
      net_send((void*)hdr, sizeof(hdr) - 1);
      net_send((void*)body, sizeof(body) - 1);
      net_close();
    }
  }
}
```

This server returns a simple message for an ordinary request such as a GET, and shuts down when someone POSTs “close”.
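One limitation of this server is that it calls `net_recv` once, right after the connection is established, so it can see 0 bytes (the request hasn't arrived yet) or only part of the request. A more robust loop keeps reading until the blank line that ends the headers shows up. Here is a sketch in Rust, with the receive call passed in as a closure so it runs without xv6; `read_headers` and the `max_polls` budget are hypothetical, and in the C server the closure would correspond to `net_recv` plus a `sleep` when it returns 0. Note that the body (e.g. "close") may still arrive in a later segment.

```rust
/// Reads into `buf` until it contains "\r\n\r\n" (the end of the HTTP headers).
/// `recv` follows the net_recv convention: >0 bytes read, 0 no data yet,
/// <0 error. Gives up after `max_polls` calls. Returns the total bytes read.
fn read_headers(
    mut recv: impl FnMut(&mut [u8]) -> i32,
    buf: &mut [u8],
    max_polls: usize,
) -> Option<usize> {
    let mut len = 0;
    for _ in 0..max_polls {
        if len == buf.len() {
            return None; // buffer full before headers ended
        }
        let n = recv(&mut buf[len..]);
        if n < 0 {
            return None; // connection error
        }
        len += n as usize;
        if buf[..len].windows(4).any(|w| w == &b"\r\n\r\n"[..]) {
            return Some(len);
        }
        // n == 0: nothing yet; the C version would sleep() before retrying
    }
    None
}
```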

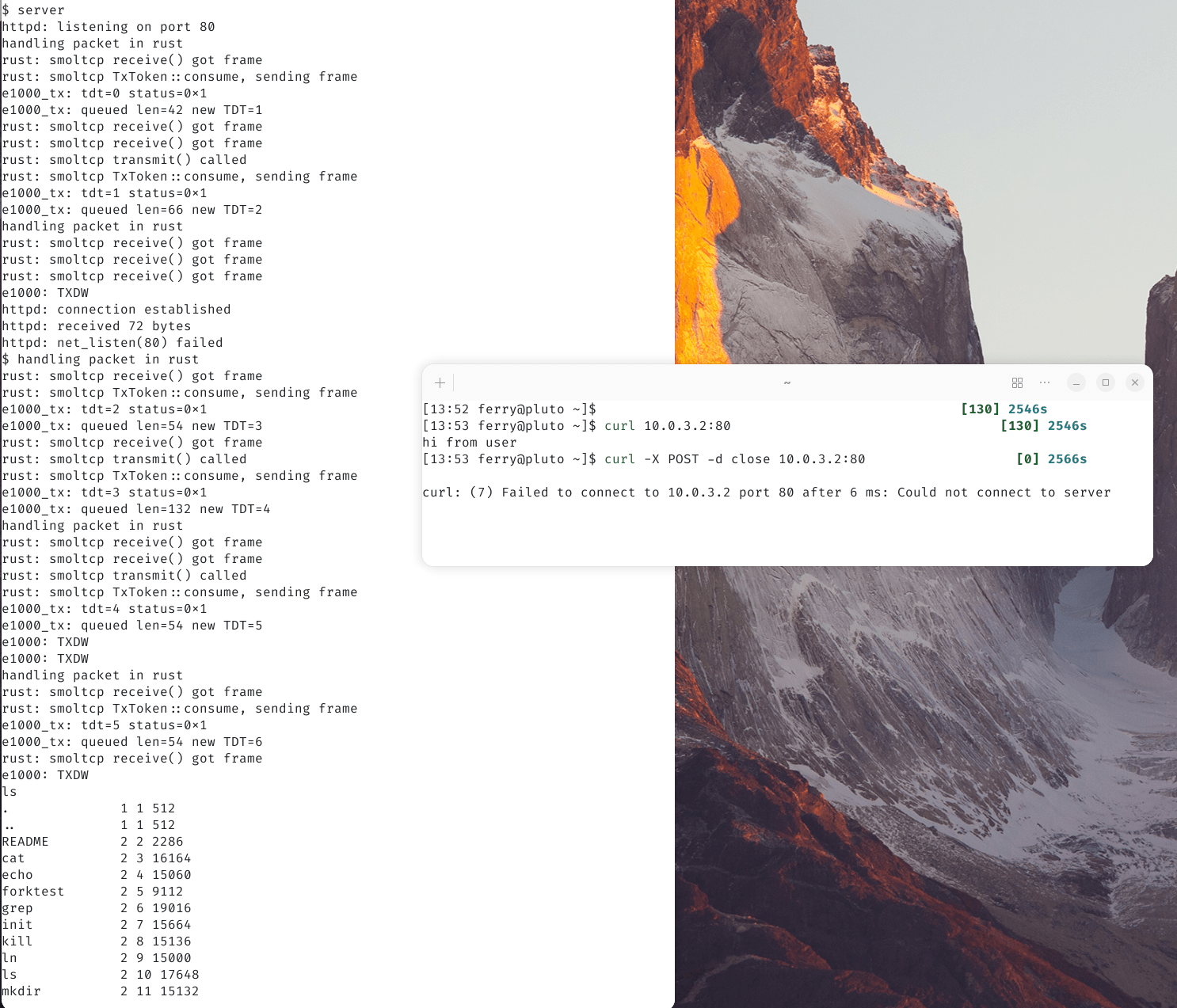

On the left: QEMU running xv6 with Rust networking; on the right: the Linux host connecting to the server. The final `ls` output shows that sending `close` in the POST payload shuts down the server.

Code & Patch

The code is accessible at github.com/Ferryistaken/xv6-public, and a full patch is pasted below.

Thanks & Acknowledgements

Special thanks to my CS3210: Design of Operating Systems professor Michael Specter, this OS guide, and the smoltcp dev team.